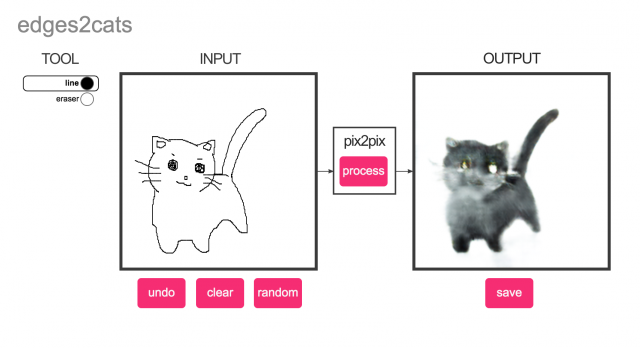

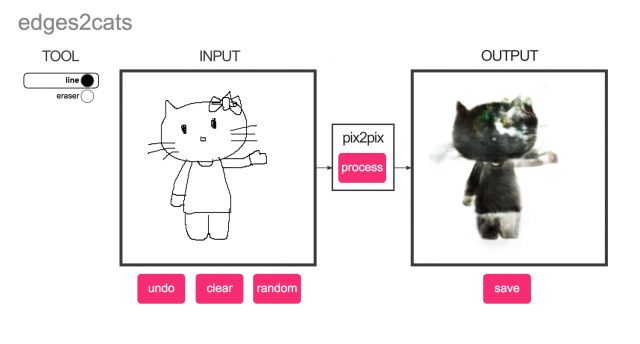

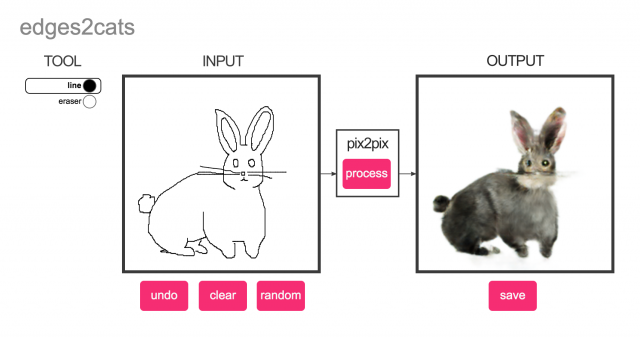

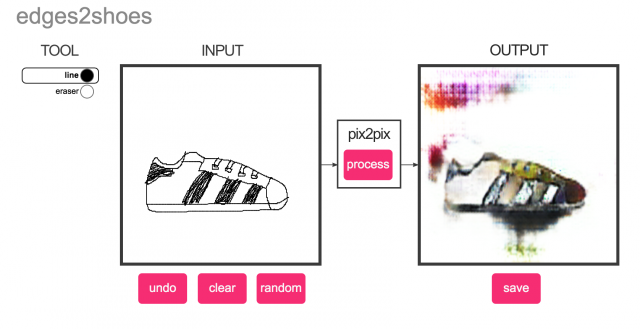

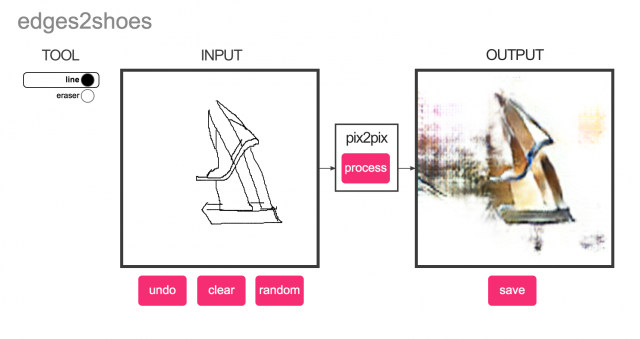

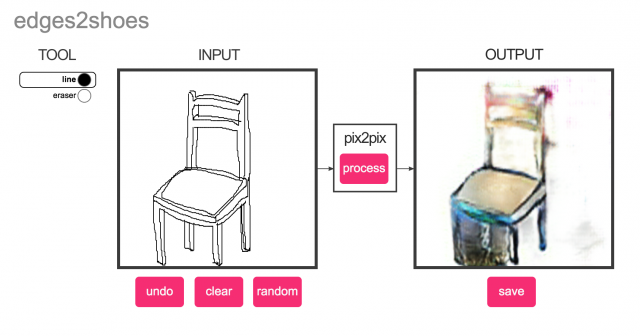

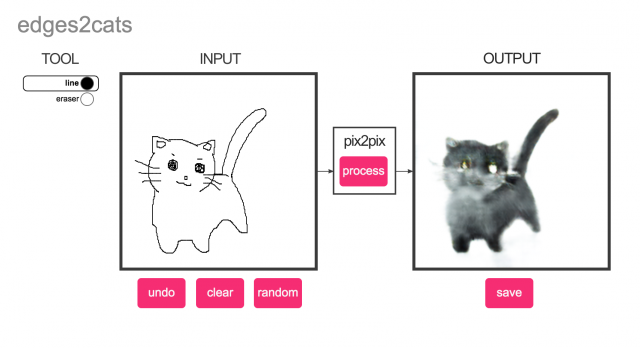

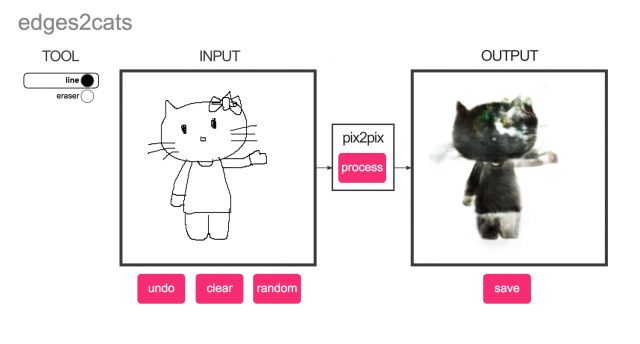

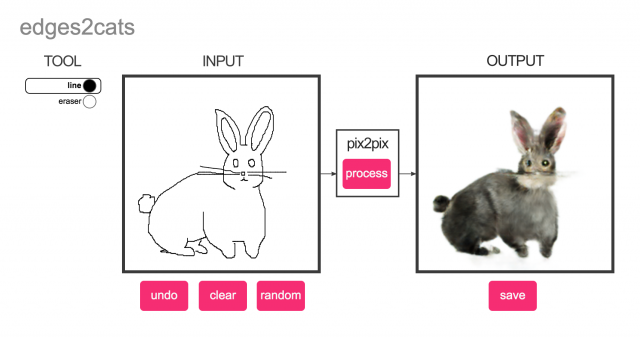

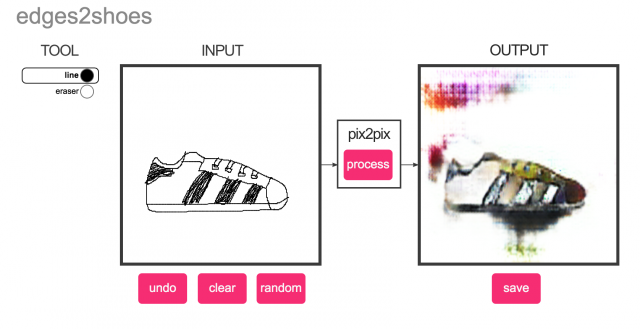

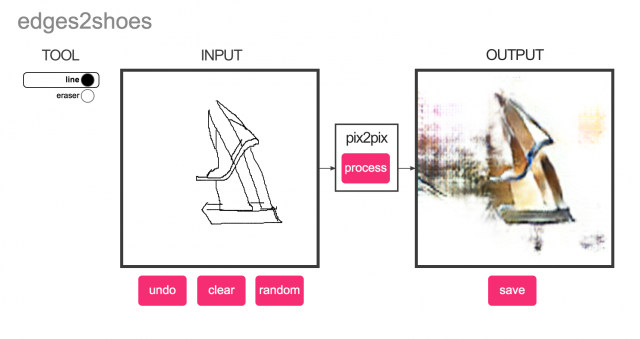

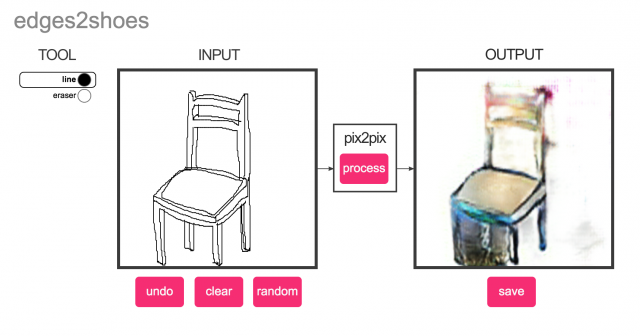

I really enjoyed exploring this tool! I tried drawing in three ways (something that fits my understanding of the object, a more stylized version, and an unrelated object) for edges2cats and edges2shoes and got some interesting results.

60-212: Interactivity and Computation for Creative Practice

CMU School of Art / IDeATe, Fall 2020 • Prof. Golan Levin

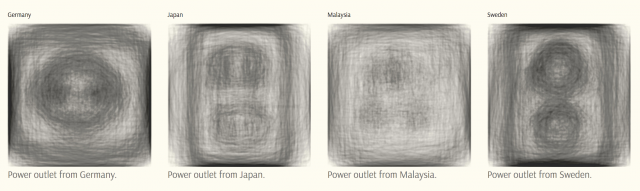

Forma Fluens collects over 100,000 doodle drawings from the game QuickDraw. The drawings represent participants’ direct thinking and offer a glimpse of society’s collective expression. Using this data collection, the artists explored whether data analysis can show how people see and remember things in relation to their local culture.

The three modes of Forma Fluens (DoodleMaps, Points in Movement, and Icono Lap) each present different insights into how people from each culture process their observations. DoodleMaps shows the doodle results organized on a t-SNE map, Points in Movement displays animations of how millions of drawings overlap in similar ways, and Icono Lap generates new icons from the overlap of these doodle drawings.

The part that draws my attention the most is how distinct the convergence and divergence can be for objects that we thought we had a common understanding of. Another highlight of the project is how the doodle results tell stories of different cultures, which may suggest that people in a similar cultural atmosphere observe and express things in similar ways.

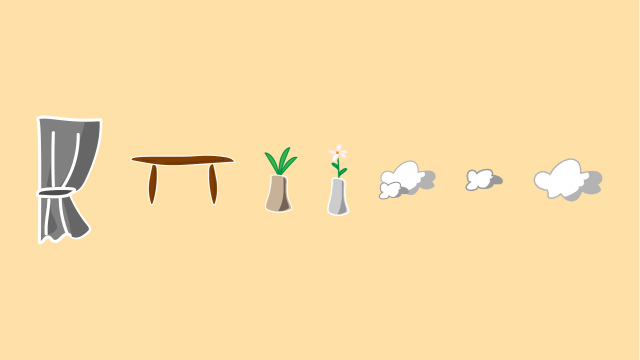

Ever since the pandemic started, I haven’t had much chance to go out and meet my friends, nor have I gone back to Shanghai (and possibly won’t until next year). So, I created “ROOM WITH A VIEW” – a sharing tool for people to share the views from their windows (or from any other places they would like to share) with their friends and families in virtual apartment cells.

Each user is assigned an apartment cell, with their local time and time zone displayed at the sides of the room. Users can share their live stream video with others through this pixelated window. The wall color of each room is mapped to the user’s local time zone. Besides the live stream, users can also move around the touch screen to animate the clouds ☁️
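As a rough illustration of mapping a time zone to a wall color, here is a minimal sketch. It is not the project's actual code: the function names, the hue mapping, and the saturation/lightness values are all assumptions made for this example.

```javascript
// Hypothetical helper: map a UTC offset (in hours) to an HSL hue.
// The range -12..+14 covers the UTC offsets currently in use.
function utcOffsetToHue(offsetHours) {
  const min = -12;
  const max = 14;
  const clamped = Math.min(max, Math.max(min, offsetHours));
  // Divide by one more than the span so the two extremes do not
  // wrap around to the same hue (0 and 360 are the same color).
  return Math.round(((clamped - min) / (max - min + 1)) * 360);
}

// Build a CSS color string for a room wall.
// Saturation and lightness are arbitrary choices for a pastel look.
function wallColor(offsetHours) {
  return `hsl(${utcOffsetToHue(offsetHours)}, 60%, 75%)`;
}

// Date#getTimezoneOffset returns minutes *behind* UTC, so negate it.
function localUtcOffsetHours(date = new Date()) {
  return -date.getTimezoneOffset() / 60;
}
```

In a sketch like this, each room would call `wallColor(localUtcOffsetHours())` once when the user joins, so friends in different time zones get visibly different walls.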

Code | Demo (right now only works for desktop webcams and mobile front cameras)

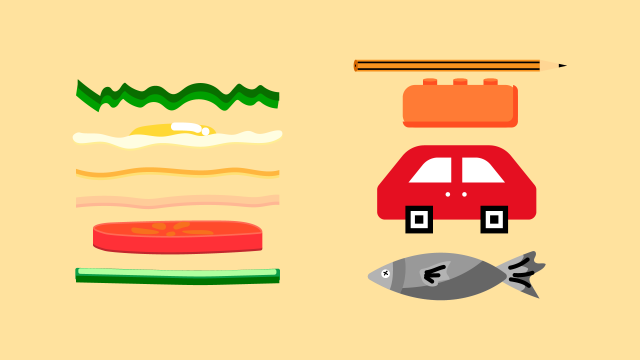

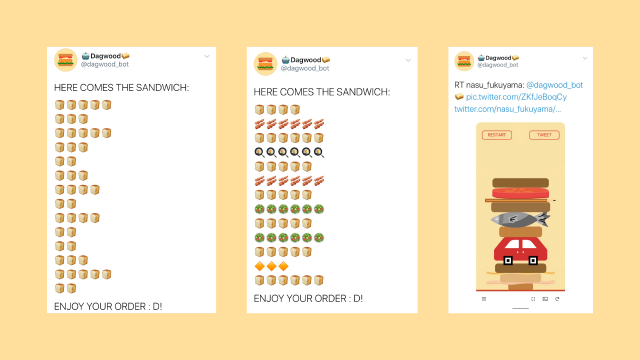

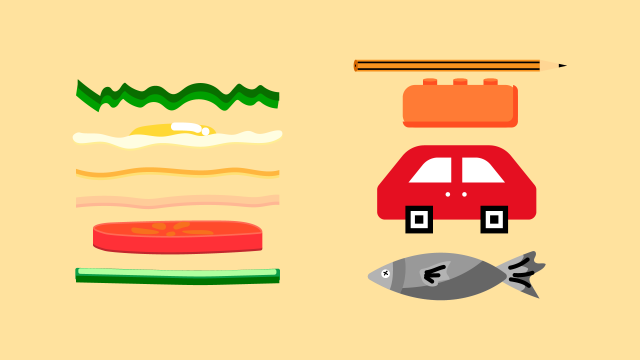

DAGWOOD is a sandwich generator that creates random Dagwood sandwiches with layers of food or non-edible stuff. Using the two Soli interaction methods, swipe and tap, users can add ingredients to the sandwich or swap out the last ingredient if they don’t want it.

After generating their sandwiches, users can click on the “TWEET” button to let the Dagwood bot send out an emoji version of the sandwich, or click “RESTART” to build a new sandwich.
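The add, swap, and emoji-export steps described above could be sketched roughly as follows. This is an illustration only, not the project's code: the `Sandwich` class, the ingredient list, and the one-emoji-per-line layout are assumptions made for this example.

```javascript
// Hypothetical ingredient list mixing edible and non-edible items.
const INGREDIENTS = ["🧀", "🥬", "🍅", "🥓", "🔋", "💻"];
const BREAD = "🍞";

class Sandwich {
  // `random` is injectable so the behaviour can be tested deterministically.
  constructor(random = Math.random) {
    this.random = random;
    this.layers = []; // bottom of the sandwich first
  }

  // Pick one ingredient at random from the list.
  pick() {
    const i = Math.floor(this.random() * INGREDIENTS.length);
    return INGREDIENTS[i];
  }

  // Add a random ingredient on top of the stack.
  add() {
    this.layers.push(this.pick());
  }

  // Swap out the most recent ingredient for a new random one.
  // Note: the new pick may happen to be the same ingredient.
  swapLast() {
    if (this.layers.length === 0) return;
    this.layers[this.layers.length - 1] = this.pick();
  }

  // Emoji version: bread, layers from top to bottom, bread, one per line.
  toEmoji() {
    return [BREAD, ...this.layers.slice().reverse(), BREAD].join("\n");
  }
}
```

In this sketch the gesture handlers would call `add()` and `swapLast()`, and the “TWEET” button would send the string returned by `toEmoji()` as the tweet text.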

I tried to keep the gameplay minimal and gradually added features to the project. One feature that I eventually gave up on was an image bot, since it would take quite a lot of time to figure out image hosting and tweeting with images, and the saveCanvas() function in p5 doesn’t work very well on the Pixel 4 phone. I switched to an emoji bot instead, and also tried adding a retweet-when-mentioned applet that I found on IFTTT (it worked twice and then somehow broke :’ ))).

Code | Demo – works for Pixel 4 and laptops (view with Pixel 4 screen display size but ingredient sizes are a bit off)

🤖 ENJOY MAKING YOUR DAGWOOD SANDWICHES : D! 🥪

DAGWOOD is a sandwich generator that creates random sandwiches using the two Soli interaction methods, swipe and tap. Users can tap to add ingredients to the sandwich and swipe to remove the last ingredient if they don’t want it.

Currently working on connecting p5 to Twitter for auto posting screenshots to the Twitter bot.

2 – Interfaz es un verbo (I interface, you interface…). La interfaz ocurre, es acción. (Translation: Interface is a verb. The interface happens, it is action.)

Interfaces and interactions exist together, and the moment an interaction happens, the interface inevitably changes (whether momentarily or cumulatively) the surrounding space and the people who are using it. The 3rd quote reminds me of a work about reinterpreting screen interaction gestures. The artist created a daily exercise based on the common interaction movements we make with our smartphones (e.g. swiping, tapping, pinching…). Existing product interfaces are mainly made for users to adapt to them; the idea of adapting and changing the interface suggests an active relationship with the interface itself. It also suggests that, instead of following the default, we might gain new insights when we alter the preset interface.

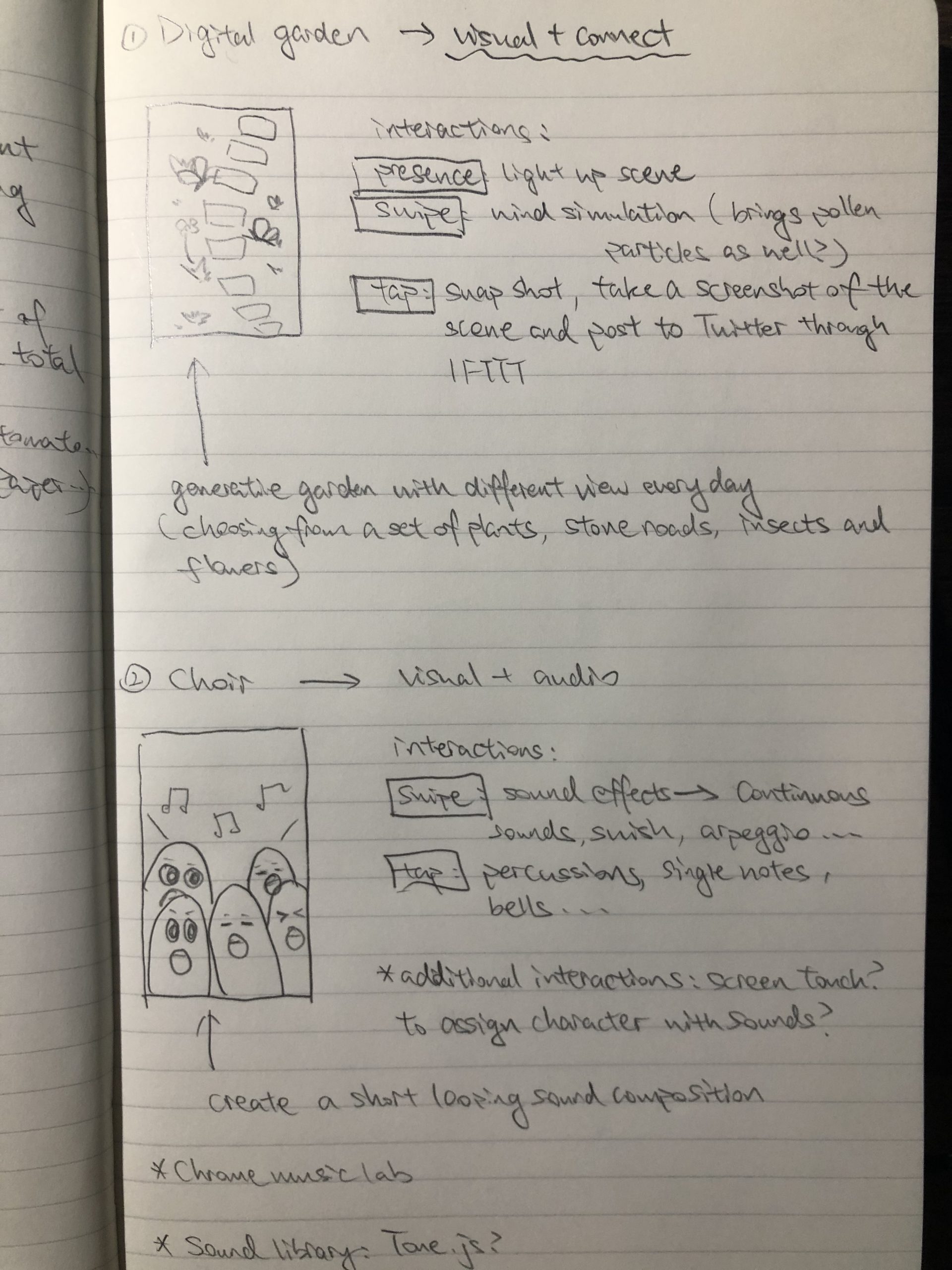

Digital garden – visual + connect

Concept: a generative garden scene with a different view every day, made up of a selection of plants, stone roads, insects and flowers.

Interactions: stand in front of the phone (presence) to light up the scene, swipe to add wind/pollen particles to the garden, tap to take a screenshot of the garden to send to a Twitter bot

Choir – visual + audio

Concept: create a short looping sound composition by assigning different types of sound samples to the animated characters.

Interactions: swipe to add continuous sounds/swish/chord progressions, tap to add percussion/single notes/bell sounds…

(Additional interaction: selecting the exact character for the sound and choosing bpm?)

Something to start with:

How to compose a loop that does not sound too random but personalized enough for users to play around with?

Sound: additional library (p5 sound, tone.js?)

*Inspiration: Chrome music lab

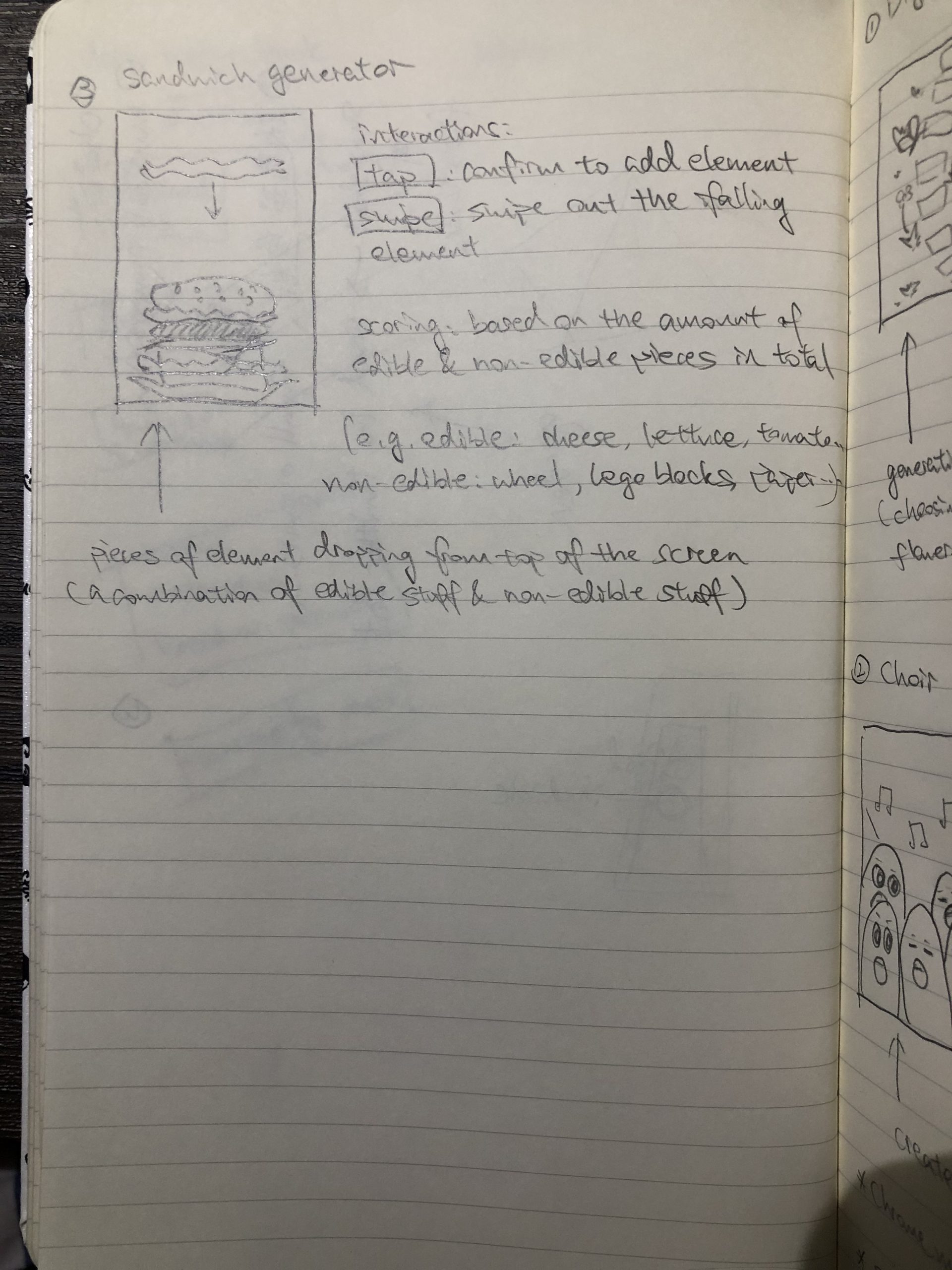

Sandwich generator – visual + connect

Concept: a tower-builder type game where, instead of a tower, you build a sandwich from ingredients falling from the top of the screen.

Interactions: Tap to catch the ingredients and swipe to switch out those you don’t want.

Ingredients would be an assortment of edible ingredients (e.g. cheese, lettuce, tomato…) and non-edible ingredients (e.g. wheels, lego blocks, batteries, laptops…)

You will get a score based on how edible your final sandwich is!

Something to start with:

Scoring: automatically scored or get another person to score?

Connect with IFTTT: send sandwich screenshots to twitter bot?

Visual: 2D, physics simulation? (With additional library)

This work created by artist and composer Dylan Sheridan is called Bellow. It is a digital installation that plays live music through an artificial irrigation system, automated fingers and electronic sensors. I was searching through powland.tv’s page and found this post, which eventually led me to this project. I found this work particularly interesting because of how the artist tries to synthesize natural phenomena with artificial sounds and physical components. While it is a manmade recreation of a cave-like environment, the installation does not attempt to copy what an actual cave would look and sound like other than showing a tiny piece of turf. Instead it focuses on the sound composition, how the sounds are being played, and how the interaction should happen. It somehow reminds me of a Rube Goldberg machine with its usage of different trigger components and complexity of the sound composing process.