Chalk Shark

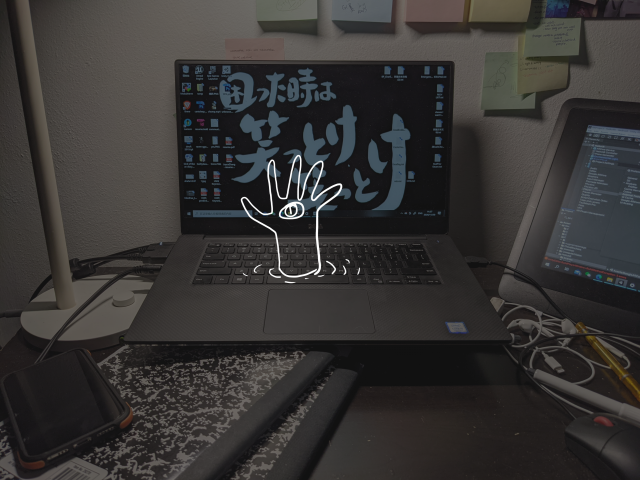

As a kid I drew on the sidewalk a lot and indulged myself in my fantasy world. So for this project, I wanted to make that kind of world come alive through AR.

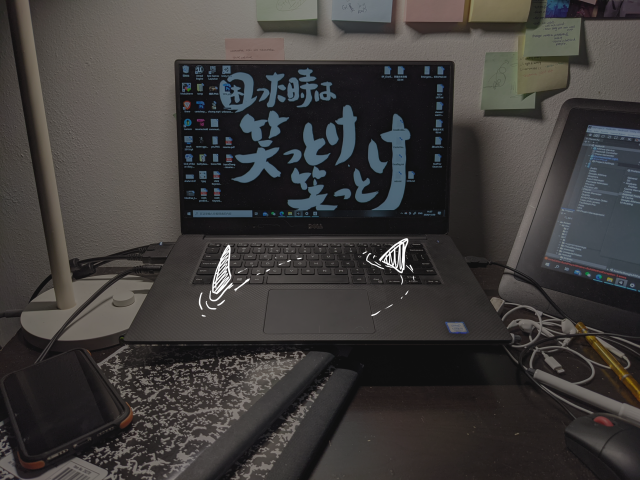

I wanted to make a 3D chalk shark that surfaces from a chalk drawing on the ground. However, I only tested in my apartment hallway and not outside, and it really didn’t look as good as I wanted it to 🙁

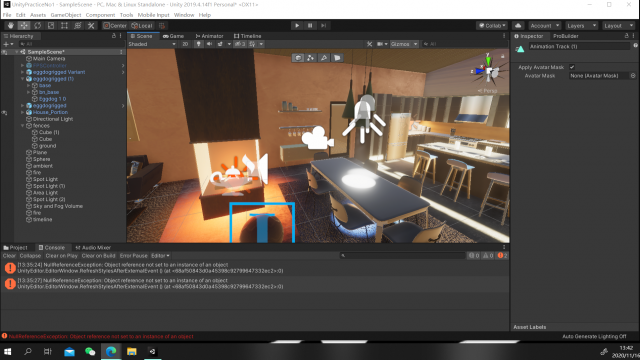

For the programming portion, I modified the code of the AR Controller so that the mesh is always placed sideways, turned 90 degrees relative to the camera.
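The idea behind that rotation can be sketched in a few lines of Python. This is only an illustration of the math, not the actual AR Controller code: the function name `sideways_yaw_degrees`, the flat (x, z) ground coordinates, and the convention that a yaw of 0 faces +z are all assumptions made for the example.

```python
import math

def sideways_yaw_degrees(anchor_xz, camera_xz, offset_degrees=90.0):
    """Yaw about the vertical axis that points the mesh at the camera,
    plus a fixed offset so the mesh ends up side-on to the viewer.

    Assumes yaw 0 faces +z and positive yaw turns toward +x.
    """
    dx = camera_xz[0] - anchor_xz[0]
    dz = camera_xz[1] - anchor_xz[1]
    # Heading from the placed mesh to the camera, ignoring height.
    facing = math.degrees(math.atan2(dx, dz))
    # Add the sideways offset and wrap into [0, 360).
    return (facing + offset_degrees) % 360.0

# Example: mesh placed at the origin, camera one unit away along +z.
yaw = sideways_yaw_degrees((0.0, 0.0), (0.0, 1.0))
```

Only the horizontal positions are used, so the mesh stays upright on the ground no matter how the phone is tilted.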

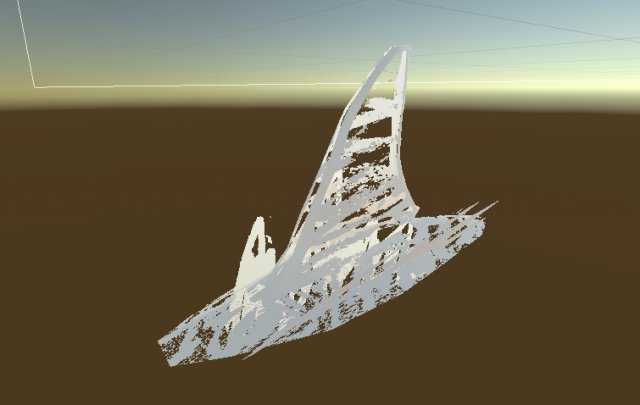

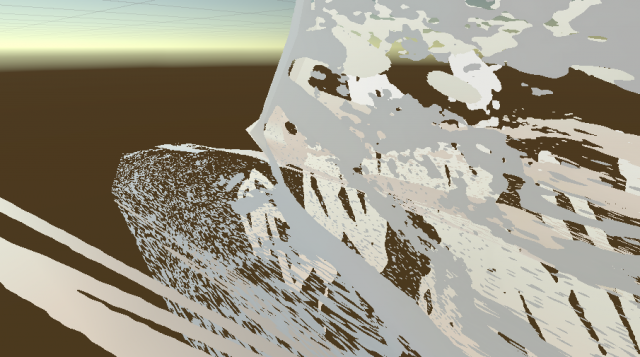

I made the 3D model and hand-painted the texture (in Substance Painter and Photoshop) to give it a hand-drawn quality. I planned to use particle systems and flipbook textures to animate the water, but I couldn’t get that to work, so I’m just animating the two pieces of mesh that represent the water splashes by bobbing them up and down.
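A bobbing motion like this is usually just a sine wave on the vertical position. Here is a minimal Python sketch of that idea. The function name `bob_offset` and the amplitude, period, and phase values are made up for illustration and are not taken from the project.

```python
import math

def bob_offset(t, amplitude=0.05, period=1.5, phase=0.0):
    """Vertical offset at time t (seconds) for a simple up-and-down bob.

    amplitude is the peak height, period is the seconds per full cycle,
    phase shifts the wave so two meshes do not move in lockstep.
    """
    return amplitude * math.sin(2.0 * math.pi * t / period + phase)

# Two splash pieces, half a cycle apart, sampled at t = 0.375 s.
t = 0.375
splash_a = bob_offset(t)
splash_b = bob_offset(t, phase=math.pi)
```

Giving the second splash a different phase keeps the two pieces from rising and falling together, which reads a little more like water.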

Hallway Test Vid:

More Documentation: