- In Brian Reverman’s Mask Around the World video, the narrator mentions the idea of masks used to celebrate the coming of seasons. The arrival of seasons is a concept so familiar to me that I had completely overlooked how special it is in some cultures. It’s also interesting to see masks attempt to portray something intangible and make it wearable.

- “Asians and African-Americans are a 100 times more likely to be misidentified than white men.” In his video, John Oliver mentions this extremely alarming fact about the failures of facial recognition technology. Not only that, but these systems also struggle to distinguish between men and women. When this technology is used in ways it wasn’t designed for, the public is surely in danger. It worries me that people may use it in serious situations while misunderstanding its accuracy, resulting in harm to innocent people.


thumbpin-facereadings

“Some judges use machine-generated risk scores to determine how long an individual is going to spend in prison.”

This quote from Joy Buolamwini’s Ted Talk is the most shocking thing I encountered in the readings. It’s terrifying that the length of people’s prison sentences is determined by their facial features, something they do not control. How is this even legal?

Face recognition is used extensively by law enforcement despite its inaccuracies.

This is a general idea I got from a couple of the readings. Why have law enforcement and the government used face recognition when its algorithms are known to be biased and inaccurate?

sticks-facereadings

- From John Oliver’s Facial Recognition video, I found Clearview.ai, and facial recognition technology in general, concerning and even frightening because of how it could be exploited and used outside of entertainment. The program is highly developed, having collected over 3 billion photos of people from the internet. However, although Clearview.ai is supposedly used solely by “law enforcement” agencies, the service is said to be used by businesses such as Kohl’s and Walmart, and has been secretly implemented in many other areas and countries. The number of ways in which facial recognition could be exploited is frightening, and it makes me question the functionality and legality of facial recognition technology.

- Why should we desire our faces to be legible for efficient automated processing by systems of their design? There is no doubt that the development of this kind of technology has been made possible by data from millions, even billions, of people’s faces, and the fact that these companies have access to our faces could result in new, oppressive technologies and new ways in which our faces could be exploited.

gregariosa-facereadings

From watching John Oliver’s video, I was shocked by Clearview.ai’s use of facial recognition. The company’s audacity in undermining basic human rights and weaponizing technology against civilians was horrifying. After reading another article about it, I found that Clearview.ai has only an unverified “75% accuracy” rate in detecting faces. Not only are they capable of scraping 3 billion photos against people’s will, but they also take no responsibility for potentially misidentifying people and further perpetuating racial profiling. I wonder, then, whether the federal government is even capable of regulating these technologies, as previous hearings with tech giants have shown that lawmakers have little to no understanding of how technology operates.

After reading Nabil Hassein’s response to the Algorithmic Justice League, I found it interesting that Hassein would rather see anti-racist technology efforts go into meddling with machine learning models than into closing the gap in identifying Black faces. I wonder whether facial detection should ever have been invented in the first place, since more effort now needs to go into combating the growing technology rather than helping develop it further.

junebug-SoliSandbox

Soli Sandbox: Helping Me Heal

Backstory:

My Soli project is based on the grief I have been experiencing over the loss of my roommate, who passed away this summer after battling leukemia. Her parents are driving down to Pittsburgh this weekend to pack up her belongings from our apartment, and my grief has slowly been coming back in waves as I think about losing one more connection with her. I previously made an intaglio print dedicated to her while she was completing her second round of chemo, and I view this project as a continuation of my work dedicated to her.

Concept:

This project is inspired by the “cheesy” haiku/poem posts found everywhere on the internet, in which the background is a calming scene and the foreground contains a poem. In my piece, the phone in some ways comforts the user by presenting a different poem about grief and loss every time the user taps it, while a melancholic song plays quietly in the background. The flowers in the corners are called spider lilies, a summer flower native throughout Asia. In Asian cultures (especially Japan), the red spider lily means “the flower of the heavens” and is associated with final goodbyes and funerals.

Self-Critique:

This project’s execution was way too simple. I completely understand and totally agree with that. But in some respects, I think the simplicity suits the concept I was going for: the content is very heavy, and I wanted the user to focus on dealing with their grief and loss while reading the poems, without any distracting background visuals.

Inspiration – the “cheesy” poem posts

Project gif

pinkkk-facereadings

"expression alone is sufficient to create marked changes in the autonomic nervous system." I'm most intrigued by this idea that a simple movement of our facial muscles can actually influence our biological state. Yet overdoing an expression can leave trauma: if you smile too much for unnatural reasons, e.g., because your boss told you to, you can develop a condition called smile mask syndrome. I'm very interested in exploring ways to motivate people to make certain facial expressions that change their mental state.

In the talk by Joy Buolamwini, the theme that what you do not see can matter more than what you do see is echoed throughout. This is something I had never recognized before, and I am very glad that I am now aware of this issue.

lampsauce-facereadings

- At the end of the Kyle McDonald reading, there were images of people’s faces burned onto toast as examples of how facial recognition software can be fooled. I think it is interesting to explore these edge cases because they help put the power of facial recognition in perspective: sure, it’s cool that this code can tell me from you, but it can’t tell me from a picture of me burned onto a piece of bread.

- An idea that struck me from Joy Buolamwini’s Ted Talk is that as facial recognition gets more and more powerful, the edge cases mentioned before become a lot less funny; the implication of a piece of code not working for people with darker skin is that those people do not exist, which furthers inherent and existing biases in society.

junebug-facereadings

One thing I found super interesting from the readings was the conflict between wanting facial recognition programs to be more inclusive, by removing the algorithmic bias that exists within the technology, and the problems that better facial recognition will create in the hands of an oppressive and racist society. Maybe, given our current racially biased justice and prison system, the color bias in existing facial recognition programs is a good thing, since it provides a temporary advantage to those who will be targeted by this technology. I’m just disappointed that we live in a reality where we have to debate whether to fight for inclusivity in technology that could potentially endanger the lives of the very people we are fighting for.

The second thing I found interesting is the whole concept of how we (as a human civilization) are now moving into uncharted territory with our innovations and improvements in technology. Whether something is moral or not is a debate we have about many things related to new technology. In my Designing Human-Centered Software course, we discussed the morals and ethics of A/B testing. Generically, the idea of A/B testing in no way violates anyone’s privacy or morals; take, for example, Google’s test of 41 shades of blue for their new Google search button. However, depending on how a test is conducted or what it is testing, it could quickly become immoral (for example, Facebook’s “Mood Manipulation Study”). It just makes me wonder what the future will look like given how much further we plan on incorporating technology into our lives: will our boundaries for privacy change in 30 years? Will our definition of moral/ethical change too?

marimonda-facereadings

I tried to keep this somewhat short since I have a bad habit of writing essays on these blog posts:

- In law enforcement: I think one main topic covered in most of the readings was the use of facial recognition in law enforcement. This topic is super interesting to me because it’s literally one of the most dystopian applications of AI. And it isn’t limited to facial recognition; AI is even being used to decide who gets a longer sentence.

- Is being recognized a good thing? I think the whole section of the John Oliver video that focused on Clearview.ai was INSANE. It makes me wonder how this doesn’t already violate federal regulations or go beyond any sort of fair use under copyright, especially when multiple corporations like Facebook have asked Clearview to stop. I was under the impression that this type of AI application would be illegal, and it’s so terrifying to know that it’s not.

miniverse-mobiletelematic

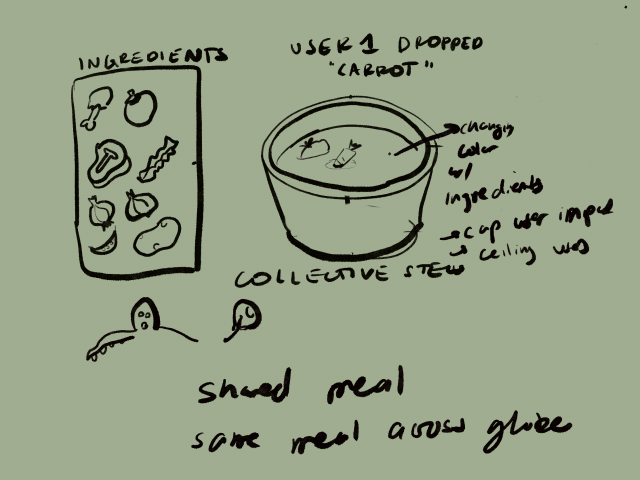

This project allows users to collectively cook and eat a stew with their friends.

To address the local-versus-remote principle, this project is made for people who are far apart. Cooking is an intimate act of creation that requires being in the same place with shared resources. During quarantine, people who would normally cook together can’t, so this project is meant to help simulate that bond.

My process:

- made the sketch

- made some assets

- pulled them up and fixed the orientation and interaction

- added the ability for other people to contribute to the soup

My sketch:

Project Gif:

game link:

https://collaborative-kitchen.glitch.me/

This is a two-person game. You can crash the server with this link:

https://collaborative-kitchen.glitch.me/?crash

video: