Month: November 2017

Final Project Proposal: One of Two options

I explored a couple of other ideas and included them just to show my process.

https://samanthaho.myportfolio.com/final-project

My actual project proposal is to make an interactive lamp that requires a group of people to coordinate with each other. The lampshade itself will be laser-cut and mounted on a vertical track. One team member controls the vertical height of the shade, and the other controls the brightness of the bulb.

The height of the shade will correspond directly to how far person A is from the lamp. Similarly, person B will need to take their phone out and use its light to control the brightness of the bulb via a photoresistor.

It requires the coordination of both parties to properly project shadows and silhouettes onto the table.
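As a rough sketch of the two mappings described above (distance to shade height, phone light to bulb brightness), here is the logic in plain Python. The ranges, the 10-bit sensor scale, the 8-bit brightness scale, and the choice that standing farther away raises the shade are all assumptions for illustration; none of them come from the proposal, and the same arithmetic would need to be ported to the microcontroller.

```python
def scale(value, in_min, in_max, out_min, out_max):
    """Linearly map value from the input range to the output range, clamping at the ends."""
    value = max(in_min, min(in_max, value))
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)

# Hypothetical ranges, to be replaced with measured values.
DIST_MIN_CM, DIST_MAX_CM = 20, 200    # usable range of the distance sensor
SHADE_MIN_MM, SHADE_MAX_MM = 0, 300   # travel of the shade on its track
SENSOR_MAX = 1023                     # assumed 10-bit photoresistor reading
BRIGHTNESS_MAX = 255                  # assumed 8-bit dimmer value

def shade_height_mm(distance_cm):
    """Person A: farther from the lamp means a higher shade (assumed direction)."""
    return scale(distance_cm, DIST_MIN_CM, DIST_MAX_CM, SHADE_MIN_MM, SHADE_MAX_MM)

def bulb_brightness(light_reading):
    """Person B: more phone light on the photoresistor means a brighter bulb."""
    return round(scale(light_reading, 0, SENSOR_MAX, 0, BRIGHTNESS_MAX))
```

Clamping keeps the shade within its track even when someone walks out of sensor range.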

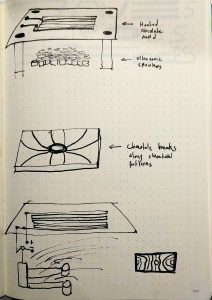

Project proposal: chocolate temperer

I want to create a tool to temper chocolate bars with designed weak points; this will allow chocolate to break into specific shapes. To accomplish this, I will make a heated bar mold that controls the rate at which the chocolate tempers. Ultrasonic speakers will be placed below the chocolate bar mold. As the material cools, standing interference waves will vibrate the chocolate to create low-pressure areas. The chocolate’s crystal structure / density will be different along specific lines.

Steps:

- Design 3D model for chocolate bar

- 3D print

- Screen print resistive, conductive ink on thermoform, food-safe plastic to create heating element

- Thermoform printed plastic over 3D print

- Test heating element by running current through print

- Mount 2-4 ultrasonic speakers under the bar mold

- Program the Arduino to run through a sine-wave frequency sweep; the user can press a button when the desired interference pattern appears.

- test!
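The sweep-and-stop step in the list above could look roughly like this. It is only a logic sketch in Python: the frequency range and step size are made-up values, and `button_pressed` is a hypothetical callback standing in for reading the push button (or capacitive sensor) on real hardware.

```python
def sweep_frequencies(start_hz, stop_hz, step_hz):
    """Yield frequencies from start_hz up to and including stop_hz."""
    f = start_hz
    while f <= stop_hz:
        yield f
        f += step_hz

def run_sweep(frequencies, button_pressed):
    """Step through the sweep and return the frequency at which the user pressed
    the button, or None if the sweep finished without a press."""
    for f in frequencies:
        # On hardware, the speakers would be driven at f here for a short dwell time.
        if button_pressed(f):
            return f
    return None
```

For example, `run_sweep(sweep_frequencies(20000, 40000, 500), button_pressed)` would hold onto whichever frequency was playing when the button went down, so the mold can keep vibrating at that pattern.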

Tools:

- Arduino

- ultrasonic speakers

- push button (or capacitive sensor)

- thermoform plastic

- 3D print of chocolate mold

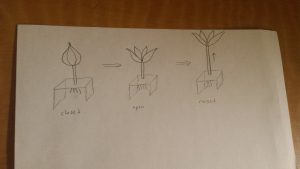

An Interactive Mechanical Flower

Abstract/Concept

For my final project I want to create an interactive mechanical flower. The idea behind the interaction is that the flower thrives off of human touch and connection rather than sunlight and water. I am imagining a structure with a single flower bud and stem mounted on a clear box or frame, and roots suspended underneath (see images below).

Conceptually: I want to accomplish a few things with this. First, I want to explore an organic, fluid, and natural side to mechanical design as opposed to linear and strictly functional. Second, I want to portray the idea that living things need human connection just as much as they need the basics of food, water, shelter, etc.

In terms of what the flower will actually do: I want the flower to close when nobody is around and open when people come closer (the analog to opening/closing in response to sunlight). If possible it would be cool if it could “grow”, maybe get taller or raise and lower, when people touch its roots (the analog to growing in response to water and nutrients).

Hardware

- Flower

  - Wood (probably ⅛” basswood, which has worked well for me in the past)

  - Distance sensor

  - DC motor

  - H-bridge

- Stem

  - Long threaded rod and thing to screw it into

  - DC motor

  - H-bridge

- Roots

  - Thin wires for the roots

  - Capacitive touch sensor

- Base

  - Clear acrylic

Software

- Flower

  - I need code to read the distance sensor and open/close the flower accordingly

- Stem/roots

  - I need code that will read capacitive touch input and raise/lower the stem accordingly
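A minimal sketch of the two pieces of decision logic listed above, written in Python so it can be tried without hardware. The distance thresholds and the stem travel are hypothetical numbers; using two thresholds (hysteresis) is a suggestion to stop the petals from chattering when someone stands right at the boundary, not something the proposal specifies.

```python
# Hypothetical thresholds: open when someone is nearer than 80 cm,
# close only once they are farther than 120 cm.
OPEN_BELOW_CM = 80
CLOSE_ABOVE_CM = 120

def petal_command(distance_cm, is_open):
    """Decide what the petal motor should do for the current distance reading."""
    if not is_open and distance_cm < OPEN_BELOW_CM:
        return "open"
    if is_open and distance_cm > CLOSE_ABOVE_CM:
        return "close"
    return "hold"

def stem_command(touched, height_mm, max_mm=100):
    """Raise the stem while the roots are touched, lower it otherwise,
    and hold at either end of the (assumed) travel."""
    if touched and height_mm < max_mm:
        return "raise"
    if not touched and height_mm > 0:
        return "lower"
    return "hold"
```

The returned strings would be translated into H-bridge direction signals in the actual motor code.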

Plan

- Make simple petals that open and close using a motor and H-bridge

- Make the opening/closing respond to distance sensor

- Create a raise/lower mechanism using motor

- Mount flower to this

- Make the raise/lower respond to touch sensors

The Indexical Mark Machine

I want to make a drawing machine. What interests me about machines drawing is rhythms in mark making, rather than accuracy and depiction. I think what’s beautiful about mechanical drawing is the pure abstraction of endless uniform marks done in a pattern, simple or complex, that is evidence of the same motion done over and over again.

I feel what’s most beautiful about all art is the presence of the indexical mark: the grain of a brush stroke, the edge and slight vibrations in a line of ink that prove it was drawn with a human hand, or the fingerprints in a clay sculpture. I make the case that the difference between artistic media is defined by indexical marks. Do two works have different indexical marks? Then they are different forms of art entirely, showing us different aspects of compositional potential.

So I want to invent new indexical marks, ones that the human hand is not capable of producing. I want to see patterns fall out of a mechanical gesture that I built, but didn’t anticipate all the behaviors of, and to capture a map of these patterns on paper.

I don’t care if the machine can make a representational image; rather I want to make a series of nodes and attachments that each make unique patterns, which can each be held by mechanical arms over a drawing surface, each hold a variety of drawing tools, and be programmed into “dancing” together.

Hardware

- 5 V stepper motors

- 12 V stepper motors

- 12 V DC motors

- Sliding potentiometers; light and sound sensors (I want the frequencies of the mark-making mechanisms to be adjustable by both controlled factors and factors influenced by the environment.)

- Controller frame

- Cardboard for prototyping the structure of the machine

- Acrylic to be laser cut for the final structure

Software

- Built from the ground up. The most complex programming will be that of the arms which position the drawing attachments over different places on the drawing surface. I may use a coordinate positioning library for a configuration of motors that pushes and pulls a node into various positions with crossing “X and Y” arms.
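To make the “X and Y” positioning idea concrete, here is one possible sketch in Python. It assumes each arm is driven by a stepper with a fixed number of steps per millimetre (the value below is invented) and interleaves the two axes so the node travels in a roughly straight line. This is a hand-rolled illustration, not the API of any particular positioning library.

```python
STEPS_PER_MM = 5  # hypothetical; depends on the motors and the drive mechanism

def steps_to_target(current_steps, target_mm):
    """Return (dx, dy): how many steps each arm must move to reach target_mm."""
    tx = round(target_mm[0] * STEPS_PER_MM)
    ty = round(target_mm[1] * STEPS_PER_MM)
    return tx - current_steps[0], ty - current_steps[1]

def interleave_moves(dx, dy):
    """Spread the shorter axis evenly across the longer one.
    Returns a list of (step_x, step_y) pairs, each component -1, 0, or 1."""
    n = max(abs(dx), abs(dy))
    sx = (dx > 0) - (dx < 0)   # direction of travel on X
    sy = (dy > 0) - (dy < 0)   # direction of travel on Y
    moves = []
    done_x = done_y = 0
    for i in range(1, n + 1):
        want_x = abs(dx) * i // n   # steps X should have taken by now
        want_y = abs(dy) * i // n   # steps Y should have taken by now
        step_x = sx if want_x > done_x else 0
        step_y = sy if want_y > done_y else 0
        done_x += abs(step_x)
        done_y += abs(step_y)
        moves.append((step_x, step_y))
    return moves
```

Each pair in the returned list would become one pulse (or no pulse) to each stepper driver.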

Timeline

- Weeks 1 and 2

Make several attachable drawing tool mechanisms which each hold a drawing tool differently, and move it about in a different pattern.

- Week 3

Build a structure that holds the attachable nodes over a drawing surface, with the capability of arms to move the nodes across different areas of the surface.

- Week 4

Build a control board and sensor inputs that can be used to change the patterns of the arms and the nodes.

- Week 5

Program built-in patterns whose parameters the controls can influence.

- Week 6

Make some more nodes, and make some drawings!

Project Proposal: Fishies

Concept statement:

I plan on making an automatic fish feeder/pump system that responds to texts (or emails, or some similar interaction): certain key phrases will trigger specific responses in the system. I want to use this project to synthesize a more human interaction between people and their fish. While texting isn’t the most intimate form of communication, it’s such a casual means of talking to other people that I think it will be useful in creating an artificial sense of intimacy.

Hardware: some sort of feeding mechanism (motor-based?), submersible pump (small), lights (LEDs), fish tank, fish (I already have the last two, don’t worry)…. I’m not sure what I’d need to connect with an Arduino via SMS or through Wi-Fi.

Software: I’ll need software to make the arduino respond to texting (or something similar), and then perform fairly straightforward mechanical outputs
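One way the “key phrases trigger specific responses” idea might be structured, sketched in Python. The phrases and action names below are invented placeholders, and how the message text actually reaches the code (SMS, email, or something else) is left open, as it is in the proposal.

```python
import re

# Hypothetical key phrases and the outputs they would trigger.
ACTIONS = {
    "feed": "run_feeder",
    "bubbles": "run_pump",
    "lights": "toggle_lights",
}

def actions_for_message(text):
    """Return the actions triggered by a message, matching whole words only,
    so that 'feedback' does not accidentally feed the fish."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return [action for phrase, action in ACTIONS.items() if phrase in words]
```

Each returned action name would map to one of the mechanical outputs (feeder motor, pump, LEDs).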

Order of constructing and testing: first I need to get the arduino response down pretty well, since the project largely hinges on that, then creating a feeding mechanism will be the next priority… everything after that will largely be “frills”/things that aren’t crucial to the project. As I add components, I’ll need to figure out how to display them non-ratchetly. I’m also definitely going to need constant reminders to document my process.

Final project proposal: smartCUP (updated)

Here is the updated proposal

Abstract

How do we make art, and better yet, how do we express ourselves through art? Traditionally, people express thoughts and feelings through intentional choices of color, strokes, and medium. I want to experiment with the idea of skipping artists’ intentional choices and allowing their body (or physical properties of their body) to make these choices. I believe this will allow artists to have a closer (physical) connection with their artwork because their body would go through the same change in states as their artwork.

I want to upcycle paper cups (the kind you would get at Starbucks) as a tool for drawing. The cup can animate the user’s interactions (opening the cup, drinking, warming up drinks, even socializing with cups), and then it will turn these (unstaged) interactions into art.

Concept drawing

Cup design: to be added

Inspiration:

Hardware

- temperature sensor

- Neopixel LED

- tilt sensor

- potentiometer

- photo-sensitive paint

Software (tentative)

- blynk — connecting arduino data to PC

- pygame (looking for better option for UI)

Order of construction and testing

- Potentiometer + tilt sensor -> color change of Neopixel LED

- temp sensor -> color change of Neopixel LED

- Fit things into cup

- proximity sensor -> 2 cups interact

- make an information poster for the final show

- write up my artist’s statement for the final show
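The first two construction steps (sensor readings driving the LED color) could be prototyped along these lines. This Python sketch only computes an RGB value; the temperature range, the blue-to-red gradient, and the idea that tilting the cup dims the color are all assumptions made for illustration.

```python
COLD_RGB = (0, 0, 255)   # assumed color for a cold drink
HOT_RGB = (255, 0, 0)    # assumed color for a hot drink
TEMP_MIN_C, TEMP_MAX_C = 10.0, 70.0  # hypothetical range of drink temperatures

def lerp_color(cold, hot, t):
    """Blend two RGB colors; t=0 gives cold, t=1 gives hot (t is clamped)."""
    t = max(0.0, min(1.0, t))
    return tuple(round(c + (h - c) * t) for c, h in zip(cold, hot))

def cup_color(temp_c, tilted):
    """Map drink temperature to a color, and halve the brightness while the cup is tilted."""
    t = (temp_c - TEMP_MIN_C) / (TEMP_MAX_C - TEMP_MIN_C)
    r, g, b = lerp_color(COLD_RGB, HOT_RGB, t)
    if tilted:
        return (r // 2, g // 2, b // 2)
    return (r, g, b)
```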

=======================================

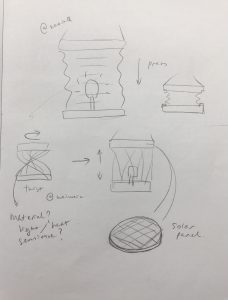

Abstract

How do we make art, and better yet, how do we express ourselves through art? Traditionally, people express thoughts and feelings through intentional choices of color, strokes, and medium. I want to experiment with the idea of skipping artists’ intentional choices and allowing their body (or physical properties of their body) to make these choices. I believe this will allow artists to have a closer (physical) connection with their artwork because their body would go through the same change in states as their artwork.

I want to make a lantern as a tool for drawing. The lantern will be tied to the user’s hand. The user’s body temperature controls how much the lantern expands, which in turn changes the intensity of the color; the user changes the color by changing the orientation of their hand and the lantern. A virtual canvas will then capture the states and motions of the lantern to form a painting.

Concept drawing

Lantern design:

Lantern motion:

Sample output:

Hardware

- accelerometer

- temperature sensor

- Neopixel LED

Software

- blynk — connecting arduino data to PC

- pygame (looking for better option for UI)

Order of construction and testing

- Accelerometer -> color change of Neopixel LED

- make lantern shade

- temp sensor -> lantern shade expand and contract

- work on data threading (location + orientation)

- work on UI

- QR code (for user to save artwork)

- make an information poster for the final show

- write up my artist’s statement for the final show
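As an illustration of how orientation and temperature might combine into one color, here is a small Python sketch using the standard `colorsys` module. Treating the tilt direction as hue and the temperature as brightness is one possible reading of the concept above; the temperature range and the use of only the accelerometer’s x and y axes are assumptions.

```python
import colorsys
import math

def hue_from_tilt(ax, ay):
    """Map the direction the hand is tilted (accelerometer x/y) to a hue from 0 to 1."""
    angle = math.atan2(ay, ax)  # -pi..pi
    return (angle % (2 * math.pi)) / (2 * math.pi)

def lantern_rgb(ax, ay, temp_c, temp_min=30.0, temp_max=37.0):
    """Orientation picks the hue; skin temperature (assumed 30-37 C) sets the intensity."""
    intensity = max(0.0, min(1.0, (temp_c - temp_min) / (temp_max - temp_min)))
    r, g, b = colorsys.hsv_to_rgb(hue_from_tilt(ax, ay), 1.0, intensity)
    return tuple(round(c * 255) for c in (r, g, b))
```

The same RGB triple could be sent both to the LED and to the virtual canvas so the painting matches what the lantern shows.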

Assignment 6: Text-to-Speech Keyboard

Abstract / concept statement

I want to make a mobile keyboard that reads aloud the words typed into it. If someone were to lose their ability to speak, this device could be an easy way for them to quickly communicate by typing what they want to say, since typing has become so natural to most people.

Hardware

The most crucial piece of hardware in my project is the keyboard. After doing some research, I believe that a keyboard with the older PS/2 port will be able to interact with the Arduino. I will also need a speaker and a power supply, or maybe a rechargeable battery (it would be nice to have it all be wireless).

Software

The trickiest part of the software process will probably be the text-to-speech. I have found a library on GitHub for Arduino text-to-speech, along with an online example of it in use, so hopefully I won’t run into any issues with that. I’ve also found a library for the keyboard.

https://github.com/jscrane/TTS text to speech

https://github.com/PaulStoffregen/PS2Keyboard ps/2 keyboard

Order of construction and testing

1. get keyboard input

2. use keyboard input to make text-to-speech happen

3. figure out wireless power supply

4. craft some sort of container that hides wires, leaving just the keyboard and speaker exposed.
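Steps 1 and 2 boil down to collecting keystrokes and handing finished text to the speech engine. The Python sketch below shows that buffering logic only; `speak` is a hypothetical callback standing in for whatever the text-to-speech library provides, and speaking on Enter (rather than after every word) is an assumption.

```python
class SpeechBuffer:
    """Collect typed characters and pass the finished line to a speak() callback."""

    def __init__(self, speak):
        self.speak = speak   # hypothetical stand-in for the text-to-speech call
        self.chars = []

    def key(self, ch):
        if ch == "\n":                 # Enter: speak what has been typed so far
            text = "".join(self.chars).strip()
            self.chars.clear()
            if text:                   # ignore empty lines
                self.speak(text)
        elif ch == "\b":               # Backspace: drop the last character
            if self.chars:
                self.chars.pop()
        else:
            self.chars.append(ch)
```

Usage would be one `key()` call per character received from the keyboard library.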

I’m not sure if this project is maybe too simple for a final project, but I like this idea because of the accessibility it could provide someone in need. It is possible that I will add to or modify this idea to better suit the assignment if needed.

Assignment 6: Gaurav Balakrishnan

Please click on the link to see all information!

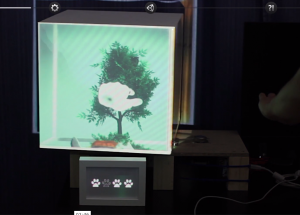

Final Project Proposal

Abstract

I’d like to make an interactive 3D drawing box. Users can draw an object in 3D space and see their drawing projected onto an interactive cube in real time. It will use Unity, Arduino, a projector, and the Leap Motion Sensor. It is heavily inspired by Ralf Breninek’s project: https://vimeo.com/173940321

As well as Leap Motion’s Pinch Draw:

Unfortunately, Pinch Draw is currently only compatible with VR headsets, so it won’t translate directly to my project idea. That’s where I think some of the technical complexity comes in: I will probably have to write my own custom program.

Hardware

- Projector

- Cube (made from white foam core)

- Stand for cube

- Stepper motor

- Arduino

- Leap Motion Sensor

- Power supply

Software

- Uniduino

- Unity

- Firmata for Arduino

- Arduino

Order of Construction and Testing

- Order supplies and follow 3D drawing tutorials for Unity

- Connect projector to computer and figure out dimensions/projection logistics for program

- Build projection cube

- Use Firmata and Uniduino to control Arduino and motor based on Unity output

- Put whole project together: project Unity game onto cube, have cube respond to hand gesture commands, finalize user interface

- Information poster and artist’s statement for final show
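Since a custom drawing program will probably be needed, here is a language-neutral sketch (in Python) of the core pinch-to-draw logic: points are added to a stroke while the hand is pinching, and releasing the pinch ends the stroke. The frame format and the pinch threshold are assumptions for illustration; this does not call the Leap Motion or Unity APIs, and the real version would be written against them.

```python
PINCH_ON = 0.8  # hypothetical threshold on a 0-to-1 pinch strength

def strokes_from_frames(frames):
    """frames: iterable of (pinch_strength, (x, y, z)) samples in time order.
    Returns a list of strokes, each a list of 3D points drawn while pinching."""
    strokes = []
    current = None
    for strength, point in frames:
        if strength >= PINCH_ON:
            if current is None:        # a new pinch starts a new stroke
                current = []
                strokes.append(current)
            current.append(point)
        else:
            current = None             # releasing the pinch ends the stroke
    return strokes
```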