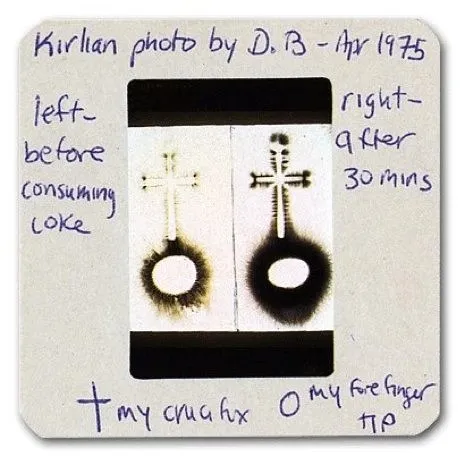

The ethnography of Nafus and Sherman (2014) shows how those in the Quantified Self movement “collect extensive data about their own bodies” to become more aware of their mood, health, and habits, reclaiming the liberatory and interpretive potential of the same technologies that usually “attract the most hungrily panoptical of the data aggregation businesses” in the service of capital, carceral, or managerial ends.

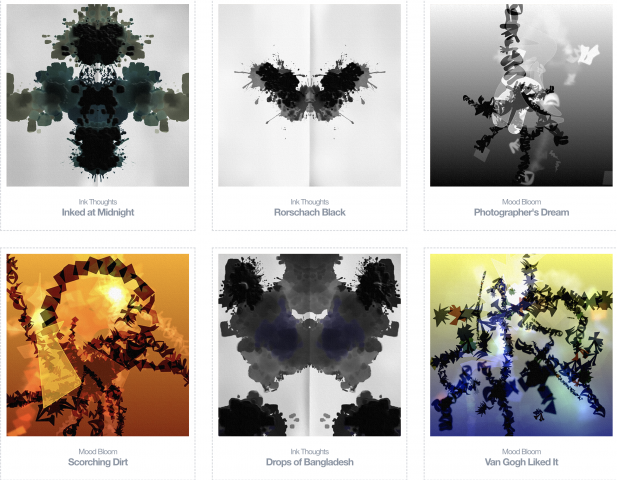

For this “Looking Outwards” report, I encountered Berlin-based fashion designer and sports scientist Anna Franziska Michel, who creates designs for fabric and clothes based on self-captured health and sports data. In her presentation, she wears a red and blue marble-patterned dress she created from her self-tracking data using an “AI” “neural painter.” She observes that the prominence of red shows that she has been sitting more since founding a fashion design company.

However, I found that this work fell short in ways I see other new media art falling short: exciting conceptual impulses motivate the exploration of new technological possibilities, but without a coherent link in the other direction. In what ways do the affordances of the hyped new technological artifact inform new conceptual ideas or possibilities in turn? For example, how does her outfit inform her sense of self, help her better understand her health as she wears it, or comment on the idea of self more generally? When she sells designs based on her own data for others to wear, what does this represent for the wearers? Do they feel any connection to her or to the data they are wearing, or do they just receive it as a cool-looking design, made sexy by the imprimatur of “AI”?

***

Nafus, Dawn, and Jamie Sherman. This One Does Not Go Up to 11: The Quantified Self Movement as an Alternative Big Data Practice. 2014, p. 11.

Michel, Anna Franziska. Using Running And Cycling Data To Inform My Fashion. https://quantifiedself.com/show-and-tell/?project=1098. Quantified Self Conference.

The title quotes John von Neumann, from: Chandler, Daniel. Technological or Media Determinism. 1995.

06.01.2020 18.39 (2022). Video projection, sound and fans
