see Sarah Choi’s post

Author: gkupfers@andrew.cmu.edu

Gretchen Kupferschmid-Looking Outwards 12

My partner and I were inspired by two specific generative artworks for our project. The first is a project on CodePen by Sara B. It creates an interactive map in which the user can click through different parts of the map and view different areas. Though it does not offer as much interaction or information as we would like to provide in our final project, we were drawn to the visual quality of the map and to the concept of building an interactive map in code to reveal certain details.

https://codepen.io/aomyers/pen/LWOwpR

The second generative project was created for Sister City, a hotel in NYC. Microsoft and the musician Julianna Barwick created a piece that takes what is happening outside in the sky and reflects it as a musical composition. For example, when clouds pass by, a certain sound in the composition is generated, and it changes based on things such as the size and color of the clouds. This inspired my partner and me to look at cities represented as sounds, rather than through the usual pictures, words, and feelings.

https://www.microsoft.com/inculture/musicxtech/sister-city-hotel-julianna-barwick-ai-soundscape/

Gretchen Kupferschmid-Project 12-Proposal

For Sarah Choi’s and my final project, we want to focus on aspects of living in a city, from the noises inside it, to the places we go to eat, to how we feel about the atmosphere of different parts of the city as a whole. Our project would be specific to Pittsburgh and would show different areas of the city, along with the differences among them, as the user clicks around the map. The user would also be able to hear sounds or music matching the vibe of different areas or locations, which we would present as some of the places we visit and recommend (like coffee shops or restaurants).

Since both of us grew up in cities, we see an importance in highlighting aspects of the city in a visual way and in helping people better understand the “vibe” of specific places or areas before visiting. We also want to highlight how much we enjoy recommending places to others from our own point of view, as opposed to services like Yelp that aggregate reviews from so many random people.

Though we would work together and help each other equally, one of us would focus more heavily on building the map and its aesthetics, while the other would work on the map’s interactive sounds and text.

Gretchen Kupferschmid-Looking Outward-11

As a graduating project, Hannah Rozenberg of the Royal College of Art created “Building without Bias: An Architectural Language for Post-Binary”. This project is a digital tool that aims to calculate the underlying gender bias in our English architectural terms, in the hope of creating more gender-neutral environments. The project uses an algorithm that helps you find out whether or not a building is biased and helps the user add or subtract elements to improve the balance. The calculator in the program assigns a “gender unit” to various architectural terms (such as steel, cement, or nursery), and based on their “gu”, the user can then create spaces that, once all the components are added up, are not weighted toward either the feminine or the masculine. I appreciate how Rozenberg has begun to tackle gender bias in architectural spaces, because I think it’s something that isn’t given enough attention in gender studies. Rozenberg’s project allows design to still occur in a responsible manner and aligns a traditional practice like architecture with the ideas and societal advancements of the 21st century.
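To make the idea of adding up “gender units” concrete, here is a minimal sketch of how such a calculator might work. The scores, the sign convention (negative leaning feminine, positive leaning masculine), and the function names `spaceBias` and `bestAddition` are all assumptions invented for illustration; they are not Rozenberg’s data or algorithm.

```javascript
// Hypothetical "gu" scores, invented for illustration only.
// Assumed convention: negative leans feminine, positive leans masculine.
const genderUnits = { steel: 6, cement: 4, nursery: -8, garden: -2 };

// Add up the scores of every element in a space; a total near 0 is balanced.
function spaceBias(elements) {
  return elements.reduce(function (total, name) {
    return total + (genderUnits[name] || 0);
  }, 0);
}

// Suggest the single element whose addition moves the total closest to 0.
// Returns null when no addition improves on the current balance.
function bestAddition(elements) {
  const current = spaceBias(elements);
  let best = null;
  let bestDistance = Math.abs(current);
  for (const name of Object.keys(genderUnits)) {
    const distance = Math.abs(current + genderUnits[name]);
    if (distance < bestDistance) {
      best = name;
      bestDistance = distance;
    }
  }
  return best;
}
```

With these made-up numbers, a space built from steel and cement scores 10, and the sketch would suggest adding a nursery to pull the total back toward zero.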

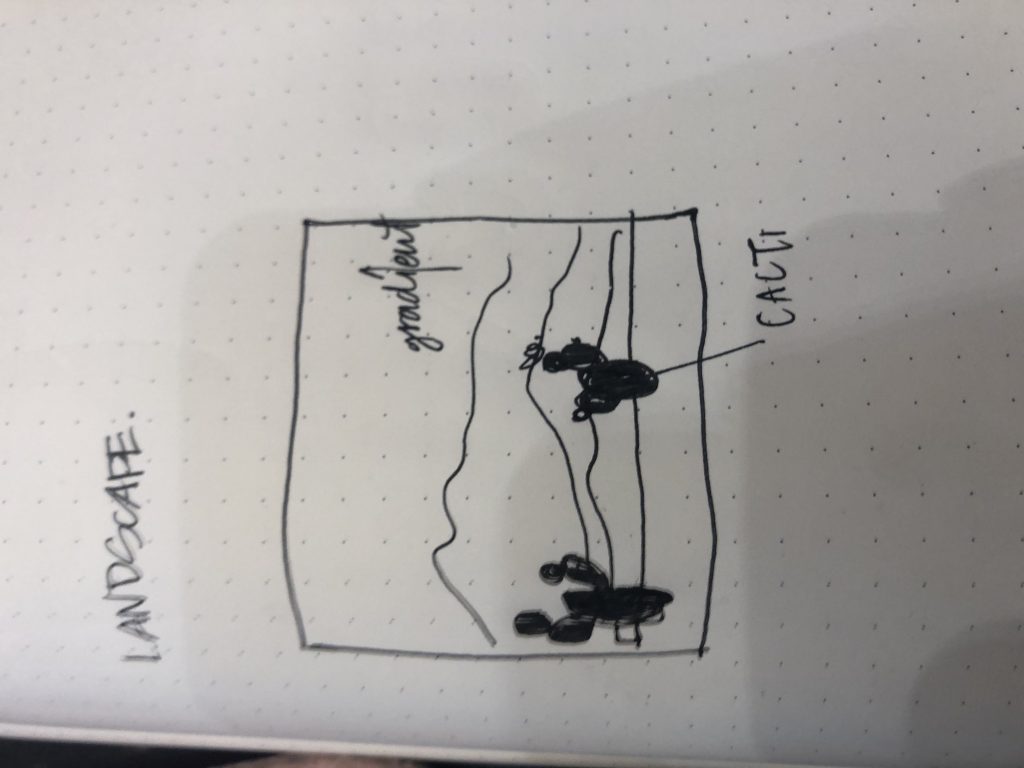

Gretchen Kupferschmid-Project 11- Landscape

//initialize variables

var terrainSpeed = 0.0005;

var terrainDetail = 0.005;

var groundDetail= 0.005;

var groundSpeed = 0.0005;

var cactus = [];

var c1, c2; //gradient colors assigned in draw()

function setup() {

createCanvas(480,480);

frameRate(10);

//start landscape out with 3 cacti

for(var i = 0; i < 3; i++){

var rx = random(0,width);

cactus[i] = makeCactus(rx);

}

}

function draw (){

//create gradient colors

c1= color(255, 149, 0);

c2= color(235, 75, 147);

setGradient(c1, c2);

//call functions

drawDesertMtn();

updateCactus();

removeCactus();

addCactus();

}

function setGradient(c1, c2){

//create the gradient background

noFill();

for(var i = 0; i < height; i++){

var grad = map(i, 0, height, 0, 1);

var col = lerpColor(c1, c2, grad);

stroke(col);

line(0, i, width, i);

}

}

function drawDesertMtn(){

//back mtn

fill(255, 155, 84);

noStroke();

beginShape();

//make the terrain randomize and go off screen

for (var x = 0; x < width; x++) {

var t = (x * terrainDetail) + (millis() * terrainSpeed);

var y = map(noise(t), 0,2, 50, height);

vertex(x, y);

}

vertex(width,height);

vertex(0,height);

endShape();

//middle mtn

fill(245, 105, 98);

noStroke();

beginShape();

//make the terrain randomize and go off screen

for (var x = 0; x < width; x++) {

var t = (x * terrainDetail) + (millis() * terrainSpeed);

var y = map(noise(t), 0,1, 100, height);

vertex(x, y);

}

vertex(width,height);

vertex(0,height);

endShape();

//closest mtn

fill(224, 54, 139);

noStroke();

beginShape();

//make the terrain randomize and go off screen

for (var x = 0; x < width; x++) {

var t = (x * terrainDetail) + (millis() * terrainSpeed);

var y = map(noise(t), 0,1,200,height);

vertex(x, y);

}

vertex(width,height);

vertex(0,height);

endShape();

//ground

fill(165, 196, 106);

noStroke();

beginShape();

//make the ground randomize and go off screen

for (var x = 0; x < width; x++) {

var t = (x * groundDetail) + (millis() * groundSpeed);

var y = map(noise(t), 0, 1,400,480);

vertex(x, y);

}

vertex(width, height);

vertex(0,height);

endShape();

}

//change x value of cactus as cactus moves

function moveCactus(){

this.x += this.speed;

}

function makeCactus(cactusX){

//create the cactus as an object w some random parts

var cactus={

x:cactusX,

speed: -6,

breadth:311,

color: random(0,100),

length: random(50,100),

move: moveCactus,

display: displayCactus,

}

return cactus;

}

function updateCactus(){

//loop through an array of cactus to update as adding and moving

for(var i = 0; i < cactus.length; i++){

cactus[i].move();

cactus[i].display();

}

}

function displayCactus(){

//create the cactus visuals with object parts

noStroke();

fill(this.color, 107, 9);

//translate to x value of new cactus

push();

translate(this.x+157, height-40);

ellipse(0,0,94,137);

fill(this.color, 107,9);

ellipse(61, -52, this.length,48);

fill(this.color, 115, 28);

ellipse(47,-83,19,27);

fill(90, 115, 22);

ellipse(81, -74, 24,24);

fill(90, 115, 22);

ellipse(-34, -86, this.length, 74);

fill(this.color, 115, 22);

ellipse(-18, -131, 41,41);

fill(252, 197, 247);

//create flower on cactus

beginShape();

curveVertex(-1, -150);

curveVertex(-7,-152);

curveVertex(-7, -147);

curveVertex(-10, -157);

curveVertex(-5, -141);

curveVertex(-4, -135);

curveVertex(1, -140);

curveVertex(8, -135);

curveVertex(8, -142);

curveVertex(8, -147);

curveVertex(3, -148);

curveVertex(3, -153);

curveVertex(-1, -150);

endShape();

pop();

}

function addCactus(){

//add a new cactus to the array

var newCactus = 0.01;

if(random(0,1)< newCactus){

cactus.push(makeCactus(width));

}

}

function removeCactus(){

//remove cactus from array as it goes off the screen

var keepCactus = [];

for(var i = 0; i < cactus.length; i++){

if(cactus[i].x + cactus[i].breadth > 0){

keepCactus.push(cactus[i]);

}

}

cactus = keepCactus;

}

I was inspired by desert landscapes at sunset, dotted with cacti, and I wanted to communicate the vastness of a place like Joshua Tree, CA.
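The cleanup step in `removeCactus()` above, which keeps a cactus only while `x + breadth` is still greater than 0, could also be expressed with `Array.prototype.filter`. This is a small alternative sketch, not the code used in the project; the helper name `keepVisible` is made up for illustration.

```javascript
// Return a new array holding only the cacti whose right edge
// (x + breadth) has not yet scrolled past the left side of the canvas.
function keepVisible(cacti) {
  return cacti.filter(function (c) {
    return c.x + c.breadth > 0;
  });
}
```

Inside the sketch, this would be used as `cactus = keepVisible(cactus);` in place of the manual loop.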

Gretchen Kupferschmid-Project 10 Sonic Sketch

//gretchen kupferschmid

//gkupfers@andrew.cmu.edu

//project 10

//section e

var banana;

var lick;

var mouth;

var eyes;

function preload() {

// call loadImage() and loadSound() for all media files here

banana = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/11/banana.wav");

lick = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/11/lick.wav");

mouth = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/11/mouth.wav");

eyes = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/11/eyes.wav");

}

function setup() {

createCanvas(480, 480);

//======== call the following to use sound =========

useSound();

}

function soundSetup() { // setup for audio generation

banana.setVolume(0.5);

lick.setVolume(0.5);

mouth.setVolume(0.5);

eyes.setVolume(0.5);

}

function draw() {

background(241,198,217);

//mouth lips

noStroke();

fill(255,0,0);

beginShape();

curveVertex(68,359);

curveVertex(101,319);

curveVertex(133,304);

curveVertex(150,312);

curveVertex(175,302);

curveVertex(196,329);

curveVertex(188,360);

curveVertex(197,416);

curveVertex(156,431);

curveVertex(111,404);

curveVertex(68,359);

curveVertex(101,319);

endShape();

//mouth black

fill(0);

beginShape();

curveVertex(87,357);

curveVertex(100,350);

curveVertex(112,337);

curveVertex(130,328);

curveVertex(146,327);

curveVertex(170,320);

curveVertex(181,330);

curveVertex(178,363);

curveVertex(142,363);

curveVertex(87,357);

curveVertex(100,350);

endShape();

//mouth tongue

fill(240,110,255);

noStroke();

beginShape();

curveVertex(84,357);

curveVertex(127,358);

curveVertex(176,365);

curveVertex(177,408);

curveVertex(133,398);

curveVertex(84,357);

curveVertex(127,358);

endShape();

//mouth teeth

fill(255);

beginShape();

curveVertex(107,341);

curveVertex(129,328);

curveVertex(148,327);

curveVertex(171,320);

curveVertex(180,327);

curveVertex(175,340);

curveVertex(158,343);

curveVertex(150,340);

curveVertex(146,342);

curveVertex(127,341);

curveVertex(120,345);

curveVertex(114,340);

curveVertex(107,341);

endShape();

//banana

fill(235,222,176);

beginShape();

curveVertex(325,39);

curveVertex(325,45);

curveVertex(326,10);

curveVertex(336,9);

curveVertex(347,39);

curveVertex(363,77);

curveVertex(352,90);

curveVertex(332,89);

curveVertex(325,39);

endShape();

//banana peel

fill(240,201,66);

beginShape();

curveVertex(304,166);

curveVertex(311,149);

curveVertex(310,102);

curveVertex(318,80);

curveVertex(331,86);

curveVertex(343,92);

curveVertex(349,86);

curveVertex(364,80);

curveVertex(364,64);

curveVertex(407,89);

curveVertex(423,115);

curveVertex(412,117);

curveVertex(395,108);

curveVertex(379,104);

curveVertex(373,96);

curveVertex(383,132);

curveVertex(412,189);

curveVertex(398,194);

curveVertex(372,181);

curveVertex(340,134);

curveVertex(331,104);

curveVertex(329,113);

curveVertex(331,134);

curveVertex(326,161);

curveVertex(306,167);

curveVertex(304,166);

curveVertex(311,149);

endShape();

//banana dots

fill(96,57,19);

ellipse(359,155,6,6);

ellipse(366,160,4,5);

ellipse(363,165,3,5.5);

//ice cream cone

fill(166,124,82);

triangle(337,437,290,310,384,310);

//ice cream cone shadow

fill(124,88,53);

ellipse(337,320,80, 20);

//ice cream

fill(240,110,170);

beginShape();

curveVertex(275,296);

curveVertex(283,279);

curveVertex(299,270);

curveVertex(304,259);

curveVertex(317,255);

curveVertex(321,240);

curveVertex(330,236);

curveVertex(336,220);

curveVertex(349,210);

curveVertex(347,226);

curveVertex(357,234);

curveVertex(362,245);

curveVertex(364,247);

curveVertex(378,256);

curveVertex(362,268);

curveVertex(394,292);

curveVertex(397,311);

curveVertex(352,319);

curveVertex(351,314);

curveVertex(293,316);

curveVertex(277,304);

curveVertex(275,296);

curveVertex(283,279);

endShape();

//eye line

noFill();

stroke(0);

strokeWeight(4);

beginShape();

curveVertex(59,129);

curveVertex(97,102);

curveVertex(150,99);

curveVertex(184,124);

curveVertex(182,128);

curveVertex(152,145);

curveVertex(100,147);

curveVertex(60,132);

curveVertex(59,129);

curveVertex(97,102);

curveVertex(150,99);

endShape();

//eye interior

noStroke();

fill(0,174,239);

ellipse(126,124,50,50);

fill(0);

ellipse(133,119,14,14);

//eyelashes

strokeWeight(4);

stroke(0);

line(65,123,52,108);

line(75,114,64,95);

line(94,104,87,86);

line(108,98,105,82);

line(129,95,130,77);

line(150,99,155,81);

line(163,107,178,88);

line(178,118,192,102);

}

function mousePressed(){

if(mouseX > 305 && mouseX < 423 && mouseY > 7.2 && mouseY < 195){

banana.play();

}

if (mouseX > 275 && mouseX < 398 && mouseY > 211 && mouseY < 436){

lick.play();

}

if (mouseX > 70 && mouseX < 196 && mouseY > 302 && mouseY < 432){

mouth.play();

}

if (mouseX > 52 && mouseX < 192 && mouseY > 76 && mouseY < 150){

eyes.play();

}

}

I wanted to play with icons and the sounds that could possibly go with these when clicked on. I could see myself implementing this into my website later on so that sounds can accompany my illustrations.
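The four bounding-box checks in `mousePressed()` above could also be driven by a small table, which may make it easier to add more icons later. The sketch below copies the box coordinates from the conditions in the project code; the names `regions` and `regionsAt` are hypothetical.

```javascript
// Bounding boxes copied from the mousePressed() conditions in the sketch.
const regions = [
  { name: "banana", left: 305, right: 423, top: 7.2, bottom: 195 },
  { name: "lick", left: 275, right: 398, top: 211, bottom: 436 },
  { name: "mouth", left: 70, right: 196, top: 302, bottom: 432 },
  { name: "eyes", left: 52, right: 192, top: 76, bottom: 150 }
];

// Return the names of every region that strictly contains the point (x, y).
function regionsAt(x, y) {
  return regions
    .filter(function (r) {
      return x > r.left && x < r.right && y > r.top && y < r.bottom;
    })
    .map(function (r) {
      return r.name;
    });
}
```

In the p5.js sketch, `mousePressed()` could then loop over `regionsAt(mouseX, mouseY)` and play the matching sound for each name, assuming the loaded sounds are stored in an object keyed by those names.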

Gretchen Kupferschmid-Looking Outward-10

A new piece of sound art was installed at the San Francisco Art Institute that mimics and satirizes the sinking and tilting Millennium Tower in SF. Created by Cristobal Martinez and Kade L. Twist (known together as Postcommodity), the piece uses computational algorithms to represent the movement of the tower. The resulting sounds and beats are meant to be soothing, transforming the sinking and tilting into something therapeutic. The goal of the project is to encourage relaxation through the power of SF’s scenic beauty. I appreciate how the project takes a dire situation and flips it into a call for relief from stress in a city. Art installed in museums is not often meant to calm people or soothe anxieties, so I appreciate this approach, especially given how much can be done with a medium as calming as sound. Though the piece doesn’t debut until the 15th, I am excited to hear the sounds themselves and to see the effect they have on viewers and listeners.

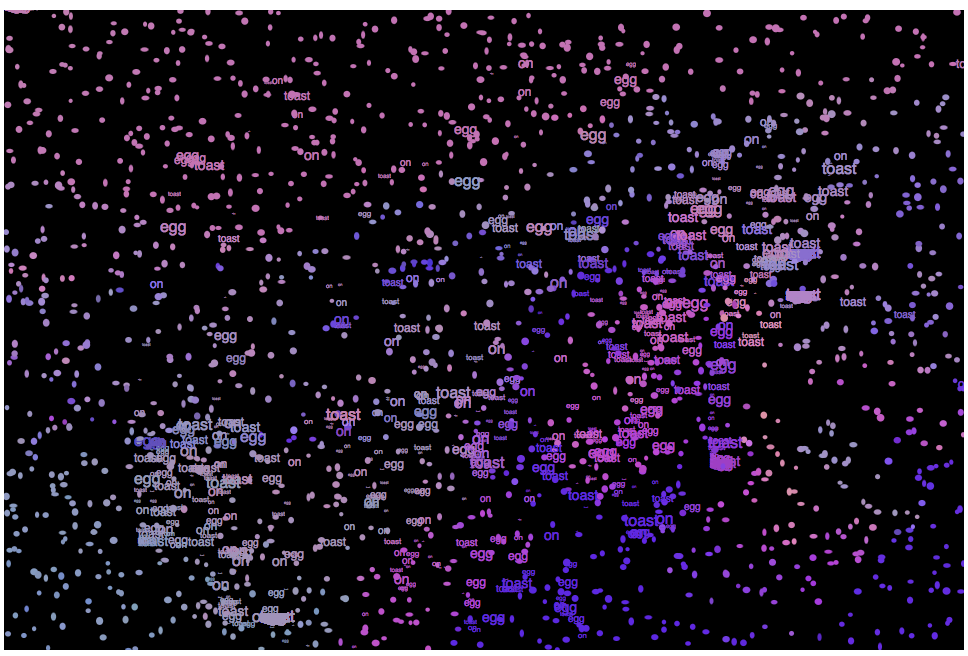

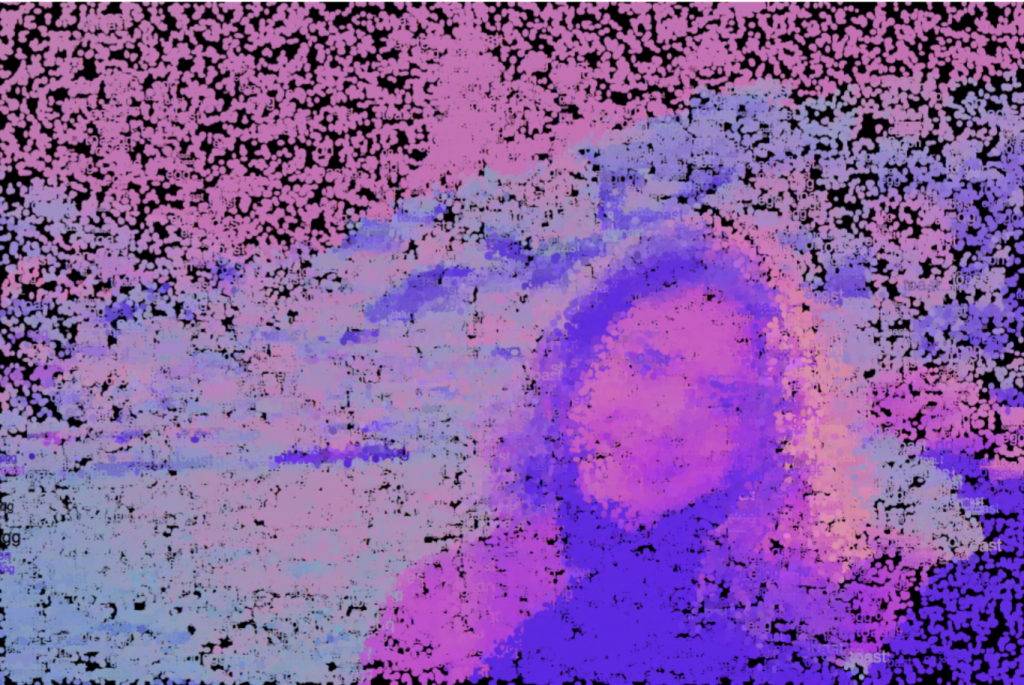

Gretchen Kupferschmid- Project 09- Portrait

// Gretchen Kupferschmid

// gkupfers@andrew.cmu.edu

// Section E

// Project-09

var underlyingImage;

var myText = ['egg','on','toast'];

function preload() {

//loading the image

var myPic = "https://i.imgur.com/bgyIRdv.jpg";

underlyingImage = loadImage(myPic);

}

function setup() {

createCanvas(480, 320);

background(0);

underlyingImage.loadPixels();

//changing how fast loaded

frameRate(1000);

}

function draw() {

//variable to fill photo

var px = random(width);

var py = random(height);

var ix = constrain(floor(px), 0, width-1);

var iy = constrain(floor(py), 0, height-1);

//color at current pixel

var ColorAtLocationXY = underlyingImage.get(ix, iy);

//text at mouseX & mouseY

var ColorAtTheMouse = underlyingImage.get(mouseX, mouseY);

fill(ColorAtTheMouse);

//fill image with text based off mouse

textSize(random(8));

text(random(myText), mouseX, mouseY);

//ellipse at random

noStroke();

fill(ColorAtLocationXY);

//ellipses to fill photo with random sizes

ellipse(px, py, random(2,4), random(2,4));

}

In this project, I wanted to work with two different shapes that come together at different times to make a portrait. I also wanted to make my portrait in colors that differ from those of a traditional photo.
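The sketch above clamps the random point with `constrain(floor(px), 0, width-1)` but samples the mouse position directly, and the mouse can sit outside the canvas where there is no image pixel to sample. A minimal sketch of applying the same clamp to any coordinate pair follows; the helper name `clampToImage` is an assumption, and it uses plain `Math` functions so it runs outside p5.js.

```javascript
// Clamp a (possibly fractional or out-of-range) position to valid
// integer pixel coordinates for an image of the given size.
function clampToImage(x, y, imgWidth, imgHeight) {
  const ix = Math.min(Math.max(Math.floor(x), 0), imgWidth - 1);
  const iy = Math.min(Math.max(Math.floor(y), 0), imgHeight - 1);
  return [ix, iy];
}
```

In `draw()`, the result of `clampToImage(mouseX, mouseY, width, height)` could be passed to `underlyingImage.get()` so that the text color always comes from a real pixel.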

Gretchen Kupferschmid-Looking Outward-09

For this Looking Outwards post, I will be analyzing Angela Lee’s Looking Outward-07 post. As Angela described, I am also intrigued by this project’s focus on emotion and how it relates to humans, as well as by how emotion can be turned into a data visualization even though it’s not necessarily quantitative data. I found a couple of other things interesting about this project, such as the fact that it was commissioned by the Dalai Lama and that the circles and shapes represent much more complex ideas than their form would suggest. Each emotion is represented as its own “continent”, and the movement of these continents reflects how emotion varies in strength and frequency in people’s lives. The project is also an attempt to express ideas visually and crisply even though the data has varying levels of accuracy and reliability. The Dalai Lama believes that in order to reach a level of calm, we must map our emotions. I appreciate this effort to look deeper at our emotions, especially in a way that lets us see them interactively and compare them side by side.

https://stamen.com/work/atlas-of-emotions/

Gretchen Kupferschmid- Looking Outwards-08

Jake Barton is the founder of Local Projects, an experience design company that has won multiple awards for its museums and public spaces. His work focuses on storytelling and engaging audiences through emotion. He is well known for the algorithm that arranges the names of 9/11 victims by affinity instead of alphabetically. He also created the interactive experience at the Cooper Hewitt museum (which I have visited a couple of times in the past few years and always love), where visitors can save things throughout the museum to view later and create their own “art” pieces on the interactive tables on each floor. Jake Barton is not a new name to me; we learned quite a bit about his work in my design course “Environments”, which focuses on the hybrid of physical and digital spaces. Through this course we learned about many of the ideas that Barton hopes to include in his work, from creating a narrative in your designs, to finding ways to balance digital and physical, to focusing on the user and how they fit into the space.

In his talk, Barton described several projects he has created, two of which I list below.

- Urbanology Project: You choose different tokens (affordability, transportation, livability, sustainability, and wealth), take your place, and argue your convictions; this models how cities actually work. It is an effective way to teach people about cities, using technology that models scenarios and creates a data set that can be compared with other data sets and cities. This helps connect people with the future of cities.

- For the Cleveland Museum of Art: A place with amazing history and architecture, the museum wanted a new visitor experience. A smart table giving access to information was the first idea, but Barton wanted to make the art more relevant and meaningful. He pulled all the interfaces into the center of the gallery, worked from slogans and guiding principles, and turned the principles the curator was trying to explain (such as representation) into experiences. One asks the question “What does a lion look like?”; you choose your definition and then see how other visitors voted. This crowdsourcing gets visitors to think about perception, so you can experience and interpret the art with other visitors.

Through his talk, I realized that he truly tries to connect with his audience by presenting his work as a story or as an account of how he solved a problem. He does not do this in an analytical or robotic way; instead, he brings emotion and human experience to the table so that his audience can connect with the work he has done.

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2020/08/stop-banner.png)