//Siwei Xie

//Section B

//sxie1@andrew.cmu.edu

//Final Project

var size = 10;//original size of brush stroke

function setup() {

createCanvas(480, 480);

background("white");

frameRate(500);//speed of drawing balls

}

function draw() {

//fill unwanted borders with purple rectangles

noStroke();

fill(194, 192, 216);

rect(0, 0, 480, 70);

rect(0, 0, 150, 480);

rect(450, 0, 30, 480);

rect(0, 450, 480, 30);

//titles & functions of drawing pad

textStyle(BOLD);//also resets the ITALIC style left over from the previous frame

textSize(27);

fill("white");

text('My Drawing Pad', 200, 50);

textSize(15);

fill(101, 67, 89);

text('1. Drag mouse', 9, 115);

text('to draw', 25, 133);

text('2. Click buttons', 15, 220);

text('to add patterns', 20, 235);

//notes for buttons

textSize(12);

fill("black");

textStyle(ITALIC);

text('Press B for big brush', 15, 153);

text('Press S for small brush', 15, 168);

//buttons for BACKGROUND

fill(185, 88, 84);//maroon square

square(20, 250, 20);

fill("gray");//gray square

square(60, 250, 20);

fill("yellow");//yellow square

square(100, 250, 20);

//button for ERASE

fill("white");

rect(40, 360, 60, 40);

fill("black");

text('ERASE', 50, 385);

//draw by using BRUSH

if (mouseIsPressed) {

fill(random(255), random(255), random(255));

ellipse(mouseX, mouseY, size);

}

}

function keyPressed(){

if (key === "B" || key === "b") {

size += 10;//increase width of stroke

}

if (key === "S" || key === "s") {

size = max(10, size - 10);//decrease width of stroke, but never below 10

}

}

function mouseClicked(){

//PATTERN 1: use nested loops to draw maroon circles and white vertical lines

if(mouseX > 20 && mouseX < 40 &&

mouseY > 250 && mouseY < 270){

for (var j = 0; j < 12; j++) {

for (var i = 0; i < 8; i++) {

//maroon circles

noStroke();

fill(185, 88, 84);

circle(i * 40 + 160, j * 30 + 95, 20);

//white vertical lines

fill("white");

rect(i * 40 + 155, 80, 3, 370);

}

}

noLoop();

}

//PATTERN 2: grid of gray circles on a white background

if(mouseX > 60 && mouseX < 80 &&

mouseY > 250 && mouseY < 270){

noStroke();//clear any stroke left over from PATTERN 3

fill("white");

rect(150, 70, 300, 380);

for (var y = 0; y < 360; y += 45) {

for (var x = 0; x < 270; x += 45) {

fill(182, 182, 182);

circle(x + 180, y + 105, 45);

}

}

noLoop();

}

//PATTERN 3: sine and cosine curves drawn as thick points

if(mouseX > 100 && mouseX < 120 &&

mouseY > 250 && mouseY < 270){

for (var a = 0; a < 280; a = a + 1) {

strokeWeight(20);

stroke("yellow");

//x = left offset (160) + a; y = baseline (250) - amplitude (100) * sine or cosine of a degrees

point(a + 160, 250 - 100 * sin(radians(a)));

stroke(253, 241, 161);

point(a + 160, 250 - 100 * cos(radians(a)));

}

noLoop();

}

//ERASE:

if(mouseX > 40 && mouseX < 100 &&

mouseY > 360 && mouseY < 400){

noStroke();

fill("white");

rect(150, 70, 300, 380);

loop();//restart draw() so the brush works again after a pattern called noLoop()

}

}

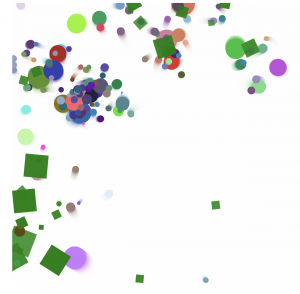

For my final project, I created an “Interactive drawing pad.” First, users can drag the mouse across the white drawing pad to paint a stroke of randomly colored dots, and they can widen or narrow the stroke by pressing B or S. Second, users can click any of the three buttons on the left to fill the pad with a different pattern. Finally, they can click the ERASE button to clear previously drawn strokes or patterns.
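
The button clicks and brush-size keys described above can also be expressed as two small pure functions, which makes them easier to check outside of p5.js. This is only a sketch, not part of the original program: the names `BUTTONS`, `buttonAt`, `nextBrushSize`, `MIN_SIZE`, and `STEP` are hypothetical, the rectangles are copied from the coordinates in `mouseClicked()`, and the lower bound on the brush size is an assumption.

```javascript
// Hypothetical helpers; none of these names appear in the original sketch.

// Button rectangles, copied from the coordinates tested in mouseClicked().
const BUTTONS = {
  pattern1: { x: 20, y: 250, w: 20, h: 20 },
  pattern2: { x: 60, y: 250, w: 20, h: 20 },
  pattern3: { x: 100, y: 250, w: 20, h: 20 },
  erase: { x: 40, y: 360, w: 60, h: 40 },
};

// Return the name of the button under (px, py), or null if none is hit.
// Edges are exclusive, matching the strict > and < comparisons in the sketch.
function buttonAt(px, py) {
  for (const [name, b] of Object.entries(BUTTONS)) {
    if (px > b.x && px < b.x + b.w && py > b.y && py < b.y + b.h) {
      return name;
    }
  }
  return null;
}

// Assumed limits: the brush changes by 10 per key press and never drops below 10.
const MIN_SIZE = 10;
const STEP = 10;

// Compute the next brush diameter for a key press (B grows, S shrinks).
function nextBrushSize(size, key) {
  const k = key.toLowerCase();
  if (k === "b") return size + STEP;
  if (k === "s") return Math.max(MIN_SIZE, size - STEP);
  return size; // any other key leaves the brush unchanged
}
```

Inside the sketch, `mouseClicked()` could call `buttonAt(mouseX, mouseY)` once and branch on the returned name, and `keyPressed()` could assign `size = nextBrushSize(size, key)`.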
