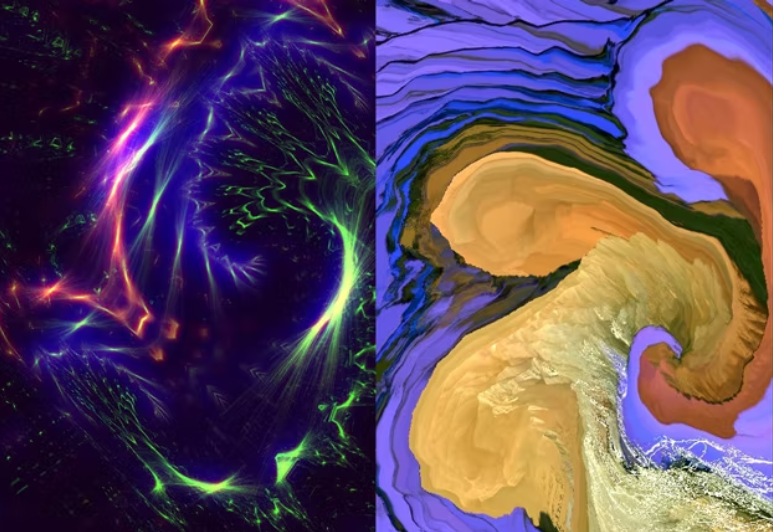

All-Seeing Eye.

sketch

function setup() {

createCanvas(400, 300);

background(73, 80, 87);

}

function draw() {

stringLeftLower(204, 255, 255, 55);

stringLeftUpper(204, 255, 255, 55);

stringRightUpper(204, 255, 255, 55);

stringRightLower(204, 255, 255, 55);

translate(width/2, height/2);

stringCircle(204, 255, 255);

noLoop();

}

function stringLeftLower(r, g, b, t) {

var numLines = 40;

var x1 = 0; var y1 = 0; var y2 = height;

// Declare xInc with var so it does not leak as an implicit global.
var xInc = width / numLines;

var x2 = xInc;

var yInc = (height/numLines);

stroke(r, g, b, t);

for (var index = 0; index < numLines; index++) {

line(x1, y1, x2, y2);

y1 += yInc;

x2 += xInc;

}

}

function stringLeftUpper(r, g, b, t) {

var numLines = 40;

var x1 = 0;

var y1 = height; var y2 = 0;

var xInc = width / numLines;

var x2 = xInc;

var yInc = height/ numLines;

stroke(r, g, b, t);

for (var index = 0; index < numLines; index++) {

line(x1, y1, x2, y2);

y1 -= yInc;

x2 += xInc;

}

}

function stringRightUpper(r, g, b, t) {

var numLines = 40;

var xInc = width / numLines;

var x1 = xInc;

var x2 = width;

var y1 = 0;

var y2 = 0;

var yInc = height/ numLines;

stroke(r, g, b, t);

for (var index = 0; index < numLines; index++) {

line(x1, y1, x2, y2);

y2 += yInc;

x1 += xInc;

}

}

function stringRightLower(r, g, b, t) {

var numLines = 40;

var x1 = width; var x2 = 0;

var xInc = width / numLines;

var yInc = height/numLines;

var y1 = height - yInc; var y2 = height;

stroke(r, g, b, t);

for (var index = 0; index < numLines; index++) {

line(x1, y1, x2, y2);

y1 -= yInc;

x2 += xInc;

}

}

function stringCircle(r, g, b) {

var circlePoints = 36;

var angle = 0;

var rotDeg = 0;

for (var index = 0; index < circlePoints; index++) {

push();

angle = map(index, 0, circlePoints, 0, TWO_PI);

var radius = 90;

var circleX = radius * cos(angle);

var circleY = radius * sin(angle);

translate(circleX, circleY);

rotate(radians(rotDeg));

var circleX2 = -radius * 2;

var circleY2 = 0;

var smallCircleDiam = 10;

var offset = 15;

stroke(r, g, b, 255);

circle(0, 0, smallCircleDiam * .2);

noFill();

circle(0, 0, smallCircleDiam);

stroke(r, g, b, 125);

line(0, 0, circleX2, circleY2);

line(0, 0, circleX2, circleY2 + offset);

line(0, 0, circleX2, circleY2 -offset);

stroke(r, g, b, 50);

line(0, 0, offset * 8, circleY2);

line(0, 0, offset * 8, circleY2 + offset);

line(0, 0, offset * 8, circleY2 -offset);

backgroundCircles(index, offset, r, g, b, 80);

pop();

rotDeg += 10;

}

}

function backgroundCircles(index, offset, r, g, b, t) {

push();

stroke(r, g, b, t);

translate(25, 0);

if (index % 2 == 0) {

circle(0, 0, 20);

circle(110, 0, 70);

} else {

var diam = offset * 4;

circle(offset * 3, 0, diam);

var shiftValue = 10;

circle(offset * 3, -shiftValue, diam/2);

circle(offset * 3, shiftValue, diam/2);

circle(offset * 3 + shiftValue, 0, diam/2);

circle(offset * 3 - shiftValue, 0, diam/2);

}

pop();

}
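
The four string functions above share one pattern: draw a line, then shift one coordinate at each end by a fixed step. As a rough sketch of that idea, the endpoints could be computed by a single helper. The name `stringSegments` and its object-style parameters are assumptions made for illustration and are not part of the original sketch; the example only reproduces the numbers of `stringLeftLower` on the 400 x 300 canvas.

```javascript
// Hypothetical helper (not in the original sketch): returns the endpoints of
// `count` segments. It starts from the segment described by `start` and adds
// the per-line increments in `step` to each coordinate after every segment.
function stringSegments(start, step, count) {
  const segments = [];
  let { x1, y1, x2, y2 } = start;
  for (let i = 0; i < count; i++) {
    segments.push([x1, y1, x2, y2]);
    x1 += step.x1;
    y1 += step.y1;
    x2 += step.x2;
    y2 += step.y2;
  }
  return segments;
}

// Same values as stringLeftLower: 400 x 300 canvas, 40 lines.
const canvasWidth = 400;
const canvasHeight = 300;
const numLines = 40;

const leftLower = stringSegments(
  { x1: 0, y1: 0, x2: canvasWidth / numLines, y2: canvasHeight },
  { x1: 0, y1: canvasHeight / numLines, x2: canvasWidth / numLines, y2: 0 },
  numLines
);
```

Inside a p5.js `draw()` function, each entry could then be drawn with `line(...segment)`, and the other three corners would differ only in their `start` and `step` values.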
