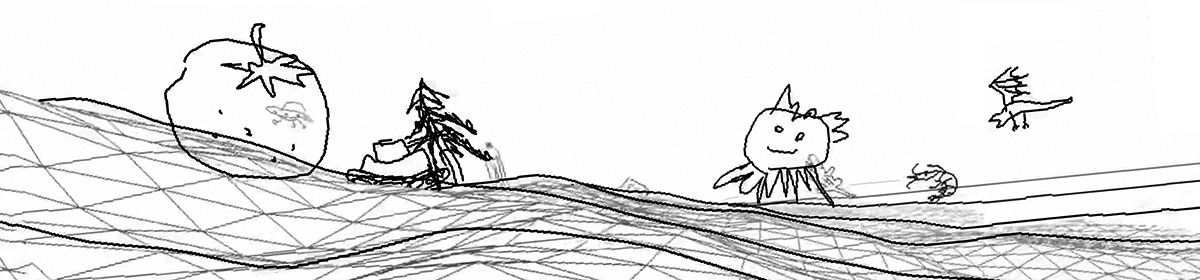

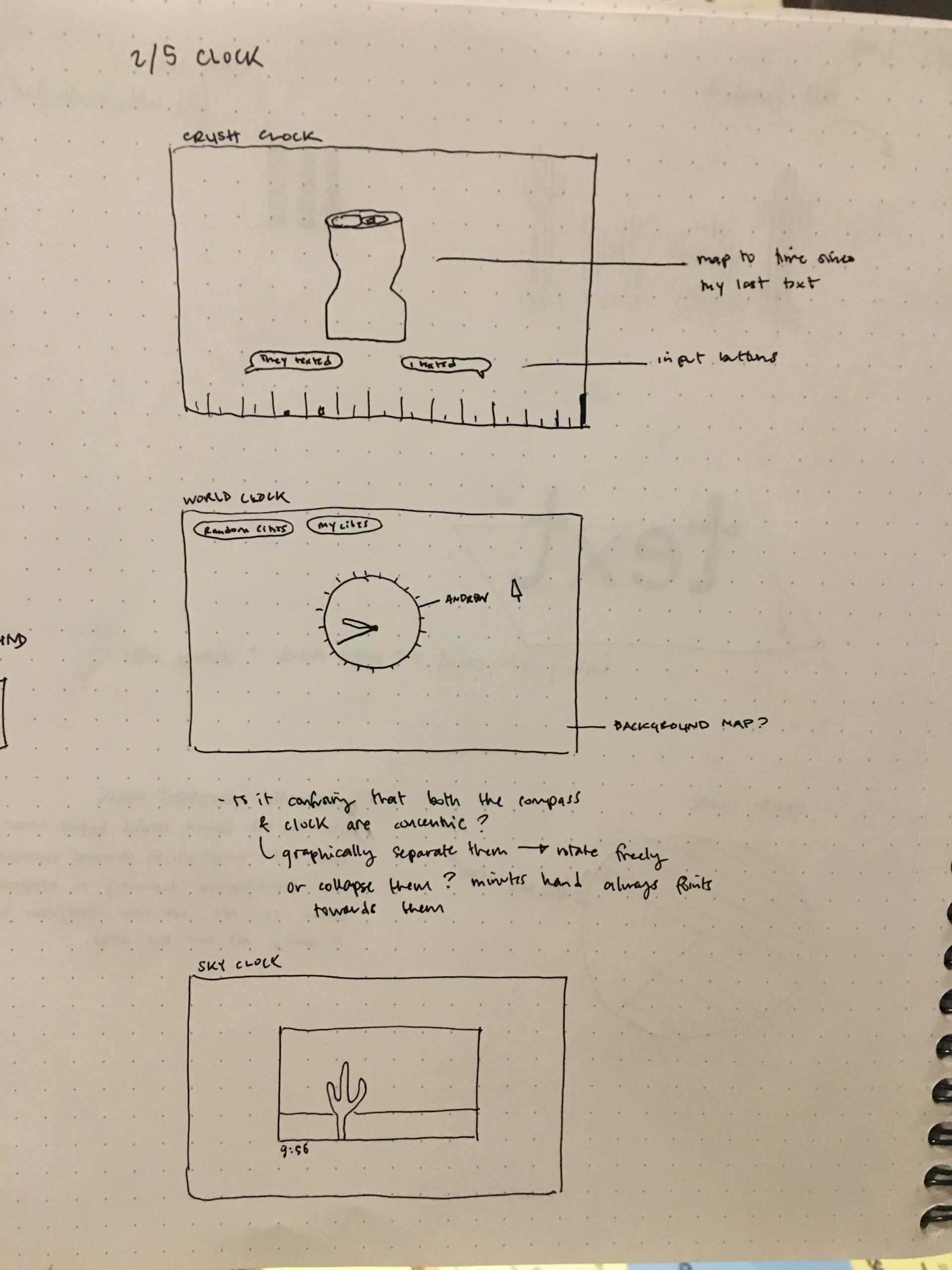

Sky Clock

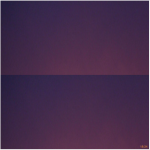

The Sky Clock shows, every minute, an image of the sky that was captured at that same minute of the day. I first wrote a Python script (here) that uses the Flickr API to fetch photos matching the search “sky.” The script keeps only photos with EXIF metadata (to get the actual capture time, not the time the photo was uploaded to Flickr) and saves the results to a JSON file, with a key for each ‘HH:MM’ time and the URLs of matching photos as values. I loaded this JSON into my p5 sketch, which displays an image matching the current time. If there is more than one image for the current time, a random one is chosen.
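A minimal sketch of that indexing step might look like the following. It is not the original script: the record fields `taken` and `url` and the function names `build_index` and `pick_url` are assumptions made for illustration, and it assumes capture times arrive as `YYYY-MM-DD HH:MM:SS` strings.

```python
import json
import random
from collections import defaultdict
from datetime import datetime


def build_index(photos):
    """Group photo URLs under the 'HH:MM' of their capture time.

    `photos` is a list of dicts with hypothetical keys:
    'taken' ('YYYY-MM-DD HH:MM:SS') and 'url'.
    """
    index = defaultdict(list)
    for photo in photos:
        taken = datetime.strptime(photo["taken"], "%Y-%m-%d %H:%M:%S")
        index[taken.strftime("%H:%M")].append(photo["url"])
    return dict(index)


def pick_url(index, now, rng=random):
    """Return a random URL for the minute of `now`, or None if there is none."""
    candidates = index.get(now.strftime("%H:%M"), [])
    return rng.choice(candidates) if candidates else None


if __name__ == "__main__":
    sample = [
        {"taken": "2020-01-05 06:30:12", "url": "https://example.com/a.jpg"},
        {"taken": "2021-07-09 06:30:59", "url": "https://example.com/b.jpg"},
    ]
    index = build_index(sample)
    print(json.dumps(index, indent=2))  # the structure the sketch would load
    print(pick_url(index, datetime.now()))
```

The sketch itself runs in p5, so the lookup there would be done in JavaScript; the Python `pick_url` only shows the same "current minute, then random choice" logic in one place.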

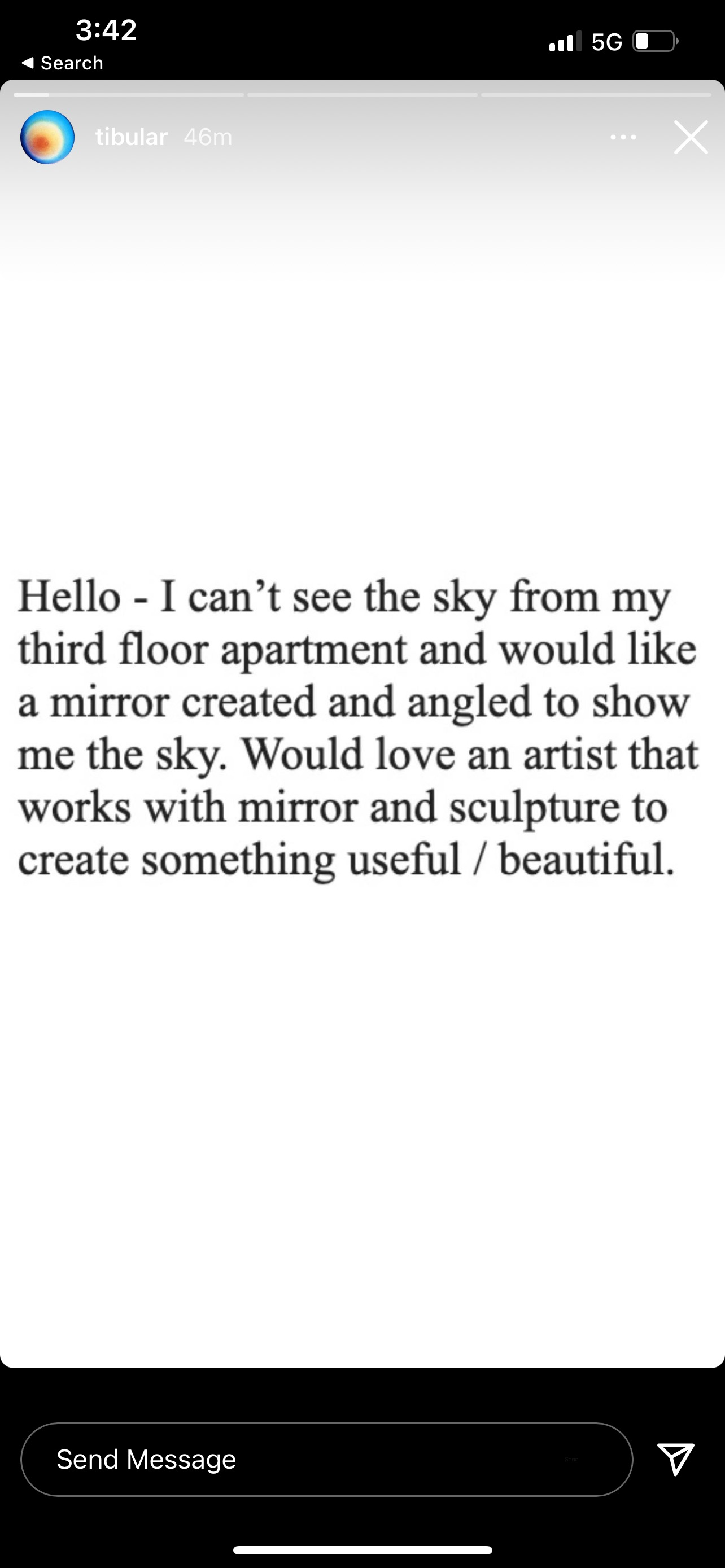

My hope was this would give a real-time sense of the sky that would shift throughout the day, from sunrise through daytime, sunset, and night. It was in part inspired by this post sent to me by a friend a few months ago:

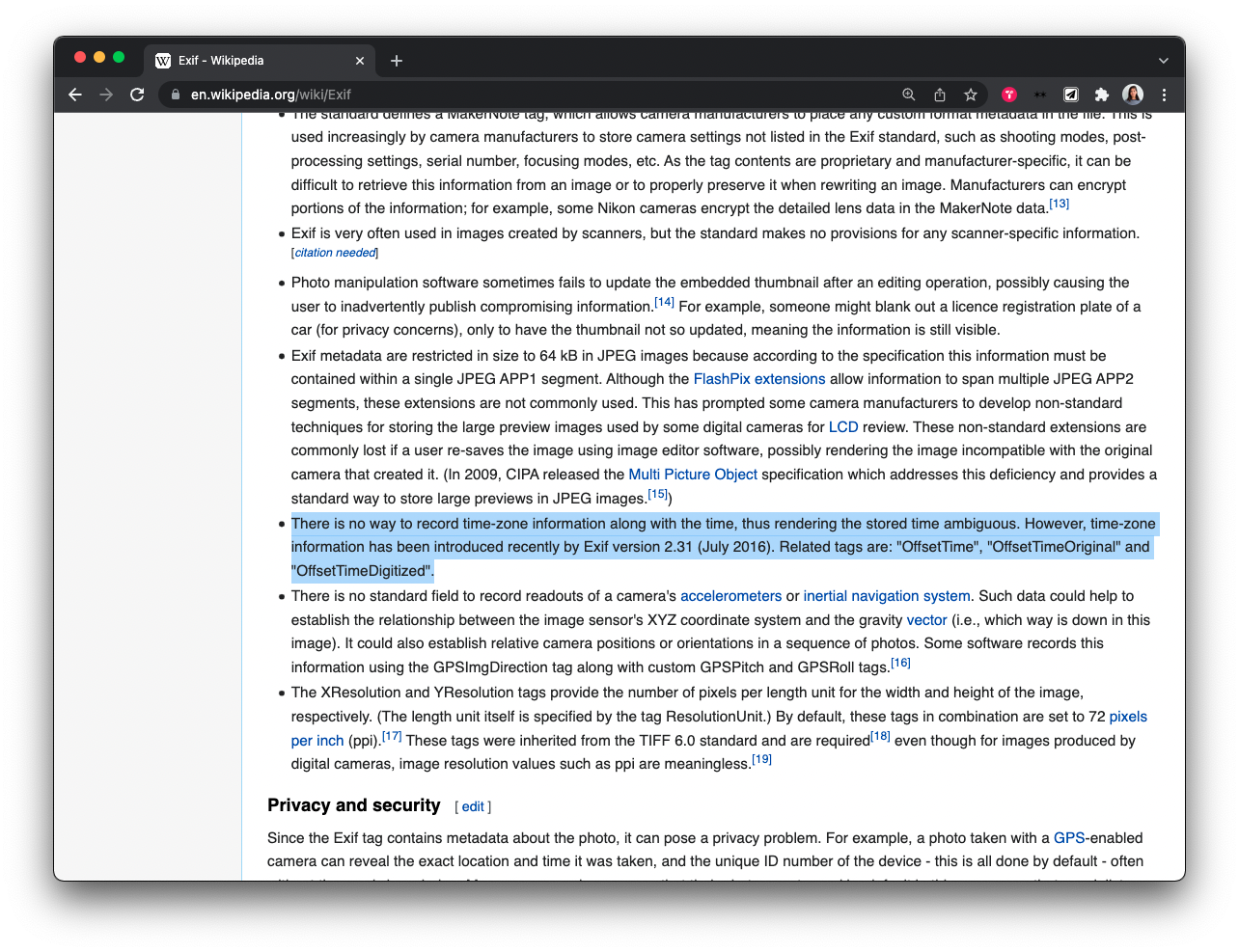

I think the concept is a poetic contribution to the genre of crowdsourced clocks we saw in examples like The Human Clock, especially if it worked as described above: a shifting image of the sky throughout the day, along with (charmingly imperfect) intrusions of cropped bits of things that are not the sky. Unfortunately, I quickly ran into problems that challenged this vision. I knew 1) that the same time of day will look different depending on the season and the latitude at which the photo was taken, but did not anticipate 2) that getting the actual time a photo was captured is incredibly difficult.

Flickr’s API provides a “date_taken,” but they also state: “The ‘taken’ date represents the time at which the photo has taken. This is extracted from EXIF date if available, else set to the time of upload.” OK, so to tell whether date_taken is the actual time at which the photo was taken, I check whether that photo has EXIF data. EXIF data is basically all the metadata that comes along with a photo, sometimes including GPS coordinates, the camera model it was taken on, and camera settings, as well as a number of date fields. This is where I really got into trouble…

In short, there is NO RELIABLE STANDARD for getting the local time at which a photo was taken. In addition, actually going through all the data to cross-check EXIF data added tons of computation time (it was going to take upwards of 17 hours to process before I killed it).
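To make the problem concrete, here is a minimal sketch of reading a capture time out of EXIF tags. It assumes the tags have already been parsed into a plain dict (the helper name `capture_time` is hypothetical). `DateTimeOriginal` is the standard EXIF capture field, written as `YYYY:MM:DD HH:MM:SS` with no timezone. `OffsetTimeOriginal` only exists in newer EXIF versions, and many photos omit it. Whether the clock on the camera was set correctly is something no field can tell you.

```python
from datetime import datetime

# EXIF writes dates with colons in the date part and no timezone.
EXIF_FORMAT = "%Y:%m:%d %H:%M:%S"


def capture_time(exif):
    """Return ('HH:MM', utc_offset_or_None), or None if no usable capture time.

    `exif` is assumed to be a dict mapping EXIF tag names to string values.
    """
    raw = exif.get("DateTimeOriginal")
    if not raw:
        return None  # nothing to distinguish capture time from upload time
    try:
        taken = datetime.strptime(raw.strip(), EXIF_FORMAT)
    except ValueError:
        return None  # placeholder or malformed values such as all zeros
    # The offset is optional; when missing, the time is whatever the
    # camera clock said, which may or may not be local time.
    return taken.strftime("%H:%M"), exif.get("OffsetTimeOriginal")
```

Even when this returns a value, the result is only as trustworthy as the camera clock, which is why a smoothly shifting sky is hard to guarantee from crowd-sourced photos.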

I put in quite a few hours learning about XML parsing and then trying to figure out the EXIF timezones, and I couldn’t find a way to consistently get the local capture time of an image. I found the best solution I could given the time constraint, but it disrupts that vision of a smoothly shifting sky. Perhaps a lesson is that you can’t have too specific a vision when it comes to crowd-sourced data, or else you need to be prepared to put a lot of time in!