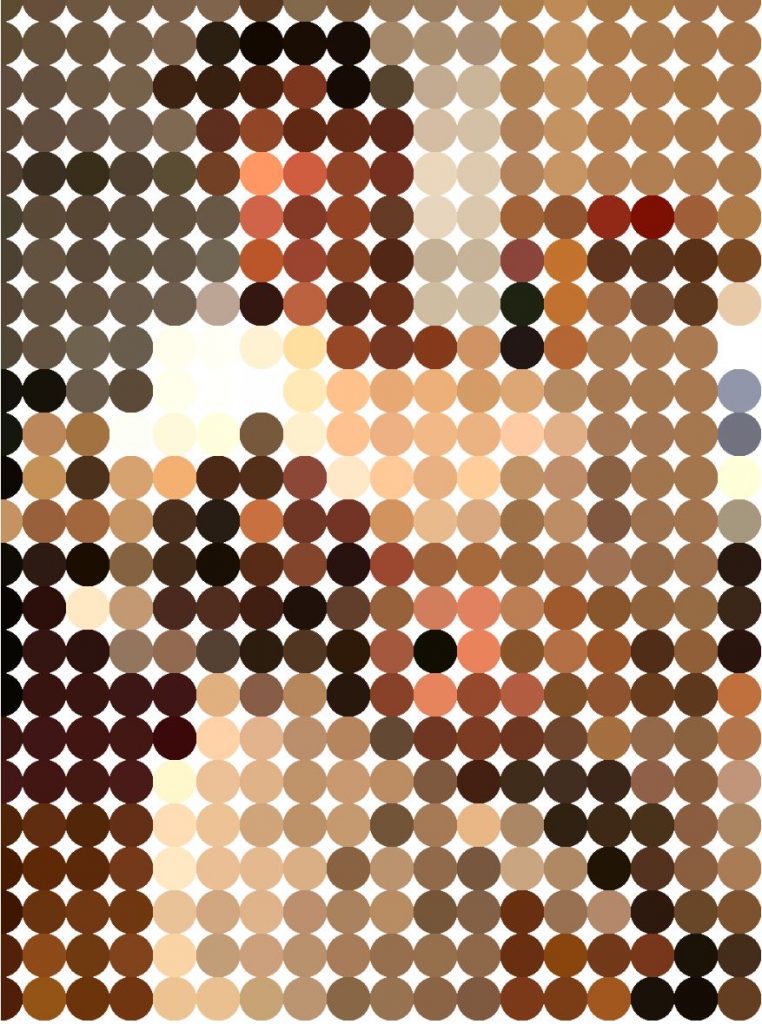

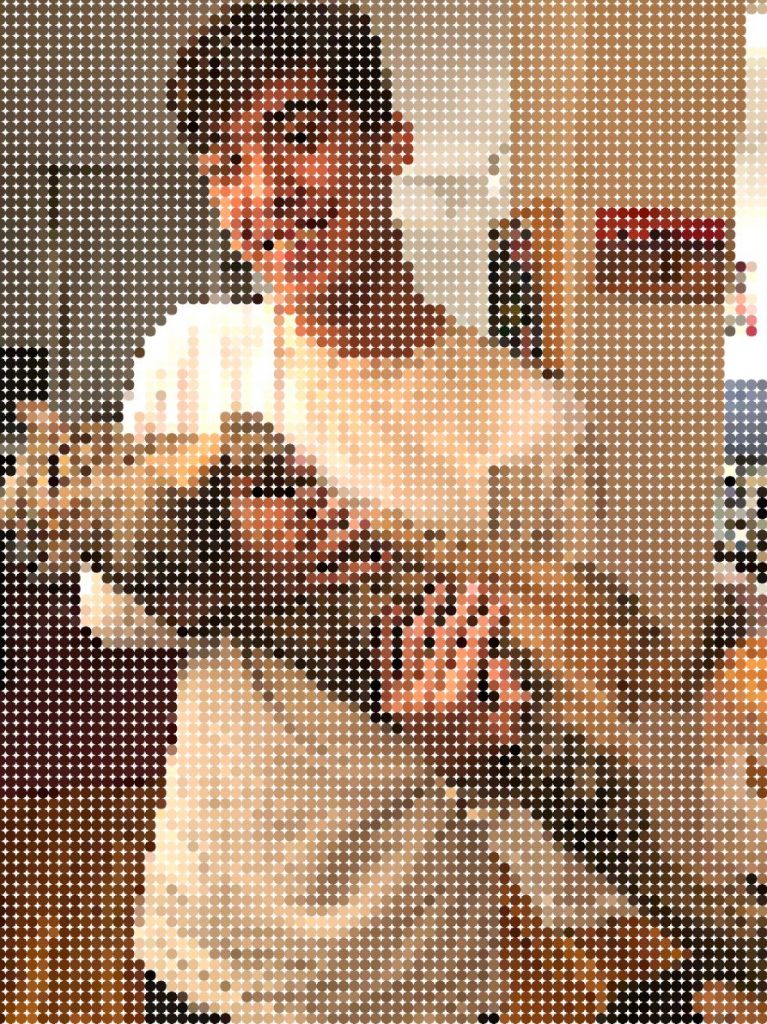

The two projects I chose were Mirror Number 2 by Daniel Rozin and MilkDrop by Geisswerks. Mirror Number 2 consists of projected screens or kiosks connected to video cameras and computers. Daniel Rozin has created a number of mirror-inspired pieces. When viewers stand in front of one of these pieces, their image appears on the screen after the computer has interpreted it. The display updates rapidly, producing smooth transitions that are tightly linked to the viewer's movements. In this piece, six different effects cycle for one minute each, for a total of six minutes. In all six effects, 1,000 moving pixels appear on the screen. These pixels move according to a few simple rules, such as moving in circles or moving randomly. As they move, they take on the colors of the viewer standing in front of the piece, producing a rough approximation of the viewer's image that resembles an impressionist painting. I chose this project because it offers an interesting way to transform the user's image; in our own project, we could alter the user's image in a similar manner.
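The particle behavior described above can be sketched roughly as follows. This is not Rozin's code: it is a minimal illustration assuming the camera frame is a grid of `(r, g, b)` tuples, and the names `Particle`, `make_particles`, `step`, and the `"circle"`/`"random"` modes are all hypothetical. Each particle moves by its rule and then adopts the color of the camera pixel beneath it.

```python
import math
import random
from dataclasses import dataclass

TURN_RATE = 0.1  # radians per frame for circling particles (assumed value)


@dataclass
class Particle:
    x: float
    y: float
    mode: str            # hypothetical rule names: "circle" or "random"
    angle: float = 0.0
    color: tuple = (0, 0, 0)


def make_particles(n, width, height, seed=0):
    """Scatter n particles over the frame, each with a randomly chosen rule."""
    rng = random.Random(seed)
    return [
        Particle(rng.uniform(0, width), rng.uniform(0, height),
                 rng.choice(["circle", "random"]), rng.uniform(0, 2 * math.pi))
        for _ in range(n)
    ]


def step(particles, frame, rng, speed=2.0):
    """Move every particle one frame, then sample the camera color under it.

    `frame` is assumed to be a list of rows of (r, g, b) tuples,
    e.g. a downsampled webcam image.
    """
    height, width = len(frame), len(frame[0])
    for p in particles:
        if p.mode == "circle":
            # Turning a little each frame traces a circle of radius speed / TURN_RATE.
            p.angle += TURN_RATE
            p.x += speed * math.cos(p.angle)
            p.y += speed * math.sin(p.angle)
        else:
            # Random walk: jitter in both directions.
            p.x += rng.uniform(-speed, speed)
            p.y += rng.uniform(-speed, speed)
        # Wrap around the edges so particles stay on screen.
        p.x %= width
        p.y %= height
        # Guard against float rounding landing exactly on the far edge.
        col = min(int(p.x), width - 1)
        row = min(int(p.y), height - 1)
        # Adopt the viewer's color at this spot.
        p.color = frame[row][col]
    return particles
```

Drawing each particle as a small dot in its sampled color, frame after frame, is what would build up the painterly approximation of the viewer; the rendering step is left out here.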

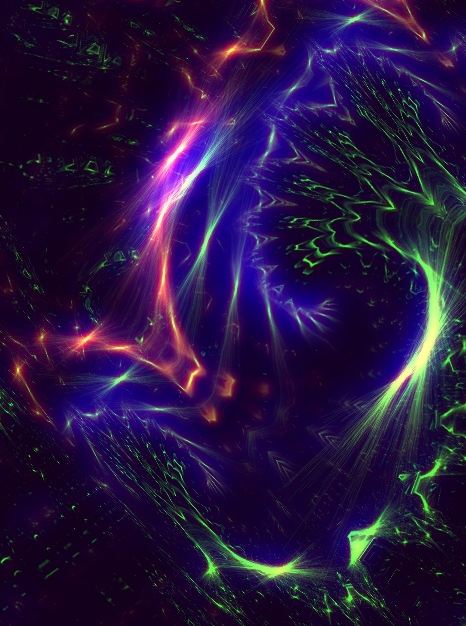

MilkDrop is a music visualizer first written in 2001 and later updated in 2007 to include pixel shaders: flexible programs written to run on modern GPUs that can produce spectacular imagery and special effects. MilkDrop's content consists of presets, each with its own look and feel. Each preset is built from a dozen or so short pieces of code, along with several dozen variables that can be tuned. Users can experiment with MilkDrop to create their own presets, even writing new code on-screen while the preset runs and seeing the effects of their changes immediately. This has spawned a large community of people who have authored many thousands of presets, creating new visuals and making them react to the music. I chose this project because it offers an interesting way to visualize music; when creating a music player, it suggests one way to think about what is displayed while the music plays.
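To illustrate the idea of a preset as "a few short pieces of code plus tunable variables", here is a hypothetical sketch. It does not use MilkDrop's actual preset format or variable names; `Preset`, `per_frame`, and every field are assumptions, and the bass level is assumed to be supplied by some separate audio-analysis step. The variables hold the preset's "look", and the per-frame function maps time and audio level to visual parameters.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Preset:
    # Tunable variables (hypothetical names and defaults).
    base_zoom: float = 1.0
    zoom_per_bass: float = 0.05
    spin_speed: float = 0.5
    decay: float = 0.98


def per_frame(preset, time_s, bass):
    """A short piece of per-frame code: map time and bass level to visual parameters.

    `bass` is assumed to be a non-negative loudness value for the low frequencies.
    """
    zoom = preset.base_zoom + preset.zoom_per_bass * bass   # pulse with the beat
    rotation = 0.1 * math.sin(preset.spin_speed * time_s)   # slow back-and-forth spin
    return {"zoom": zoom, "rotation": rotation, "decay": preset.decay}


# "Tuning" a preset while it runs amounts to swapping in new variable values.
punchy = replace(Preset(), zoom_per_bass=0.2)
```

Keeping the variables separate from the per-frame code mirrors the split the paragraph describes: a user can change the numbers without touching the logic, or rewrite the logic while keeping the numbers.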

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2020/08/stop-banner.png)