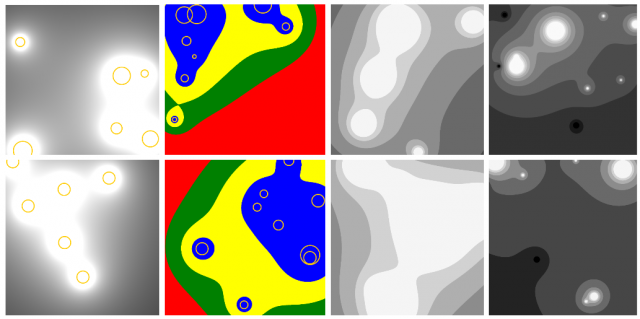

Compton’s “10,000 Bowls of Oatmeal Problem” becomes important whenever many instances of the same kind of item are generated and rendered together. As Compton explains in the article, every piece of oatmeal in a bowl differs from every other piece in size, weight, location, orientation, and so on. In other words, when oatmeal is poured into a bowl straight out of the package (randomness), no two pieces come out exactly identical. Because no pieces were intentionally molded to be exactly the same (not random), every piece is independent and different, mathematically speaking.

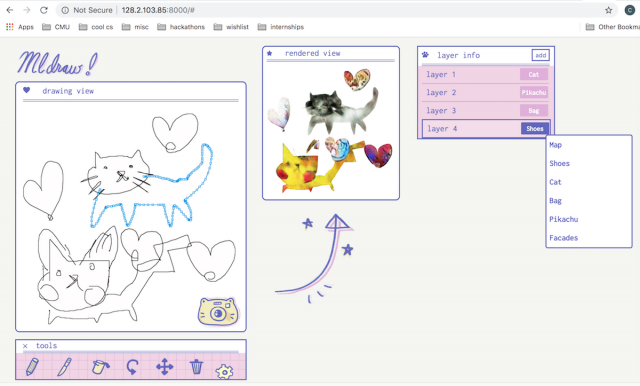

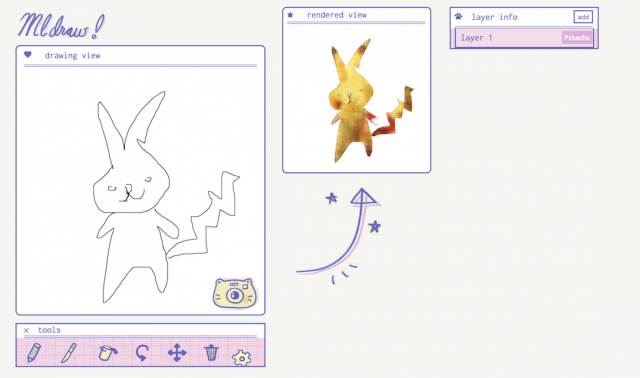

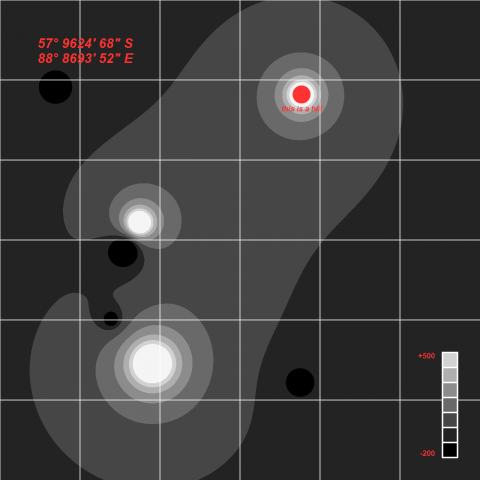

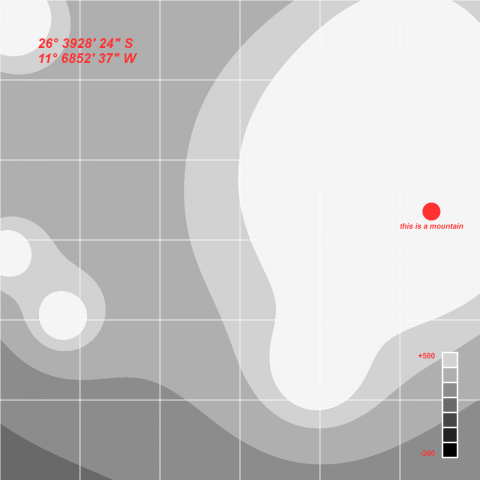

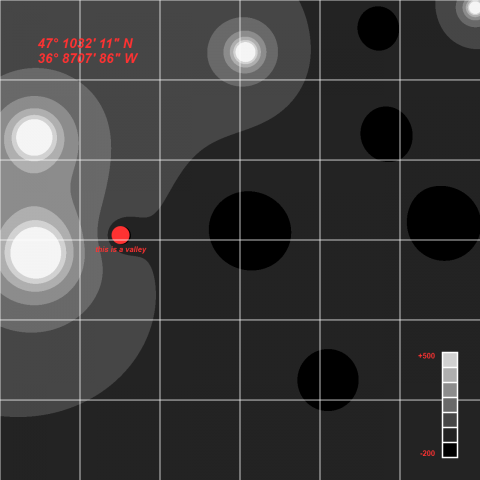

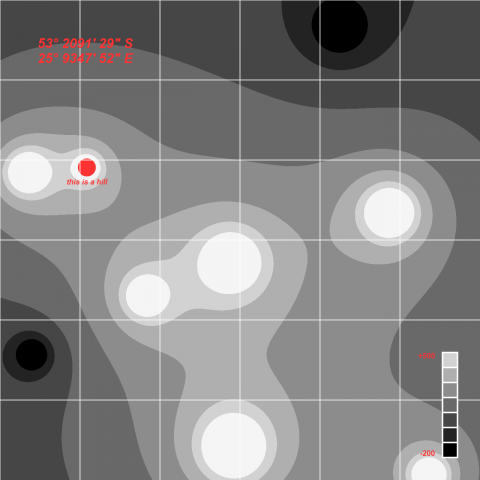

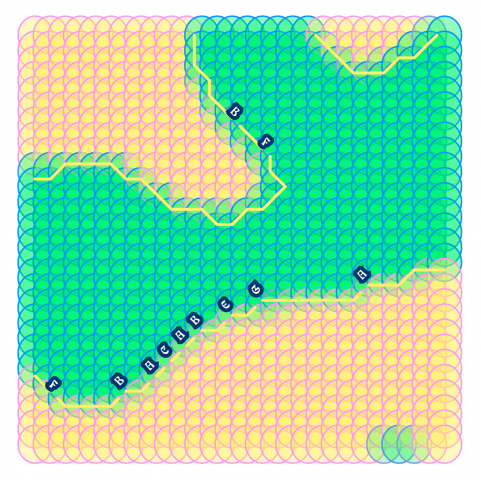

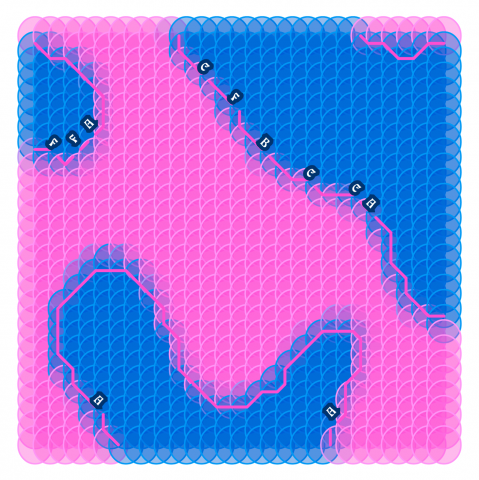

This would be a problem, for example, if you are randomly generating mountains as the background of a game you are developing and want players to feel that they are playing on a green mountain map. If the generated mountains vary in color, hue, saturation, or shape so much that they stray too far from the general image of a mountain, the random generation does not fulfill that intention. One potential solution is to constrain certain features before generating. Going back to the mountain example, we could limit the color spectrum to greens and the shapes to roughly triangular ones. With those constraints in place, the generator could produce what we intended.
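As a rough illustration of constraining features before generating, here is a minimal Python sketch. Everything in it is an assumption made for the example: the function name `generate_mountain`, the hue range treated as "green", and the size and peak-offset limits are hypothetical values, not something taken from Compton's article or from any particular game engine.

```python
import colorsys
import random

# Hypothetical constraint values, chosen only for illustration.
GREEN_HUE_RANGE = (0.25, 0.42)  # slice of the HSV hue wheel that reads as green
PEAK_OFFSET_LIMIT = 0.2         # max peak drift from center, as a fraction of base width


def generate_mountain(rng):
    """Return one random mountain whose color and shape stay within the constraints."""
    # Color: random, but only inside the green part of the spectrum.
    hue = rng.uniform(*GREEN_HUE_RANGE)
    saturation = rng.uniform(0.4, 0.8)
    value = rng.uniform(0.3, 0.7)

    # Shape: a triangle whose peak may lean left or right, but only slightly.
    base_width = rng.uniform(80.0, 200.0)
    height = rng.uniform(60.0, 180.0)
    peak_x = rng.uniform(-PEAK_OFFSET_LIMIT, PEAK_OFFSET_LIMIT) * base_width
    half = base_width / 2
    outline = [(-half, 0.0), (peak_x, height), (half, 0.0)]

    return {
        "hsv": (hue, saturation, value),
        "rgb": colorsys.hsv_to_rgb(hue, saturation, value),
        "outline": outline,
    }


rng = random.Random(42)  # seeded so the same mountains come back on every run
mountains = [generate_mountain(rng) for _ in range(5)]
```

Each mountain still differs from the others in exact hue, size, and lean, but none of them can come out purple or flat, because the ranges are fixed before any random number is drawn.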

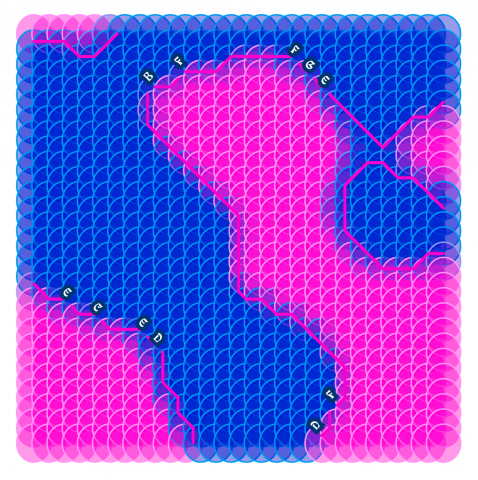

On the other hand, the randomness and uniqueness of random generation are beneficial when you want to create a crowd of people, for instance. A crowd in a film, animation, or game often isn’t what the developers want the audience to focus on. It is there to create the impression that many people or characters are present in the scene. Just as it is uncommon in real life to see two or more people at a crowded cafe who look identical down to their outfits, hairstyles, and body shapes, it would look odd if the crowd in your scene were made up of copies of the same few characters.
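One way to picture this is a small Python sketch that builds a crowd in which no two members share the same combination of outfit, hairstyle, and body shape. The attribute lists and the `generate_crowd` function are hypothetical placeholders for the example; a real project would draw from its own character assets.

```python
import itertools
import random

# Hypothetical attribute pools; real projects would use their own assets.
OUTFITS = ["hoodie", "suit", "dress", "t-shirt"]
HAIRSTYLES = ["short", "long", "curly", "bald"]
BODY_SHAPES = ["slim", "average", "broad"]


def generate_crowd(size, rng):
    """Return `size` crowd members, each a distinct (outfit, hairstyle, body shape) combination."""
    combinations = list(itertools.product(OUTFITS, HAIRSTYLES, BODY_SHAPES))
    if size > len(combinations):
        raise ValueError("not enough attribute combinations for a crowd this large")
    # Sampling without replacement guarantees no two members look identical.
    return rng.sample(combinations, size)


crowd = generate_crowd(20, random.Random(1))
```

With these pools there are 4 × 4 × 3 = 48 possible looks, so a crowd of 20 can be filled without repeating one. A larger crowd would need more attributes or more values per attribute.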