I want to make a drawing machine. What interests me about machine drawing is the rhythm of mark-making, rather than accuracy or depiction. I think what’s beautiful about mechanical drawing is the pure abstraction of endless uniform marks made in a pattern, simple or complex, that is evidence of the same motion repeated over and over again.

I feel what’s most beautiful about all art is the presence of the indexical mark: the grain of a brush stroke, the edge and slight vibrations in a line of ink that prove it was drawn by a human hand, or the fingerprints in a clay sculpture. I make the case that the difference between artistic media is defined by indexical marks. Do two works have different indexical marks? Then they are different forms of art entirely, showing us different aspects of compositional potential.

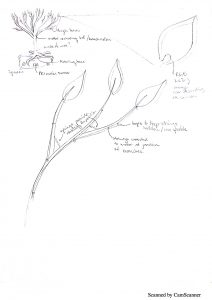

So I want to invent new indexical marks, ones that the human hand is not capable of producing. I want to see patterns fall out of a mechanical gesture that I built, but didn’t anticipate all the behaviors of, and to capture a map of these patterns on paper.

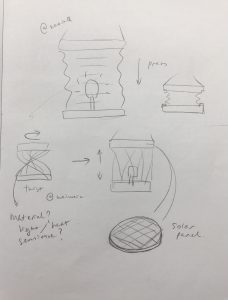

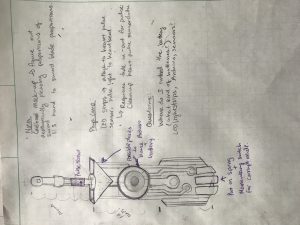

I don’t care if the machine can make a representational image; rather, I want to make a series of nodes and attachments that each make unique patterns, can each be held by mechanical arms over a drawing surface, can each hold a variety of drawing tools, and can be programmed into “dancing” together.

Hardware

- 5 V stepper motors

- 12 V stepper motors

- 12 V DC motors

- Sliding potentiometers; light and sound sensors (I want the frequencies of the mark-making mechanisms to be adjustable both by controlled factors and by factors influenced by the environment.)

- Controller frame

- Cardboard for prototyping the structure of the machine

- Acrylic to be laser cut for the final structure
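As a rough sketch of how a slider or sensor reading could set the frequency of a mark-making mechanism, the reading can be scaled linearly into a frequency range, and a controlled value can be blended with an environmental one. Everything named here is an assumption for illustration: the 10-bit reading range (0 to 1023), the frequency limits, the blend weight, and the function names are hypothetical and would need tuning against the real hardware.

```python
def reading_to_frequency(reading, adc_max=1023, f_min=0.5, f_max=8.0):
    """Map a raw sensor reading to a mark-making frequency in Hz.

    Assumed values: a 10-bit reading (0..adc_max) and a hypothetical
    frequency range of f_min..f_max strokes per second.
    """
    # Clamp so a noisy or out-of-range reading cannot push the motor
    # outside the chosen frequency range.
    reading = max(0, min(adc_max, reading))
    return f_min + (f_max - f_min) * reading / adc_max


def blend(control_hz, ambient_hz, ambient_weight=0.3):
    """Mix a slider-controlled frequency with an environment-driven one.

    ambient_weight is an assumed tuning value: 0 ignores the environment,
    1 ignores the slider.
    """
    return (1 - ambient_weight) * control_hz + ambient_weight * ambient_hz


if __name__ == "__main__":
    slider_hz = reading_to_frequency(512)  # slider near its midpoint
    light_hz = reading_to_frequency(900)   # bright room
    print(blend(slider_hz, light_hz))
```

A linear map is the simplest choice; a logarithmic map might feel more natural for sound levels, but that is a decision to make once the sensors are on the bench.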

Software

- Built from the ground up. The most complex programming will be that of the arms, which position the drawing attachments over different places on the drawing surface. I may use a coordinate positioning library for a configuration of motors that pushes and pulls a node into various positions with crossing “X and Y” arms.
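To make the positioning idea concrete, here is a minimal sketch of turning a target position on the drawing surface into step counts for two stepper-driven arms. It assumes the crossing arms behave like independent X and Y axes and that each axis has a fixed steps-per-millimetre calibration; the `Axis` class, `plan_move` function, and the value of 5 steps per mm are all hypothetical, and a real coordinate positioning library would likely handle this differently.

```python
from dataclasses import dataclass


@dataclass
class Axis:
    """One stepper-driven arm, tracked in whole motor steps."""
    steps_per_mm: float       # assumed calibration value
    position_steps: int = 0   # current position, in steps from the origin

    def steps_to(self, target_mm: float) -> int:
        """Return the signed step count needed to reach target_mm,
        and record the new position."""
        target_steps = round(target_mm * self.steps_per_mm)
        delta = target_steps - self.position_steps
        self.position_steps = target_steps
        return delta


def plan_move(x_axis: Axis, y_axis: Axis, x_mm: float, y_mm: float):
    """Compute the (x, y) step deltas that bring the node to (x_mm, y_mm)."""
    return x_axis.steps_to(x_mm), y_axis.steps_to(y_mm)


if __name__ == "__main__":
    x_arm = Axis(steps_per_mm=5.0)
    y_arm = Axis(steps_per_mm=5.0)
    # Move the node to a few places on the surface; a negative count
    # means the motor steps in the reverse direction.
    for target in [(10, 20), (4, 20), (0, 0)]:
        print(target, plan_move(x_arm, y_arm, *target))
```

Tracking position in whole steps rather than millimetres avoids accumulating rounding error over many moves, which matters for a machine meant to repeat the same gesture over and over.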

Timeline

- Weeks 1 and 2

Make several attachable drawing-tool mechanisms, each of which holds a drawing tool differently and moves it in a different pattern.

- Week 3

Build a structure that holds the attachable nodes over a drawing surface, with arms that can move the nodes across different areas of the surface.

- Week 4

Build the control board and sensor inputs that can be used to change the patterns of the arms and the nodes.

- Week 5

Program built-in patterns whose parameters the controls can influence.

- Week 6

Make some more nodes, and make some drawings!

Abstract
