```javascript
/* Chelsea Fan
   Section 1B
   chelseaf@andrew.cmu.edu
   Project-10
*/

// important variables
var myWind;
var myOcean;
var myBirds;
var mySand;
var currentImage;

// cloud position and speed (pixels per frame)
var x = 0;
var cloudmove = 1;

// offsets at which the same bird shape is repeated; the positions were
// chosen by hand for aesthetic reasons rather than randomized
var birdOffsets = [
    [0, 0], [-110, 0], [-100, 80], [-30, 40], [70, 50],
    [100, 100], [150, 25], [200, 75], [250, 13]
];

function preload() {
    // load ocean image
    var myImage = "https://i.imgur.com/cvlqecN.png";
    currentImage = loadImage(myImage);

    // load sounds and turn each one down
    // sound of wind
    myWind = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/10/winds.wav");
    myWind.setVolume(0.1);

    // sound of ocean
    myOcean = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/10/oceans.wav");
    myOcean.setVolume(0.1);

    // sound of birds
    myBirds = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/10/birds.wav");
    myBirds.setVolume(0.1);

    // sound of sand
    mySand = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/10/sand.wav");
    mySand.setVolume(0.1);
}

function soundSetup() { // setup for audio generation
}

function setup() {
    createCanvas(480, 480);
}

function sandDraw() {
    noStroke();

    // sand background color (bottom quarter of the canvas)
    fill(255, 204, 153);
    rect(0, height - height / 4, width, height / 4);

    // sand movement: 1000 grains redrawn at random positions every frame
    fill(255, 191, 128);
    for (var i = 0; i < 1000; i++) {
        var sandX = random(0, width);
        var sandY = random(height - height / 4, height);
        ellipse(sandX, sandY, 5, 5);
    }
}

function skyDraw() {
    noStroke();

    // sky color (top half of the canvas)
    fill(179, 236, 255);
    rect(0, 0, width, height / 2);

    // cloud color
    fill(255);

    // move the clouds right and send them back to the start
    // once they pass the right edge
    x = x + cloudmove;
    if (x >= width + 100) {
        x = 0;
    }

    // draw five clouds, each made of five ellipses and spaced 200 px apart
    for (var i = 0; i <= 4; i++) {
        push();
        translate(-200 * i, 0);
        ellipse(x + 10, height / 6, 50, 50);
        ellipse(x + 50, height / 6 + 5, 50, 50);
        ellipse(x + 90, height / 6, 50, 40);
        ellipse(x + 30, height / 6 - 20, 40, 40);
        ellipse(x + 70, height / 6 - 20, 40, 35);
        pop();
    }
}

function birdDraw() {
    noFill();
    stroke(0);
    strokeWeight(3);

    // each bird is the same pair of curves (its two wings),
    // shifted to one of the hand-picked offsets
    for (var i = 0; i < birdOffsets.length; i++) {
        push();
        translate(birdOffsets[i][0], birdOffsets[i][1]);
        curve(100, 150, 120, 120, 140, 120, 160, 140);
        curve(120, 140, 140, 120, 160, 120, 180, 150);
        pop();
    }
}

function draw() {
    // draw sand
    sandDraw();
    // draw ocean
    image(currentImage, 0, height / 2);
    // draw sky
    skyDraw();
    // draw birds
    birdDraw();
    // sounds are handled in mousePressed(), which p5.js calls once per click
}

function mousePressed() {
    if (mouseY >= 0 && mouseY <= height / 4) {
        // clouds section: sound of wind
        myWind.play();
    } else if (mouseY > height / 4 && mouseY <= height / 2) {
        // birds section: sound of birds
        myBirds.play();
    } else if (mouseY > height / 2 && mouseY <= 3 * height / 4) {
        // ocean section: sound of waves
        myOcean.play();
    } else if (mouseY > 3 * height / 4 && mouseY <= height) {
        // sand section: sound of sand
        mySand.play();
    }
}
```
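The cloud animation in `skyDraw()` advances `x` by `cloudmove` pixels each frame and resets it to 0 once it reaches `width + 100`. The sketch below applies the same update rule in plain JavaScript (no p5.js needed) to count how many frames one pass takes. `stepCloud` and `cloudCycleFrames` are hypothetical helpers, not part of the original project, and the conversion to seconds assumes p5.js's default target of 60 frames per second.

```javascript
// Hypothetical helper mirroring the cloud update in skyDraw():
// move right by `speed`, reset to 0 once x reaches canvasWidth + 100.
function stepCloud(x, speed, canvasWidth) {
  x = x + speed;
  if (x >= canvasWidth + 100) {
    x = 0;
  }
  return x;
}

// Count the frames it takes for x to start at 0 and come back to 0.
// Assumes speed > 0, otherwise x never wraps.
function cloudCycleFrames(speed, canvasWidth) {
  let x = 0;
  let frames = 0;
  do {
    x = stepCloud(x, speed, canvasWidth);
    frames++;
  } while (x !== 0);
  return frames;
}

const frames = cloudCycleFrames(1, 480);
console.log(frames);                               // 580
console.log((frames / 60).toFixed(1) + " s at 60 fps"); // 9.7 s at 60 fps
```

With `cloudmove = 1` on the 480 px canvas, each pass of the clouds lasts a little under ten seconds; doubling `cloudmove` halves that.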

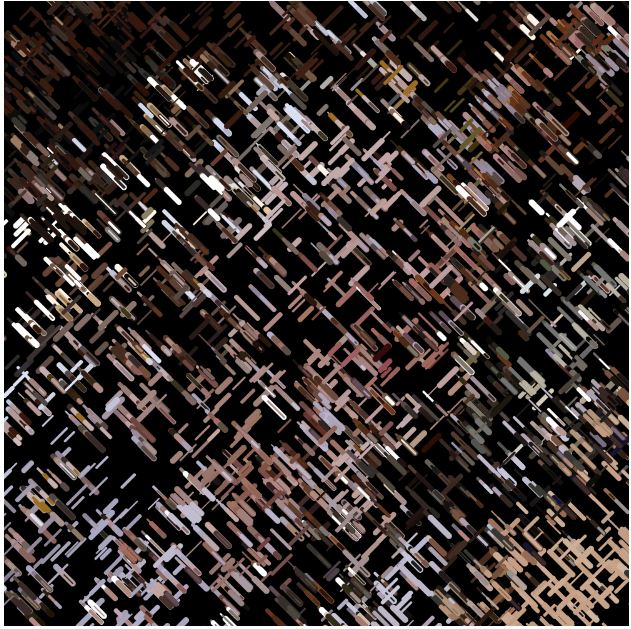

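My code has four different sounds: wind, birds, waves, and sand. Each one plays when you click the matching horizontal quarter of the canvas, from top to bottom: wind for the clouds, birds for the second quarter where the birds fly, waves for the ocean, and sand for the beach. For example, clicking the top quarter, where the clouds are, plays the wind sound.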
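The mapping from click position to sound can be written as a small pure function and checked without p5.js. This is a minimal sketch: `soundForClick` and its string labels are hypothetical names, not part of the original project, and the band boundaries copy the comparisons used in `mousePressed()`.

```javascript
// Hypothetical helper: returns which sound a click at height y should
// trigger, using the same quarter-canvas bands as mousePressed().
// Returns null for clicks above or below the canvas.
function soundForClick(y, canvasHeight) {
  if (y >= 0 && y <= canvasHeight / 4) return "wind";
  if (y > canvasHeight / 4 && y <= canvasHeight / 2) return "birds";
  if (y > canvasHeight / 2 && y <= (3 * canvasHeight) / 4) return "ocean";
  if (y > (3 * canvasHeight) / 4 && y <= canvasHeight) return "sand";
  return null;
}

// On the 480 x 480 canvas each band is 120 px tall.
console.log(soundForClick(50, 480));  // wind
console.log(soundForClick(200, 480)); // birds
console.log(soundForClick(300, 480)); // ocean
console.log(soundForClick(470, 480)); // sand
console.log(soundForClick(-5, 480));  // null
```

A click exactly on a boundary (for example y = 120) belongs to the band above it, because each upper limit uses `<=`.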

The idea of an ocean landscape with a different sound for each layer came to me quickly, but the project took a very long time because I struggled to get the sounds to work.

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2020/08/stop-banner.png)