Pazhutan Ateliers is a computational music education and production project by the duo M. Pazhutan and H. Haq Pazhutan. The course topics listed on its website include, but are not limited to, electronic/computational music, music appreciation, and sound art.

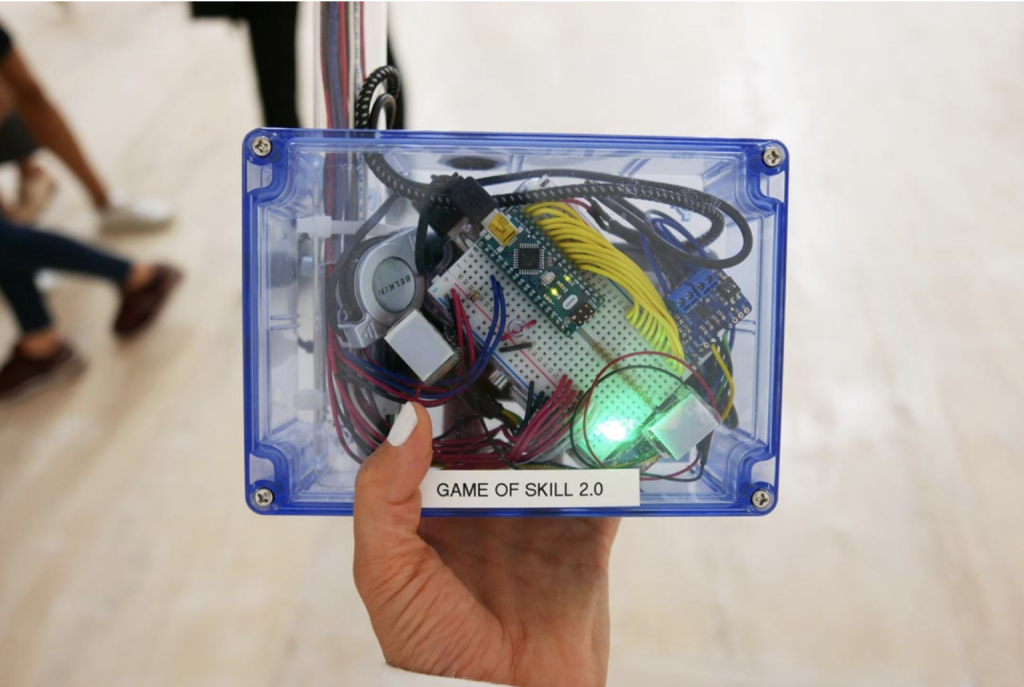

The project I looked at was "Cy-Ens," short for "cybernetic ensemble." To quote the project page, "Cy-Ens is our computer music project with the ambition of expanding the potentials of understanding the aesthetics of computational sound and appreciation of logic, math and art." The album consists of 15- to 30-minute tracks of ambient, computer-generated noise. The work was created with open-source audio and programming languages, along with various physical MIDI controllers such as knobs, sliders, and percussion pads. The idea behind the project is to let abstract sound compositions emerge from mathematical patterns.

![[OLD FALL 2020] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2020/wp-content/uploads/2021/09/stop-banner.png)