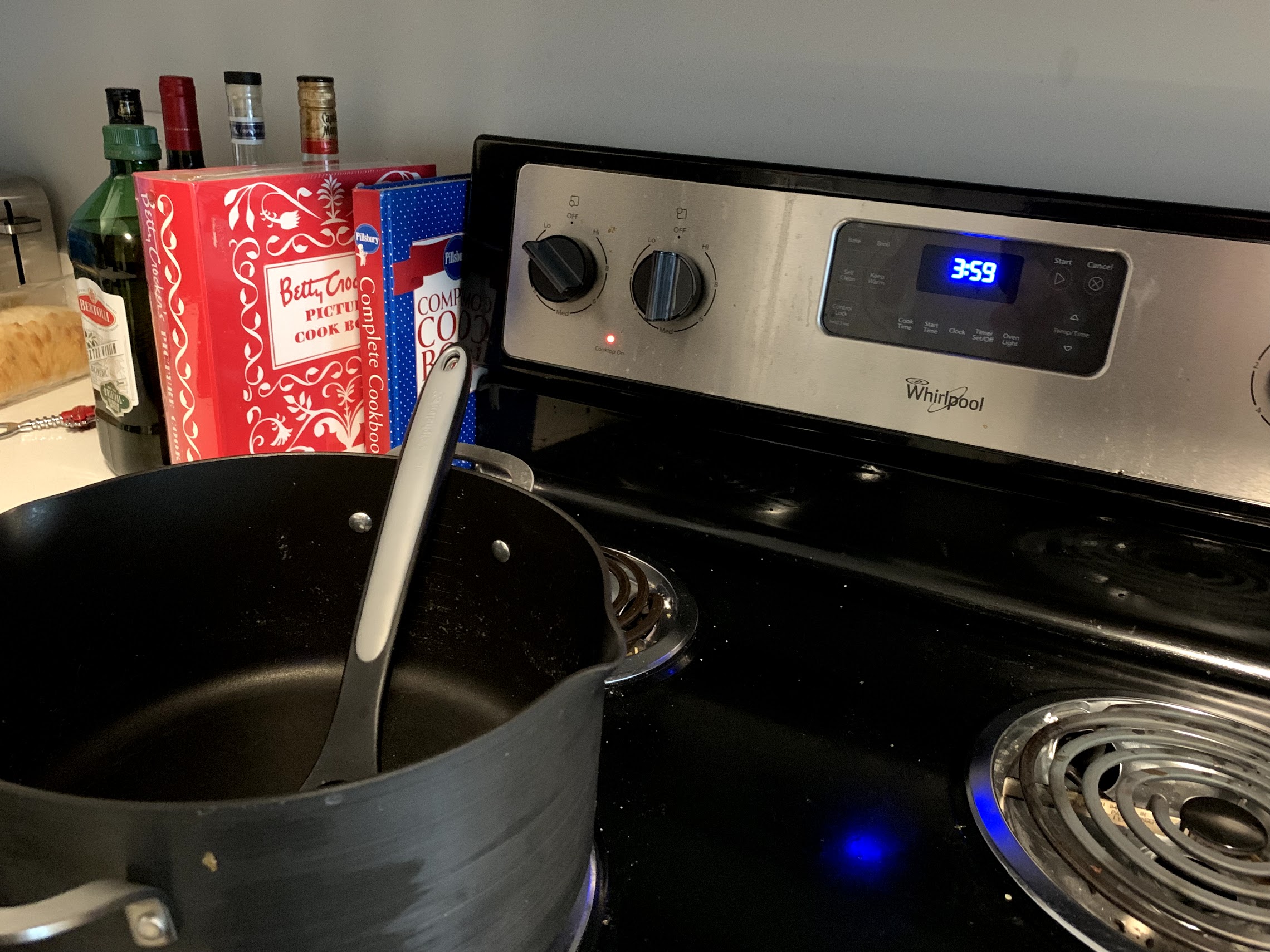

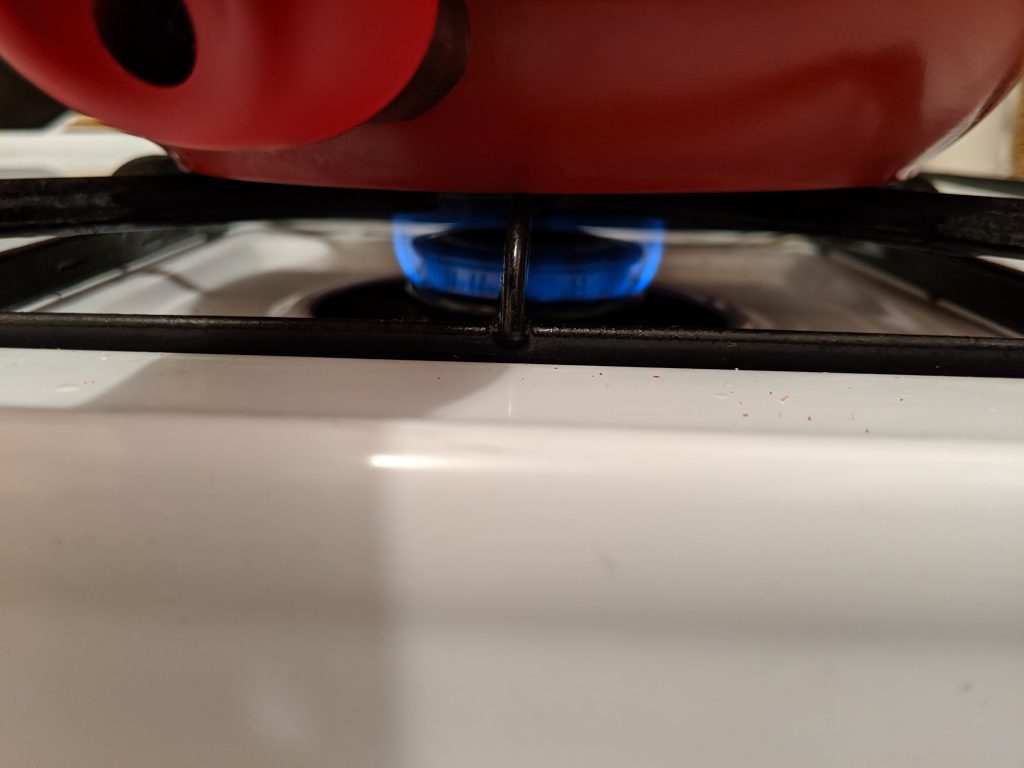

I have to admit, I kinda thought this particular solution was a little cliché at first, but just this week I accidentally left a burner on low and walked away for an hour. Luckily nothing was badly damaged, but I’ve gained a new respect for practical solutions to everyday problems.

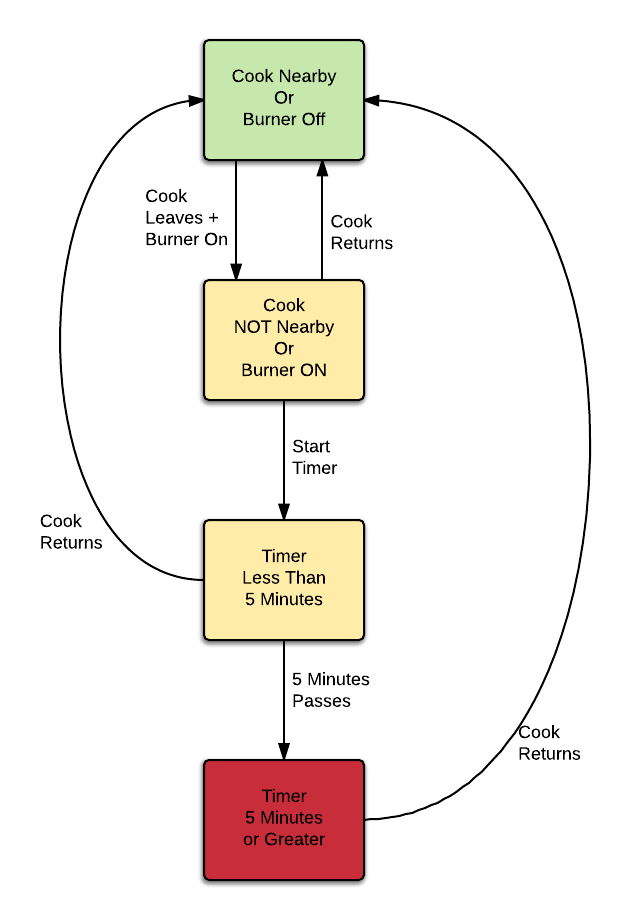

Here’s the plan:

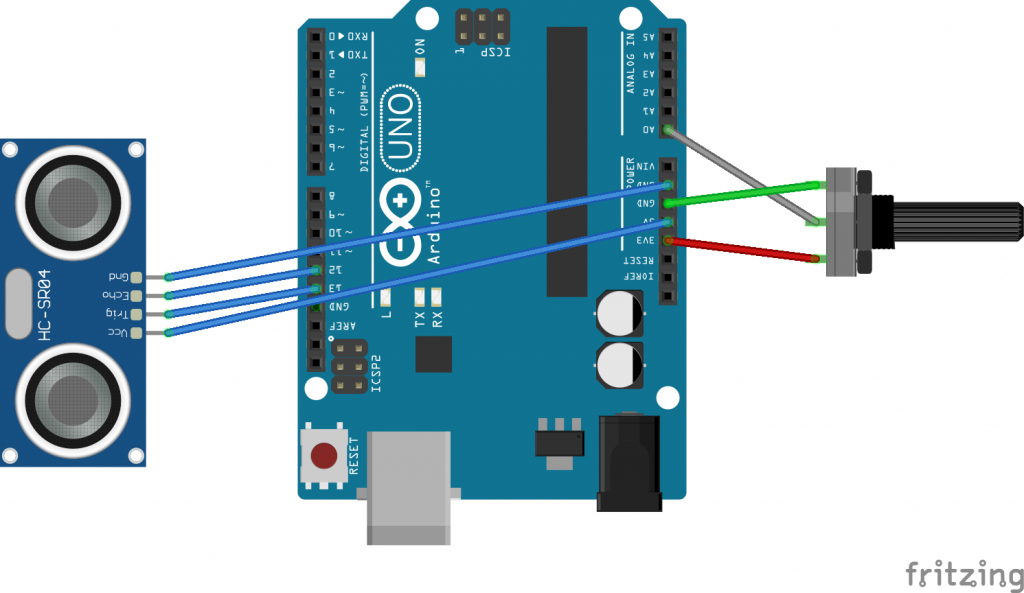

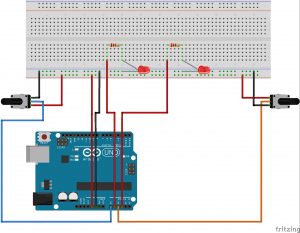

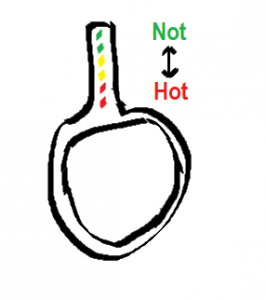

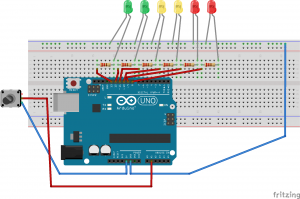

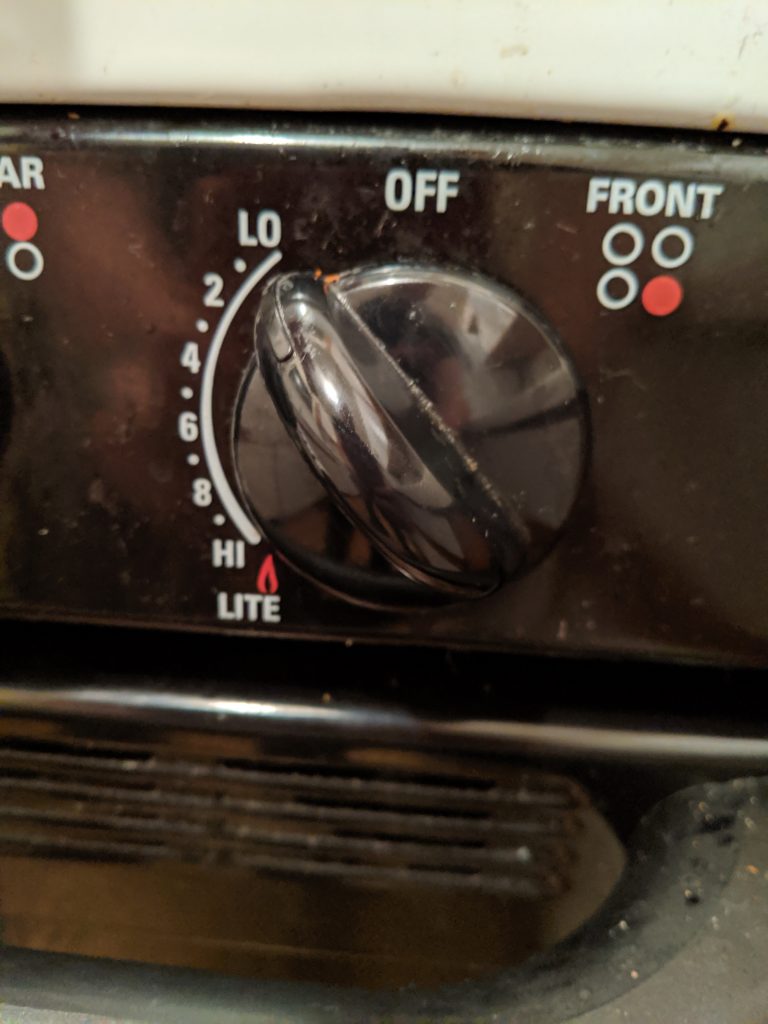

Attach a sensor to the knob to detect when it’s not in the off position. This could even be a simple switch; today we’re using a potentiometer in case we someday want to know how high the burner is set.
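Here’s a minimal sketch of how the potentiometer reading might be turned into an on/off decision. It assumes a 10-bit ADC like the Arduino Uno’s `analogRead()` (0 to 1023) and a knob wired so “off” reads near 0. The threshold values and function names are hypothetical, not taken from the project code; using two thresholds gives a little hysteresis so a reading that jitters near the boundary doesn’t flicker between on and off.

```cpp
#include <cassert>

// Assumes a 10-bit ADC (0-1023), as on an Arduino Uno, with the pot wired so
// the knob's "off" position reads near 0. Thresholds are hypothetical; calibrate.
const int kAdcMax = 1023;
const int kOnThreshold = 60;   // must rise above this to count as "on"
const int kOffThreshold = 40;  // must drop below this to count as "off"

// Returns the knob's new on/off state from a raw reading and the previous state.
// The gap between the two thresholds (hysteresis) stops jitter near "off"
// from toggling the state back and forth.
bool knobIsOn(int raw, bool wasOn) {
  assert(raw >= 0 && raw <= kAdcMax);
  if (wasOn) {
    return raw >= kOffThreshold;
  }
  return raw > kOnThreshold;
}

// Rough burner setting as a percentage of full knob travel (0-100).
// Uses long because int is 16 bits on an Uno and 1023 * 100 would overflow it.
int knobPercent(int raw) {
  return static_cast<int>(static_cast<long>(raw) * 100L / kAdcMax);
}
```

In `loop()` this might look like `burnerOn = knobIsOn(analogRead(A0), burnerOn);`, with `A0` standing in for whichever pin the pot is on. `knobPercent()` covers the “someday we want to know how high it’s set” case.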

Next we add a sensor to tell when the cook has walked away. We don’t want an indicator that’s lit whenever the burner is on, or we’ll learn to ignore it; the alert should only fire when the burner is on and nobody is around. Today I’m using the HC-SR04 ultrasonic distance sensor provided in class.
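The HC-SR04 reports distance as the width of a pulse on its ECHO pin, and that pulse covers the sound’s round trip. A rough conversion, assuming sound travels about 343 m/s (0.0343 cm/µs) at room temperature, might look like the sketch below. The function names are my own, and the presence range is passed in because it depends on where the sensor is mounted.

```cpp
#include <cassert>

// Converts an HC-SR04 echo pulse width (microseconds) to distance in cm.
// Assumes ~343 m/s speed of sound (about 20 °C), i.e. 0.0343 cm/us; the pulse
// covers the trip out and back, so halve it.
float echoToCm(unsigned long echoMicros) {
  return echoMicros * 0.0343f / 2.0f;
}

// Hypothetical presence test: is someone within rangeCm of the sensor?
// An echo of 0 means pulseIn() timed out (nothing in range), so nobody is there.
bool cookPresent(unsigned long echoMicros, float rangeCm) {
  assert(rangeCm > 0.0f);
  if (echoMicros == 0) return false;
  return echoToCm(echoMicros) <= rangeCm;
}
```

On the Arduino, the pulse width would come from the usual trigger sequence: hold TRIG high for about 10 µs, then call `pulseIn(echoPin, HIGH, 30000UL)`, which returns 0 if no echo arrives within the 30 ms timeout (`echoPin` is a placeholder name).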

From there it’s just a matter of choosing a timeframe and an indicator. For the demo we’ll use 5 seconds, but in real life something like 5 minutes is probably about right. For the indicator I’ve gone with a red LED, but a text message or an IFTTT notification on my watch would probably be more practical long term.
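Putting the pieces together, the timing logic can be sketched as a small state machine: off, burner on with the cook nearby, burner on with the cook away (timer running), and alarm. This is my own sketch of the idea, not necessarily the state diagram or the zipped code; the class and state names are hypothetical. Time is passed in as milliseconds so it can be fed from `millis()` on the board.

```cpp
#include <cassert>

// Hypothetical states: burner off, on and watched, on and unwatched, alarm.
enum class State { Off, Attended, Away, Alarm };

struct BurnerMonitor {
  unsigned long timeoutMs;      // 5000 for the demo, ~300000 (5 min) for real use
  State state = State::Off;
  unsigned long awaySince = 0;  // time the cook was first seen missing

  explicit BurnerMonitor(unsigned long timeout) : timeoutMs(timeout) {
    assert(timeout > 0);
  }

  // Advances the state machine; returns true while the indicator should be lit.
  bool update(bool burnerOn, bool cookPresent, unsigned long nowMs) {
    if (!burnerOn) {
      state = State::Off;                         // nothing to watch
    } else if (cookPresent) {
      state = State::Attended;                    // someone is there: clear any alarm
    } else if (state == State::Off || state == State::Attended) {
      state = State::Away;                        // cook just left: start the timer
      awaySince = nowMs;
    } else if (state == State::Away &&
               nowMs - awaySince >= timeoutMs) {  // unsigned subtraction survives millis() rollover
      state = State::Alarm;
    }
    return state == State::Alarm;                 // Alarm persists until someone returns or the burner is off
  }
};
```

On the board, `loop()` could do something like `digitalWrite(ledPin, monitor.update(burnerOn, present, millis()) ? HIGH : LOW);`, with `ledPin` standing in for the red LED’s pin. Swapping the LED for a text message would only change what happens when `update()` returns true.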

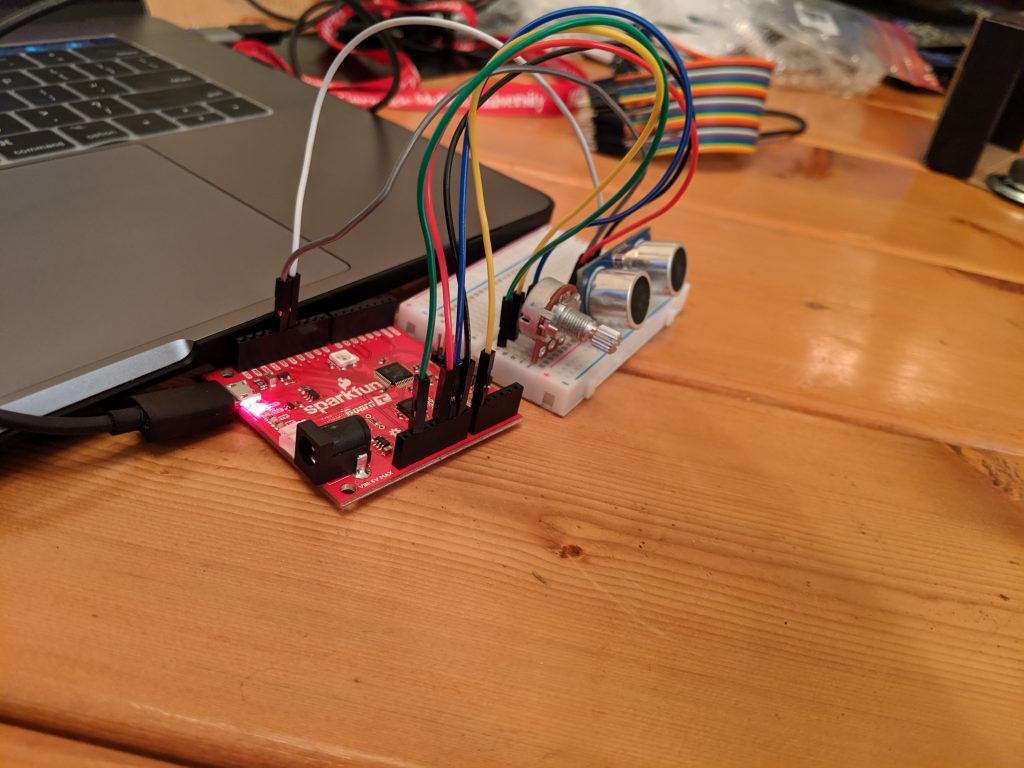

Below I’ve laid out the state diagram, the demo’s wiring, and a picture of it in action. There’s a link to the zipped code at the bottom.

Let me know what you think!