Abstract / concept statement

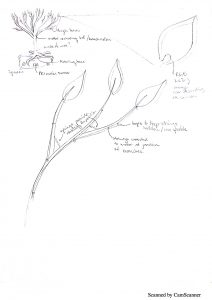

I want to make a mobile keyboard that reads aloud the words typed into it. If someone were to lose their ability to speak, this device could be an easy way for them to quickly communicate by typing what they want to say, since typing has become so natural to most people.

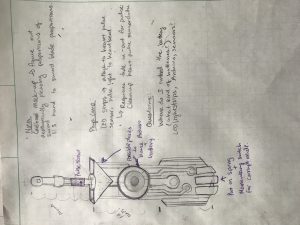

Hardware

The most crucial piece of hardware in my project is the keyboard. After doing some research, I believe that a keyboard with the older PS/2 port will let me read keystrokes on the Arduino. I will also need a speaker and a power supply, ideally a rechargeable battery, since it would be nice for the whole device to be wireless.

Software

The trickiest part of the software will probably be the text-to-speech. I have found a library on GitHub for Arduino text-to-speech, along with an online example of it in use, so hopefully I won’t run into any issues with that. I’ve also found a library for the keyboard.

https://github.com/jscrane/TTS (text-to-speech library)

https://github.com/PaulStoffregen/PS2Keyboard (PS/2 keyboard library)

Order of construction and testing

1. Get keyboard input.

2. Use the keyboard input to trigger text-to-speech.

3. Figure out a battery power supply so the device can be wireless.

4. Craft some sort of container that hides the wires, leaving just the keyboard and speaker exposed.
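
Steps 1 and 2 could be sketched roughly as below. This is an untested outline, not a finished design. The `SpeechBuffer` class, the pin numbers, and the 64-character limit are my own placeholders. I am also assuming that PS2Keyboard reports Enter as 13 and Backspace as 127, and that the TTS library has a default constructor and a `sayText` function that accepts a C string. The buffer logic is plain C++ so it can be tried on a desktop compiler, while the board-specific part only compiles under the Arduino toolchain.

```cpp
#include <stddef.h>

// Key codes as PS2Keyboard is assumed to report them
// (PS2_ENTER = 13, PS2_BACKSPACE = 127).
const char kEnter = 13;
const char kBackspace = 127;

// Hypothetical helper: holds one line of typed text in a fixed-size buffer.
class SpeechBuffer {
public:
  static const size_t kCapacity = 64;  // max characters per utterance (assumed)

  // Feed one key. Returns true when Enter is pressed on a non-empty line,
  // meaning the text is ready to be spoken.
  bool addKey(char c) {
    if (c == kEnter) {
      return length_ > 0;
    }
    if (c == kBackspace) {
      if (length_ > 0) text_[--length_] = '\0';
      return false;
    }
    // Keep printable ASCII only, and leave room for the terminator.
    if (c >= 32 && c < 127 && length_ < kCapacity - 1) {
      text_[length_++] = c;
      text_[length_] = '\0';
    }
    return false;
  }

  const char *text() const { return text_; }

  void clear() {
    length_ = 0;
    text_[0] = '\0';
  }

private:
  char text_[kCapacity] = {0};
  size_t length_ = 0;
};

#ifdef ARDUINO
#include <PS2Keyboard.h>
#include <TTS.h>

const int kDataPin = 8;  // PS/2 data line (assumed wiring)
const int kIrqPin = 3;   // PS/2 clock line, must be an interrupt-capable pin

PS2Keyboard keyboard;
TTS speech;              // assumed: default constructor uses the library's default output pin
SpeechBuffer line;

void setup() {
  keyboard.begin(kDataPin, kIrqPin);
}

void loop() {
  if (keyboard.available()) {
    // Step 1: read a key. Step 2: speak the line once Enter is pressed.
    if (line.addKey(keyboard.read())) {
      speech.sayText(line.text());  // assumed to accept a C string
      line.clear();
    }
  }
}
#endif
```

Speaking only when Enter is pressed, rather than after every key, gives the typist a chance to fix mistakes with Backspace before anything is read aloud.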

I’m not sure whether this idea is too simple for a final project, but I like it because of the accessibility it could provide someone in need. I may add to or modify the idea to better suit the assignment if needed.
