(note: I turned in a rushed version of this project with garbage documentation and someone in crit felt they needed to absolutely obliterate me, so I spent the next two days putting together something I feel dignified presenting. That’s why the recording was posted two days after the project deadline.)

I make music through live coding. Explaining what that entails usually takes a while, so I’ll keep it brief. I evaluate lines of code that represent patterns of values. Those patterns denote when to play audio samples on my hard drive, and with what effects. I can also make patterns that tell the system when to play audio from a specified input (for example, when to play the feed coming from my laptop mic). Bottom line, everything you hear is a consequence of either me making sound into a mic, or me typing keys bound to shortcuts that send lines of code to be evaluated, modifying the sound. I have many, many shortcut keys to send specific things, but it all amounts to sending text to an interpreter.
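
If it helps to see the idea of “patterns of values” concretely, here’s a toy Python sketch. To be clear, this is an illustration and not how tidalcycles actually works under the hood: the `events` function and the sample names `bd` and `sn` are made up for the example, and I’m assuming the simplest possible rule, where a space-separated pattern splits one cycle into equal steps and `~` means silence.

```python
from fractions import Fraction


def events(pattern, cycle=0):
    """Toy model: divide one cycle evenly among the steps of a pattern.

    Returns (onset, sample_name) pairs, with onsets measured in cycles.
    A "~" step is a rest and produces no event.
    """
    steps = pattern.split()
    if not steps:
        return []
    width = Fraction(1, len(steps))  # every step gets an equal slice of the cycle
    return [
        (cycle + i * width, name)
        for i, name in enumerate(steps)
        if name != "~"
    ]


# Hypothetical sample names: a kick on steps 1 and 4, a snare on step 3.
for onset, sample in events("bd ~ sn bd"):
    print(f"{float(onset):.2f}  {sample}")
```

In this toy version, evaluating a new pattern string just swaps out the list of events for the next cycle, which is roughly the feeling of sending a line to the interpreter mid-performance.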

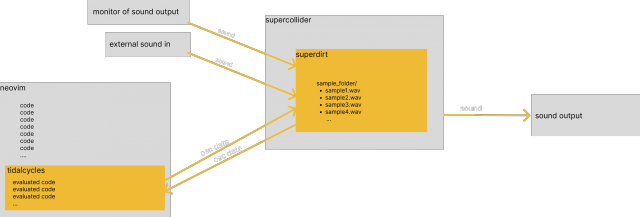

Here’s a rough diagram of my digital setup (above), and what each piece is:

neovim – text editor

tidalcycles – live coding language/environment

supercollider – audio programming language/environment

osc – open sound control

If you’re interested in the details, feel free to reach out to @c_robo_ on instagram or twitter (if it’s still up by the time you read this).

Anyway, this setup is partially inspired by two big issues I see with contemporary electronic music.

The first is the barrier to performance. Most analog tools meant for electronic music are well engineered and fully intended for live use, but by their nature come with a giant cost barrier. Anyone can pirate a digital audio workstation, but most digital music tools are made prioritizing composition. If they’re also made for performance, like ableton live, they usually have some special view suggesting the use of a hardware interface to control parameters more dynamically, which to me is just another cost barrier, one that implicitly rejects the computer keyboard as a sufficiently expressive interface for making music. This seems goofy to me, given how we’ve reduced every job related to interfacing with complex systems to interfacing with a computer keyboard. It’s come out as the best way to manage information, but somehow it’s not expressive enough for music performance?

The other problem is that electronic music performance simply isn’t that interesting to watch. Traditionally, it’s someone standing in front of a ton of stationary equipment occasionally touching things. In other words, there are no gestures clearly communicating interaction between performer and instrument. Live visuals have done quite a bit to address this issue and provide the audience something more visually relevant to the audio. Amazing stuff has been done with this approach and I don’t mean to diminish its positive impact on live electronic music, but it’s a solution that ignores gesture as a fundamental feature of performance.

400 words in, and we’re at the prompt: electronic music performance has trouble communicating the people in time who are actually making the music. What the hell do they see? What are their hands doing? When are they changing the sound and when are they stepping back? To me, live coding is an interesting solution to both problems in that there are no hardware cost barriers and “display of manual dexterity” (i.e. gesture) is literally part of its manifesto. I don’t abide by the manifesto as a whole, but it’s one of a few components I find most interesting given my perspective.

This is an unedited screen recording of a live coding performance I did, with a visualization of my keyboard overlaid on top (the rectangles popping up around the screen are keys I’m pressing). I spent the better part of two days wrangling dependency issues to get the input-overlay plugin for obs working on my machine. Such issues dissuaded me from experimenting with this earlier, but I’m incredibly happy that I did because it’s both visually stimulating and communicates most of the ways through which I interact with the system.

The sound itself is mostly an exploration of feedback and routing external sound with tidalcycles. Sound from the laptop goes through a powered amplifier in my basement, gets picked up by my laptop mic, and goes back into the system. You can hear me drumming on a desk or clicking my tongue into the mic at a few points (to really make sure a person is expressed in this time, ’cause I know y’all care about the prompt). I also have some feedback internally routed so I can re-process output from supercollider directly (there’s a screen with my audio routing configuration at 1:22).

Not gonna lie, it’s a little slow for the first minute but it gets more involved.

CONTENT WARNING: FLASHING LIGHTS and PIERCING NOISES

(best experienced with headphones)