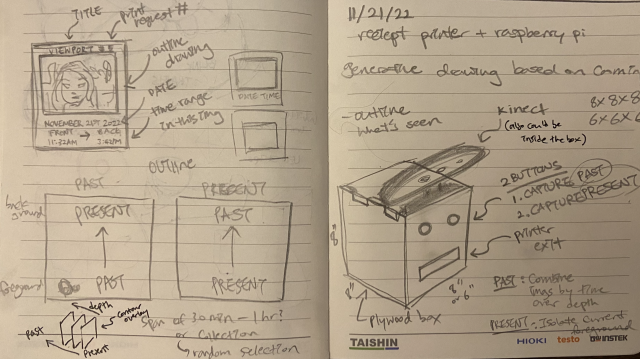

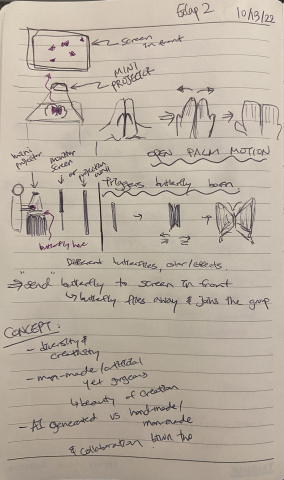

Snapshot is a Kinect-based project that displays a composite image of what was captured over time through depth. Each layer that builds up the image contains the outline of objects captured at a different depth range and at a different time. The layers are organized by time: the oldest capture sits in the background and the newest in the foreground.

Extending my first project, I wanted to further capture location over time for my final project. Depending on the time of day, the same location may be empty or extremely crowded. Sometimes something unexpected passes by, but it can only be seen at that specific moment. Wondering what the output would look like if each depth layer were a capture of the same location from a different point in time, I developed a program that saves the Kinect input image separately by depth range and compiles randomly selected images from the past, one per layer, in chronological order. Since I wanted the output to be viewed as a snapshot of a longer interval of time (similar to how a camera works, but with a longer interval being captured), I framed the compiled image like a Polaroid, with a timestamp and location written below the image. Once it is combined with a thermal printer, I see this project sitting in a corner of a high-traffic location, giving passersby the option to take a snapshot of the location over time and keep the printed photo as a souvenir.

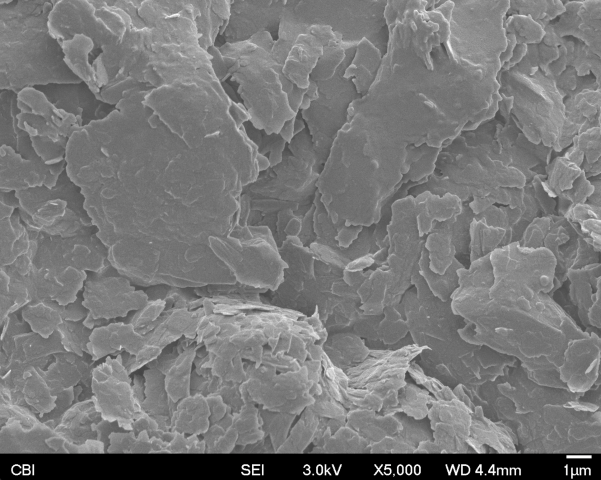

Overall, I like how the snapshots turned out. Using grayscale to indicate depth, together with the noise in the pixels, made the output image look like a painting that focuses on shape and silhouette rather than color. In the future, I would like to explore this system further and capture more spaces over longer periods of time. I’ll also look into migrating the program to a Raspberry Pi 3 so that it can run in a much smaller space without anyone having to supervise the machine while it’s working.

Example Snapshots:

Process

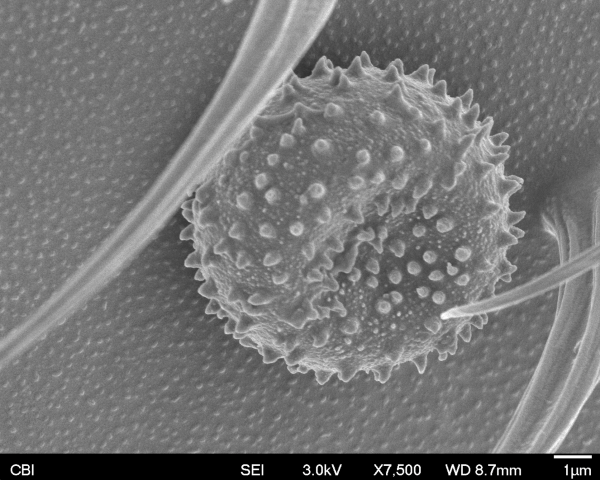

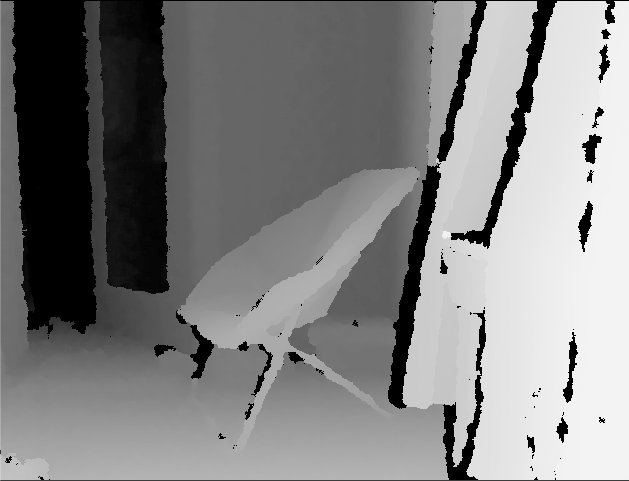

I first used the Kinect to get the depth data of the scene:

Then I created a point cloud with the depth information as z:
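As a rough illustration of this step (not the project's actual code), the sketch below turns a small depth map into `(x, y, z)` points with the depth reading as z. The function name `depth_to_points`, the millimetre units, and the `min_depth`/`max_depth` cutoffs are all assumptions made for the example.

```python
def depth_to_points(depth, min_depth=1, max_depth=4000):
    """Turn a 2D depth map (rows of depth readings) into (x, y, z) points.

    Readings outside [min_depth, max_depth] are treated as invalid and
    skipped. Both thresholds are illustrative assumptions.
    """
    points = []
    for y, row in enumerate(depth):
        for x, z in enumerate(row):
            if min_depth <= z <= max_depth:
                points.append((x, y, z))
    return points


# Tiny 2x3 depth map; 0 stands for "no reading" in this example.
depth = [
    [0, 1200, 1250],
    [3100, 0, 900],
]
points = depth_to_points(depth)
```

Each pixel keeps its image coordinates as x and y, so the cloud can still be projected back into a flat layer image later.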

I then divided the points into 6 buckets based on their depth range, coloring each point with an HSB value (also based on depth).
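A minimal sketch of the bucketing, assuming equal-width depth ranges between hypothetical `near` and `far` limits. The hue range (0 to 0.8) and all function names are assumptions for illustration. Python's `colorsys` module uses the name HSV for the same color model as HSB.

```python
import colorsys

NUM_BUCKETS = 6


def normalized_depth(z, near, far):
    """Scale z into 0..1 between near and far, clamping values outside."""
    return min(max((z - near) / (far - near), 0.0), 1.0)


def bucket_index(z, near, far, num_buckets=NUM_BUCKETS):
    """Map a depth value to a bucket index in 0..num_buckets-1."""
    t = normalized_depth(z, near, far)
    return min(int(t * num_buckets), num_buckets - 1)


def depth_color(z, near, far):
    """Pick a fully saturated color whose hue follows depth; returns RGB 0..255."""
    hue = normalized_depth(z, near, far) * 0.8  # assumed hue range
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


def split_into_buckets(points, near, far):
    """Group (x, y, z) points into NUM_BUCKETS lists by depth range."""
    buckets = [[] for _ in range(NUM_BUCKETS)]
    for p in points:
        buckets[bucket_index(p[2], near, far)].append(p)
    return buckets


points = [(0, 0, 600), (1, 0, 1200), (2, 0, 3400)]
buckets = split_into_buckets(points, near=500, far=3500)
```

With `near=500` and `far=3500`, each bucket covers a 500-unit slice of depth, so the three sample points land in the first, second, and last buckets.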

I also created a triangular mesh out of the points in each bucket.

I wrote a function that automatically saves the data per layer at a set time interval (e.g. every minute, every 10 seconds, etc.).
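One way such a timed save could be structured is sketched below. The `IntervalSaver` class, its `update` method, and the `save_fn` callback are hypothetical names, and the clock is injectable only so the example can be run without waiting.

```python
import time


class IntervalSaver:
    """Calls save_fn at most once per interval, checked on every frame."""

    def __init__(self, interval_seconds, save_fn, clock=time.monotonic):
        self.interval = interval_seconds
        self.save_fn = save_fn      # hypothetical callback: save_fn(timestamp, layers)
        self.clock = clock
        self.last_saved = None      # None until the first save happens

    def update(self, layers):
        """Call once per frame; returns True if the layers were saved."""
        now = self.clock()
        if self.last_saved is None or now - self.last_saved >= self.interval:
            self.save_fn(now, layers)
            self.last_saved = now
            return True
        return False


# Simulated frame times (seconds) instead of a real clock.
fake_times = iter([0.0, 4.0, 10.0, 12.0, 20.5])
saved = []
saver = IntervalSaver(10, lambda t, layers: saved.append(t),
                      clock=lambda: next(fake_times))
results = [saver.update(["layer"] * 6) for _ in range(5)]
```

Checking the elapsed time inside the draw loop, rather than sleeping, keeps the live view responsive between saves.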

These are different views of Kinect:

Upon pressing a button to capture, the program generates 6 random numbers and sorts them so that the captured layers are pulled in chronological order. It then combines the layers by taking the largest pixel value across the 6 layer images. Once the image is constructed, it is framed in a Polaroid template with the location and timeframe (also saved and retrieved along with the layers) below the image.
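The selection and compositing steps can be sketched as follows. This is an illustration under assumptions: captures are addressed by an integer index where a smaller index means an older capture, repeated picks are allowed, and images are plain nested lists of grayscale values. The names `pick_chronological` and `composite_max` are hypothetical.

```python
import random


def pick_chronological(num_captures, num_layers=6, rng=random):
    """Pick one capture index per layer, sorted so the oldest comes first."""
    return sorted(rng.randrange(num_captures) for _ in range(num_layers))


def composite_max(layers):
    """Combine same-sized grayscale images by taking the per-pixel maximum."""
    height, width = len(layers[0]), len(layers[0][0])
    return [
        [max(layer[y][x] for layer in layers) for x in range(width)]
        for y in range(height)
    ]


rng = random.Random(7)  # seeded only to make the example repeatable
picks = pick_chronological(num_captures=100, rng=rng)

# Three tiny 2x2 layers stand in for the six full-size layer images.
layers = [
    [[0, 50], [0, 0]],
    [[0, 0], [120, 0]],
    [[200, 10], [0, 0]],
]
image = composite_max(layers)
```

Because the picks are sorted, assigning them to the depth buckets from farthest to nearest puts the oldest capture in the background and the newest in the foreground.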