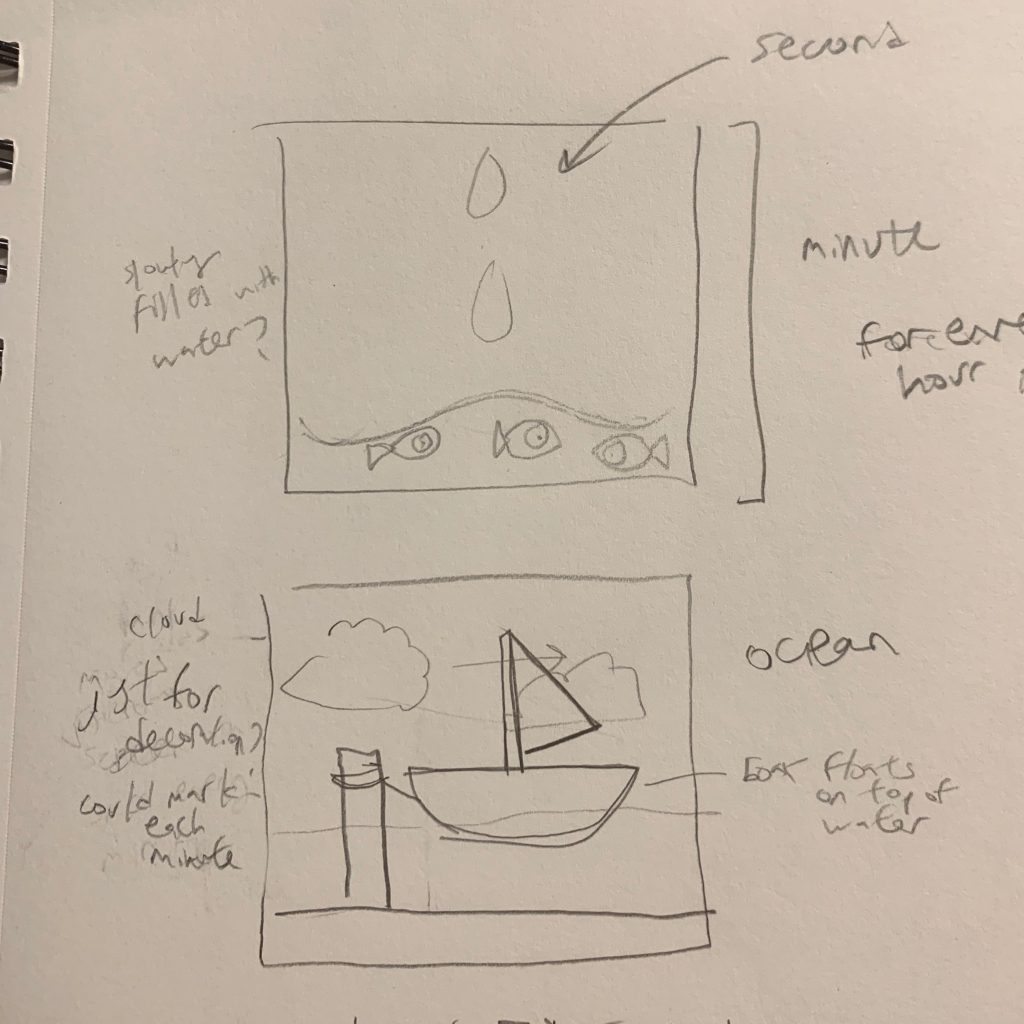

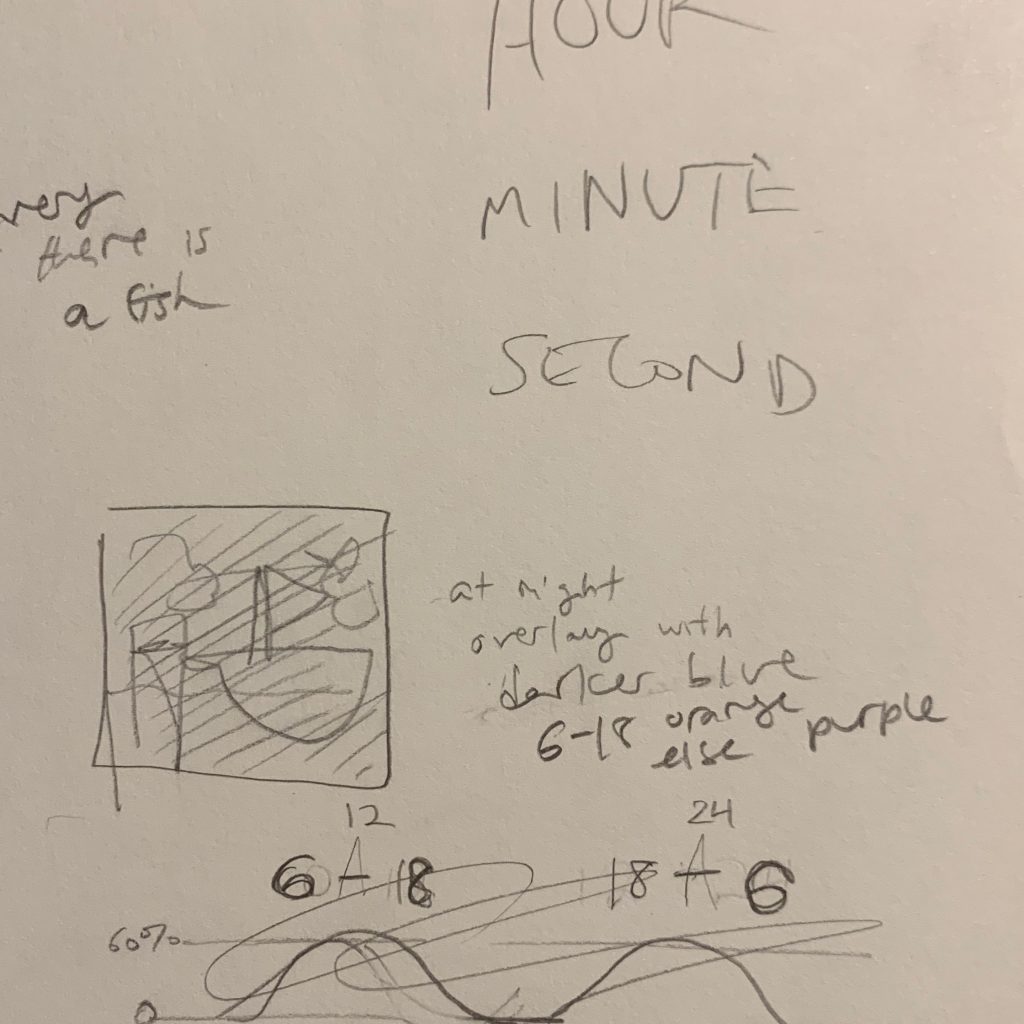

For my clock I made a scene at the ocean. The small cloud shows the progression of an hour (when it's near the right edge of the screen, the hour is about to change) and the big cloud shows the progression of a minute. The water level is driven by a sine function, so it completes one full cycle per minute. A transparent layer on top of the water (drawn with oceanColorLight) also expands and contracts sinusoidally. It shares the water's x,y origin (0, 2*height/3+yMin), so it follows the top of the blue water exactly.
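
The water motion can be sketched outside p5.js as plain functions. This is only a minimal illustration, not the sketch's own code: `waterOffset` and `waterRects` are hypothetical names, and the wave is assumed to start at phase zero instead of the hour-based offset the sketch uses.

```javascript
// Hypothetical helper: vertical offset of the water line at a given time.
// One full sine cycle takes 60000 ms (one minute).
function waterOffset(elapsedMs, amplitude) {
  const minutes = elapsedMs / 60000; // elapsed time in minutes
  return Math.sin(minutes * 2 * Math.PI) * amplitude;
}

// Hypothetical helper: rectangles for the ocean and the light band.
// Both share the same x,y origin, so the band rides on the water's top edge.
function waterRects(elapsedMs, width, height) {
  const yMin = waterOffset(elapsedMs, height / 4);
  const yMin2 = waterOffset(elapsedMs, height / 20);
  const top = 2 * height / 3 + yMin;
  return {
    ocean: { x: 0, y: top, w: width, h: height / 4 - yMin },
    light: { x: 0, y: top, w: width, h: height / 20 + yMin2 },
  };
}
```

Because both amplitudes are driven by the same phase, the band is thickest when the water line sits lowest on the canvas and thinnest when it sits highest.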

There is also a simple check that tints the sky orange during the day (6am to 6pm) and purple otherwise.
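
The hour check can be sketched as a small standalone function. `skyTint` is a hypothetical name used only for illustration; the RGBA values mirror `orangeSky` and `purpleSky` from the sketch, and the hour is assumed to be in 24-hour form as returned by p5's `hour()`.

```javascript
// Hypothetical helper: choose the overlay tint for a given hour (0-23).
// Returns an [r, g, b, alpha] array; alpha 40 keeps the tint subtle.
function skyTint(hour) {
  const isDaytime = hour >= 6 && hour < 18; // 6am inclusive, 6pm exclusive
  return isDaytime ? [248, 170, 66, 40] : [129, 36, 229, 40];
}
```

Drawing a full-canvas rectangle with this fill after everything else tints the whole scene, not just the sky.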

clock

var skyColor;

var oceanColor;

var oceanColorLight;

var sandColor;

var logColor;

var notchColor;

var orangeSky;

var purpleSky;

var boatColor;

var prevSec;

var millisRolloverTime;

var hr;

function setup() {

skyColor = color(140,220,240);

oceanColor = color(60,140,190);

oceanColorLight = color(219,222,236,150);

sandColor = color(220,190,110);

logColor = color(97,54,35);

notchColor = color(141,94,43);

orangeSky = color(248,170,66,40);

purpleSky = color(129,36,229,40);

boatColor = color(200,50,50);

createCanvas(480, 480);

noStroke();

hr = hour() + minute()/60 + second()/60/60;

}

function draw() {

background(skyColor);

var H = hour();

var M = minute();

var S = second();

if (prevSec != S) {

millisRolloverTime = millis();

}

prevSec = S;

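var mils = floor(millis() - millisRolloverTime);

var secondsWithFraction = S + (mils / 1000.0);

// mils only counts milliseconds within the current second, so build the minute fraction from seconds

var minutesWithFraction = M + (secondsWithFraction / 60.0);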

var cloudPositionSec = map(secondsWithFraction, 0, 60, -150, width + 50);

var cloudPositionMin = map(minutesWithFraction, 0, 60, -75, width + 25);

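// Phase measured in minutes, so the sine completes one cycle per minute; hr only sets the starting offset

var wavePhase = hr + (millis() * 0.001) / 60;

var yMin = sin(wavePhase * TWO_PI) * height/4;

var yMin2 = sin(wavePhase * TWO_PI) * height/20;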

fill(oceanColor);

rect(0,2*height/3+yMin,

width,height/4-yMin);

fill(logColor);

rect(height/4,height/2-30,55,height/2-30,5,5);

fill(notchColor);

triangle(height/4+55,2*height/3-5, height/4+35,2*height/3,

height/4+55,2*height/3+10);

fill(oceanColorLight);

rect(0,2*height/3+yMin,

width,height/20+yMin2);

fill(sandColor);

ellipse(width/2,height-30,width+500,70);

ellipse(height/4+55/2,height-53,120,30);

fill(225,235,240);

drawCloud(cloudPositionMin,height/3,50);

fill(250,250,250);

drawCloud(cloudPositionSec,height/5);

var boatY = 2*height/3+yMin+20;

drawBoat(width/2,boatY);

if (H >= 6 && H < 18) {

fill(orangeSky);

} else {

fill(purpleSky);

}

rect(0,0,width,height);

}

function drawCloud(x,y,size = 100) {

push();

noStroke();

ellipse(x, y, size, size);

ellipse(x + size*.5, y + size*.05, size, size);

ellipse(x + size, y, size, size*.8);

ellipse(x + size*.3, y - size*.2, size*.8, size*.8);

ellipse(x + size*.7, y - size*.35, size*.8, size*.8);

pop();

}

function drawBoat(x=width/2,y=height/2) {

fill("black");

rect(x+30,y-220,

12,220);

fill("white");

triangle(x+42,y-220,

x+150,y-103,

x+42,y-90);

fill(boatColor);

beginShape();

vertex(x-20, y-80);

vertex(x+220, y-80);

vertex(x+170, y);

vertex(x, y);

endShape(CLOSE);

}
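
The cloud positions in `draw()` come from p5's `map()`, which is a linear rescale from one range to another. A minimal plain-JavaScript sketch of that mapping, assuming no clamping, might look like this (`linearMap` and `cloudX` are hypothetical names, not part of p5):

```javascript
// Linear rescale from [inMin, inMax] to [outMin, outMax], unclamped.
function linearMap(value, inMin, inMax, outMin, outMax) {
  return outMin + (value - inMin) * (outMax - outMin) / (inMax - inMin);
}

// Hypothetical helper: x position of the big (per-minute) cloud.
// It starts fully off the left edge at -150 and exits past the right edge.
function cloudX(secondsWithFraction, width) {
  return linearMap(secondsWithFraction, 0, 60, -150, width + 50);
}
```

Starting the range off-screen lets the cloud drift in and out instead of popping into view when the minute rolls over.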

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)