In “Rhapsody in Grey,” Brian Foo draws on his fascination with brain activity by translating brainwave data into music. He specifically studied the brainwaves of a female pediatric patient with epilepsy, so that listeners can empathize with, or briefly experience, what may be happening during a seizure. I was intrigued by how distinctive this project is, and by his creative approach to a scientific topic such as brainwaves. I admire how Foo uses his fluency and skill in programming to express a personal interest (brain activity) in his own creative medium (music). The project inspired me by showing the seemingly endless possibilities of programming and computational art.

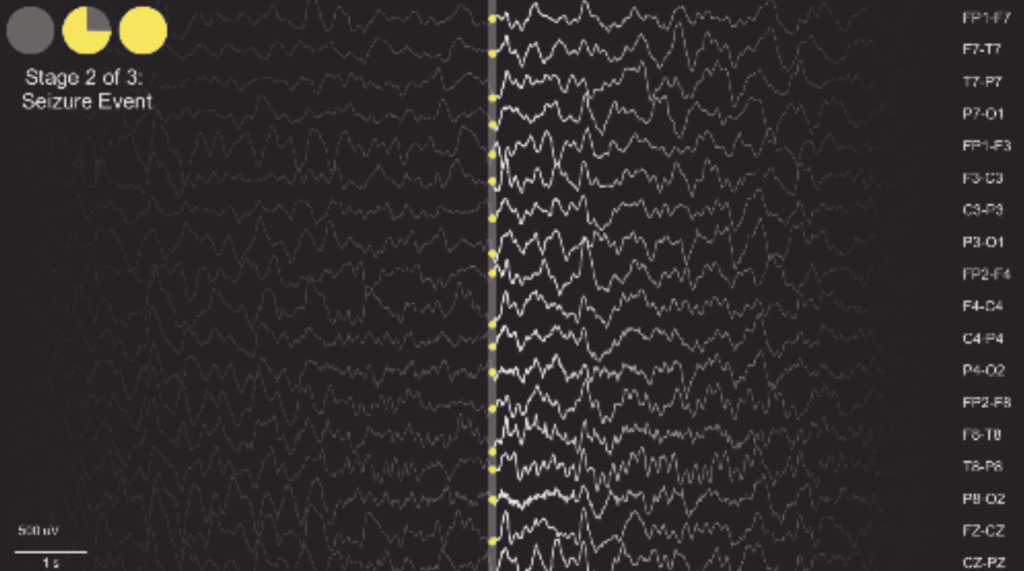

Foo uses several variables from the EEG brainwave data to computationally generate the intensity and fluidity of the rhapsody. He used Python to extract an excerpt of the EEG data and calculate its average amplitude, frequency, and synchrony. He then assigned instrumental and vocal samples according to those calculations. The sounds were synthesized into a rhapsody using ChucK, a programming language for sound synthesis and music. Lastly, he used Processing, a visual programming language, to generate the visual waves that accompany the music in the project's video.
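Foo's own scripts aren't shown here, so the following is only a minimal Python sketch of what that analysis step could look like. It assumes a one-second window from two EEG channels at a hypothetical 256 Hz sampling rate, with synthetic sine waves standing in for real data. The function names, the naive DFT used to find frequency, and Pearson correlation as a stand-in for "synchrony" are my own assumptions, not Foo's method.

```python
import cmath
import math


def mean_amplitude(samples):
    """Average absolute value of the signal (e.g. in microvolts)."""
    return sum(abs(x) for x in samples) / len(samples)


def dominant_frequency(samples, sample_rate):
    """Frequency (Hz) of the strongest non-DC bin of a naive discrete Fourier transform."""
    n = len(samples)
    best_k, best_mag = 1, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * sample_rate / n


def synchrony(a, b):
    """Pearson correlation between two equal-length channels: 1 means perfectly in step."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# Synthetic one-second window standing in for real EEG data (hypothetical 256 Hz rate).
RATE = 256
times = [i / RATE for i in range(RATE)]
ch1 = [50 * math.sin(2 * math.pi * 10 * t) for t in times]        # 10 Hz, 50 uV peak
ch2 = [40 * math.sin(2 * math.pi * 10 * t + 0.5) for t in times]  # same rhythm, phase-shifted

amp = mean_amplitude(ch1)
freq = dominant_frequency(ch1, RATE)
sync = synchrony(ch1, ch2)
print(f"amplitude {amp:.1f} uV, frequency {freq:.1f} Hz, synchrony {sync:.2f}")
```

A real pipeline would slide this over many windows of the recording and use a proper FFT library; the naive DFT just keeps the sketch dependency-free.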

Foo’s artistic sensibilities show in the final work through the sound samples he chose and the connections he made to the EEG data. For example, he raised the pitch of the string instruments for higher frequencies and added louder sounds for higher amplitudes. The connections he draws between his calculated values and the sound samples reflect his interest in the human subject, as well as his artistic priorities and decisions.
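To make that kind of mapping concrete, here is a hypothetical Python sketch in which average frequency drives string pitch and average amplitude drives loudness. The input ranges (1 to 30 Hz, 0 to 100 microvolts), the MIDI note range, the gain range, and the linear scaling are all illustrative assumptions; they are not Foo's actual mappings.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] onto [out_lo, out_hi], clamping at the edges."""
    value = max(in_lo, min(in_hi, value))
    frac = (value - in_lo) / (in_hi - in_lo)
    return out_lo + frac * (out_hi - out_lo)


def string_pitch_hz(eeg_freq_hz):
    """Higher EEG frequency -> higher string pitch (assumed range: 1-30 Hz -> MIDI 48-84)."""
    midi_note = round(scale(eeg_freq_hz, 1.0, 30.0, 48, 84))
    # Convert the MIDI note number to Hz (A4 = MIDI 69 = 440 Hz).
    return 440.0 * 2 ** ((midi_note - 69) / 12)


def gain(amplitude_uv):
    """Higher EEG amplitude -> louder sound (assumed range: 0-100 microvolts -> gain 0.1-1.0)."""
    return scale(amplitude_uv, 0.0, 100.0, 0.1, 1.0)


for freq, amp in [(4.0, 20.0), (10.0, 50.0), (25.0, 90.0)]:
    print(f"{freq:5.1f} Hz, {amp:5.1f} uV -> pitch {string_pitch_hz(freq):7.2f} Hz, gain {gain(amp):.2f}")
```

Rounding to the nearest MIDI note keeps the strings on a musical scale instead of sliding continuously; clamping keeps an unusually large spike in the data from pushing the pitch or volume out of range.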

“Rhapsody in Grey” by Brian Foo (2015)

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](wp-content/uploads/2020/08/stop-banner.png)