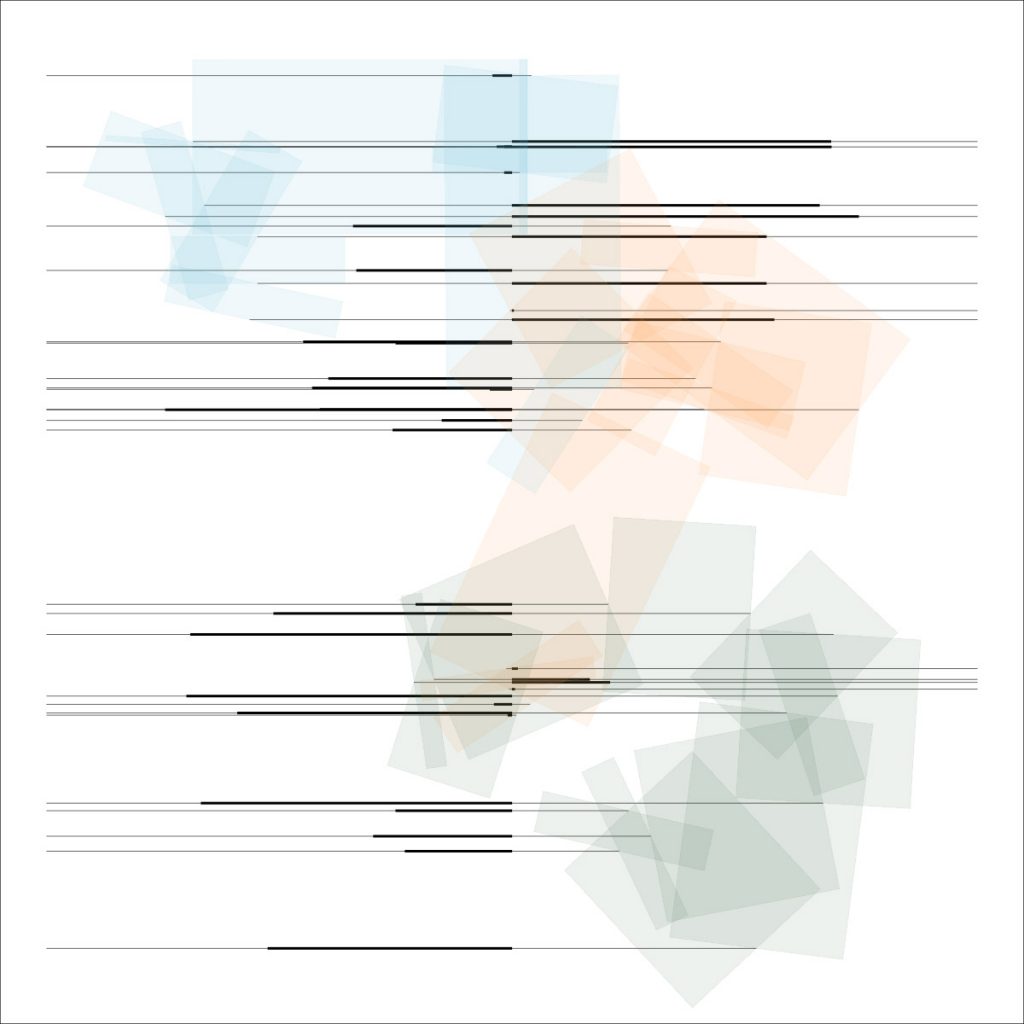

var faceWidth = 300;

var faceHeight = 320;

var eyeSize = 60;

var faceC = 180

var eyeC = 255

var pC = 0

var maskC = 200

var eyebC = 50

var hairC = 255

function setup() {

createCanvas(640, 480);

background(220);

}

function draw() {

var colorR = random(0, 255); //red

var colorG = random(0, 255); //green

var colorB = random(0, 255); //blue

background(colorR, colorG, colorB)

stroke(0)

strokeWeight(1)

fill(0,0,0)

rect(25, 25, 590, 430)

//hair back

fill(hairC)

noStroke()

ellipse(width / 2, height / 2, faceWidth + 150, faceHeight + 150)

//face

stroke(0)

fill(faceC)

strokeWeight(1)

ellipse(width / 2, height/ 2, faceWidth, faceHeight);

var eyeL = width / 2 - faceWidth * .25

var eyeR = width / 2 + faceWidth * .25

//hair front

fill(hairC)

noStroke()

ellipse(width / 2, height / 6, faceWidth/ 1.3, faceHeight / 3.1)

fill(eyeC)

stroke(0)

ellipse(eyeL, height / 2, eyeSize, eyeSize); //eyeLeft

ellipse(eyeR, height / 2, eyeSize, eyeSize); //eye Right

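if (typeof eyeP === "undefined") { eyeP = 30 } // default pupil size; no `var` here, because a local eyeP would hide the value set in mouseClicked()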

fill(pC)

ellipse(eyeL + eyeSize / 8, height / 2 + eyeSize / 5, eyeP, eyeP); //pupil L

ellipse(eyeR + eyeSize / 8, height / 2 + eyeSize / 5, eyeP, eyeP); //pupil R

//mask string

noFill()

stroke(hairC)

strokeWeight(10)

ellipse(eyeL - 60, height / 1.4, faceWidth / 3, faceHeight / 3)

ellipse(eyeR + 50, height / 1.4, faceWidth / 3, faceHeight / 3)

//mask

fill(maskC)

strokeWeight(1)

rect(eyeL - 25, height/1.7, faceWidth / 1.5, faceHeight /3.5 ) //mask

fill(0)

for (var i = 1; i <= 8; i++) { // mask lines, 10 px apart

line(eyeL - 25, height / 1.7 + 10 * i, eyeL - 25 + faceWidth / 1.5, height / 1.7 + 10 * i)

}

//eyelash

stroke(0)

strokeWeight(5)

line(eyeL- eyeSize/ 2, height/2, eyeL - eyeSize / 2 - 20, height/2.1)

line(eyeR + eyeSize/ 2, height/2, eyeR + eyeSize / 2 + 20, height/2.1)

line(eyeL - eyeSize/2, height/2.1, eyeL - eyeSize / 2 - 20, height/2.2)

line(eyeR + eyeSize/2, height/2.1, eyeR + eyeSize / 2 + 20, height/2.2)

//eyebrow

noStroke()

fill(eyebC)

rect(eyeL - eyeSize / 2, height/3, eyeSize, eyeSize / 6)

rect(eyeR - eyeSize / 2, height/3, eyeSize, eyeSize / 6)

}

function mouseClicked(){

faceWidth = random(250, 450);

faceHeight = random(270, 470);

eyeSize = random(40, 80);

eyeP = random(20, 40);

faceC = color(random (0, 255), random(0, 255), random (0, 255))

maskC = color(random (0, 255), random(0, 255), random (0, 255))

eyeC = color(random (180, 255), random(180, 255), random (180, 255))

pC = color(random (0, 255), random(0, 255), random (0, 255))

eyebC = color(random (0, 255), random(0, 255), random (0, 255))

hairC = color(random (0, 150), random(0, 150), random (0, 150))

}

For this project I decided I wanted a face with a mask. As I started coding, I chose to make a female face that would change colors and sizes. The hard part was getting the mask to align correctly with each face; the same goes for the top of the hair. I wanted my faces to look like a cartoon would during COVID.
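
The alignment problem comes from mixing fixed offsets with proportional sizes: the mask's left edge is `eyeL - 25` and its top is `height / 1.7`, so the mask is only centered under the face when `faceWidth` is 300. One possible way around this, sketched below as an assumption rather than what the sketch above does, is to derive the whole rectangle from the face's center and size. The helper name `maskRect` and the `0.1` vertical factor are hypothetical choices for illustration; the width and height ratios are the ones already used in the sketch.

```javascript
// Hypothetical helper (not part of the original sketch): returns the mask
// rectangle for a face centered at (cx, cy) with the given width and height.
function maskRect(cx, cy, faceWidth, faceHeight) {
  var w = faceWidth / 1.5;    // same width ratio the sketch uses
  var h = faceHeight / 3.5;   // same height ratio the sketch uses
  return {
    x: cx - w / 2,              // left edge, so the mask is centered on cx
    y: cy + faceHeight * 0.1,   // assumed: top edge a little below the eyes
    w: w,
    h: h
  };
}

// Possible use inside draw(), in place of the hand-tuned rect() call:
// var m = maskRect(width / 2, height / 2, faceWidth, faceHeight);
// rect(m.x, m.y, m.w, m.h);
```

Because every value depends only on the face's center and size, the mask stays centered for any width that `mouseClicked()` picks. The same idea could be applied to the front hair ellipse, which currently sits at the fixed position `height / 6`.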

![[OLD FALL 2020] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2021/09/stop-banner.png)