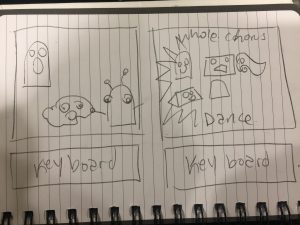

Regarding the project “Drum Kit” (http://ronwinter.tv/drums.html), this is a computational, interactive music project that plays a drum sound mapped to each specific key on the keyboard. I admire that it commits to a single theme (drums), but I feel it could have included a master volume control for the whole program, so that users could play background music and use “Drum Kit” at the same time.

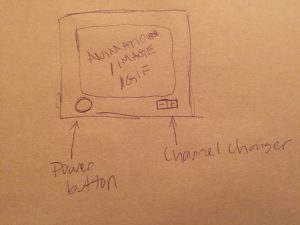

Regarding the project “Rave” (https://rave.dj/mix), this is a website that lets users upload two songs and mashes them up. It is impressive in that it generates a smooth mashup, but it could be improved by letting users adjust the mashup themselves instead of only generating it automatically.

The two projects above are very different from each other, even though both use music to create something new. Rave focuses on combining existing songs into a new song, while Drum Kit focuses on using individual sounds to build a song.

![[OLD FALL 2018] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2018/wp-content/uploads/2020/08/stop-banner.png)