(The audio only seems to work in Chrome on WordPress.)

instructions:

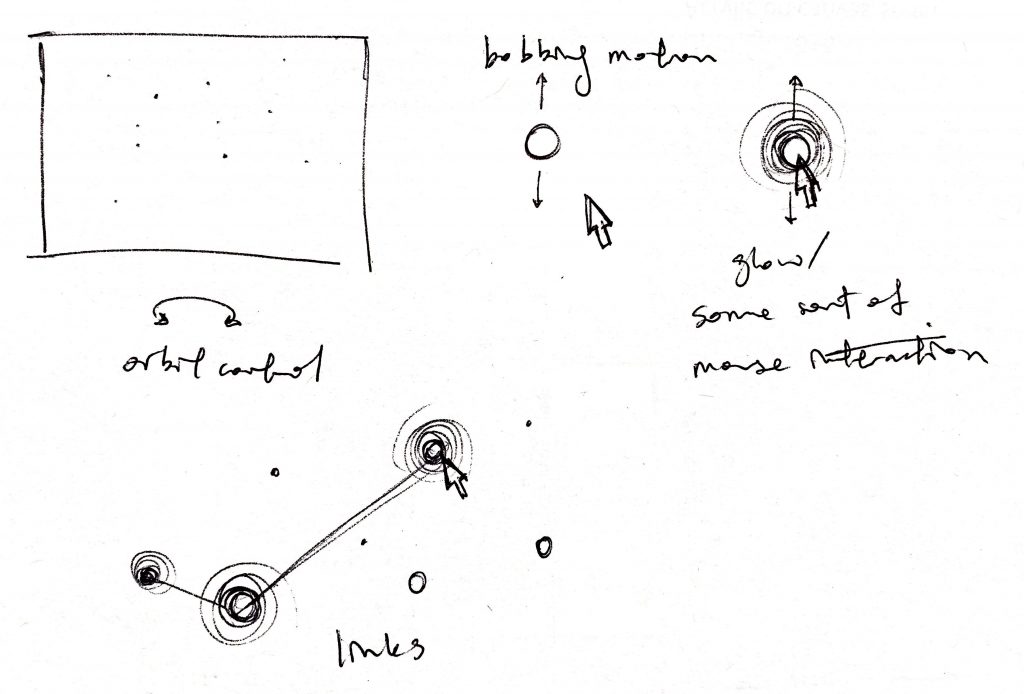

hover over points to hear their pitches.

click points to activate them.

click points again to deactivate them.

clicked points that are close enough to each other will link to each other. (try not to link too many or the program will lag lol)

explore and enjoy the soundscape!

/* Hannah Cai

Section C

hycai@andrew.cmu.edu

Final Project

*/

//particle position arrays

var particleNumber = 200; //number of particles

var psize = 1.5; //particle size

var px = []; //particle x position

var py = []; //particle y position

var pz = []; //particle z position

var distanceToPoint; //dist from (mouseX, mouseY) to (px, py)

var amplitude = 3.14 * 3; //amplitude of bobbing animation

var waveSpeed; //speed of bobbing animation

var theta = 0; //plugin for sin()

//particle sound arrays

var threshold = 100; //minimum distance between mouse and particle to trigger glow/sound

var notes = [130.81, 146.83, 164.81, 174.61, 196, 220, 246.94, //pitches of whole notes from C3

261.63, 293.66, 329.63, 349.23, 392.00, 440, 493.88,

523.25, 587.33, 659.25, 698.46, 783.99, 880, 987.77,

1046.5, 1174.66, 1318.51, 1396.91, 1567.98, 1760, 2093]; //to C7

var ppitch = []; //pitch values for each particle

var pOsc = []; //oscillator for each particle

var pvolume = 0; //volume of each particle

var pOscOn = []; //array of booleans for if the oscillators are on

//misc other particle arrays

var pClicked = []; //array of booleans for if the particle was clicked

var glowSize; //size of particle glow

//arrays for cursor

var xarray = [0, 10, 20, 30, 40, 50];

var yarray = [0, 10, 20, 30, 40, 50];

var s;

//arrays for camera and perspective

var camX;

var camY;

var camZ;

var rotateX;

var rotateY;

//arrays for lines between particles

var connect = [];

var connectionThreshold = 500;

function setup() {

createCanvas(windowWidth, windowHeight, WEBGL); //fit canvas to window size

//set up variables; store in arrays

for (var i = 0; i < particleNumber; i++) {

px.push(random(-width * 0.8, width * 0.8));

py.push(random(-height, height));

pz.push(random(-width, height / 2));

ppitch.push(notes[floor(random(0, notes.length))]);

pOscOn.push(false);

pClicked.push(false);

makeOsc(i);

}

}

function makeOsc(index) {

var myOsc = new p5.SinOsc();

myOsc.freq(ppitch[index]);

pOsc.push(myOsc); //store oscillators in pOsc array

}

function playOsc(index) {

var maxVolume = 0.01;

//turn clicked particles permanently on

if (pClicked[index] === true) {

pvolume = maxVolume;

} else {

//unclicked particles get louder as the mouse gets closer

pvolume = map(distanceToPoint, threshold, 0, 0, maxVolume);

}

//clamp after computing the volume, so it can't leave the 0 to maxVolume range

pvolume = constrain(pvolume, 0, maxVolume);

//make particles with lower pitches louder, so all ranges are heard clearly;

//map against the full note range (ppitch holds random per-particle pitches)

var factor = map(ppitch[index], notes[0], notes[notes.length - 1], 5, 1, true);

pvolume *= factor;

pOsc[index].amp(pvolume);

}

function stopOsc(index) {

pOsc[index].stop();

}

function draw() {

background(0);

noStroke(); //get rid of default black stroke

//map camera position to mouse position to simulate orbit control

camX = map(mouseX, 0, width, -width / 2, width / 2);

camY = map(mouseY, 0, height, -height / 2, height / 2);

camZ = (height/2.0) / tan(PI*30.0 / 180.0);

camera(camX, camY, camZ, 0, 0, 0, 0, 1, 0);

//set up particles

for (i = 0; i < particleNumber; i++) {

drawLines(i); //draw lines between clicked particles

//create bobbing movement

waveSpeed = map(pz[i], -width, height, 20000, 70000); //create parallax effect

theta += (TWO_PI / waveSpeed);

if (theta > amplitude) {

theta = -theta;

}

py[i] += sin(theta);

push();

translate(px[i], py[i], pz[i]);

drawGlow(i); //draw glow of each particle

//draw each particle

fill(255);

smooth();

sphere(psize);

pop();

//play a particle's oscillator if the mouse's

//distance is less than the threshold

if (distanceToPoint <= threshold) {

if (pOscOn[i] == false) {

pOsc[i].start();

pOscOn[i] = true;

}

playOsc(i);

}

//stop a particle's oscillator if the mouse's

//distance is greater than the threshold

if (distanceToPoint > threshold && pClicked[i] == false) {

stopOsc(i);

pOscOn[i] = false;

}

}

//cursor

noCursor(); //turn off the cursor icon, display below instead

//this is basically the code from the snake lab we did

for (var i = 0; i < xarray.length; i++) {

fill(255, 255, 200);

s = 8 - (xarray.length - i);

ellipse(xarray[i], yarray[i], s, s);

}

xarray.push(mouseX - width / 2);

yarray.push(mouseY - height / 2);

if (xarray.length > 8) {

xarray.shift();

yarray.shift();

}

}

function drawGlow(index) {

push();

noStroke();

//rotate the (flat) ellipses to face the cameras to simulate 3d glow

rotateX(radians(map(camY, -height / 2, height / 2, 40, -40)));

rotateY(radians(map(camX, -width / 2, width / 2, -45, 45)));

//calculate distance from mouse to each point

distanceToPoint = dist(mouseX - width / 2, mouseY - height / 2, px[index], py[index]);

//clicked particles have a pulsing glow;

//unclicked particles glow when the mouse hovers close to them

if (pClicked[index] === true) {

glowSize = map(sin(theta), TWO_PI, 0, psize, 100);

} else {

glowSize = map(distanceToPoint, 100, psize, psize, 100);

}

//draw the actual glow (a radial alpha gradient)

for (var r = psize; r < glowSize; r += 1.5) {

fill(255, 255, 200, map(r, psize, glowSize, 2, 0));

ellipse(0, 0, r);

}

pop();

}

function drawLines(index) {

push();

//push the indices of clicked particles in the "connect" array;

//turn off/remove particles from the array if clicked again

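if (pClicked[index] == true && !connect.includes(index)) {

connect.push(index);

} else if (pClicked[index] == false && connect.includes(index)) {

//remove the particle's index by value, not by array position

connect.splice(connect.indexOf(index), 1);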

}

//connect groups of particles that are clicked if the distance between is less than the threshold

stroke(255);

strokeWeight(1);

noFill();

for (var i = 0; i < connect.length; i++) {

for (var j = i + 1; j < connect.length; j++) {

if (dist(px[connect[i]], py[connect[i]], pz[connect[i]],

px[connect[j]], py[connect[j]], pz[connect[j]]) < connectionThreshold) {

beginShape(LINES);

vertex(px[connect[i]], py[connect[i]], pz[connect[i]]);

vertex(px[connect[j]], py[connect[j]], pz[connect[j]]);

endShape();

}

}

}

noStroke();

pop();

}

//if window is resized, refit the canvas to the window

function windowResized() {

resizeCanvas(windowWidth, windowHeight);

}

function mouseClicked() {

for (var i = 0; i < particleNumber; i++) {

distanceToPoint = dist(mouseX - width / 2, mouseY - height / 2, px[i], py[i]);

//toggle pClicked on and off if mouse clicks within 10 pixels of a particle

if (distanceToPoint < 10 && pClicked[i] == false) {

pClicked[i] = true;

} else if (distanceToPoint < 10 && pClicked[i] == true) {

pClicked[i] = false;

}

}

}

Here’s a zip file for the fullscreen version.

final project fin

There are still a few bugs I’m aware of that I don’t know how to fix:

1. sometimes the links will flicker; adding another grouped point sometimes fixes it

2. sometimes the volume is louder than it should be upon refreshing/starting the program. I constrained the volume to try to avoid this, but it didn’t seem to help

3. sometimes all the oscillators start off turned on upon refreshing/starting the program (if you move your mouse close to a point, the sine wave will start and stop abruptly instead of fading in and out).
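A possible angle on the first bug (a guess, not verified against the running sketch): `splice(i, 1)` removes whatever sits at position `i` in an array, which is not the same as removing the value `i`. If the flicker comes from the wrong entries being dropped from the list of linked points, removing by value might help. Here is a minimal sketch in plain JavaScript; `updateConnections` is a hypothetical helper, not something from the original program.

```javascript
// Hypothetical helper (an assumption for this example): keeps the list of
// linked particle indices in sync with one particle's clicked state.
function updateConnections(connect, index, clicked) {
  var position = connect.indexOf(index); // -1 if index is not in the list
  if (clicked && position === -1) {
    connect.push(index); // newly clicked: add it once
  } else if (!clicked && position !== -1) {
    connect.splice(position, 1); // unclicked: remove that value only
  }
  return connect;
}

// Removing by position: drops the element stored at slot 1 (the value 17).
var byPosition = [5, 17, 40];
byPosition.splice(1, 1);

// Removing by value: particle 1 is not in the list, so nothing changes.
var byValue = updateConnections([5, 17, 40], 1, false);
```

After this runs, `byPosition` has lost particle 17 even though nobody unclicked it, while `byValue` still holds all three linked particles.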

Generally, refreshing the page fixes all of these bugs, so please refresh the page if you notice any of the above!
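For the volume bug, one thing that might help is clamping the volume after it has been computed, and mapping the low-pitch boost against fixed bounds (the lowest and highest notes in the table). This is only a sketch under those assumptions: `clamp` and `remap` are plain-JavaScript stand-ins for p5's `constrain()` and `map()` so it can run outside p5, and `particleVolume` and the `opts` fields are hypothetical names.

```javascript
// Stand-in for p5's constrain(): keep value inside [low, high].
function clamp(value, low, high) {
  return Math.min(Math.max(value, low), high);
}

// Stand-in for p5's map(): linear remap from one range to another.
function remap(value, start1, stop1, start2, stop2) {
  return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
}

// Hypothetical function: volume for one particle.
function particleVolume(distance, clicked, pitch, opts) {
  var base = clicked
    ? opts.maxVolume
    : remap(distance, opts.threshold, 0, 0, opts.maxVolume);
  base = clamp(base, 0, opts.maxVolume); // clamp AFTER computing the value
  // Boost low pitches: 5x at the lowest note, 1x at the highest, never outside.
  var factor = clamp(remap(pitch, opts.lowestNote, opts.highestNote, 5, 1), 1, 5);
  return base * factor;
}

// Values taken from the sketch: maxVolume 0.01, threshold 100, C3 to C7.
var opts = { maxVolume: 0.01, threshold: 100, lowestNote: 130.81, highestNote: 2093 };
var farAway = particleVolume(250, false, 440, opts); // mouse outside the threshold
var loudest = particleVolume(0, true, 130.81, opts); // clicked, lowest note
```

With these bounds, no particle can end up louder than `maxVolume * 5`, no matter what distance or pitch goes in.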

I enjoyed this project a lot. Even though I spent a lot of time struggling and debugging, I feel like I learned a lot about both WEBGL and using sound/oscillators. I’m pretty satisfied with the final visual effects as well, although unfortunately, the program will start to lag if too many linked points are formed. Also unfortunately, my aim with this project was to familiarize myself more with objects, but I got completely stuck trying to format things as objects, so I made everything with a ton of arrays instead. I definitely want to revisit this project in the future and format it properly with objects. In general, I want to keep adding to this project because it’s still pretty clunky and buggy right now. I was planning to add a start screen, instructions, the ability to record audio, and different modes (e.g., a “wander” mode where the cursor moves around on its own), but I didn’t have time to even try implementing most of those before the deadline. In the future, though, I’d like to make this something that could be a standalone interactive website (and add it to my portfolio, lol).
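As a rough idea of what the object version could look like, here is a minimal sketch that bundles one particle's position, pitch, and clicked state into a single object instead of parallel arrays. Everything here is an assumption for illustration: `makeParticle`, `distanceTo`, and `handleClick` are hypothetical names, and the drawing and oscillator parts are left out so it runs as plain JavaScript.

```javascript
// Hypothetical factory: one object per particle instead of px/py/pz/ppitch arrays.
function makeParticle(x, y, z, pitch) {
  return {
    x: x,
    y: y,
    z: z,
    pitch: pitch,
    clicked: false,
    oscOn: false,
    // Screen-plane distance to a point, like dist(mx, my, px, py) in p5.
    distanceTo: function (mx, my) {
      return Math.hypot(this.x - mx, this.y - my);
    },
    // Toggle the clicked state if the click lands within `radius` pixels.
    handleClick: function (mx, my, radius) {
      if (this.distanceTo(mx, my) < radius) {
        this.clicked = !this.clicked;
      }
      return this.clicked;
    }
  };
}

// Three particles in a row, each with its own pitch.
var demoNotes = [130.81, 146.83, 164.81];
var particles = [];
for (var i = 0; i < demoNotes.length; i++) {
  particles.push(makeParticle(i * 100, 0, 0, demoNotes[i]));
}

// A click 5 pixels away from the middle particle activates only that one.
particles[1].handleClick(103, 4, 10);
```

The click handler could then just loop over `particles` and call `handleClick` on each one, and the state for a particle stays together in one place.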

In general, I loved this class and I learned a lot! Thank you to Dannenberg and all the TAs!
