Here is a video showing how the maze works.

Because the file is so big, WordPress doesn’t show the camera feed, which makes it difficult to steer the dots. If you want to see where your tracker is, please download this HTML file to play the maze with the camera: jinal2-final project. It works best in Chrome. Thank you!

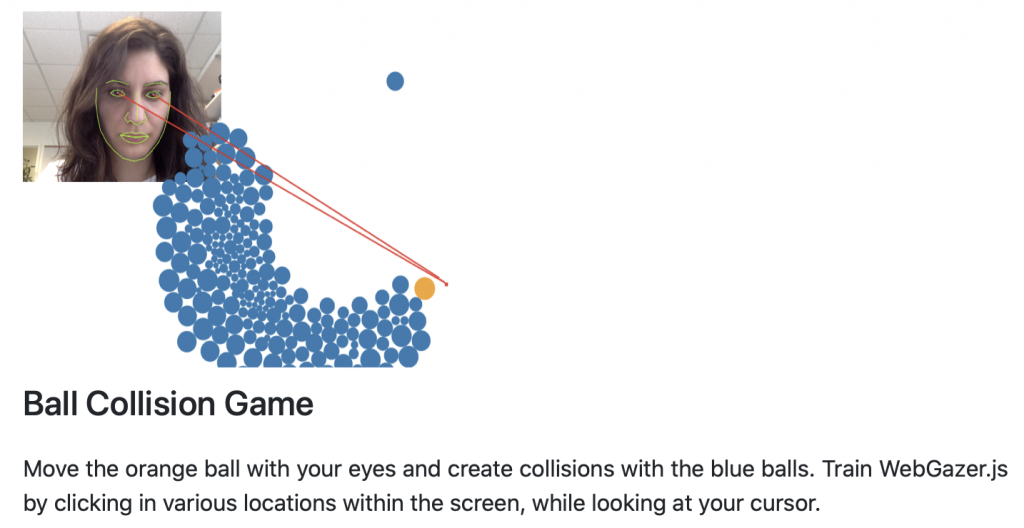

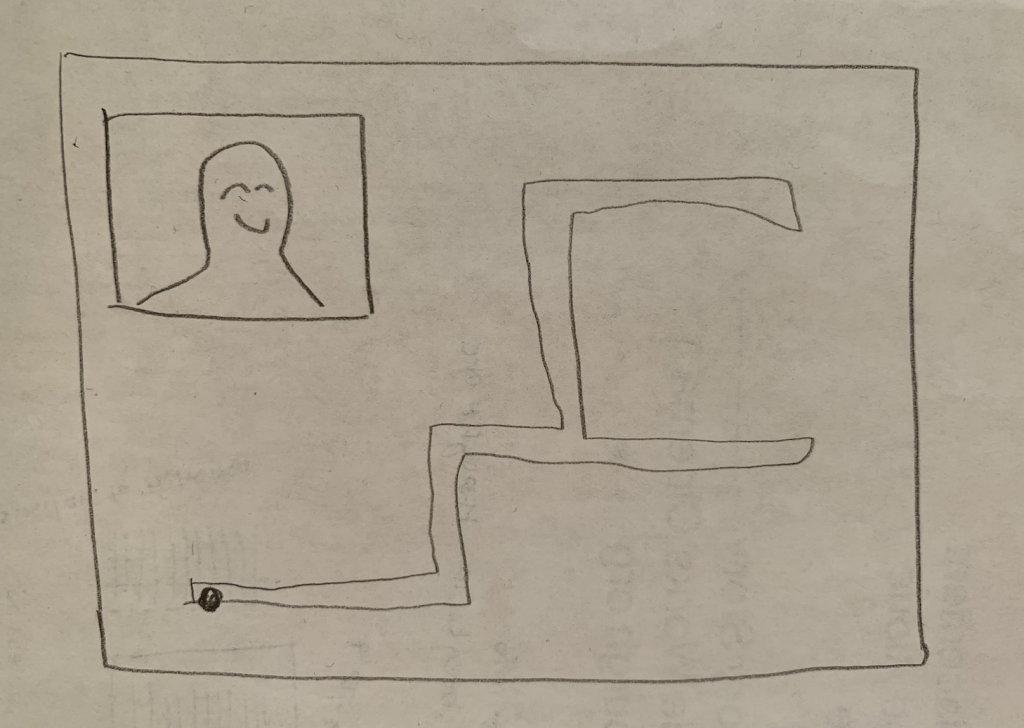

What is it? I know that we did not have much experience using the webcam in our code, but I really wanted to make an interactive game that used it. For my project, I wanted to create a webcam-controlled maze game that you move through with your pupils. Before building the final result, I researched how to track pupils so that the dots would follow wherever you look while playing. However, that was far too ambitious, and I struggled to understand how to implement that concept in my code. In the end, I made the camera detect any red object and draw dots on it.
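
The red detection comes down to measuring how far each sampled pixel's color is from pure red (255, 0, 0) and taking the middle of the box around every pixel that is close enough. Below is a minimal sketch of that idea as a plain JavaScript function, assuming the frame is a flat RGBA array (the layout of canvas `ImageData.data`, and of p5's `pixels` after `loadPixels()` at a pixel density of 1). The name `findRedCenter`, its `step` and `threshold` defaults (chosen to match the 5-pixel sampling and the color distance of 120 in the project code), and returning `null` when no red is found are illustrative assumptions, not the project's actual code.

```javascript
// Minimal sketch (not the project's code): find the center of the
// "red enough" pixels in a flat RGBA buffer such as ImageData.data.
// Assumes 4 entries (r, g, b, a) per pixel, stored row by row.
function findRedCenter(pixels, width, height, step = 5, threshold = 120) {
  let xMin = width;
  let xMax = -1;
  let yMin = height;
  let yMax = -1;
  for (let y = 0; y < height; y += step) {
    for (let x = 0; x < width; x += step) {
      const idx = 4 * (y * width + x);
      // Color distance from pure red (255, 0, 0)
      const dr = pixels[idx] - 255;
      const dg = pixels[idx + 1];
      const db = pixels[idx + 2];
      if (Math.sqrt(dr * dr + dg * dg + db * db) < threshold) {
        // Grow the bounding box around the red samples
        xMin = Math.min(xMin, x);
        xMax = Math.max(xMax, x);
        yMin = Math.min(yMin, y);
        yMax = Math.max(yMax, y);
      }
    }
  }
  // No sampled pixel was red: let the caller decide what to do
  if (xMax < 0) {
    return null;
  }
  // Midpoint of the bounding box around the red pixels
  return { x: (xMin + xMax) / 2, y: (yMin + yMax) / 2 };
}

// Usage: a 20x20 black frame with a red 5x5 square at x 5..9, y 10..14
const w = 20;
const h = 20;
const frame = new Uint8ClampedArray(w * h * 4);
for (let y = 10; y < 15; y++) {
  for (let x = 5; x < 10; x++) {
    const i = 4 * (y * w + x);
    frame[i] = 255;     // red channel
    frame[i + 3] = 255; // alpha
  }
}
console.log(findRedCenter(frame, w, h, 1)); // { x: 7, y: 12 }
console.log(findRedCenter(frame, w, h));    // { x: 5, y: 10 } (only one sample is red)
```

Returning `null` gives the game an explicit "no tracker in frame" case, which is a natural place to send the dots back to START. Reading the whole buffer once is also generally cheaper than calling p5's `get()` for every sample.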

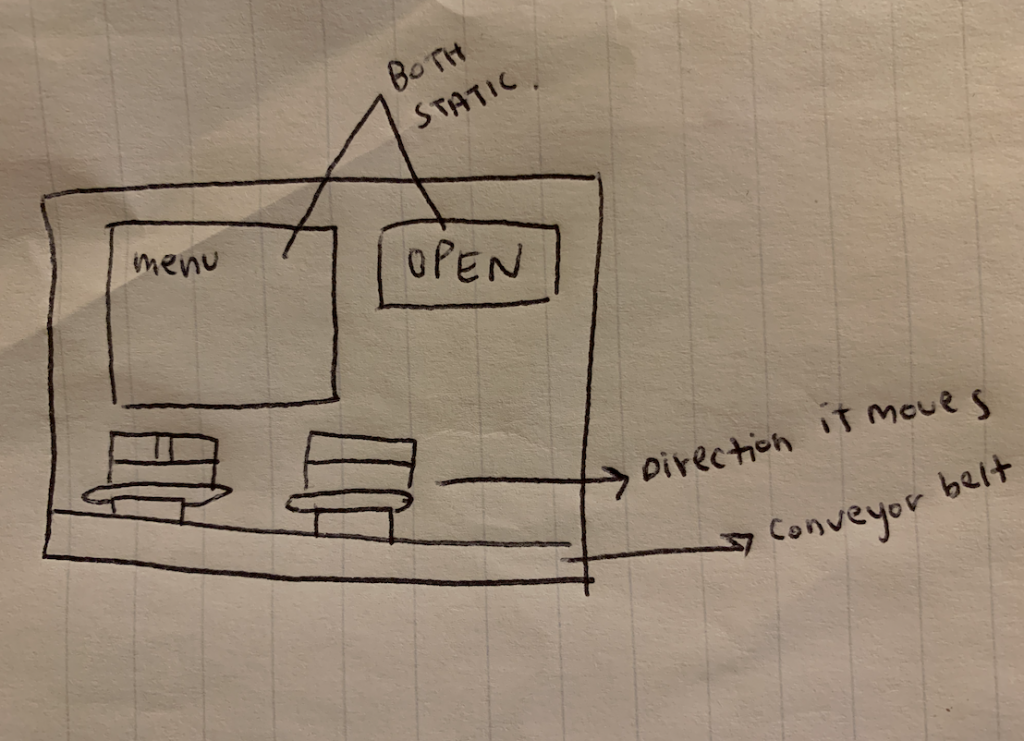

Because I could not make the game restart when you hit the maze lines, I made the dots go back to the start position when the red tracker leaves the webcam frame.

I know that this class is over now, but there are things I would still like to add to my project. Because trying to get the pupil tracking to work took so much longer than expected, I did not get to the other features I had planned. First, I want to show an “ERROR” sign when the dots hit the white lines of the maze. I would also like to create an intro that helps people calibrate their red object with the screen so that it is easier to control. I had a lot of fun with this project while also pushing myself to create something far outside my comfort zone.
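
An “ERROR” sign needs a way to tell when a dot touches a wall. A minimal sketch, assuming each wall is one of the `strokeWeight(10)` maze lines (half-width 5) and each dot is the 5-pixel ellipse (radius 2.5): a dot hits a wall when its center is closer than 5 + 2.5 pixels to that line segment. The wall coordinates come from the maze's `line()` calls with the repeated ones merged; `WALLS`, `distToSegment`, and `hitsWall` are hypothetical names, not part of the project.

```javascript
// Maze walls from the sketch's line() calls: [x1, y1, x2, y2]
const WALLS = [
  [250, 250, 250, 320], [250, 320, 320, 320], [320, 320, 320, 180],
  [320, 180, 180, 180], [180, 180, 180, 370], [180, 370, 380, 370],
  [380, 370, 380, 130], [380, 130, 135, 130], [135, 130, 135, 420],
  [135, 420, 430, 420], [430, 420, 430, 70], [430, 70, 70, 70],
];

// Shortest distance from point (px, py) to the segment (x1, y1)-(x2, y2)
function distToSegment(px, py, x1, y1, x2, y2) {
  const dx = x2 - x1;
  const dy = y2 - y1;
  const lenSq = dx * dx + dy * dy;
  // Project the point onto the segment, clamped to its endpoints
  let t = lenSq === 0 ? 0 : ((px - x1) * dx + (py - y1) * dy) / lenSq;
  t = Math.max(0, Math.min(1, t));
  return Math.hypot(px - (x1 + t * dx), py - (y1 + t * dy));
}

// Hypothetical check: true if a dot of radius dotRadius overlaps any wall
// drawn with strokeWeight(wallWeight)
function hitsWall(x, y, dotRadius = 2.5, wallWeight = 10) {
  const limit = dotRadius + wallWeight / 2;
  return WALLS.some(([x1, y1, x2, y2]) => distToSegment(x, y, x1, y1, x2, y2) < limit);
}

console.log(hitsWall(285, 290)); // false: open corridor
console.log(hitsWall(300, 322)); // true: touching the wall at y = 320
```

In `draw()`, the newest dot could be passed to a check like this to decide whether to show “ERROR”. Clamping the projection also treats wall ends as rounded, which matches p5's default round stroke caps. One caveat: the first wall starts at (250, 250), inside the START box, so a dot sitting at the center of START would already register a hit; a real version would probably skip the check while the dot is inside that box.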

```javascript
// Jina Lee
// jinal2@andrew.cmu.edu
// Section E
// 15-104 Final Project

// This code uses the webcam to create red dots that
// follow any red color seen by the camera.

var myCaptureDevice;

// Stores the x values of the most recent red positions
var xarray = [];

// Stores the y values of the most recent red positions
var yarray = [];

// Maximum number of positions kept in xarray and yarray
var sizeLimit = 10;

// The red dots are drawn only while this is 1
var redCircle = 1;

function setup() {
  createCanvas(500, 500);

  // Camera
  myCaptureDevice = createCapture(VIDEO);
  myCaptureDevice.size(500, 500);
}

function isColor(c) {
  // True if c is a pixel array such as the [r, g, b, a] returned by get()
  return (c instanceof Array);
}

function draw() {
  // Background
  fill(0);
  rect(0, 0, 500, 500);

  // Maze walls
  stroke(255);
  strokeWeight(10);
  line(250, 250, 250, 320);
  line(250, 320, 320, 320);
  line(320, 320, 320, 180);
  line(320, 180, 180, 180);
  line(180, 180, 180, 370);
  line(180, 370, 380, 370);
  line(380, 370, 380, 130);
  line(380, 130, 135, 130);
  line(135, 130, 135, 420);
  line(135, 420, 430, 420);
  line(430, 420, 430, 70);
  line(430, 70, 70, 70);

  // Boxes
  fill(255);
  noStroke();
  rect(65, 65, 50, 50, 10);
  rect(-5, 150, 100, 100, 10);
  rect(60, 300, 80, 80, 10);
  rect(-10, 430, 70, 70, 10);

  // Starting spot
  fill(255);
  rect(225, 230, 50, 50, 10);
  textSize(10);
  textFont('Helvetica');
  fill('green');
  text("START", 235, 260);

  // Exit spot
  fill(255);
  rect(440, 470, 50, 50, 10);
  textSize(10);
  textFont('Helvetica');
  fill('red');
  text("END", 455, 495);

  // Bounding box of the red pixels the camera finds in this frame
  var xMin = width;
  var xMax = 0;
  var yMin = height;
  var yMax = 0;

  // Draw the trail of recent red positions
  for (var a = 0; a < xarray.length; a++) {
    if (redCircle === 1) {
      noStroke();
      fill('red');
      ellipse(xarray[a], yarray[a], 5, 5);
    }
  }

  // Sample every 5th pixel of the camera image
  for (var i = 0; i < width; i += 5) {
    for (var q = 0; q < height; q += 5) {
      var currentColor = myCaptureDevice.get(i, q);

      if (isColor(currentColor)) {
        // Distance of this pixel's color from pure red (255, 0, 0)
        var r = red(currentColor) - 255;
        var g = green(currentColor);
        var b = blue(currentColor);
        var colorDist = sqrt(sq(r) + sq(g) + sq(b));

        // Close enough to red: grow the bounding box
        if (colorDist < 120) {
          if (i < xMin) {
            xMin = i;
          }
          if (i > xMax) {
            xMax = i;
          }
          if (q < yMin) {
            yMin = q;
          }
          if (q > yMax) {
            yMax = q;
          }
        }
      }
    }
  }

  // Push the center of the red area into the arrays. Dividing by 1.9
  // and 1.7 instead of 2 shifts and stretches the midpoint. If no red
  // is found, both sums stay at 500, so the position is about (263, 294),
  // just below the START box.
  xarray.push((xMin + xMax) / 1.9);
  yarray.push((yMin + yMax) / 1.7);

  // When the arrays are full, remove the oldest positions from the beginning
  while (xarray.length > sizeLimit) {
    xarray.shift();
    yarray.shift();
  }
}
```

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](../../wp-content/uploads/2020/08/stop-banner.png)