For this Looking Outwards post, I explored a sound art piece and a mobile game: François Quévillon’s Algorithmic Drive and Tap Tap Revolution.

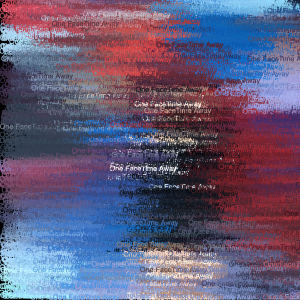

I found François Quévillon’s sound project intriguing because it invites audience members to experience sound from a slightly skewed perspective. It explores the intersection of sound and the unpredictable nature of the world. The phrase “unpredictable nature” inspired me to explore how unpredictable fires can be and how unpredictable their impact on society could be.

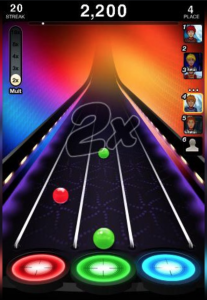

Tap Tap Revolution uses the user’s taps on the screen to produce sounds (in the game, songs). This concept inspired me because I wanted to tie a user’s response to the production of sound.

These two projects are similar in that both depend on data to produce sounds, and each explores the intersection of that data with a concept: the unpredictable nature of the world and music, respectively.

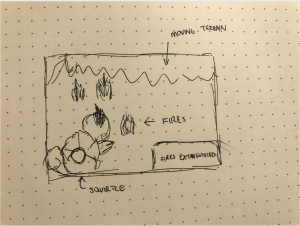

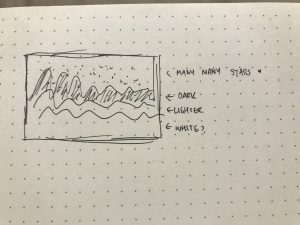

Drawing on these two projects and Pokemon, a game from my childhood, I decided to create an interactive game that demonstrates the unpredictable nature of the California fires and the sounds that accompany them.

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](../../wp-content/uploads/2020/08/stop-banner.png)