/* SooA Kim

sookim@andrew.cmu.edu

Final Project

Section C

*/

//webcam and image texture variables

var myCaptureDevice;

var img = [];

var frames = [];

var framelist = 0;


//particle variables

var gravity = 0.1; // downward acceleration

var springy = 0.9; // how much velocity is retained after bounce

var drag = 0.0001; // drag causes particles to slow down

var np = 50;

var particles = [];

var Image1 = [];

var Image2 = [];

var Image3 = [];

var link1 = [

"https://i.imgur.com/qAayMED.png",

"https://i.imgur.com/86biLWe.png",

"https://i.imgur.com/r5UE5kg.png",

"https://i.imgur.com/I1BBRfH.png",

"https://i.imgur.com/D1l3aXi.png",

"https://i.imgur.com/NAHr7yB.png",

"https://i.imgur.com/F0SB9GM.png",

"https://i.imgur.com/B1kvNaM.png",

"https://i.imgur.com/Th9sahL.png",

"https://i.imgur.com/c9cuj2k.png"

]

var link2 = [

"https://i.imgur.com/z7BAFyp.png",

"https://i.imgur.com/8Ww7Vuo.png",

"https://i.imgur.com/QFjlLr2.png",

"https://i.imgur.com/0sdtErd.png",

]

var link3 = [

"https://i.imgur.com/ZD7IoUP.png"

]

var textures = [link1, link2, link3];

var textureIndex = 0;

function preload() {

for (var z = 0; z < link1.length; z++){

link1[z] = loadImage(link1[z]);

}

for (var z = 0; z < link2.length; z++){

link2[z] = loadImage(link2[z]);

}

for (var z = 0; z < link3.length; z++){

link3[z] = loadImage(link3[z]);

}

}

function setup() {

createCanvas(600, 480);

myCaptureDevice = createCapture(VIDEO);

myCaptureDevice.size(600, 480); // attempt to size the camera.

myCaptureDevice.hide(); // this hides an extra view.

}

function mousePressed(){

var textureNew = floor(random(textures.length)); // re-rolled below so the same texture is not selected twice in a row

while(textureNew == textureIndex){

textureNew = floor(random(textures.length));

}

textureIndex = textureNew;

}

function particleStep() {

this.age++;

this.x += this.dx;

this.y += this.dy;

this.dy = this.dy + gravity; // force of gravity

var vs = Math.pow(this.dx, 2) + Math.pow(this.dy, 2);

// d is the fraction of velocity lost to drag on this step (capped at 1 below)

var d = vs * drag;

d = min(d, 1);

// scale dx and dy to include drag effect

this.dx *= (1 - d);

this.dy *= (1 - d);

}

function makeParticle(px, py, pdx, pdy, imglink) {

var p = {x: px, y: py,

dx: pdx, dy: pdy,

age: 0,

step: particleStep,

draw: particleDraw,

image: imglink //making image as a parameter

}

return p;

}

function particleDraw() {

//tint(255, map(this.age, 0, 30, 200, 0)); //fade out image particles at the end

image(this.image, this.x, this.y);

}

function isColor(c) {

return (c instanceof Array);

}

function draw() {

tint(255, 255);

background(220);

imageMode(CORNER);

myCaptureDevice.loadPixels(); // this must be done on each frame.

image(myCaptureDevice, 0, 0);

framelist += 1;

imageMode(CENTER);

// get the color value of every 20 pixels of webcam output

for (var i = 0; i < width; i += 20) {

for (var j = 0; j < height; j+= 20) {

var currentColor = myCaptureDevice.get(i, j);

var rd = red(currentColor);

var gr = green(currentColor);

var bl = blue(currentColor);

// targetColor: green

if (rd < 90 && gr > 100 && bl < 80){

for (var s = 0; s < textures[textureIndex].length; s++) {

var pImage = makeParticle(i, j, random(-10, 10), random(-10, 10), textures[textureIndex][s]); // spawn at (i, j), the position of the detected green pixel

if (particles.length < 10000) {

particles.push(pImage);

}

}

}

}

}

var newParticles = [];

for (var i = 0; i < particles.length; i++) { // for each particle

var p = particles[i];

p.step();

p.draw();

if (p.age < 20) { // keep particles short-lived (under 20 frames) to limit lag

newParticles.push(p);

}

}

particles = newParticles;

}

// moving frames

function framePush(){

framelist += 1;

image(frames[framelist % frames.length], 0, 0);

}

Click the screen with your mouse!

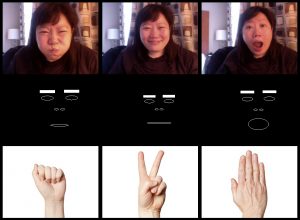

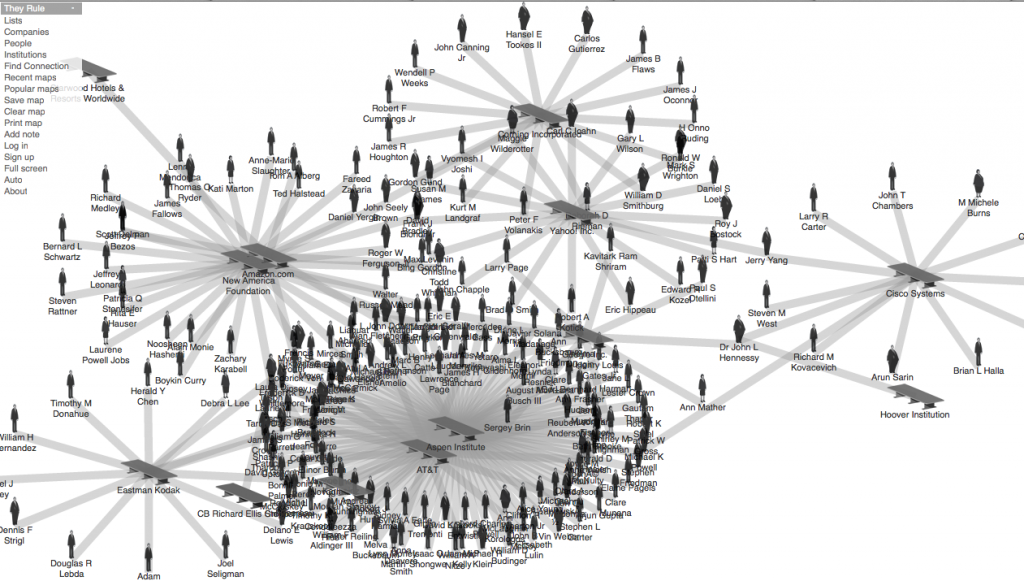

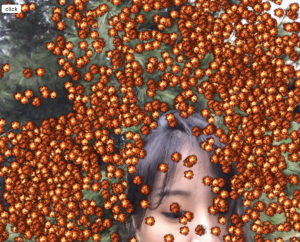

For this final project, I created a variety of sprite textures and applied them to a particle animation that replaces the green pixels in the webcam image. The sprite texture changes when the mouse is clicked. I approached this project from a place of environmental concern: I wanted to convey what happens with climate change through imagery of air pollution, using sprite textures that imitate dust and smoke.
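The texture switch on click can also be written without a retry loop. This is only a sketch of an alternative, not the method used in the project code: `pickDifferentIndex` and its `rand` parameter are hypothetical names introduced here, and the count of 3 is assumed from the three texture sets (`link1`, `link2`, `link3`).

```javascript
// Hypothetical helper (not part of the original sketch): return an index in
// [0, count) that is guaranteed to differ from `current`.
// `rand` is any function returning a number in [0, 1), e.g. Math.random.
function pickDifferentIndex(current, count, rand) {
  if (count < 2) {
    return current; // only one texture set, nothing to switch to
  }
  // Choose one of the (count - 1) other slots, then step past `current`.
  var offset = 1 + Math.floor(rand() * (count - 1));
  return (current + offset) % count;
}

// Usage: assumes three texture sets, as in the textures array.
var textureIndex = 0;
textureIndex = pickDifferentIndex(textureIndex, 3, Math.random);
```

Because the offset is always between 1 and count - 1, the result can never equal the current index, so no `while` loop is needed.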

I also tried to be playful with this project by providing different textures. I started the code by tracking the green pixels first, then moved on to figuring out how to use the image textures in the particle effect.
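The green-tracking step can be sketched on its own, outside p5.js. The thresholds below are the ones used in the sketch; `isTargetGreen` and `findGreenSamples` are hypothetical helper names, and the flat RGBA layout (4 values per pixel, row by row) is an assumption that matches a pixel array at pixel density 1.

```javascript
// Same thresholds as the sketch: low red, high green, low blue.
function isTargetGreen(r, g, b) {
  return r < 90 && g > 100 && b < 80;
}

// Hypothetical helper: sample every `step` pixels of a flat RGBA array
// (assumed row-major, 4 values per pixel) and collect the green positions.
function findGreenSamples(pixels, w, h, step) {
  var hits = [];
  for (var x = 0; x < w; x += step) {
    for (var y = 0; y < h; y += step) {
      var k = 4 * (y * w + x); // index of the red channel for (x, y)
      if (isTargetGreen(pixels[k], pixels[k + 1], pixels[k + 2])) {
        hits.push({ x: x, y: y });
      }
    }
  }
  return hits;
}

// Usage: a 40 x 40 gray test frame with one green pixel at (20, 20).
var w = 40;
var h = 40;
var frame = new Uint8ClampedArray(4 * w * h).fill(128);
var g = 4 * (20 * w + 20);
frame[g] = 30;      // red
frame[g + 1] = 200; // green
frame[g + 2] = 40;  // blue
var hits = findGreenSamples(frame, w, h, 20);
console.log(hits.length, hits[0]);
```

Each returned position is where a particle would be spawned. Reading the pixel array directly like this may be faster than calling `get(i, j)` for every sample, though that was not measured for this project.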

The coding process was very challenging, but I am really happy with the outcome.

sprite textures

first try at applying the sprite texture to the image
