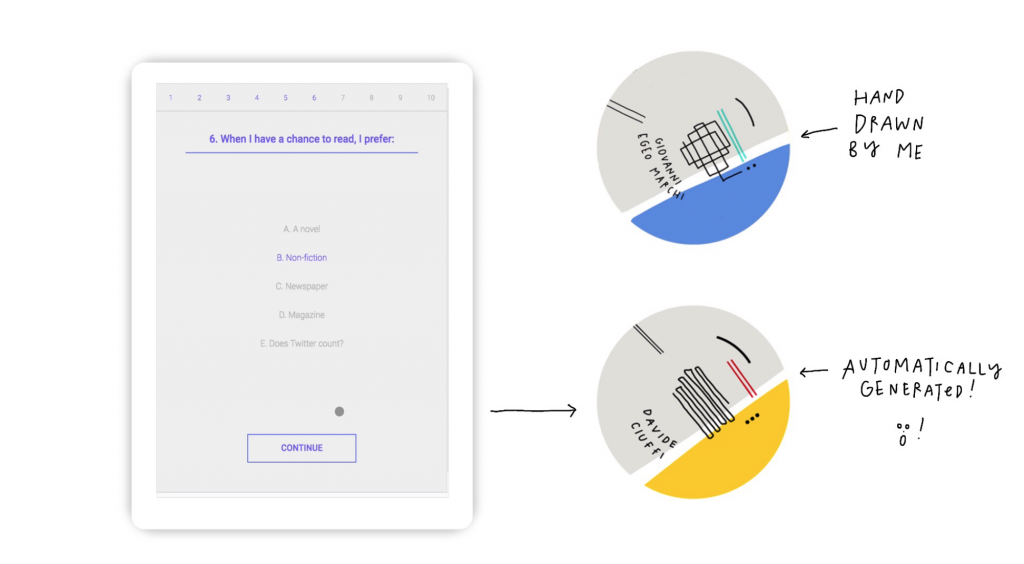

When approaching this project, I wanted to create a self-portrait that was angular, with similarly toned hues. Because I used mostly quadrilaterals, I had to make sure their corner points lined up with each other to create a unified facial structure. I also made a square in the background follow the mouse to give the portrait an extra element of depth.
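Keeping shared corners identical is easy to get wrong by a pixel. As a rough sketch in plain JavaScript (it runs outside p5.js), a hypothetical helper `findNearMisses` could compare the corner points of the shapes and flag pairs that are close but not exactly equal. The vertex lists are copied from three of the head shapes in the sketch. The 2-pixel tolerance is an assumption.

```javascript
// Hypothetical helper, not part of the original sketch.
// Each shape is a flat array of coordinates [x1, y1, x2, y2, ...], in the same
// order the values are passed to quad() or triangle().
// Returns every pair of corners from different shapes that are within
// `tolerance` pixels of each other but not exactly equal.
function findNearMisses(shapes, tolerance = 2) {
  const misses = [];
  for (let a = 0; a < shapes.length; a++) {
    for (let b = a + 1; b < shapes.length; b++) {
      for (let i = 0; i < shapes[a].length; i += 2) {
        for (let j = 0; j < shapes[b].length; j += 2) {
          const pointA = [shapes[a][i], shapes[a][i + 1]];
          const pointB = [shapes[b][j], shapes[b][j + 1]];
          const distance = Math.hypot(pointA[0] - pointB[0], pointA[1] - pointB[1]);
          // distance 0 means the corners already match exactly
          if (distance > 0 && distance <= tolerance) {
            misses.push({ shapeA: a, shapeB: b, pointA, pointB });
          }
        }
      }
    }
  }
  return misses;
}

// Corner points copied from three of the head shapes in the sketch.
const headShapes = [
  [256, 322, 350, 331, 326, 362, 274, 368],
  [207, 346, 257, 322, 274, 368, 224, 363],
  [207, 241, 257, 323, 207, 346],
];

for (const miss of findNearMisses(headShapes)) {
  console.log(`shape ${miss.shapeA} (${miss.pointA}) vs shape ${miss.shapeB} (${miss.pointB})`);
}
```

For these three shapes the helper flags the corner at (256,322) in one quad against (257,322) in its neighbour and (257,323) in the triangle, while exact matches such as the shared (274,368) are not reported.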


```javascript
// Yoshi Torralva
// Section E
// yrt@andrew.cmu.edu
// Project-01

function setup() {
  createCanvas(600,600);
}

function draw() {
  background(249,33,33);

  // background square that follows the mouse for added depth
  fill(234,24,24);
  rect(mouseX,mouseY,500,500);

  // shirt
  fill(51,3,14);
  noStroke();
  quad(122,517,374,408,374,600,91,600);
  quad(374,408,507,432,579,600,292,600);

  // right ear
  fill(160,2,18);
  noStroke();
  quad(384,267,392,277,377,318,340,326);

  // hair behind the head ellipse
  fill(114,7,18);
  noStroke();
  ellipse(269,172,112,112);

  // side hair
  fill(114,7,18);
  noStroke();
  quad(200,194,225,177,218,244,197,274);

  // hair
  fill(114,7,18);
  noStroke();
  ellipse(290,163,112,112);
  ellipse(330,224,125,174);

  // neck shadow
  fill(89,4,20);
  noStroke();
  quad(224,363,360,314,350,385,237,433);

  // head (shapes with only three corners use triangle(), since quad() needs four)
  fill(191,16,25);
  noStroke();
  quad(256,322,350,331,326,362,274,368);
  quad(207,346,257,322,274,368,224,363);
  triangle(207,241,257,323,207,346);
  quad(197,273,207,241,207,346,199,323);
  triangle(225,273,297,283,256,322);
  quad(250,323,353,227,357,270,324,329);
  quad(305,359,357,270,370,292,350,331);

  // ellipse for head
  fill(191,16,25);
  noStroke();
  ellipse(281,229,146,135);

  // neck
  fill(191,16,25);
  noStroke();
  quad(254,390,334,371,374,408,220,477);
  triangle(334,371,357,328,351,387);
  quad(219,477,374,407,345,459,269,483);

  // hair in front of head
  fill(114,7,18);
  noStroke();
  ellipse(324,172,106,76);

  // nose shadow
  fill(160,2,8);
  noStroke();
  quad(276,237,283,245,286,279,278,269);
  quad(261,241,263,238,254,266,246,276);

  // sunglasses
  fill(114,7,18);
  noStroke();
  quad(206,225,264,233,250,268,203,260);
  quad(279,235,338,240,334,275,284,273);
  quad(240,230,361,242,360,249,239,237);

  // eyebrows
  fill(114,7,18);
  noStroke();
  quad(221,213,236,210,231,219,214,223);
  quad(236,210,261,217,260,226,231,219);
  quad(286,220,326,218,327,227,289,229);
  triangle(326,218,342,233,327,227);

  // nostrils
  fill(160,2,8);
  noStroke();
  ellipse(254,280,8,4);
  ellipse(274,282,8,4);

  // upper lip
  fill(160,2,8);
  noStroke();
  quad(243,309,257,306,257,313,233,316);
  quad(257,306,260,307,260,314,257,313);
  quad(260,307,262,306,263,313,260,314);
  quad(262,306,279,310,288,319,263,313);

  // lower lip
  fill(135,3,22);
  noStroke();
  quad(233,316,257,313,256,321,245,320);
  quad(257,313,260,314,259,321,256,321);
  quad(260,314,263,313,262,321,259,321);
  triangle(263,313,288,319,262,321);
}
```
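The background square in this sketch is drawn with its corner exactly at the mouse position. One possible variant for a subtler sense of depth, offered as an assumption rather than the approach used here, is parallax: shift the square by only a fraction of the mouse's distance from the canvas centre. The function `parallaxOffset` and its `depth` factor are hypothetical and only illustrate the idea in plain JavaScript.

```javascript
// Hypothetical variant, not the original sketch's approach.
// Returns how far a background layer should shift for a given mouse position.
// depth = 1 moves the layer as far as the mouse is from the canvas centre;
// smaller values move it less, so the layer reads as farther away.
function parallaxOffset(mouseX, mouseY, width, height, depth = 0.1) {
  return {
    x: (mouseX - width / 2) * depth,
    y: (mouseY - height / 2) * depth,
  };
}

// In a p5.js draw() this could stand in for rect(mouseX,mouseY,500,500):
//   const o = parallaxOffset(mouseX, mouseY, width, height);
//   rect(50 + o.x, 50 + o.y, 500, 500); // 50 centres a 500px square on a 600px canvas
console.log(parallaxOffset(400, 300, 600, 600));
```

With a small `depth` the square stays roughly centred and drifts gently, whereas tying its corner directly to the mouse lets it slide off the canvas.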

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)