Taryn Southern is a singer active on YouTube who creates her own content. I find her most recent album particularly interesting because it was entirely composed and produced with artificial intelligence. In an interview, Taryn explained that she used an AI platform called Amper Music to create the instrumentation for her songs. In her creative process, Taryn would decide factors such as the BPM, rhythm, and key, and the AI would generate possibilities for her. From there, she could select the pieces she enjoyed and arrange them along with the lyrics she wrote herself. She says the advantage of working with an AI is that it gives her a lot of control over what she wants, as opposed to a human partner who may not understand her intentions. However, I personally find her songs generic and lacking the emotion that moves an audience. There are many things that artificial intelligence can achieve, but I believe that music that truly conveys human sentiment should originate entirely from the minds of people.

Category: LookingOutwards-10

Shannon Ha – Looking Outwards – 10

The mi.mu gloves can be defined as a wearable musical instrument for expressive creation, composition, and performance. The gloves were created by composer and songwriter Imogen Heap, who wrote the musical score for Harry Potter and the Cursed Child. Her aim in creating them is to push innovation and share resources. She believes the gloves will allow musicians and performers to engage fans with more dynamic and visual performances, simplify the hardware that controls music (laptops, keyboards, controllers), and connect movement with sound.

Flex sensors embedded in the gloves measure the bend of the fingers, while an IMU (inertial measurement unit) measures the orientation of the wrist. All of the data from these sensors is transmitted over Wi-Fi. A vibration motor provides haptic feedback. The gloves come with software that lets the user map movements to different sounds, and these mappings can be sent out as MIDI and OSC messages.
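To make the idea of mapping a finger bend to sound more concrete, here is a minimal sketch of how a flex-sensor reading could be turned into a MIDI Control Change message. The 10-bit sensor range (0 to 1023), the controller number, and the function names are hypothetical assumptions for illustration, not the actual mi.mu software; only the message layout (a status byte of 0xB0 plus the channel, followed by two 7-bit data bytes) comes from the MIDI specification.

```python
def scale_to_midi(raw: int, raw_min: int = 0, raw_max: int = 1023) -> int:
    """Linearly map a raw sensor reading onto the 7-bit MIDI range 0-127.

    The 0-1023 default range is an assumption (a typical 10-bit ADC).
    """
    # Clamp first so noisy readings outside the calibrated range stay valid.
    raw = max(raw_min, min(raw, raw_max))
    return round((raw - raw_min) * 127 / (raw_max - raw_min))


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a 3-byte MIDI Control Change message."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channels are 0-15")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("MIDI data bytes are 0-127")
    # Status byte: 0xB0 marks Control Change; the low nibble is the channel.
    return bytes([0xB0 | channel, controller, value])


# Hypothetical: a fully bent finger (raw 1023) sent as CC 1 on channel 0.
bend = scale_to_midi(1023)
message = control_change(channel=0, controller=1, value=bend)
print(message.hex())  # b0017f
```

Clamping before scaling keeps every output a legal MIDI data byte even if a sensor drifts past its calibrated range.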

I believe this piece of technology really pushes the boundaries of computational music, as it gives musicians complete agency over electronically generated sounds through curated body movement, without having to control those sounds from a stationary piece of hardware. Performers in particular could benefit greatly from these gloves, because their artistry extends beyond music into how body language is combined with sound. As a designer, I admire how she was able to use advanced technology to create these novel experiences not only for the performer but also for the audience. There are instances where technology can hinder artistry, especially when it is overused, but I think these gloves allow musicians to connect more with their music and with how it is presented to the audience.

Shariq M. Shah – Looking Outwards 10 – Sound Art

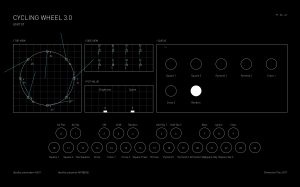

The Cycling Wheel project, by Hong Kong-based artists Keith Lam, Seth Hon, and Alex Lai, takes Marcel Duchamp's famous Bicycle Wheel, one of his readymade explorations, and transforms it into a generative light and sound instrument. To turn it into an interactive performance piece, the artists used Processing to build a custom control panel that operates on three levels: controlling music, movement, and LED lights. The system takes the movement of the bicycle wheel and uses it to generate a variety of sounds, which are further shaped by the control panel's computation. The result is a magical interplay of light, music, art, and computation that makes for a spectacular interactive art piece. It makes me think of ways that other physical movements could be translated into dynamic soundscapes, a concept similar to what I tried to do for this week's project. It is also interesting to consider how a variety of different sounds can be layered to produce an immersive soundscape, as this orchestral performance demonstrates.

Margot Gersing – Looking Outwards- 10

This week I looked at a project called Weather Thingy by Adrien Kaeser. The project is a sound controller that uses weather and climate events to modify a musical instrument and the sound it makes.

Part of why I liked this project so much is that it works in real time. It has sensors that collect data from the climate around it, and the controller interprets that data to create different sounds. The machine has a weather data collection station that includes a rain gauge, a wind vane, and an anemometer, as well as a brightness sensor. The user then uses the interface to choose which sensor to work with and turns a potentiometer to modify the data and create different sounds.
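The select-a-sensor-then-turn-a-knob workflow described above could be sketched roughly as follows: the chosen sensor's reading is normalized, and the potentiometer acts as a depth control on how strongly the weather affects the sound. The sensor names, their ranges, and the `effect_amount` function are hypothetical assumptions for illustration; the source does not describe how Weather Thingy actually scales its data.

```python
from dataclasses import dataclass


@dataclass
class Sensor:
    """A hypothetical sensor with the range it is expected to report."""
    name: str
    low: float
    high: float


# Illustrative sensors and ranges; not taken from the Weather Thingy hardware.
SENSORS = {
    "anemometer": Sensor("wind speed (m/s)", 0.0, 30.0),
    "rain_gauge": Sensor("rainfall (mm/h)", 0.0, 50.0),
    "brightness": Sensor("light level (lux)", 0.0, 1000.0),
}


def effect_amount(sensor_key: str, reading: float, pot: float) -> float:
    """Turn one sensor reading into an effect amount between 0.0 and 1.0.

    `pot` is the potentiometer position (0.0 to 1.0) used as a depth control:
    at 0.0 the weather has no influence, at 1.0 it has full influence.
    """
    sensor = SENSORS[sensor_key]
    # Normalize the reading to 0..1 and clamp out-of-range values.
    normalized = (reading - sensor.low) / (sensor.high - sensor.low)
    normalized = max(0.0, min(1.0, normalized))
    # Clamp the knob position too, then scale the weather signal by it.
    depth = max(0.0, min(1.0, pot))
    return normalized * depth


# Moderate wind (15 m/s) with the knob at half travel: 0.5 * 0.5
print(effect_amount("anemometer", 15.0, 0.5))  # 0.25
```

The resulting 0.0 to 1.0 value could then drive any synth parameter, such as a filter cutoff or a chorus depth.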

I think this project is so interesting because it works in real time and can also act as a diary. The device can store data from a specific time so that the pre-recorded data can be used later on. In this way it is almost like a window into a certain moment and what was happening then. I can see the potential of using it to show how climate change has affected the same spot over time. I can imagine a soundscape being made in one spot, then another in the same spot 20 years later, and hearing how different they are.

Crystal Xue-LookingOutwards-10

Christine Sun Kim, deaf since birth, explores her subjective experiences with sound in her work. She investigates the operations of sound and various aspects of deaf culture in her performances, videos, and drawings. She draws on elements such as body language, ASL (American Sign Language), and her own interpretations to expand the traditional scope of communication.

This particular project, called "Game of Skill 2.0," was displayed at MoMA PS1. Audiences are invited to listen to a reading of a text about the future. As participants move the game's console along a pathway, the device emits sound at a pace proportional to their movement. This physical scrubbing is the participant's direct interaction with the sound.

Cathy Dong-Looking Outwards-10

"Apparatum," commissioned by the Adam Mickiewicz Institute, is a musical and graphical installation inspired by the Polish Radio Experimental Studio, one of the first studios to produce electroacoustic music. It is the product of a digital interface meeting analog sound. The sound is generated using magnetic tape and optical components. Boguslaw Schaeffer devised a personal visual language of symbols and cues and used it to compose "Symphony – Electronic Music" for the sound engineers. The installation uses two 2-track tape loops and three one-shot linear tape samplers to produce noise and basic tones, along with analog optical generators based on spinning discs with graphical patterns.

Ghalya Alsanea -LO-10 – The Reverse Collection

An improvised performance with ten newly created instruments

The Reverse Collection (2013 – 2016)

Creator: Tarek Atoui is a Beirut-based artist and composer. What I admire about his works is that they are grounded in extensive knowledge of music history and tradition.

Photograph: Oli Cowling/Tate Photography

The Reverse Collection is an ensemble of newly created instruments that were conceived in several stages. The first stage was born out of the storage facilities of Berlin's Dahlem museum, which houses the city's ethnographic and anthropological collections. Atoui found that many of the historical instruments in the museum's stores came with hardly any information about their sociocultural provenance or any instructions on how to play them. So he invited established musicians and improvisers to play them and recorded what happened, even though the musicians had no idea how the instruments were meant to be used.

The second stage was a collaboration with instrument makers. He asked them to listen to those recordings, layered and edited, and to create new instruments on which someone could play something that sounds similar. Around eight new instruments were created and used in several performances and new compositions, called the Reverse Sessions.

Finally, the third stage, The Reverse Collection, had a new generation of ten instruments to work with. Atoui recorded several performances to build the collection, presented as a multi-channel sound work in an exhibition space that evolved over the whole duration of the show and allowed multiple associations between object, sound, space, and performance.

I chose this project because I really admire the translation of historical artifacts into modern instruments. Even though the result was a physical manifestation, the process of creating and understanding the instruments was computational.

Jai Sawkar – Looking Outwards – 10

This week, I looked at the work of Mileece Petre, an English sound artist and environmental designer who makes generative and interactive art with plants. She believes that plants are observational and interactive beings, and she makes music with them by attaching electrodes to them. She collects data from the plants in the form of electromagnetic currents, and this data is translated into code. The code is then transformed into musical notes, from which she composes music.
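As a rough illustration of how a stream of readings could become musical notes, the sketch below quantizes each reading onto a pentatonic scale. The current range (assumed to be 0 to 10 microamps), the choice of C major pentatonic, the sample values, and the `current_to_note` function are all hypothetical; the source does not describe Mileece's actual mapping from plant data to notes.

```python
# C major pentatonic over two octaves, as MIDI note numbers (60 = middle C).
# Hypothetical scale choice; any list of notes would work the same way.
PENTATONIC = [60, 62, 64, 67, 69, 72, 74, 76, 79, 81]


def current_to_note(current: float, low: float = 0.0, high: float = 10.0) -> int:
    """Quantize a reading (assumed microamps in [low, high]) to a scale note."""
    # Normalize into 0..1, clamping readings outside the expected range.
    position = (current - low) / (high - low)
    position = max(0.0, min(1.0, position))
    # Pick a scale degree; min() keeps position 1.0 from indexing past the end.
    index = min(int(position * len(PENTATONIC)), len(PENTATONIC) - 1)
    return PENTATONIC[index]


readings = [0.4, 2.5, 5.1, 9.9, 12.0]  # made-up sample values
print([current_to_note(r) for r in readings])
```

Quantizing to a pentatonic scale is one common way to keep arbitrary sensor data sounding consonant, since any combination of its notes avoids harsh semitone clashes.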

This project is super cool to me because it truly thinks outside the box. She is able to make minimalist, introspective music simply from the small currents in plants. Moreover, it shows the possibilities of what music made with computational tools can be!

Minjae Jeong-looking outwards-10-computational music

For this week's Looking Outwards, I found a TEDx talk by Ge Wang, who makes computer music. He uses a programming language called ChucK. What surprised me most at the beginning of his talk was that I expected the software to be similar to professional production software like Logic Pro X or Cubase, but he was literally "coding" to generate a sound. Although the basic demonstration was very simple, with the same technology the Stanford Laptop Orchestra performs pieces of music with each laptop acting as an instrument. One of the most attractive things about computational music to me is the ability to generate any sound, and with that ability, computational music can create whatever music or sound the "composer" wants to express.

Jina Lee – Looking Outwards 10

This week I decided to look at Keith Lam, Seth Hon, and Alex Lai's The Cycling Wheel. This project used Arduino along with Processing and other software to turn a bicycle into an instrument of light and sound. I was drawn to this project because I am currently taking an Introduction to Arduino class, and I was intrigued by how they used it, since I can barely make an LED turn on and off. When you turn the wheel of the bicycle, different aspects such as the music, the light beam, and the color of the light are altered. The bike itself becomes an instrument, and the people controlling the wheel become the musicians. I had never seen this concept before and thought it was extremely creative.

What I admire about this project is that it allows anyone to become a musician. From my limited experience with Arduino, I am assuming that they were able to alter the color of the LED strip through the motion of the wheel. I am still unsure how they were able to connect the motion of the lights with sound. I think this is a great example of how someone can combine sound with computational lights.
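One possible way the wheel's motion could drive both light and sound is to derive a color and a sound parameter from the same speed reading. The sketch below is only an illustration of that idea: the 120 RPM ceiling, the blue-to-red color ramp, the 0.5x to 2.0x playback-rate range, and the `wheel_to_outputs` function are hypothetical choices, not details of The Cycling Wheel. It uses Python's standard `colorsys` module for the hue-to-RGB conversion.

```python
import colorsys


def wheel_to_outputs(rpm: float, max_rpm: float = 120.0) -> tuple[tuple[int, int, int], float]:
    """Map wheel speed to an LED color and an audio playback rate.

    Hypothetical mapping: at rest the LEDs are blue and audio plays at 0.5x;
    at max_rpm or faster they are red and audio plays at 2.0x.
    """
    # Normalize speed to 0..1, clamping anything past the assumed maximum.
    speed = max(0.0, min(1.0, rpm / max_rpm))
    # Hue runs from 2/3 (blue) at rest down to 0.0 (red) at full speed.
    hue = (2 / 3) * (1.0 - speed)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    rgb = (round(r * 255), round(g * 255), round(b * 255))
    # Linearly interpolate the playback rate between 0.5x and 2.0x.
    rate = 0.5 + 1.5 * speed
    return rgb, rate


for rpm in (0, 60, 120):
    print(rpm, wheel_to_outputs(rpm))
```

Because color and playback rate come from the same normalized speed, the lights and the music always change together, which is one simple way to tie the two outputs to a single physical motion.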

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2020/08/stop-banner.png)