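// Shannon Ha
// sha2@andrew.cmu.edu
// Section D
// Final Project

var soundLogos = []; // sound logo files; indices 1, 2 and 4 to 9 are used
var amplitude; // p5.Amplitude analyzer, created in setup()
var level; // latest amplitude level, 0.0 to 1.0
var rotatePath = 0; // grows each frame to drive the Intel spin-out effect
var h; // mapped amplitude that shapes the epicycloid
var dY; // dot y position
var dX; // dot x position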

function preload() { // load the sound logos before setup() runs
    soundLogos[1] = loadSound('https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Apple-1-2.wav');
    soundLogos[2] = loadSound('https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Apple-2-text-2.wav');
    soundLogos[4] = loadSound('https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Intel-p2.wav');
    soundLogos[5] = loadSound('https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Microsoft-2-1.wav');
    soundLogos[6] = loadSound('https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Microsoft-3-1.wav');
    soundLogos[7] = loadSound('https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Netflix-1.wav');
    soundLogos[8] = loadSound('https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Nokia-1.wav');
    soundLogos[9] = loadSound('https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Skype-1.wav');
}

function setup() {
    createCanvas(300, 300);
    amplitude = new p5.Amplitude(); // p5.sound analyzer; listens to all playing sounds by default
}

function draw() {
    background(0);
    push();
    translate(width / 2, height / 2); // centers the epicycloid
    drawEpicy();
    pop();
    drawThreeDots();
    drawIntelEllipse();
    drawMicroGrid();
}

function keyPressed() { // assigns the number keys to their sound files
    // draw() already renders every animation each frame, so each branch only
    // starts a sound (key 2 also resets the falling dots to the center)
    if (key === '1') {
        soundLogos[1].play();
    } else if (key === '2') {
        soundLogos[2].play();
        dY = height / 2; // start the dots at the center of the canvas
        dX = width / 2;
    } else if (key === '3') {
        soundLogos[4].play();
    } else if (key === '4') {
        soundLogos[5].play();
    } else if (key === '5') {
        soundLogos[6].play();
    } else if (key === '6') {
        soundLogos[7].play();
    } else if (key === '7') {
        soundLogos[8].play();
    } else if (key === '8') {
        soundLogos[9].play();
    }
}

// animation for Apple (key 1)
function drawEpicy() { // epicycloid-style curve whose shape follows the amplitude
    var a = 60; // controls the size and curvature of the shape
    var b = a / 2; // controls the size and curvature of the shape
    level = amplitude.getLevel(); // current amplitude level, 0.0 to 1.0
    h = map(level, 0, 3, 20, 900); // scale the level up (h runs from 20 to about 313)
    var ph = h / 2; // phase offset that also follows the amplitude
    fill(h * 5, h * 10, 200); // color follows the amplitude
    noStroke();
    beginShape();
    for (var i = 0; i < 100; i++) { // 100 vertices around the curve
        var t = map(i, 0, 100, 0, TWO_PI); // angle in radians
        var x = (a + b) * cos(t) - h * cos(ph + t * (a + b) / b);
        var y = (a + b) * sin(t) - h * sin(ph + t * (a + b) / b);
        vertex(x, y);
    }
    endShape();
}

// animation for the text sound (key 2)
function drawThreeDots() { // draws three dots that fall at different speeds
    if (dY === undefined) return; // nothing to draw until key 2 sets dX and dY
    fill(255);
    noStroke();
    ellipse(dX - 50, dY, 25, 25);
    ellipse(dX, dY * 1.2, 25, 25);
    ellipse(dX + 50, dY * 1.5, 25, 25);
    dY += 10; // vertical falling
}

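function drawIntelEllipse() { // spinning ellipses for the Intel sound logo
    level = amplitude.getLevel();
    // getLevel() returns 0.0 to 1.0, so this input range of 1 to 8 extrapolates:
    // h is about -1.4 in silence and crosses 0 near a level of 0.22
    let h = map(level, 1, 8, 5, 50);
    noFill();
    stroke(0, 113, 197); // Intel blue
    strokeWeight(2);
    ellipseMode(CENTER);
    // one push()/pop() around the loop: translate() and rotate() still accumulate
    // from ellipse to ellipse (the spiral), but the style/matrix stack no longer
    // grows by up to 15 unmatched push() calls every frame
    push();
    for (var i = 0; i < 15; i++) {
        var diam = rotatePath - 30 * i; // each ellipse starts growing 15 frames after the previous one
        if (diam > 0) { // only draw ellipses whose diameter has become positive
            translate(width / 8 - 90, height / 4 - 10); // offset from the previous ellipse
            rotate(cos(2.0)); // constant angle of about -0.42 radians
            ellipse(200, 200, diam / h, 40 / h); // width and height are divided by the mapped amp
        }
    }
    pop();
    rotatePath = rotatePath + 2; // controls the speed of the spin-out effect
}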

function drawMicroGrid() { // meant to imitate the Microsoft logo grid
    level = amplitude.getLevel();
    // getLevel() returns 0.0 to 1.0, so this input range of 1 to 8 extrapolates
    // (h is about -5.7 in silence)
    let h = map(level, 1, 8, 20, 200);
    noStroke();
    rectMode(CENTER);
    for (var y = 50; y < height + 50; y += 100) { // nested for loops tile a 3 x 3 grid
        for (var x = 50; x < width + 50; x += 100) {
            fill(x, y + 100, h * 5); // red and green from position, blue from amp
            rect(x, y, h * 3, h * 3); // square size follows amp
        }
    }
}

How does it work:

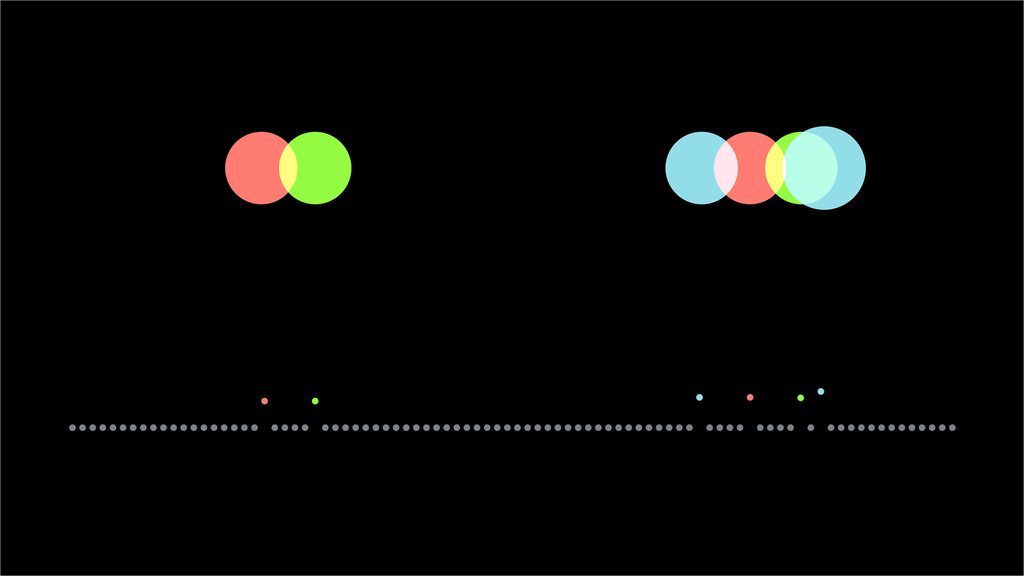

Press the number keys 1 through 8 to play familiar sound logos and watch the patterns move and change with them!

(The project has problems in Safari for reasons I haven't pinned down, so please view it in Chrome or Firefox!)
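Behind those eight keys, `keyPressed()` is a chain of `if`/`else if` branches. Below is a minimal sketch of the same key-to-sound assignment as a lookup table, written in plain JavaScript so it runs without p5.js. `KEY_TO_SOUND` and `soundIndexForKey` are hypothetical names. The indices are copied from the original branches (key 3 plays `soundLogos[4]`, and so on), and key 2 would still need its extra lines that reset `dX` and `dY`.

```javascript
// Hypothetical lookup table: number key -> index into soundLogos,
// copied from the if/else chain in keyPressed().
const KEY_TO_SOUND = {
  '1': 1, // Apple-1-2.wav
  '2': 2, // Apple-2-text-2.wav
  '3': 4, // Intel-p2.wav
  '4': 5, // Microsoft-2-1.wav
  '5': 6, // Microsoft-3-1.wav
  '6': 7, // Netflix-1.wav
  '7': 8, // Nokia-1.wav
  '8': 9, // Skype-1.wav
};

// Returns the soundLogos index for a key, or undefined for unmapped keys.
// hasOwnProperty keeps keys like 'toString' from matching Object.prototype.
function soundIndexForKey(key) {
  return Object.prototype.hasOwnProperty.call(KEY_TO_SOUND, key)
    ? KEY_TO_SOUND[key]
    : undefined;
}

// Inside the p5.js sketch, keyPressed() could then shrink to (shown as a comment):
// function keyPressed() {
//   const index = soundIndexForKey(key);
//   if (index !== undefined) soundLogos[index].play();
//   if (key === '2') { dY = height / 2; dX = width / 2; }
// }

console.log(soundIndexForKey('3'));
console.log(soundIndexForKey('9'));
```

The table keeps the key assignments in one place, so adding a ninth sound logo means adding one line instead of another branch.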

In my proposal, I wanted to make some kind of sound visualization, either in the form of a game or something similar to Patatap. Sticking to my proposal, I used iconic sounds that we recognize from our day-to-day use of technology. I realized how easy it is to overlook how much these sounds shape the way we use technology, so I ended up creating a sonic art piece that draws patterns according to the amplitude measured from the sound files as they play.
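The Apple pattern (`drawEpicy()`) turns the amplitude into a shape in two steps. First, `map(level, 0, 3, 20, 900)` scales the level into `h`. Then `h` sets both the pen offset and the phase of the curve. Below is a minimal plain-JavaScript sketch of those two steps. It assumes p5's `map()` is the usual linear remap without clamping. `epicyPoints` is a hypothetical helper, and `a = 60`, `b = 30` and the 100 samples come from the sketch code.

```javascript
// Hypothetical helper reproducing the vertex math of drawEpicy() outside p5.js.
// level: amplitude level, 0.0 to 1.0 as returned by p5.Amplitude.getLevel().
function epicyPoints(level, samples = 100) {
  const a = 60; // as in drawEpicy()
  const b = a / 2;
  // same as map(level, 0, 3, 20, 900): linear remap, no clamping
  const h = 20 + (level - 0) * (900 - 20) / (3 - 0);
  const ph = h / 2; // phase offset that also grows with amplitude
  const points = [];
  for (let i = 0; i < samples; i++) {
    const t = (i / samples) * 2 * Math.PI; // map(i, 0, 100, 0, TWO_PI)
    const x = (a + b) * Math.cos(t) - h * Math.cos(ph + t * (a + b) / b);
    const y = (a + b) * Math.sin(t) - h * Math.sin(ph + t * (a + b) / b);
    points.push({ x, y });
  }
  return { h, points };
}

const quiet = epicyPoints(0);
const loud = epicyPoints(1);
console.log(quiet.h, loud.h.toFixed(2)); // 20 313.33
```

A larger `h` both pushes the curve outward and shifts its phase, so the shape swells and turns as a sound gets louder.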

My final project does not quite achieve the fidelity I originally intended for my sonic art: the visuals are a bit too noisy, and I wanted a simple yet sophisticated look. I spent quite a bit of time figuring out how to use the sound library in p5.js and how to map the amplitude to each of the patterns, so I had some difficulty debugging the code as a whole.
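One mapping detail may add to the noise. `p5.Amplitude.getLevel()` returns a value between 0.0 and 1.0, but `drawIntelEllipse()` and `drawMicroGrid()` map the level from an input range of 1 to 8. p5's `map()` only clamps when its optional sixth argument (`withinBounds`) is `true`, so those levels are extrapolated below the output range. In the Intel pattern, the ellipse sizes are divided by `h`, and `h` passes through zero. The plain-JavaScript sketch below checks this. `mapRange` is a hypothetical stand-in for p5's `map()`, and the 0-to-1 input range in the loop is a suggested alternative, not what the project uses.

```javascript
// Hypothetical stand-in for p5's map(value, start1, stop1, start2, stop2, withinBounds).
function mapRange(value, start1, stop1, start2, stop2, withinBounds = false) {
  const mapped = start2 + (value - start1) * (stop2 - start2) / (stop1 - start1);
  if (!withinBounds) return mapped;
  // clamp to the output range, whichever direction it runs
  const lo = Math.min(start2, stop2);
  const hi = Math.max(start2, stop2);
  return Math.min(Math.max(mapped, lo), hi);
}

// getLevel() stays between 0.0 and 1.0, so sample that range.
for (const level of [0, 0.25, 0.5, 1]) {
  const intelH = mapRange(level, 1, 8, 5, 50);  // as in drawIntelEllipse()
  const gridH = mapRange(level, 1, 8, 20, 200); // as in drawMicroGrid()
  const fittedH = mapRange(level, 0, 1, 5, 50); // suggested 0-to-1 input range
  console.log(level, intelH.toFixed(2), gridH.toFixed(2), fittedH.toFixed(2));
}
```

In silence, the Intel value comes out around -1.4 and the grid value around -5.7. With a 0-to-1 input range, the same levels stay between 5 and 50.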

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](../../wp-content/uploads/2020/08/stop-banner.png)