sketch

I got this week’s project inspiration from the Facebook emojis and decided to animate them with sound. Clicking an emoji plays the sound for its mood. The hardest part of this project was figuring out how to use a local host and upload the sketch to WordPress, but overall, it was really fun!

/*

Min Ji Kim Kim

Section A

mkimkim@andrew.cmu.edu

Project-10

*/

var laugh;

var wow;

var crying;

var angry;

function preload() { //load sound files

laugh = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/10/laugh.wav");

wow = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/10/wow.wav");

crying = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/10/crying.wav");

angry = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/10/angry.wav");

}

function setup() {

createCanvas(480, 300);

noStroke();

//create different background colors

fill("#184293"); //laughing emoji

rect(0, 0, width / 4, height);

fill("#05B5C3"); //wow emoji

rect(width / 4, 0, width / 4, height);

fill('#BC2D15'); //angry emoji

rect(width / 2, 0, width / 4, height);

fill(0); //crying emoji

rect(width * 3 / 4, 0, width / 4, height);

}

function draw() {

noStroke();

//create 4 emoji heads

for (var i = 0; i < 4; i++) { //declare i so it is not an implicit global

fill("#FBD771");

circle(i * width / 4 + 60, height / 2, 90);

}

//laughing emoji

//eyes

stroke(45);

line(35, 130, 50, 135); //left

line(35, 140, 50, 135);

line(70, 135, 85, 130); //right

line(70, 135, 85, 140);

//mouth

noStroke();

fill(45);

arc(60, 155, 55, 50, 0, PI);

fill('#F35269');

ellipse(60,170,38,20);

//wow emoji

//eyes & mouth

fill(45);

ellipse(160, 140, 13, 20);

ellipse(200, 140, 13, 20);

ellipse(180, 170, 25, 35);

//eyebrows

noFill();

stroke(45);

strokeWeight(3);

curve(130, 180, 152, 125, 166, 120, 140, 120);

curve(170, 140, 193, 120, 207, 125, 200, 150);

//angry emoji

//eyebrows

stroke(45);

strokeWeight(4);

line(270, 150, 290, 155);

line(310, 155, 330, 150);

//eyes

fill(45);

circle(283, 157, 5);

circle(318, 157, 5);

//mouth

ellipse(300,170,20,3);

//crying emoji

//eyes

ellipse(400, 150, 10, 12);

ellipse(440, 150, 10, 12);

//eyebrows

noFill();

stroke(45);

strokeWeight(3);

curve(410, 130, 392, 140, 405, 135, 450, 160);

curve(410, 150, 435, 135, 448, 140, 450, 165);

//mouth

arc(420, 175, 20, 15, PI, TWO_PI);

noStroke();

//tear

fill("#678ad6");

circle(445, 185, 15);

triangle(438, 182, 445, 165, 452, 182);

}

function mousePressed() {

if(mouseX < width / 4) { //play laughing sound

laugh.play();

}

if(mouseX >= width / 4 && mouseX < width / 2) { //play wow sound

wow.play();

}

if(mouseX >= width / 2 && mouseX < width * 3 / 4) { //play angry sound

angry.play();

}

if(mouseX >= width * 3 / 4) { //play crying sound

crying.play();

}

}
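The click handling above checks each quarter of the canvas with its own `if` statement. As a rough sketch of an alternative (not the code this project actually uses), the column under the mouse could be computed once and used to pick the sound. The helper name `regionIndex` and the `sounds` array are hypothetical and only for illustration; the order of the array assumes the same left-to-right layout as the background strips drawn in `setup()`.

```javascript
// Hypothetical helper, not part of the original sketch.
// Returns which of regionCount equal-width vertical strips contains x,
// or -1 when x falls outside the canvas.
function regionIndex(x, canvasWidth, regionCount) {
    if (x < 0 || x >= canvasWidth) {
        return -1; // click landed outside the canvas
    }
    var index = Math.floor(x / (canvasWidth / regionCount));
    // guard against floating-point rounding at the right edge
    return Math.min(index, regionCount - 1);
}

// Possible use inside the sketch (assumes the sound variables from preload()):
// function mousePressed() {
//     var sounds = [laugh, wow, angry, crying]; // left-to-right strip order
//     var index = regionIndex(mouseX, width, sounds.length);
//     if (index >= 0) {
//         sounds[index].play();
//     }
// }
```

With this approach a click exactly on a strip boundary belongs to the strip on its right, and clicks outside the canvas play nothing.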


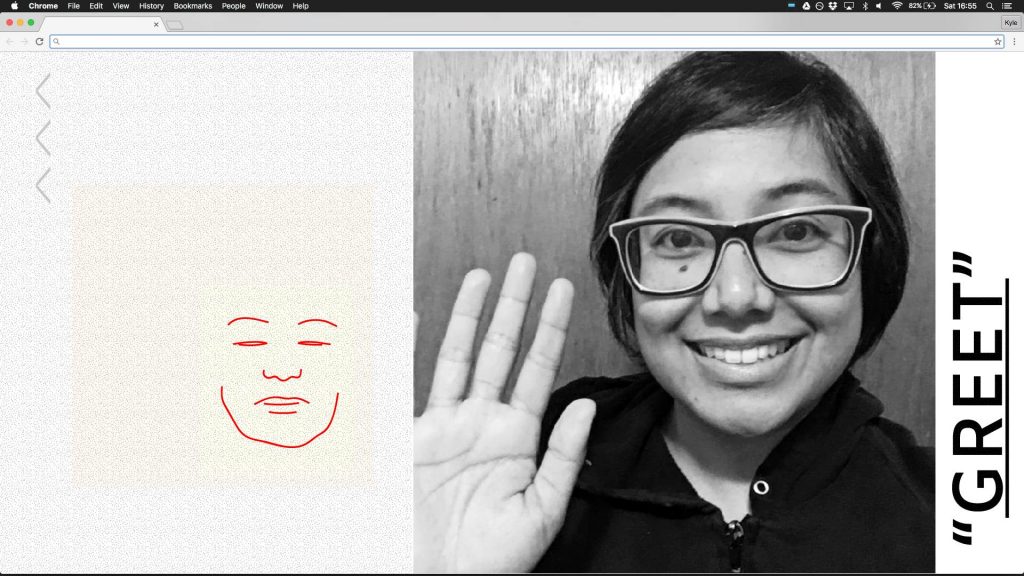

This is the original image

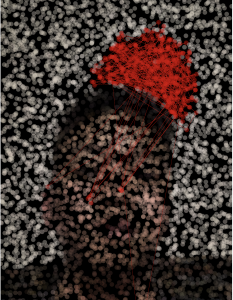

This is the original image This is what has been generated by the algorithm

This is what has been generated by the algorithm