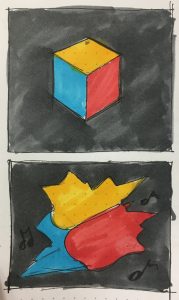

For this project, we (Elena Deng and Dani Delgado) wanted to collaborate on something that combines user interaction, sound, and generative visuals into an interesting digital experience. Our plan is to start by displaying a still image (we are thinking of a graphic cube but are still entertaining other possibilities) that changes slightly when the user presses certain keys. The interaction would work like this: each key has an assigned sound and “cube distortion.” When the user presses a key, a note plays and the visual changes slightly, so that by the time the user has played a full “song,” the visual has morphed considerably. One idea we had is to treat pitches differently: with a lower pitch, the visual would retain the change that the note made, while with a higher pitch, the visual would return to the state it was in before the key was pressed.
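
To make the key-to-distortion idea concrete, here is a minimal sketch of just the state logic, with no drawing or audio. It is written in Python for illustration and is not our actual implementation. The key map, the frequencies, the distortion amounts, and the 300 Hz cutoff between "low" and "high" pitches are all placeholder assumptions, as are the names `KEY_MAP`, `PITCH_THRESHOLD_HZ`, and `CubeState`.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical key map: key -> (note frequency in Hz, distortion amount).
# These values are placeholders chosen only for illustration.
KEY_MAP = {
    "a": (220.00, 0.10),
    "s": (261.63, 0.15),
    "d": (440.00, 0.20),
    "f": (523.25, 0.25),
}

# Assumed cutoff: notes below this count as "low", the rest as "high".
PITCH_THRESHOLD_HZ = 300.0


@dataclass
class CubeState:
    retained: float = 0.0   # distortion kept from low-pitched notes
    temporary: float = 0.0  # distortion shown only while a high note is held

    @property
    def distortion(self) -> float:
        """Total distortion a renderer would apply to the cube."""
        return self.retained + self.temporary

    def key_pressed(self, key: str) -> Optional[float]:
        """Apply the key's distortion; return the frequency to play, if any."""
        if key not in KEY_MAP:
            return None
        freq, amount = KEY_MAP[key]
        if freq < PITCH_THRESHOLD_HZ:
            self.retained += amount  # low pitch: the change persists
        else:
            self.temporary = amount  # high pitch: the change is temporary
        return freq

    def key_released(self, key: str) -> None:
        """Releasing a high-pitched key restores the pre-press state."""
        if key in KEY_MAP and KEY_MAP[key][0] >= PITCH_THRESHOLD_HZ:
            self.temporary = 0.0
```

Under these assumptions, pressing and releasing `"a"` leaves the cube permanently distorted by its amount, while pressing `"d"` adds distortion only until the key is released. A drawing loop would read `distortion` each frame, and the returned frequency would be passed to whatever plays the note.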

![[OLD FALL 2018] 15-104 • Introduction to Computing for Creative Practice](wp-content/uploads/2020/08/stop-banner.png)