
//Carly Sacco

//Section C

//csacco@andrew.cmu.edu

//Project 10: Interactive Sonic Sketch

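var x, y, dx, dy;

//sounds are loaded in preload(); osc is created in soundSetup()
var bubble, boatHorn, bird, water, osc;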

function preload() {

bubble = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/11/bubble-3.wav");

boatHorn = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/11/boat.wav");

bird = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/11/bird.wav");

water = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/11/water-2.wav");

}

function setup() {

createCanvas(640, 480);

x = 200;

y = 40;

dx = 0;

dy = 0;

useSound();

}

function soundSetup() {

osc = new p5.TriOsc();

osc.freq(880.0); //pitch

osc.amp(0.1); //volume

}

function draw() {

background(140, 216, 237);

//ocean

noStroke();

fill(26, 141, 173);

rect(0, height / 2, width, height / 2);

//fish head

fill(50, 162, 168);

noStroke();

push();

translate(width / 2, height / 2);

rotate(PI / 4);

rect(200, -100, 100, 100, 20);

pop();

fill(184, 213, 214);

noStroke();

push();

translate(width / 2, height / 2);

rotate(PI / 4);

rect(215, -85, 70, 70, 10);

pop();

//fish eyes

fill('white');

ellipse(520, 355, 15, 25);

ellipse(545, 355, 15, 25);

fill('black');

ellipse(520, 360, 10, 10);

ellipse(545, 360, 10, 10);

//fish mouth

fill(227, 64, 151);

noStroke();

push();

translate(width / 2, height / 2);

rotate(PI / 4);

rect(240, -60, 40, 40, 10);

pop();

fill(120, 40, 82);

ellipse(533, 395, 30, 30);

//fins

fill(209, 197, 67);

quad(565, 365, 590, 325, 590, 430, 565, 400);

quad(500, 365, 500, 400, 475, 430, 475, 325);

//bubbles

var bub = random(10, 40);

fill(237, 240, 255);

ellipse(575, 315, bub, bub);

ellipse(550, 275, bub, bub);

ellipse(580, 365, bub, bub);

x += dx;

y += dy;

//boat

fill('red');

quad(40, 200, 250, 200, 230, 260, 60, 260);

fill('white');

rect(100, 150, 80, 50);

fill('black');

ellipse(110, 165, 15, 15);

ellipse(140, 165, 15, 15);

ellipse(170, 165, 15, 15);

//birds

noFill();

stroke(141, 160, 166);

strokeWeight(5);

arc(475, 75, 75, 60, PI * 5 / 4, 2 * PI);

arc(550, 75, 75, 60, PI, 2 * PI - PI / 5);

arc(550, 100, 50, 35, PI * 5 / 4, 2 * PI);

arc(600, 100, 50, 35, PI, 2 * PI - PI / 5);

arc(430, 125, 50, 35, PI * 5 / 4, 2 * PI);

arc(480, 125, 50, 35, PI, 2 * PI - PI / 5);

//waterlines

stroke(89, 197, 227);

bezier(300, 400, 250, 300, 200, 500, 50, 400);

bezier(50, 350, 75, 500, 250, 200, 300, 375);

}

function mousePressed() {

//plays boat horn if clicked in the upper-left quadrant (boat)

if (mouseX < width / 2 && mouseY < height / 2) {

boatHorn.play();

}

//plays bubbles if clicked in the lower-right quadrant (fish)

if (mouseX > width / 2 && mouseY > height / 2) {

bubble.play();

}

//plays birds if clicked in the upper-right quadrant (birds)

if (mouseX > width / 2 && mouseY < height / 2) {

bird.play();

}

//plays water if clicked in the lower-left quadrant (waterlines)

if (mouseX < width / 2 && mouseY > height / 2) {

water.play();

}

}

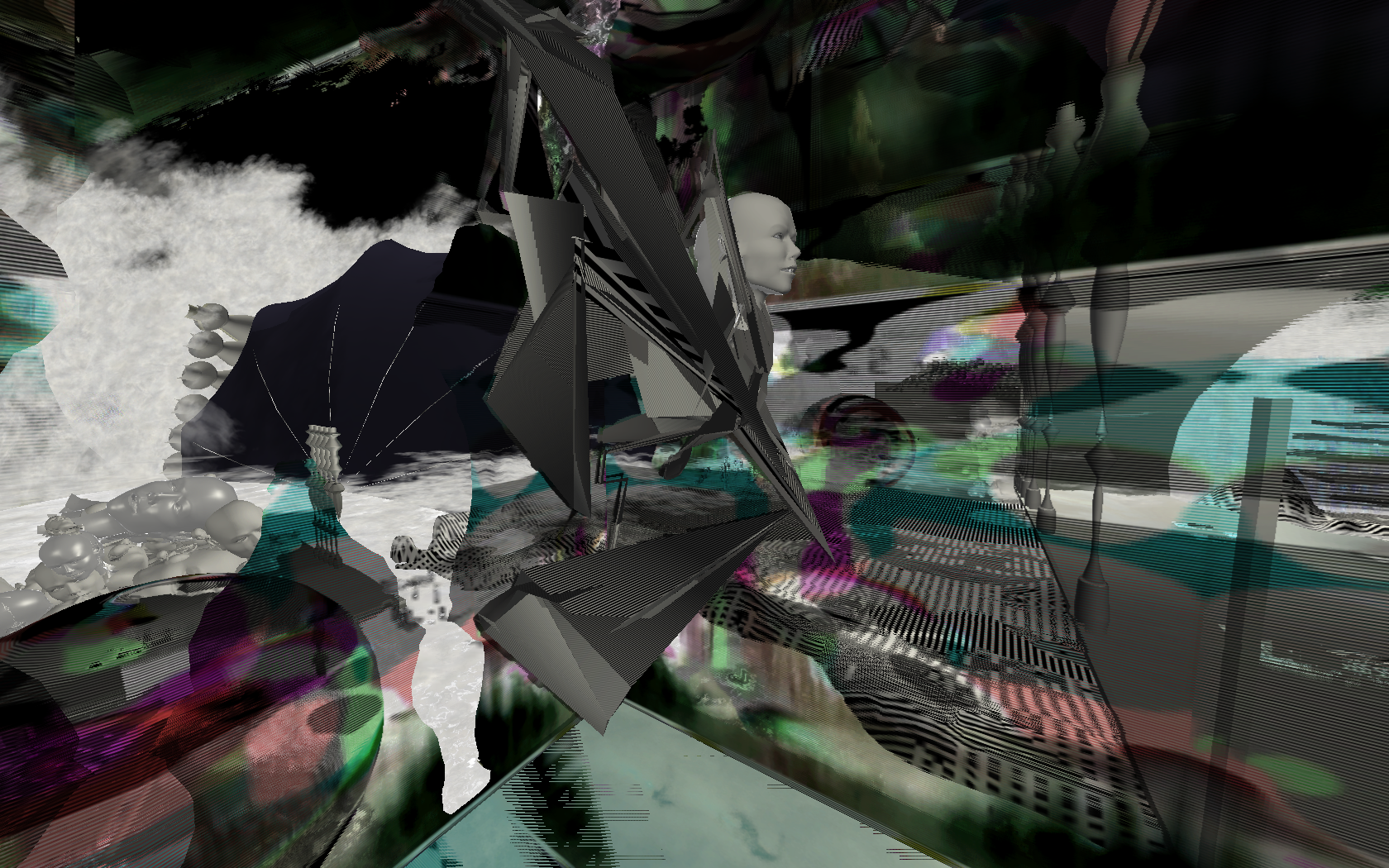

For this week's project, I started from my Project 3, which was the fish and bubbles. I wanted more than just a bubble sound, so I added a boat, birds, and a water noise. I kept the interaction simple: the canvas is split into four quadrants, and clicking in the quadrant closest to an icon plays the associated sound.
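The quadrant check could also be pulled out into a small helper, which makes the click-to-sound mapping easier to read and test. This is only a sketch: `soundForClick` is a hypothetical name that is not part of the original code, and it returns the name of the sound variable rather than playing anything. Unlike the original strict comparisons, it treats a click exactly on a midline as belonging to the right or bottom half, which is an assumption made here for simplicity.

```javascript
// Hypothetical helper (not in the original sketch): given a click position
// and the canvas size, return the name of the sound for that quadrant.
function soundForClick(mx, my, w, h) {
  var left = mx < w / 2; // true for the left half of the canvas
  var top = my < h / 2;  // true for the upper half of the canvas

  if (left && top) return "boatHorn"; // upper left: boat
  if (!left && top) return "bird";    // upper right: birds
  if (left && !top) return "water";   // lower left: waterlines
  return "bubble";                    // lower right: fish
}
```

Inside `mousePressed()`, the returned name could then be used to choose which loaded sound to call `play()` on, for example by looking it up in an object that maps names to the sounds from `preload()`.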
