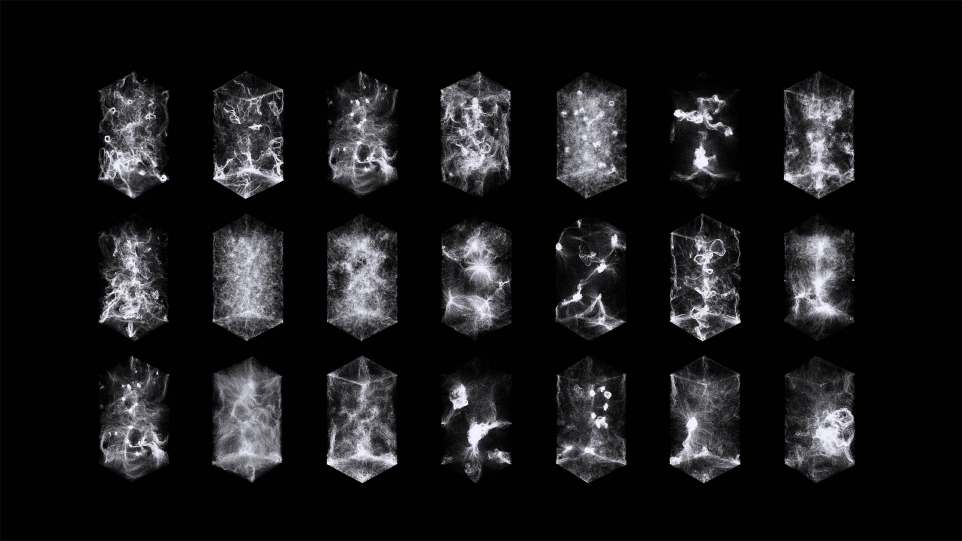

For this week’s Looking Outwards, I discovered an audiovisual installation called “Multiverse” created by fuseworks. The installation explores the evolution of possible universes through haunting generative visuals and sound, and strives to give form to the theory of the multiverse: an infinite number of universes coexisting in parallel outside of space-time.

The video presents the installation almost like a digital painting, with beautiful visuals accompanied by audio. The installation tries to imagine the birth and death of infinite parallel universes, and this “narrative” is based on a scientific theory by the American theoretical physicist Lee Smolin. From the collapse of black holes come their descendants, new universes whose parameters and physical laws are constantly tweaked and modified. The installation tries to create intimacy between the art and the viewer, yet it also sets up a hierarchy between the two: an impermanent, vulnerable human figure versus a vast, impenetrable universe.

The visuals are generated entirely by software developed in openFrameworks, which interacts with a generative soundtrack system built in Ableton Live and Max/MSP. In the simulation, the physical laws are constantly being adjusted, which leads to the origin of a “new universe”. After thirty minutes, the previous sequences “evolve” and produce an endless variety of new outcomes. The creator of this installation explains: “Particularly, the particles react with each other and with the surrounding space, changing the information perceived by modifying a vector field that stores the values within a voxel space. The strategy involved the massive use of shader programs that maximize the hardware performance and optimize the graphics pipeline on the GPU.”
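
To get a feel for what that quote might mean, here is a minimal Python sketch of particles that read from and write to a vector field stored in a voxel grid. This is only my own illustration, not the installation's code: the real piece runs as GPU shaders in openFrameworks, and the grid size, the `deposit` and `decay` values, and every function name here are assumptions I made up for the example.

```python
# Hypothetical sketch: particles steered by, and writing back into,
# a vector field stored in a voxel grid. All names and constants are assumptions.

def make_field(size):
    # One 3D vector per voxel, all starting at zero.
    return [[[[0.0, 0.0, 0.0] for _ in range(size)]
             for _ in range(size)]
            for _ in range(size)]

def voxel_of(pos, size):
    # Wrap the position into the grid and return integer voxel indices.
    return tuple(int(c % size) % size for c in pos)

def step(particles, field, size, deposit=0.1, decay=0.95):
    for p in particles:
        # 1. Read the field at the particle's voxel and let it steer the particle.
        i, j, k = voxel_of(p["pos"], size)
        for a in range(3):
            p["vel"][a] += field[i][j][k][a]
        # 2. Move the particle, wrapping around the edges of the grid.
        for a in range(3):
            p["pos"][a] = (p["pos"][a] + p["vel"][a]) % size
        # 3. Write a fraction of the particle's velocity into its new voxel,
        #    so particles that pass through later are influenced by it.
        i, j, k = voxel_of(p["pos"], size)
        for a in range(3):
            field[i][j][k][a] += p["vel"][a] * deposit
    # 4. Let the whole field fade a little so old traces die out.
    for plane in field:
        for row in plane:
            for vec in row:
                for a in range(3):
                    vec[a] *= decay

if __name__ == "__main__":
    size = 8
    field = make_field(size)
    particles = [{"pos": [1.5, 1.5, 1.5], "vel": [1.0, 0.0, 0.0]}]
    for _ in range(5):
        step(particles, field, size)
    print(particles[0]["pos"])
```

The particles never touch each other directly in this sketch. They only interact through the traces they leave in the field, which is one simple way to read “react with each other and with the surrounding space”.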

I really admire the creativity of this installation, but what amazes me most is that these beautiful visuals are generated purely by software. The creators provide the framework and then allow the program to develop freely from there and present its own creativity. I wish I had the chance to see the installation in real life.
