```javascript
var star = []; // stores the star objects
var ufo = []; // stores the UFO objects
var moonY = 400; // y-coordinate where the moon surface begins

function setup() {
  createCanvas(480, 480);

  // Pushes 20 background stars onto the array
  for (var i = 0; i < 20; i++) {
    star.push(makeStar());
  }
}

function draw() {
  noStroke();
  background(0);

  // Draws the background stars (random location, size, and color)
  for (var i = 0; i < star.length; i++) {
    var s = star[i];
    s.draw();
  }

  // Draws the sun in the top right
  fill(255, 220, 70);
  circle(410, 50, 60);

  // Draws each UFO and moves it left; new UFOs start offscreen to the right
  for (var u = 0; u < ufo.length; u++) {
    var p = ufo[u];
    p.draw();
    p.move();
  }

  removeUFO(); // Removes UFOs once they have left the canvas on the left
  addUFO(); // Occasionally adds a new UFO

  // Draws the moon surface and two craters at the bottom of the canvas
  fill(220);
  rect(0, moonY, width, height - moonY);
  fill(180);
  ellipse(30, 460, 180, 40);
  ellipse(300, 420, 220, 25);
}

// Returns the star object that gets pushed into the array
function makeStar() {
  return {x: random(0, 410), y: random(moonY) - 20, size: random(10),
          draw: drawStar, color: color(250, 200, random(255))};
}

// Draws a star with its random color, location, and size
function drawStar() {
  fill(this.color);
  circle(this.x, this.y, this.size);
}

// Returns the UFO object that gets pushed into the array
function makeUFO() {
  return {x: width + 100, y: random(moonY), width: random(60, 130),
          draw: drawUFO, color: color(random(255), random(255), random(255)),
          speed: random(-2, -0.5), move: moveUFO};
}

// Draws a UFO: a colored dome on top of a light gray saucer
function drawUFO() {
  fill(this.color);
  ellipse(this.x, this.y - 10, this.width / 2, 30);
  fill(240);
  ellipse(this.x, this.y, this.width, 20);
}

// Adds a UFO only with a small chance each frame, so the screen isn't
// flooded with 60 new UFOs a second
function addUFO() {
  var newUFOChance = 0.007;
  if (random(0, 1) < newUFOChance) {
    ufo.push(makeUFO());
  }
}

// Takes UFOs off the array once they are fully offscreen on the left
function removeUFO() {
  var ufoToKeep = [];
  for (var i = 0; i < ufo.length; i++) {
    if (ufo[i].x > 0 - ufo[i].width) {
      ufoToKeep.push(ufo[i]);
    }
  }
  ufo = ufoToKeep;
}

// Moves a UFO from right to left (speed is always negative)
function moveUFO() {
  this.x = this.x + this.speed;
}
```

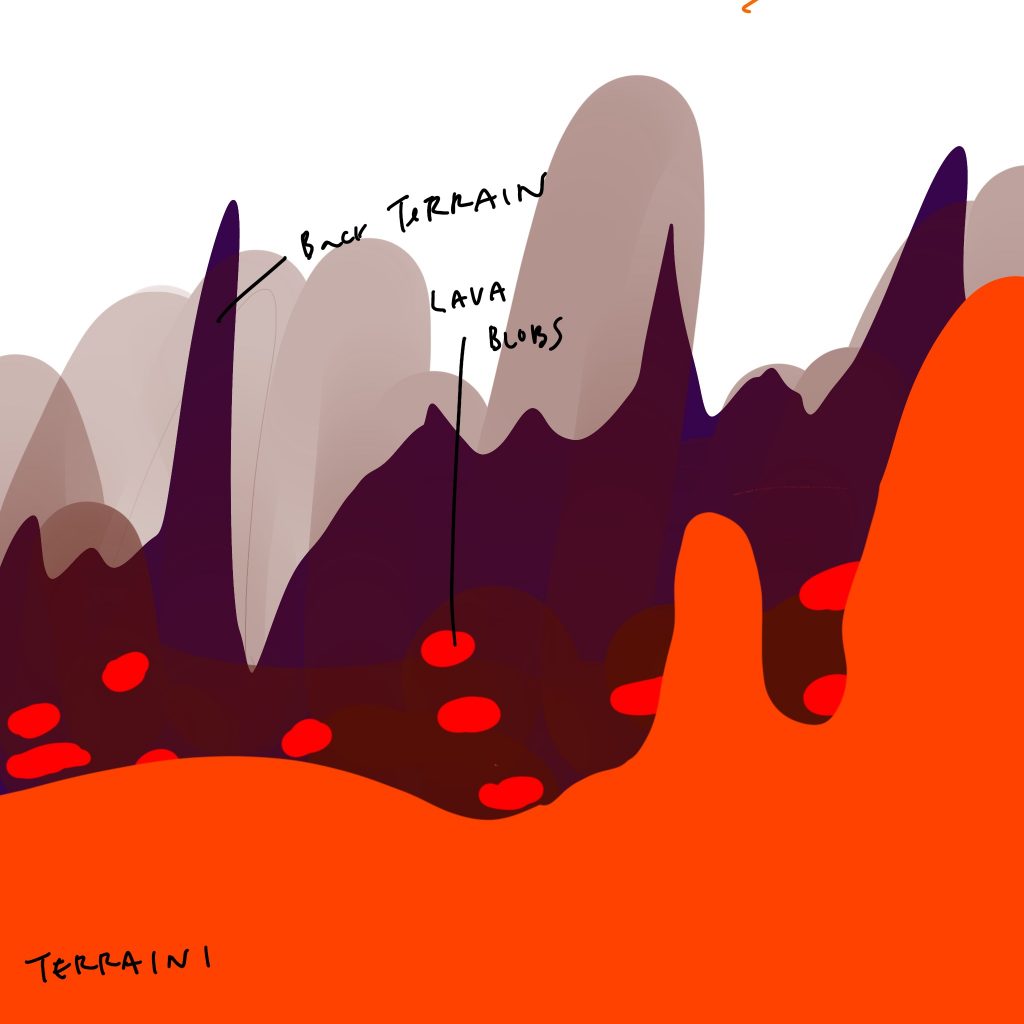

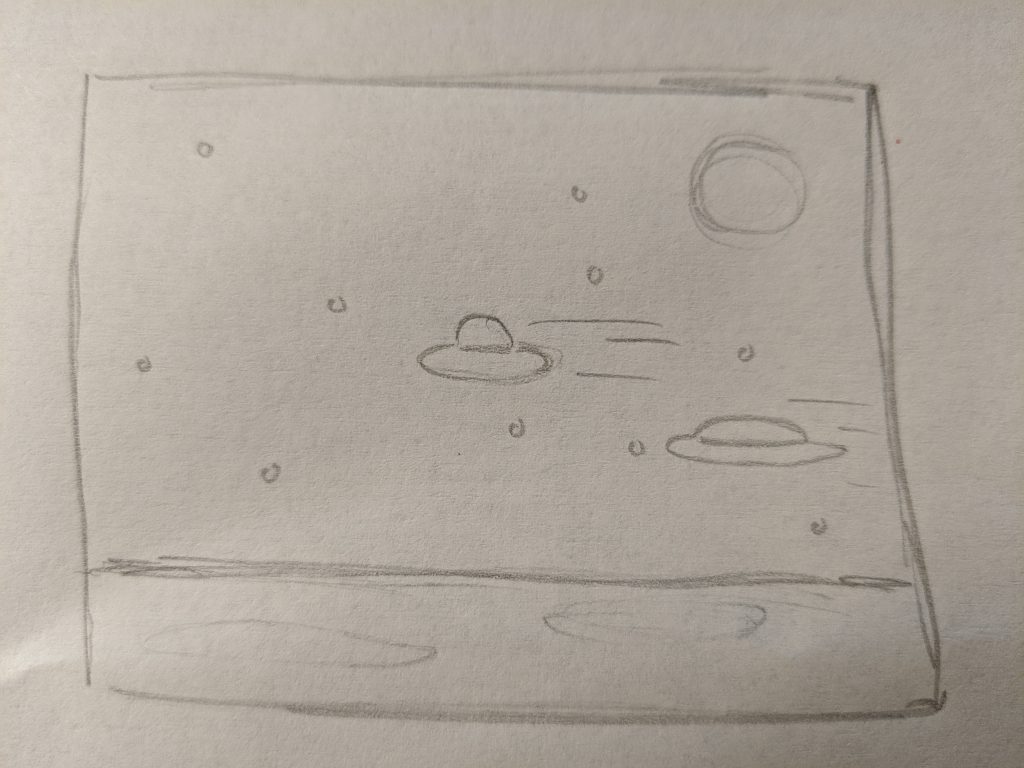

For this project I didn’t really create the illusion that the viewer is moving; instead, I went by the project’s other name, “stuff passing by.” I wanted this project to feel like you were standing on the moon watching UFOs fly across space. UFOs of random color, size, and speed fly across the screen from right to left, and the stars in the background are different each time you refresh the page. The hardest part of this project for me was keeping track of all the functions I had to write to fulfill the project’s requirements. Overall, I’m pretty proud of how true to my original idea I was able to stay. While the sketch above is pretty simple, I think it translated very well into an animated project.
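How often UFOs appear and how long each one stays on screen can be estimated with a little arithmetic. The snippet below is plain JavaScript (no p5.js needed) and is only a rough sketch. It assumes the sketch runs at p5.js’s default target of 60 frames per second, and the constant and function names are hypothetical helpers that are not part of the original sketch. The numbers come from the code: `newUFOChance = 0.007`, a 480-pixel-wide canvas, a spawn position of `width + 100`, widths from `random(60, 130)`, and speeds from `random(-2, -0.5)`.

```javascript
// Rough timing estimates for the UFO sketch, in plain JavaScript (no p5.js).
// Assumption: the sketch runs at p5.js's default target of 60 frames/second.
// The names below are hypothetical helpers, not part of the original sketch.
const FRAME_RATE = 60;      // assumed frames per second
const CANVAS_WIDTH = 480;   // from createCanvas(480, 480)
const SPAWN_CHANCE = 0.007; // newUFOChance in addUFO()

// addUFO() makes one random draw per frame, so the expected number of
// new UFOs per second is the per-frame chance times the frame rate.
function expectedSpawnsPerSecond(chance, fps) {
  return chance * fps;
}

// A UFO starts at x = width + 100, moves |speed| pixels per frame, and is
// dropped by removeUFO() once x <= -ufoWidth, so it must cover
// width + 100 + ufoWidth pixels before it is removed.
function framesToCross(canvasWidth, ufoWidth, speed) {
  const distance = canvasWidth + 100 + ufoWidth;
  return Math.ceil(distance / Math.abs(speed));
}

const perSecond = expectedSpawnsPerSecond(SPAWN_CHANCE, FRAME_RATE);
console.log("new UFOs per second:", perSecond);      // about 0.42
console.log("seconds between UFOs:", 1 / perSecond); // about 2.4

// Narrowest UFO at top speed vs. widest UFO at the slowest speed
const fastest = framesToCross(CANVAS_WIDTH, 60, -2);    // 320 frames
const slowest = framesToCross(CANVAS_WIDTH, 130, -0.5); // 1420 frames
console.log("fastest crossing (s):", fastest / FRAME_RATE); // about 5.3
console.log("slowest crossing (s):", slowest / FRAME_RATE); // about 23.7
```

Under these assumptions a new UFO shows up roughly once every 2.4 seconds, while each one takes somewhere between about 5 and 24 seconds to leave the canvas, so a few UFOs are typically in flight at the same time without the screen filling up.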

![[OLD FALL 2019] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)