I was inspired by these projects:

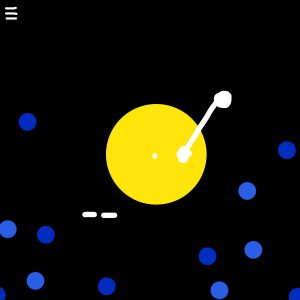

For my project, I was originally thinking of just doing something like the first video, which would basically just be integrating sound into my generative landscape. However, I wanted to do something with generative audio, and not just a simple audiovisual piece, like the glowing dot, which I personally don’t think is very exciting. I then thought of doing something with particles. Ideally, my end product’s visuals would be something like the second video, with particles that feel dynamic and “alive”. However, instead of just being static recorded segments, I want my project to be interactive, reacting to mouse position or something like that. I also want the user to be able to interact with the view/perspective, so I’m thinking about using WEBGL and orbit control.

Category: Section C

Alexandra Kaplan – Project 12 – Proposal

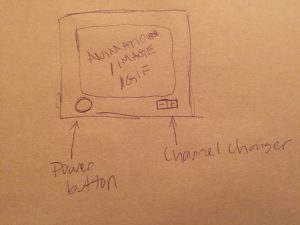

For my final project, I am planning on making an interactive tv set. It will have pre-loaded animations, images, and gifs that can be flipped through by pressing the channel changer on the tv. I think it could also be cool if the animations were different depending on the time of day, much like real television programming. I would separate the day into 4 different parts of 6 hours each for the different animations to play. I also want to incorporate an on/off button to turn ‘off’ the tv. If I have time, I would love to incorporate the camera in the computer to show people’s faces “reflected” on the tv screen when it is off, as on television sets with glass screens. Also if I have time, it would be fun to have different sounds for the different buttons on the tv.
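The time-of-day split and the channel changer described above could be sketched roughly as follows. This is only an illustration: the function names `programmingBlock` and `nextChannel` and the block labels are hypothetical, not part of the proposal.

```javascript
// Hypothetical labels for the four 6-hour parts of the day.
const BLOCK_NAMES = ["night", "morning", "afternoon", "evening"];

// Map an hour of the day (0-23) to one of four 6-hour blocks (0-3).
function programmingBlock(hourOfDay) {
  if (!Number.isInteger(hourOfDay) || hourOfDay < 0 || hourOfDay > 23) {
    throw new RangeError("hourOfDay must be an integer from 0 to 23");
  }
  return Math.floor(hourOfDay / 6);
}

// Advance to the next channel, wrapping back to 0 after the last one.
function nextChannel(currentChannel, channelCount) {
  return (currentChannel + 1) % channelCount;
}
```

In a p5.js sketch, the current hour could come from the built-in `hour()` function, and `nextChannel` could be called whenever the channel button is clicked.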

Alexandra Kaplan – Looking Outwards – 12

Video of Samsung’s “The Frame”

The two projects I am focusing on for this week’s Looking Outwards are Samsung’s “The Frame” and a piece called “All the Minutes”. These pieces are very different from each other, but both are somewhat connected to what I want to do for my final project, an interactive tv set. Samsung’s “The Frame”, created by the company Leo Burnett Sydney, takes place in an art gallery, but some of the paintings/photographs are actually tvs playing videos that people interact with. The videos also interact with each other. I think that it is a really fun idea, but I feel like they could have invested more in the quality of the painting videos and tried to disguise them better.

The piece ‘All the Minutes’, created by Studio Puckey, is a clock that uses tweets with a specific time in them. I think that it is much more effective than Samsung’s project, even if it is so different. It is fun to watch the different tweets as the times change throughout the day.

Connor McGaffin – Proposal

For my final project I am interested in exploring the intersection of physical aesthetics and music playback. When I go to concerts, one of the largest factors in my opinion of the performance is the quality of the visuals used to accompany the artists. I am interested in making this sort of experience more accessible through a music player. I will likely have several different songs to choose from and different visuals to accompany them. I would still like to ground the visuals in some literal manifestation, so I am considering using an interface which resembles a turntable.

Sometimes at parties I see people use their Chromecast or Apple TV to stream music on, which only displays the album cover and song title. I imagine this program to be used in this sort of social setting, where it is not necessarily the primary focus of the occasion, but accompanies the energy in an appropriate fashion.

Below is a rough digital sketch of what the program may look like. Centered is an abstract representation of a record player, surrounded by graphics that accompany and supplementally visualize the music.

Connor McGaffin – Looking Outwards 12

From the beginning of this course, I have been interested in exploring the intersection of the visual arts and music. Additionally, for the past five years or so I have been into vinyl records, and have reignited this passion after coming to Carnegie Mellon and joining WRCT.

I recently downloaded an app called djay2, which simulates the process of live mixing with records. It has been fun to play with, but has a slightly intimidating interface to those who have no experience with real live mixing.

The only visualizations of the music in djay2 are the scrolling bar above the turntable and the picture disks themselves. This limitation inspired me to search for more unique audio visualization, and in this process I stumbled upon the video for “Coast Modern” by Coast Modern.

I am particularly drawn to this project because of its graphic nature. Daiana Ruiz, a visual artist whose work often explores living as a person of color, created this video for Coast Modern. I feel that her visual sensibilities in this piece are aligned with my usual visual approach. I admire this video’s efforts to push two-dimensional shapes to their farthest affordances.

With both of these projects side by side, djay2 provides a very literal representation of music, while Daiana Ruiz’s work is far more interpretive. I am interested in how this more interpretive style could be incorporated into a project like djay2 while still maintaining its basic functionality.

Joanne Lee – Looking Outward 12

For my final project, I plan to create a virtual Rube Goldberg machine geared towards children ages 5-9. Because my project is not necessarily “art”, I took inspiration from a media sample and contest design. One project that I found relevant and interesting was a media sample of a simple Rube Goldberg machine being explained on Sesame Street.

Sesame Street explaining how a simple Rube Goldberg machine works.

I was unable to find the year of this media sample; however, they call it the “What Happens Next Machine”. I found it useful to see how the machine was shown and explained in very simple terms, which helped me better understand the type of terminology and level of difficulty I will implement in my actual experiment. Something that this media sample lacks is interactivity, since it is shown on television. I believe learning is done best through interaction and trial and error!


Another project I found was the Rube Goldberg exhibit at Children’s Museum of Pittsburgh. At this exhibit, children are able to activate chain reactions in order to complete everyday tasks. I think this is a great exhibit because as a child, I was very fascinated by Rube Goldberg machines and still am! It is important to foster creativity in children, and using these inefficient machines to complete everyday tasks helps to keep the creative juices flowing! I don’t think they overlooked anything large, but I would like to increase accessibility to these interactive exhibits by creating my own virtually. I hope my project turns out well.

Rjpark – Project 12 – Final Project Proposal

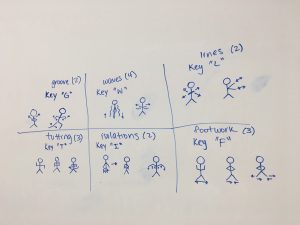

For my final project, I wanted to create something that is personally interesting to me. So, I’ve decided to create a visual and interactive computer keyboard dance generator. The objective of my project is to allow the user to see a dance move that’s generated based on the key they pressed. As you can see below, if the user presses the “f” key, he or she will see dance moves based on footwork.

For now, I plan on creating one move per key for many keys. From there, I will try to create multiple moves per key so that when the user presses the key, the moves are randomized. This will allow for more diverse dance moves to be generated.
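One possible way to structure the key-to-move lookup, including the randomized multiple-moves-per-key stage, is sketched below. The move names, the `MOVES_BY_KEY` table, and the `pickMove` function are all made up for illustration; only the "f" for footwork pairing comes from the proposal.

```javascript
// Hypothetical table of dance moves per key; a Map avoids accidental
// matches with built-in object properties such as "constructor".
const MOVES_BY_KEY = new Map([
  ["f", ["footwork shuffle", "heel-toe step"]],
  ["s", ["spin"]],
]);

// Return one move for the pressed key, chosen at random when the key has
// several moves, or null when the key has no moves assigned.
// The random source is a parameter so the choice can be made predictable.
function pickMove(pressedKey, randomSource = Math.random) {
  const moves = MOVES_BY_KEY.get(pressedKey.toLowerCase());
  if (!moves || moves.length === 0) {
    return null;
  }
  const index = Math.floor(randomSource() * moves.length);
  return moves[index];
}
```

Starting with one move per key simply means each array has a single entry; adding more entries later turns on the randomization without changing `pickMove`. In a p5.js sketch, this could be called from `keyPressed()` using the global `key` variable.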

Sophia Kim – Looking Outwards 12 – Sec C

“BAD SIGNALS” and “FUZZY BLOB” were both created in the beginning of 2018. Both projects are created by Avseoul. While both projects use webgl, “BAD SIGNALS” uses the webcam as a part of its visuals, and “FUZZY BLOB” uses the microphone to make audio. I noticed the use of vibrant colors throughout both projects. I admire how the sound transitions are shown visually through change of colors, because they are bold and noticeable. For “BAD SIGNALS,” I noticed how the glitches are responsive to sound. I admire how the glitches are not subtle, but rather exaggerated to show change. “FUZZY BLOB” allows the user to interact with the webgl not only with realtime audio, but also with mouse movement (which can make effects and indents on the ‘fuzzy blob’). Similar to “FUZZY BLOB,” “BAD SIGNALS” utilizes realtime audio to make glitches on the visuals, which come from the web camera. I admire both projects, because they get the user to interact with the visuals and audio. Also, these projects depend on the user’s interaction (i.e. the sounds made by the user/their environment, their mouse movement).

Sophia Kim – Project 12 – Proposal – Sec C

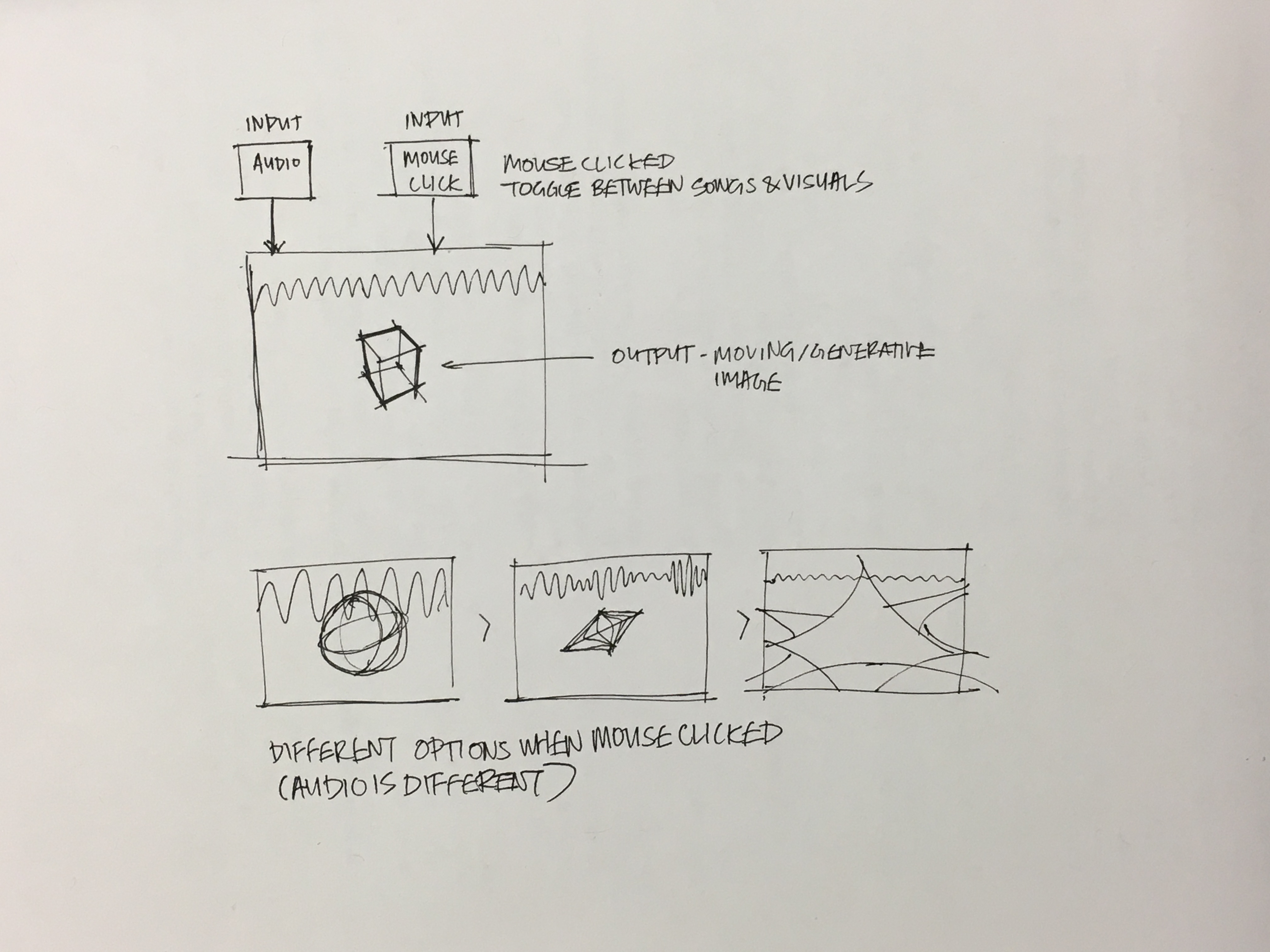

Mimi Jiao and I plan on collaborating for this final project. We want to further explore interactive sound implementation and WEBGL. We started off exploring currently existing work and discovered code that integrates sound and visuals together. They utilized the frequency and amplitude of imported songs to alter the imported image. We started off looking at how static imported images are altered based on sound with this video, and then we branched off from static images by looking at more dynamic and generative shapes through WEBGL. We found this interactive particle to be really interesting and cool and we definitely want to play around with geometries. Since our current skillsets are not developed enough to create something as complicated and fleshed out as this particle equalizer, we want to stay confined to shapes like ellipses, boxes, and custom shapes generated by basic math functions like sin, cos, and tan. From this, we want to play around with the idea of interacting with multiple human senses to create an experience. The audience is able to have a more heightened experience because of the mix of visuals and audio. The use of sound can make visuals easier to comprehend. In a way, the visuals will almost be a method of data visualization of the structure of the song.
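As a rough illustration of a custom shape generated by sin and cos and driven by sound, the sketch below computes the outline of a ring whose radius wobbles with a sine wave scaled by an amplitude value. The function name `wobblyRing` and its parameters are hypothetical, and the amplitude is assumed to be a number between 0 and 1.

```javascript
// Compute the outline of a ring whose radius is modulated by a sine wave.
// amplitude:  assumed loudness in the range 0..1 (0 gives a plain circle)
// pointCount: how many points to place around the ring
// baseRadius: radius of the ring when it is silent
// lobes:      how many bumps appear around the ring
function wobblyRing(amplitude, pointCount = 64, baseRadius = 100, lobes = 5) {
  const points = [];
  for (let i = 0; i < pointCount; i++) {
    const angle = (i / pointCount) * 2 * Math.PI;
    // The louder the sound, the deeper the bumps.
    const radius = baseRadius * (1 + amplitude * Math.sin(lobes * angle));
    points.push({ x: radius * Math.cos(angle), y: radius * Math.sin(angle) });
  }
  return points;
}
```

In a p5.js sketch, the amplitude could come from `getLevel()` on a `p5.Amplitude` object from the p5.sound library, and the returned points could be drawn each frame with `beginShape()`, `vertex()`, and `endShape(CLOSE)`.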

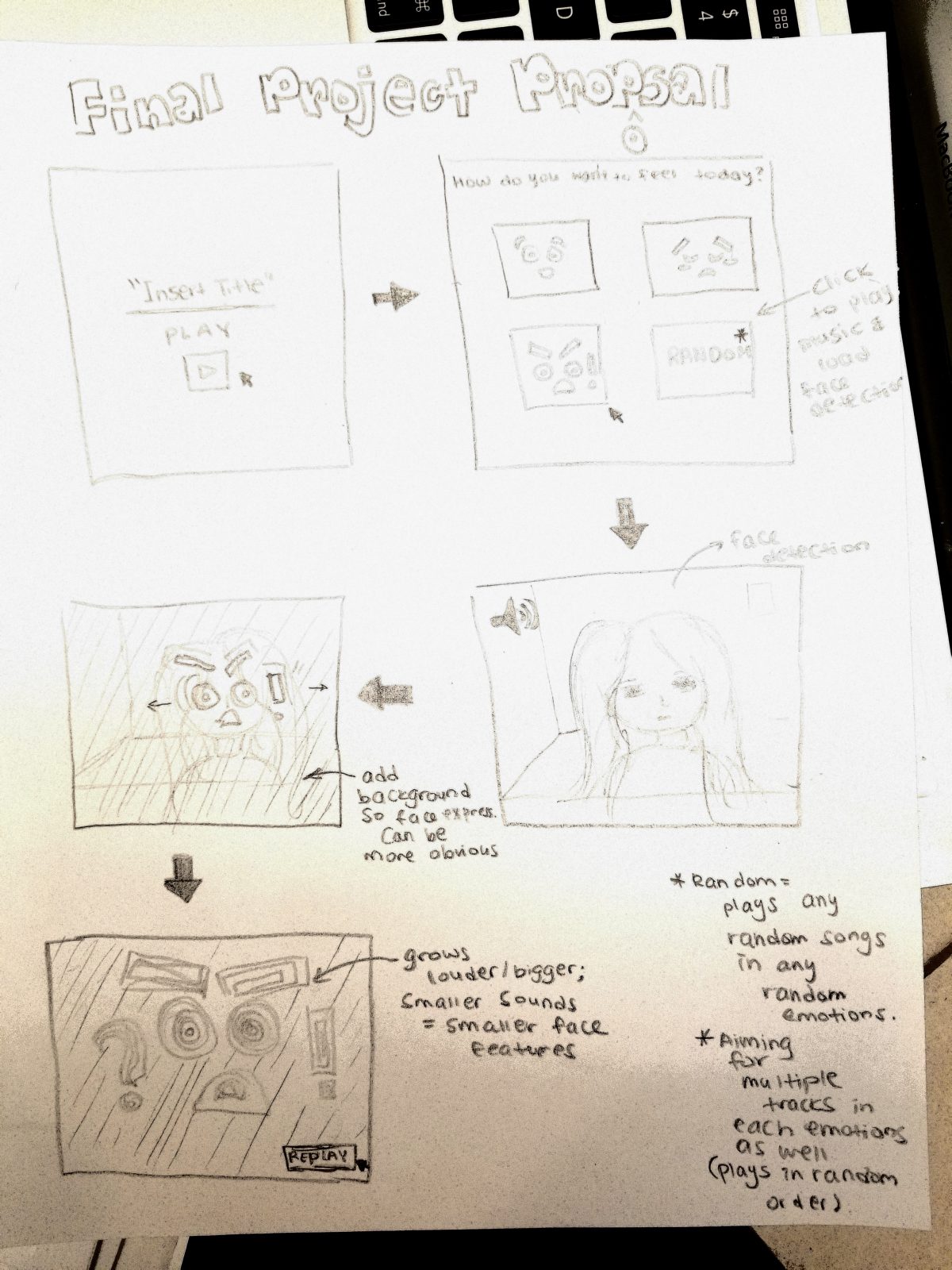

Christine Seo – Project Proposal

Initially, I wanted to make a visual representation of sound and music because I personally love to listen to music, but there is no way for deaf people to listen to it and have this experience. I wanted to program something that shares my emotions and experiences when I listen to certain music with these individuals in some way. Throughout the course, my favorite parts were when we had to incorporate the camera and when we had to use music in our assignments. So for my Looking Outwards 12, I looked for inspirations that involved these two topics; this inspired me to come up with ideas for my final project, which incorporates the two in one. Thus, in my project, I want to have options to listen to songs that correspond to different emotions. These emotions would be visually portrayed through a face tracker, with elements that would also react to the music, volume and sounds (multiple tracks). I found out that I can use a JavaScript library called clmtrackr for face tracking and p5.js for creating sound visualization. Through this project, I want to make something visually interesting that also involves a topic that I care about. I believe that this project can help me achieve my aesthetic ideas, show what I’ve learned through this class, and create something meaningful.

![[OLD FALL 2018] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)