sketch

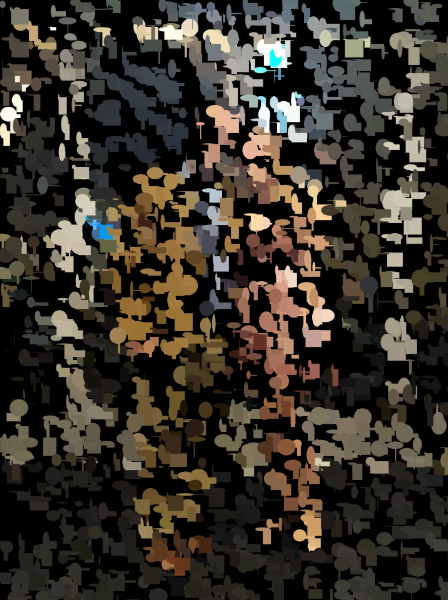

var theImage;

function preload() {

//loading my image

var myImageURL = "https://i.imgur.com/3SFfZCZ.jpg";

theImage = loadImage(myImageURL);

}

function setup() {

createCanvas(480, 480);

background(250,250,250);

theImage.loadPixels();

// going through each pixel

for (var x = 0; x < width+10; x = x+3) {

for (var y = 0; y < height+10; y = y+2) {

var pixelColorXY = theImage.get(x, y);

if (brightness(pixelColorXY) >= 0 && brightness(pixelColorXY) <= 20) {

//light pink

stroke(255,230,230,70);

line(x, y, x-1,y-1);

} else if (brightness(pixelColorXY) >= 20 && brightness(pixelColorXY) <= 50) {

//orange

stroke(250,170,160);

line(x, y, x+3, y+3);

} else if (brightness(pixelColorXY) >= 50 && brightness(pixelColorXY) <= 55) {

//pink

stroke(230,130,160);

line(x, y, x+3, y+3);

} else if (brightness(pixelColorXY) >= 55 && brightness(pixelColorXY) <= 60) {

// light blue-gray

stroke(180,195,200);

line(x, y, x-1,y-1);

} else if (brightness(pixelColorXY) >= 65 && brightness(pixelColorXY) <= 70) {

//yellow orange

stroke(235,180, 100);

line(x, y, x-2, y-2);

} else if (brightness(pixelColorXY) >= 75 && brightness(pixelColorXY) <= 85) {

//dusty rose

stroke(196,130,130);

line(x, y, x-1, y-1);

} else if (brightness(pixelColorXY) >= 85 && brightness(pixelColorXY) <= 95) {

//dark red

stroke(220,80,80);

line(x, y, x-1, y-1);

} else if (brightness(pixelColorXY) >= 95 && brightness(pixelColorXY) <= 110) {

//pink

stroke(220,69,90);

line(x, y, x+2, y+2);

} else if (brightness(pixelColorXY) >= 110 && brightness(pixelColorXY) <= 130) {

//green

stroke(80,130,60);

line(x, y, x+1, y+1);

} else if (brightness(pixelColorXY) >= 130 && brightness(pixelColorXY) <= 160) {

//light orange

stroke(220,170,130);

line(x, y, x+1, y+1);

} else if (brightness(pixelColorXY) >= 130 && brightness(pixelColorXY) <= 160) {

//raspberry (never reached: this condition repeats the branch above)

stroke(202,70, 100);

line(x, y, x+1, y+1);

} else if (brightness(pixelColorXY) >= 160 && brightness(pixelColorXY) <= 190) {

//white

stroke(255,255, 255);

line(x, y, x+3, y+3);

} else if (brightness(pixelColorXY) >= 190 && brightness(pixelColorXY) <= 220) {

//tan

stroke(150,130, 90);

line(x, y, x+3, y+3);

} else if (brightness(pixelColorXY) >= 220 && brightness(pixelColorXY) <= 255) {

//red

stroke(200,60,60);

line(x, y, x+3, y+3);

}

}

}

}

function draw() {

}

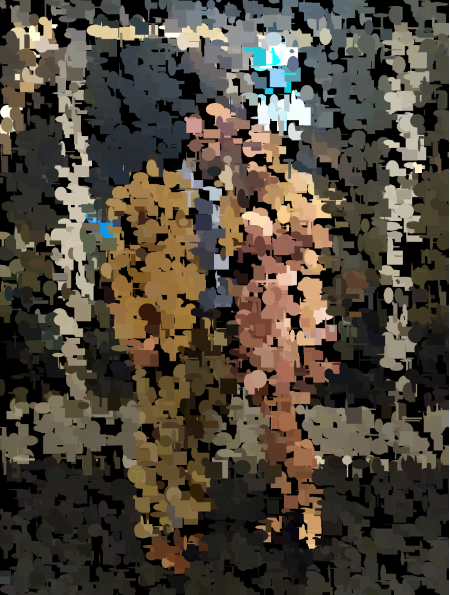

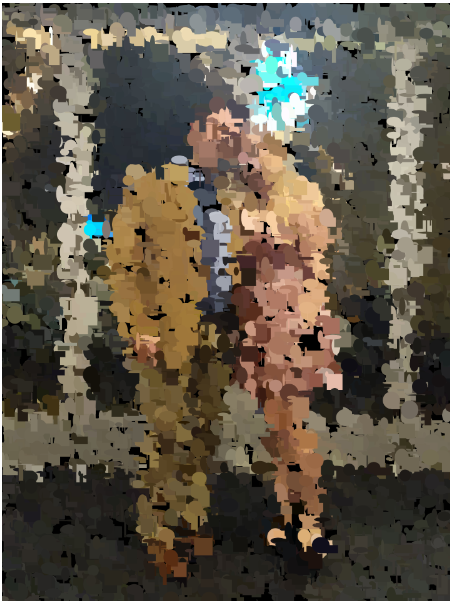

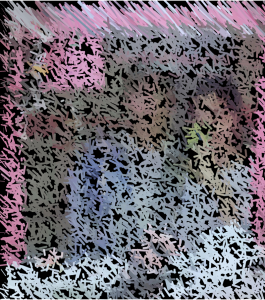

For this project, I was thinking about memories of my family and my grandma. This summer I took some photos of her teaching the rest of my family how to make Lithuanian dumplings. In my psychology class, we have been learning about how memories become distorted over time, which I find interesting. This is why I chose to make my image a bit distorted and not so clear. I also love gradient maps and wanted to emulate one in this piece. Yellow, green, and red are also the colors of the Lithuanian flag.
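The gradient-map idea can also be written as a small lookup table instead of a long chain of `else if` branches. The version below is only a rough sketch, not the code I used: the names `GRADIENT_STOPS` and `gradientMapColor` are made up for illustration, the thresholds and colors are loosely borrowed from my sketch, and it assumes brightness values on a 0 to 100 scale.

```javascript
// Hypothetical lookup table: each stop pairs a brightness ceiling with an RGB color.
// Thresholds and colors are illustrative, loosely based on the sketch above.
const GRADIENT_STOPS = [
  { max: 20, color: [255, 230, 230] },  // light pink
  { max: 50, color: [250, 170, 160] },  // salmon
  { max: 75, color: [235, 180, 100] },  // yellow orange
  { max: 100, color: [220, 69, 90] },   // pink-red
];

// Return the color of the first stop whose ceiling is >= the given brightness.
// Returns null when the brightness is above every stop (or is not a number).
function gradientMapColor(brightnessValue, stops = GRADIENT_STOPS) {
  for (const stop of stops) {
    if (brightnessValue <= stop.max) {
      return stop.color;
    }
  }
  return null;
}
```

Inside the pixel loop, this could be used by passing `brightness(pixelColorXY)` to `gradientMapColor` and, when the result is not `null`, handing its three values to `stroke()`. Because the stops are checked in order, the ranges cannot overlap or repeat by accident.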
