The technology field has long been, and still is, filled with stereotypes and biases surrounding people’s gender, race, and sexuality. Only 20% of professional computer scientists are women, and only 5.8% are African American. Even though the 21st-century US may appear to be a progressive utopia, there is still a great deal of stigma surrounding technology and the people who develop it.

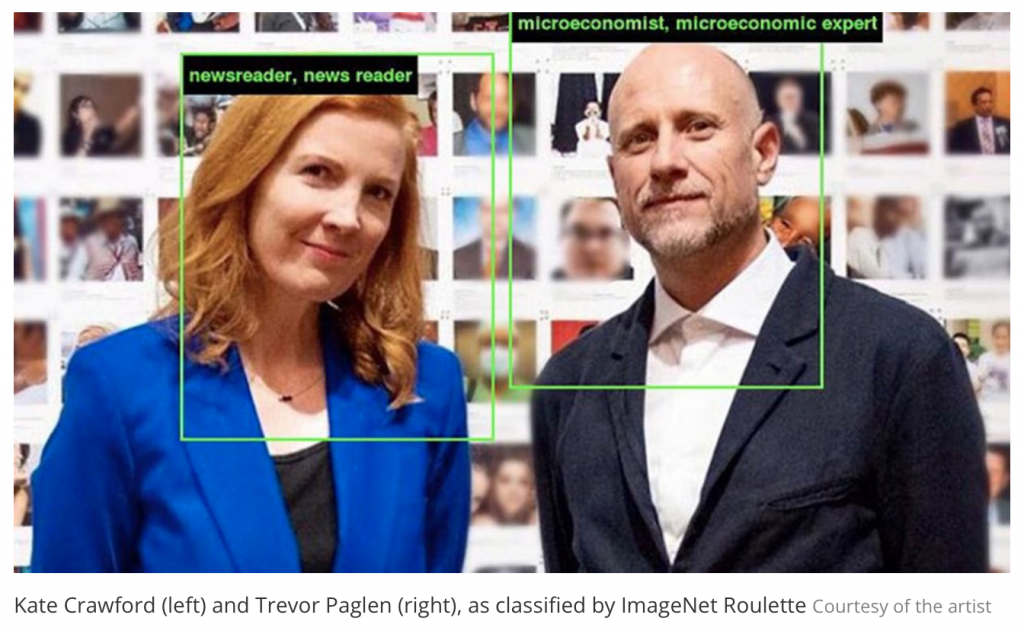

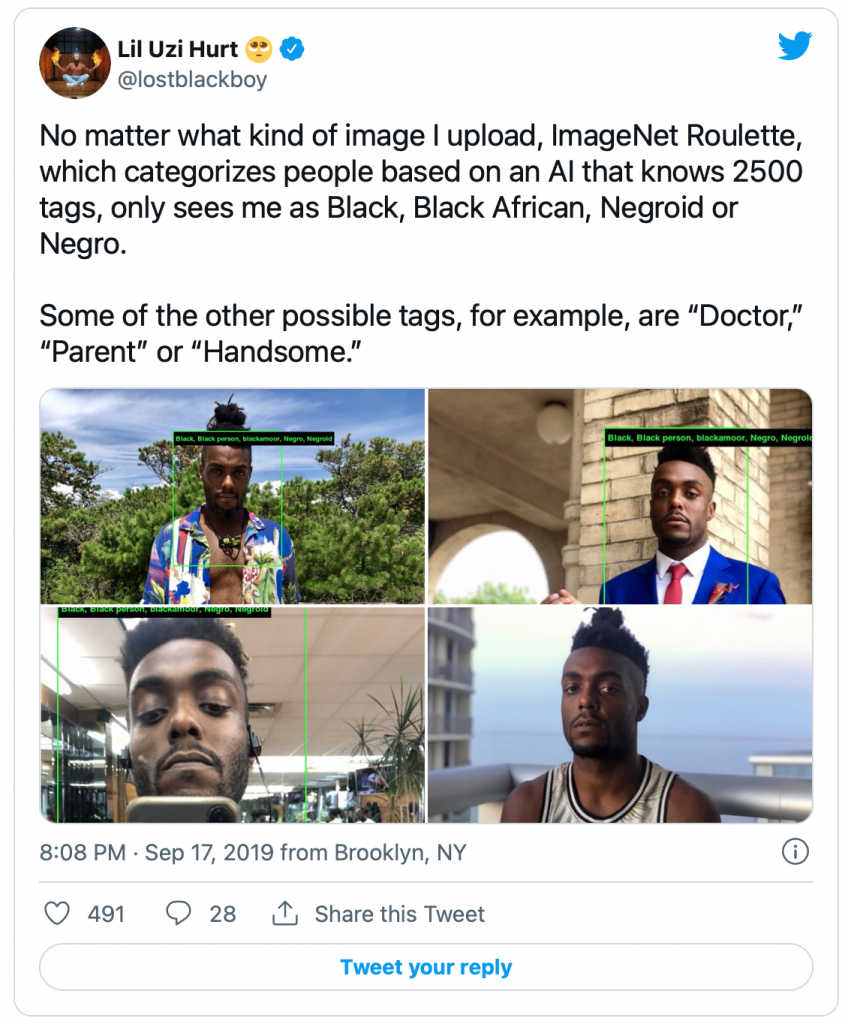

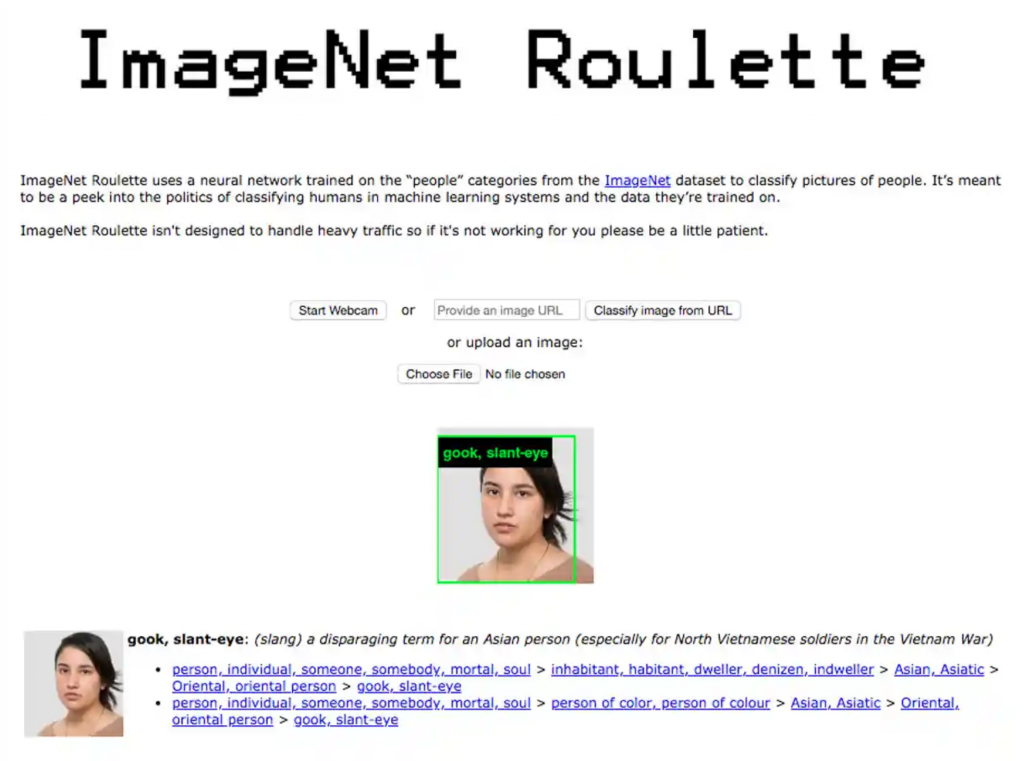

For this week’s Looking Outwards, I chose an article by Meilan Solly titled “Art Project Shows Racial Biases in Artificial Intelligence System.” The article addresses racial bias in ImageNet Roulette, an artificial intelligence tool developed by artist Trevor Paglen and A.I. researcher Kate Crawford. The tool was designed to label the person in a photograph with a description: a photo of John F. Kennedy, for example, would be labeled “Politician,” and a photo of Shakira would be labeled “Singer.” Impressive, right? But not everything is so simple, so perfect, or so equal. When Tabong Kima, a young African American man, uploaded a photograph of himself and a friend, ImageNet Roulette labeled him “Wrongdoer, Offender.” “I might have a bad sense of humor, but I don’t think this is particularly funny,” Kima wrote on Twitter. The tool’s labels included harmless descriptions such as dog, Boy Scout, or hairdresser, but also terms such as rapist, adulteress, and loser. The program seemed to describe white individuals largely in terms of occupation or other functional descriptors, yet it classified people with darker skin solely by race and skin color: when one African American man uploaded a picture of himself, the tool described him only as “Black,” and when an East Asian woman uploaded a photo of herself, the tool described her as “Gook, Slant-eye.” The bias and racism here are crystal clear.

The tool was taken offline on September 27th, 2019, because of the many offensive and upsetting terms it used to describe human beings. “We want to show how layers of bias and racism and misogyny move from one system to the next,” Paglen told the New York Times’ Cade Metz. “The point is to let people see the work that is being done behind the scenes, to see how we are being processed and categorized all the time.” The creators told the press that the point of the project was to expose racial bias, but my question is simply: Why? What for? Not everyone knew the purpose of the tool, so it upset and offended many people online when racist slurs appeared as their descriptions. The article showed how ImageNet Roulette went wrong and how it only increased the stigma around race and technology rather than reducing it. The bias obviously exists, and we shouldn’t prove it by creating more racist technology. We should fix it!

Solly, M. (2019, September 24). Art Project Shows Racial Biases in Artificial Intelligence System. Smithsonian.com. Retrieved November 13, 2021, from https://www.smithsonianmag.com/smart-news/art-project-exposed-racial-biases-artificial-intelligence-system-180973207/.
