sketch

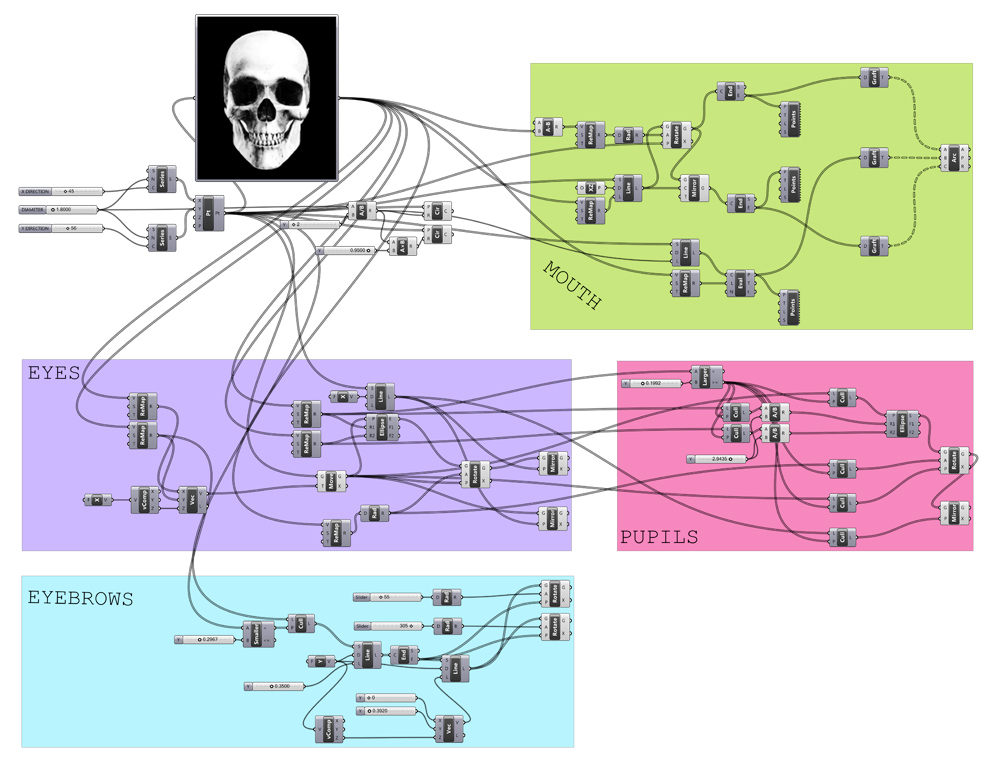

```javascript
// Face state, re-randomized on every frame of the shuffle
var facewidth = 10;
var faceheight = 10;
var eyex = 5;
var eyey = 5;
var eyewidth = 5;
var eyeheight = 5;
var pupilsize = 5;
var r = 0;
var g = 0;
var b = 0;
var fr = 30;       // shuffle countdown, also used as the current frame rate
var mouthsize = 0;
var mouthx = 0;
var mouthy = 0;
var hat = -1;      // -1 = not chosen yet; below 1 = hat on the left, 1 or more = on the right
var mouth = -1;    // -1 = not chosen yet; picks which bottom corner of the mouth is rounded more
var frame = 0;     // counter that makes the banner text blink
var ang = 0;       // ray length for the sunburst (becomes more negative each frame)
var ang2 = 0;      // radius of the covering circle (becomes more negative each frame)

function setup() {
  createCanvas(400, 400);
  rectMode(CENTER);
  noStroke();
}

// Clicking restarts the shuffle and the transition.
function mousePressed() {
  fr = 30;
  ang = 0;
  ang2 = 0;
}

// Draws the face, eyes, and pupils from the current random values.
function drawFace() {
  fill(r, g, b);
  rect(width/2, height/2, facewidth, faceheight, faceheight/5);
  fill(255);
  rect(width/2 - 15 - eyex, height/2 - 20 + eyey, eyewidth, eyeheight, 15);
  rect(width/2 + 15 + eyex, height/2 - 20 + eyey, eyewidth, eyeheight, 15);
  fill(0);
  rect(width/2 - 15 - eyex, height/2 - 20 + eyey, pupilsize, pupilsize, 5);
  rect(width/2 + 15 + eyex, height/2 - 20 + eyey, pupilsize, pupilsize, 5);
}

function draw() {
  // Shuffle phase: lower the frame rate by one step per frame.
  // (When fr reaches 0, frameRate(0) is overridden by frameRate(30) below.)
  if (fr > 0) {
    fr--;
    frameRate(fr);
    hat = -1;
    mouth = -1;
  }

  // While shuffling, draw a new random face every frame.
  if (fr > 0) {
    noStroke();
    fill(170, 180, 210);
    rect(width/2, height/2, width, height, 20);

    facewidth = random(150, 250);
    faceheight = random(150, 250);
    r = random(200, 225);
    g = random(200, 225);
    b = random(200, 225);
    eyewidth = random(25, 35);
    eyeheight = random(25, 35);
    eyex = random(5, 35);
    eyey = random(-35, 35);
    pupilsize = random(10, 12);

    // mouth was reset to -1 above, so in practice mouthx lands in the left half
    mouthsize = random(10, 20);
    if (mouth < 1) {
      mouthx = random(width/2 - facewidth/4, width/2);
    } else {
      mouthx = random(width/2, width/2 + facewidth/4);
    }
    mouthy = random(height/2 + eyeheight/2 + eyey + 5, height/2 + faceheight/4);

    drawFace();
  }

  // Party phase: sunburst, blinking text, covering circle, then hat and mouth.
  if (fr == 0) {
    frameRate(30);
    frame++;

    // 16 yellow rays from the center, getting longer each frame
    noFill();
    stroke(253, 253, 150);
    strokeWeight(4);
    for (let i = 1; i <= 16; i++) {
      line(width/2 + ang * cos(2 * PI * (i/16)),
           height/2 + ang * sin(2 * PI * (i/16)),
           width/2, height/2);
    }
    if (ang > -width) {
      ang -= 12;
    }

    // Banner under the face; the text is drawn only while frame is 10 to 18
    noStroke();
    fill(170, 180, 210);
    rect(width/2, height/2 + faceheight/2 + 30, 250, 40, 10);
    if (frame >= 10) {
      fill(255);
      textAlign(CENTER);
      textStyle(BOLD);
      textSize(32);
      text("It's party time!!", width/2, height/2 + faceheight/2 + 40);
    }
    if (frame == 18) {
      frame = 0;
    }

    // Once the rays reach full length, grow a background-colored circle over them
    // (ang2 is negative; ellipse() draws it with the absolute diameter)
    if (ang <= -width) {
      noStroke();
      fill(170, 180, 210);
      ellipse(width/2, height/2, 2 * ang2, 2 * ang2);
      if (ang2 > -width * (2/3)) {
        ang2 -= 8;
      }
    }

    // Once the circle reaches its final size, repaint the background
    noStroke();
    if (ang2 <= -width * (2/3)) {
      background(255);
      fill(170, 180, 210);
      rect(width/2, height/2, width, height, 20);
    }

    drawFace();

    // Partway through the circle, pick the hat and mouth once, then draw them
    if (ang2 <= -width/2) {
      if (hat == -1) {
        hat = random(0, 2);
      }
      if (mouth == -1) {
        mouth = random(0, 2);
      }

      // Keep the mouth above the bottom edge of the face
      if (mouthy + mouthsize/2 > height/2 + faceheight/2) {
        mouthy = height/2 + faceheight/2 - mouthsize/2 - 5;
      }

      // Party hat on the top-right or top-left corner of the face
      if (hat >= 1) {
        fill("red");
        triangle(width/2 + facewidth/2 - faceheight/5, height/2 - faceheight/2,
                 width/2 + facewidth/2, height/2 - faceheight/2 + faceheight/5,
                 width/2 + facewidth/2 + 20, height/2 - faceheight/2 - 20);
        fill("yellow");
        ellipse(width/2 + facewidth/2 + 20, height/2 - faceheight/2 - 20, 25);
      } else {
        fill("red");
        triangle(width/2 - facewidth/2 + faceheight/5, height/2 - faceheight/2,
                 width/2 - facewidth/2, height/2 - faceheight/2 + faceheight/5,
                 width/2 - facewidth/2 - 20, height/2 - faceheight/2 - 20);
        fill("yellow");
        ellipse(width/2 - facewidth/2 - 20, height/2 - faceheight/2 - 20, 25);
      }

      // Mouth: a square with one bottom corner rounded more than the others
      fill(25);
      if (mouth < 1) {
        rect(mouthx, mouthy, mouthsize, mouthsize,
             mouthsize/5, mouthsize/5, mouthsize/2, mouthsize/5);
      } else {
        rect(mouthx, mouthy, mouthsize, mouthsize,
             mouthsize/5, mouthsize/5, mouthsize/5, mouthsize/2);
      }
    }
  }
}
```
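Because `draw()` lowers the frame rate by one step on every shuffle frame, each new face stays on screen a little longer than the one before it. The plain-JavaScript model below is a rough estimate of how long the shuffle lasts. `shuffleSchedule` is a hypothetical helper, not part of the sketch, and it assumes the browser hits every requested frame rate exactly.

```javascript
// Hypothetical helper (not part of the sketch): replays the fr countdown from draw().
// Assumes each requested frame rate is met exactly, so a face drawn while the
// rate is fr stays up for about 1 / fr seconds before the next draw() call.
function shuffleSchedule(startFr) {
  const frames = [];
  let fr = startFr;
  while (fr > 0) {
    fr--;                                   // same decrement as draw()
    if (fr > 0) {
      frames.push({ fr, seconds: 1 / fr }); // a random face is drawn this frame
    }
  }
  return frames;
}

const schedule = shuffleSchedule(30);
const total = schedule.reduce((sum, f) => sum + f.seconds, 0);
console.log(`${schedule.length} faces over about ${total.toFixed(2)} s`);
// prints "29 faces over about 3.96 s"
```

With the sketch's starting value of 30, this gives 29 faces over roughly 3.96 seconds (the 29th harmonic number), and over half of that time is spent on the last four, slowest frames.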

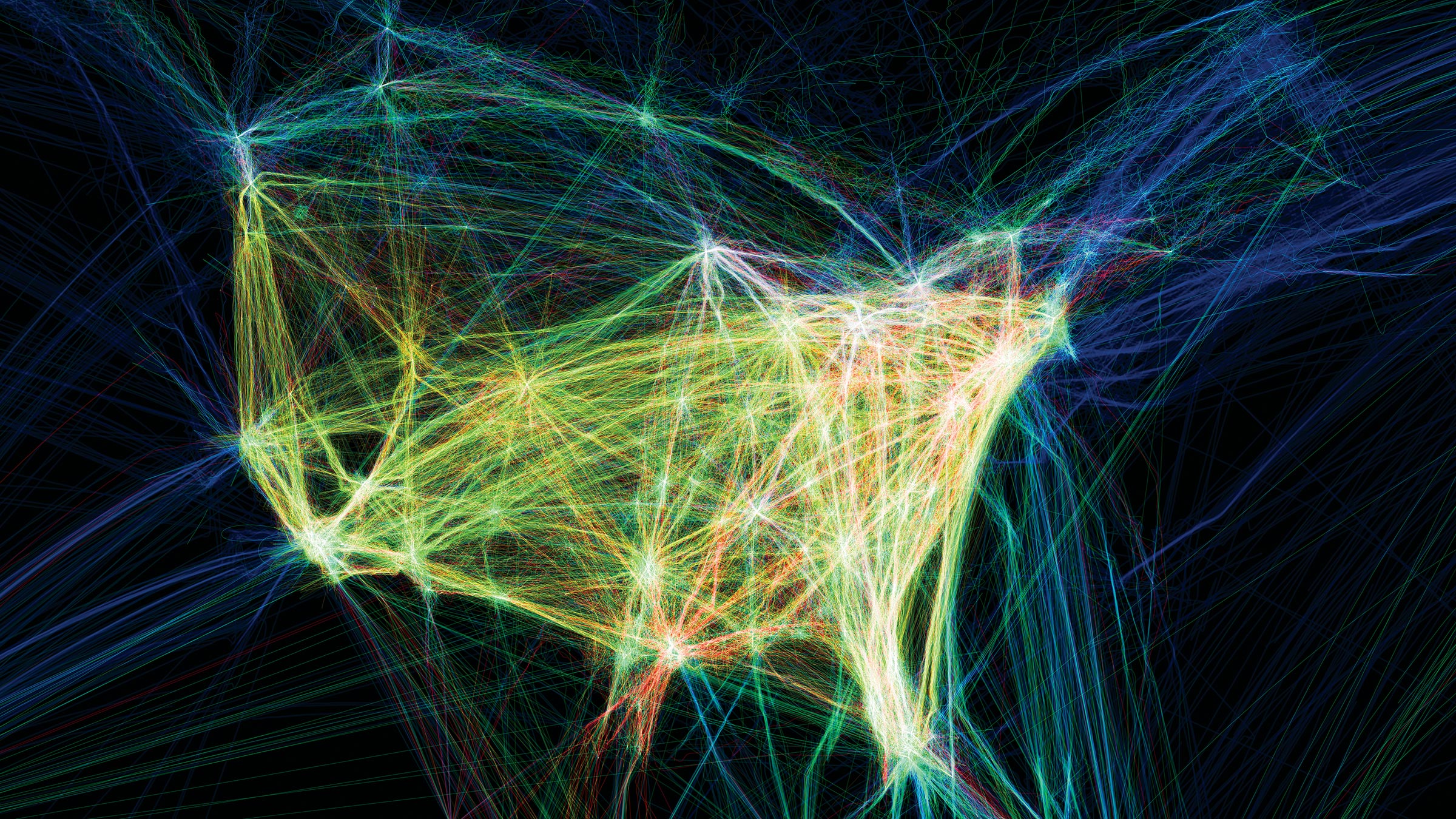

From the start, I knew I wanted an interesting transition, because rapidly cycling through random faces makes a transition look cool. Once the transition was finished, I realized the project had an overall happy feel, so I added a party hat and the "It's party time!!" text to make sure users knew they were supposed to be having fun with all the faces they generated.
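The transition is driven by two counters: the ray length `ang` drops by 12 per frame until it passes `-width`, and only then does the circle radius `ang2` start dropping by 8 per frame. The plain-JavaScript sketch below replays those updates to find the party-phase frame on which each stage first happens, and also replays the `frame` counter that makes the text blink. `transitionTimeline` and `bannerPattern` are hypothetical helpers that only mirror the counter logic of the `fr == 0` branch; they draw nothing.

```javascript
// Hypothetical helpers that replay the counter updates from the fr == 0
// branch of draw(); they draw nothing and are not part of the sketch.

// Returns the party-phase frame (1-based) on which each stage first happens.
function transitionTimeline(width, maxFrames) {
  let ang = 0;
  let ang2 = 0;
  const result = { raysDone: null, hatShown: null, circleDone: null };
  for (let f = 1; f <= maxFrames; f++) {
    // Rays lengthen until ang passes -width.
    if (ang > -width) ang -= 12;
    if (result.raysDone === null && ang <= -width) result.raysDone = f;
    // The circle only starts growing once the rays are done.
    if (ang <= -width && ang2 > -width * (2 / 3)) ang2 -= 8;
    // Hat and mouth are drawn once ang2 reaches -width / 2.
    if (result.hatShown === null && ang2 <= -width / 2) result.hatShown = f;
    // The background is repainted once ang2 reaches -2/3 of the width.
    if (result.circleDone === null && ang2 <= -width * (2 / 3)) result.circleDone = f;
  }
  return result;
}

// Replays the blinking-text counter: true on frames where the text is drawn.
function bannerPattern(frames) {
  let frame = 0;
  const visible = [];
  for (let n = 0; n < frames; n++) {
    frame++;
    visible.push(frame >= 10);
    if (frame === 18) frame = 0;
  }
  return visible;
}

console.log(transitionTimeline(400, 100)); // { raysDone: 34, hatShown: 58, circleDone: 67 }
console.log(bannerPattern(18).filter(Boolean).length); // 9
```

Assuming the sketch actually runs at its requested 30 frames per second, the hat and mouth would appear a little under 2 seconds into the party phase, and the text is drawn on 9 of every 18 frames, so it blinks with a period of about 0.6 seconds.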

![[OLD FALL 2018] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2020/08/stop-banner.png)