
/* Jason Zhu

Section E

jlzhu@andrew.cmu.edu

Project-10

*/

var terrainSpeed = 0.0003;

var terrainDetail = 0.0008;

var flags = [];

function setup() {

createCanvas(480, 300);

frameRate(50);

for (var i = 0; i < 10; i++){

var rx = random(width);

flags[i] = makeflag(rx);

}

}

function draw() {

background(246, 201, 116);

push();

beginShape();

noStroke();

fill(104, 176, 247);

vertex(0, height);

for (var x = 0; x < width; x++) {

var t = (x * terrainDetail) + (millis() * terrainSpeed);

var y = map(noise(t), 0,1, 0, height);

vertex(x, y - 50);

}

vertex(width, height);

endShape();

pop();

displayHorizon();

updateAndDisplayflags();

removeflagsThatHaveSlippedOutOfView();

addNewflagsWithSomeRandomProbability();

}

function updateAndDisplayflags(){

// Update the flags' positions and display them.

for (var i = 0; i < flags.length; i++){

flags[i].move();

flags[i].display();

}

}

function removeflagsThatHaveSlippedOutOfView(){

var flagsToKeep = [];

for (var i = 0; i < flags.length; i++){

// keep a flag only while its right edge is still on the canvas

if (flags[i].x + flags[i].breadth > 0) {

flagsToKeep.push(flags[i]);

}

}

flags = flagsToKeep; // remember the surviving flags

}

function addNewflagsWithSomeRandomProbability() {

// With a very tiny probability, add a new flag to the end.

var newflagLikelihood = 0.007;

if (random(0,1) < newflagLikelihood) {

flags.push(makeflag(width));

}

}

// method to update position of flag every frame

function flagMove() {

this.x += this.speed;

}

// draw the flag

function flagDisplay() {

var floorHeight = 10;

var bHeight = this.nFloors * floorHeight;

noStroke();

// pole

push();

translate(this.x, height - 30);

fill(30, 37, 35);

rect(0, -bHeight * 1.03, this.breadth, bHeight);

// flag

fill(12, 36, 112);

triangle(5, -bHeight * 1.03, 40, 20 - bHeight, 5, 30 - bHeight);

pop();

}

function makeflag(birthLocationX) {

var flag = {x: birthLocationX,

breadth: 6,

speed: -0.75,

nFloors: round(random(1,10)),

move: flagMove,

display: flagDisplay};

return flag;

}

function displayHorizon(){

noStroke();

fill(55, 222, 153);

// the ground strip fills the bottom 30 pixels of the canvas

rect(0, height - 30, width, 30);

}

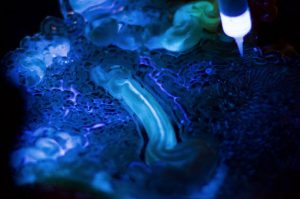

For this project, I wanted to recreate a scene from an old film. I drew flags underwater to capture the look and feel I was going for. It was hard to get the effect I wanted, so I had to simplify quite a bit. This project was definitely more of a struggle for me than past projects.
