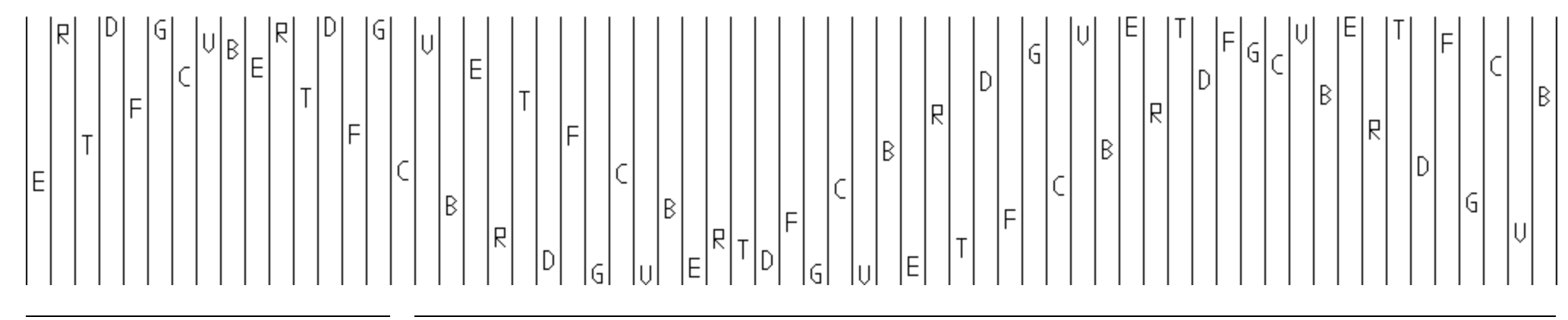

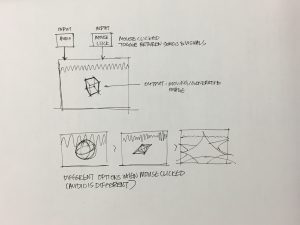

Andrea Gysin’s website is an inspiration for the project Jaclyn and I have proposed. It is full of examples of interactive type that flow across the screen and shift with the position of the mouse. Besides the background changes driven by mouseX and mouseY, the left-aligned body of text flickers and ‘rotates’ through characters when the mouse hovers over it, creating a really cool loading-characters effect. Beyond the website itself, Andrea Gysin’s work also includes a program that lets graphic designers build simple animated alphabets, along with other tools for creating visuals and installations. A lot of her work is inspiring and along the lines of the typographic interaction that Jaclyn and I are trying to build.

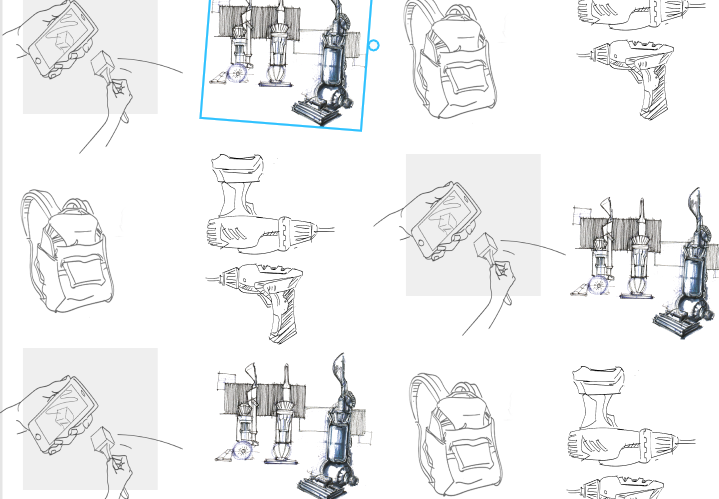

A project along similar but slightly different lines is Amnon Owed’s CAN Generative Typography. Using Processing, he created an alphabet of generated letters, each with different graphic characteristics. While it does not deal with bodies of text, as Andrea Gysin’s website does, the generative part of this video is what I find interesting; it would be really cool if we could apply a generative aspect to how the lines or stanzas of the poem in our project appear on the canvas. Owed’s alphabet is not interactive, but a hybrid of his approach with the interactions seen in Gysin’s website could produce the results we want.

CAN Generative Typography from Amnon Owed on Vimeo.

A demo video of his generative typography
