// Alec Albright
// aalbrigh
// Section B
// Project 12 - Final Project

var video; // webcam capture
var nPoints = 100; // number of points used to draw curves
var previous; // previous image from camera
var threshold = 150; // used to determine if a pixel is different enough to consider it "moving"
var motionX = 0; // main coordinate of X motion
var motionY = 0; // main coordinate of Y motion
var lerpX = 0; // X coordinate for smoothing of motion
var lerpY = 0; // Y coordinate for smoothing of motion

var higherLove; // Whitney Houston's "Higher Love"
var low; // Flo Rida's "Low"
var irreplaceable; // Beyonce's "Irreplaceable"
var newBooty; // Bubba Sparxxx's "Ms. New Booty"

var higherVol; // Higher Love volume
var lowVol; // Low volume
var irrepVol; // Irreplaceable volume
var bootyVol; // Ms. New Booty volume

var sine, sawtooth, squareWave; // oscillators created (but not started) in soundSetup()

function preload() {
    higherLove = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Higher-Love.wav");
    low = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Low.wav");
    irreplaceable = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/Irreplaceable.wav");
    newBooty = loadSound("https://courses.ideate.cmu.edu/15-104/f2019/wp-content/uploads/2019/12/New-Booty.wav");
}

function soundSetup() { // setup for audio generation
    // making sine
    sine = new p5.Oscillator();
    sine.setType("sine");
    //sine.start();

    // making sawtooth
    sawtooth = new p5.Oscillator();
    sawtooth.setType("sawtooth");
    //sawtooth.start();

    // making square wave (named squareWave so it does not overwrite p5's global square() function)
    squareWave = new p5.Oscillator();
    squareWave.setType("square");
    squareWave.freq(440);
    //squareWave.start();
}

function setup() {
    createCanvas(480, 480);
    angleMode(RADIANS);
    video = createCapture(VIDEO);
    video.size(480, 480); // attempt to size the camera.
    video.hide(); // this hides an unnecessary extra view.

    // prepping to copy previous frame for difference in motion
    previous = createImage(480, 480, RGB);

    useSound();

    // start every song silently; loop() begins playback on its own, so calling
    // play() first would start a second, overlapping copy of each track
    higherLove.setVolume(0);
    higherLove.loop();
    low.setVolume(0);
    low.loop();
    irreplaceable.setVolume(0);
    irreplaceable.loop();
    newBooty.setVolume(0);
    newBooty.loop();
}

function draw() {
    var count = 0; // number of pixels that registered as motion
    var sumX = 0; // sum of motion X coordinates
    var sumY = 0; // sum of motion Y coordinates

    // making camera actually mirror user
    push();
    translate(width, 0);
    scale(-1, 1);
    image(previous, 0, 0);
    pop();

    loadPixels();
    video.loadPixels(); // this must be done on each frame.
    previous.loadPixels();

    // comparing all pixels to previous image
    for (var x = 0; x < video.width; x++) {
        for (var y = 0; y < video.height; y++) {
            var location = (x + y * video.width) * 4;

            // finding previous and current colors
            // previous
            var red1 = previous.pixels[location];
            var green1 = previous.pixels[location + 1];
            var blue1 = previous.pixels[location + 2];
            // current
            var red2 = video.pixels[location];
            var green2 = video.pixels[location + 1];
            var blue2 = video.pixels[location + 2];

            var diff = distSquared(red1, green1, blue1, red2, green2, blue2);

            // checking whether they are different enough to call motion
            if (diff > threshold * threshold) {
                sumX += x;
                sumY += y;
                count++;
            }
        }
    }

    updatePixels();

    // only count it as a different frame if more than 100 pixels have changed;
    // find main X and Y coordinate of motion, this will be our control for everything
    if (count > 100) {
        motionX = sumX / count;
        motionY = sumY / count;
    }

    // maintaining mirrored scale for user interface
    push();
    translate(width, 0);
    scale(-1, 1);

    // smoothing out how the point of focus is travelling
    lerpX = lerp(lerpX, motionX, 0.1);
    lerpY = lerp(lerpY, motionY, 0.1);

    // drawing point so user knows where the main motion point is
    stroke("black");
    fill("white");
    ellipse(lerpX, lerpY, 20, 20);
    pop();

    push();
    translate(width / 2, height / 2);
    // draw all the shapes
    drawHippopede();
    drawEpicycloid();
    drawHypotrochoid();
    pop();

    previous.copy(video, 0, 0, video.width, video.height, 0, 0, video.width, video.height);

    // creating slight boundaries for better sound isolation
    if (lerpY < 200 || lerpY > 320) {
        // letting contrast between top and bottom come through
        bootyVol = 0;
        irrepVol = 0;
        newBooty.setVolume(bootyVol);
        irreplaceable.setVolume(irrepVol);

        // as we move up, more Higher Love/less Low
        // volume 0 to 1 (lerpY is vertical, so it is mapped against height)
        higherVol = map(lerpY, 0, height, 0, 1);
        higherLove.setVolume(1 - higherVol);
        lowVol = map(lerpY, 0, height, 0, 1);
        low.setVolume(lowVol);
    } else {
        // letting contrast between right and left come through
        higherVol = 0;
        lowVol = 0;
        higherLove.setVolume(higherVol);
        low.setVolume(lowVol);

        // as we move right on the mirrored canvas, more Ms. New Booty/less Irreplaceable
        // volume 0 to 1
        bootyVol = map(lerpX, 0, width, 0, 1);
        newBooty.setVolume(1 - bootyVol);
        irrepVol = map(lerpX, 0, width, 0, 1);
        irreplaceable.setVolume(irrepVol);
    }
}

// draws Hippopede
function drawHippopede() {
    var x; // x coordinate of vertex
    var y; // y coordinate of vertex
    var r; // polar coordinate
    var a = lerpX / 3; // main parameter of the curve
    var b = map(a, 0, 480, 100, 200); // circle radius
    var rotation = map(lerpY, 0, 480, 0, TWO_PI); // amount of rotation

    // thickness of line proportional to the circle radius
    strokeWeight(b / 6);
    stroke(255, 255, 255, 150);
    noFill();

    // rotate shape
    push();
    rotate(rotation);

    // start drawing the shape, one point at a time
    beginShape();
    for (var i = 0; i < nPoints; i++) {
        var t = map(i, 0, nPoints, 0, TWO_PI);
        // find r (polar equation)
        r = sqrt(4 * b * (a - b * sinSq(t)));
        // convert to x and y coordinates
        x = r * cos(t);
        y = r * sin(t);
        // draw a point at x, y
        vertex(x, y);
    }
    endShape();
    pop();
}

// draws hypotrochoid
function drawHypotrochoid() {
    var x; // x coordinate of vertex
    var y; // y coordinate of vertex
    var a = map(lerpX, 0, 480, 20, 100); // radius of the interior circle
    var b = 3; // radius of the petals
    var h = lerpX / 10; // distance from center of interior circle
    var red = map((lerpX + lerpY) / 2, 0, 480, 0, 255); // how much red
    var blue = map(lerpY, 0, 480, 0, 255); // how much blue
    var alpha = map(lerpX, 0, 480, 50, 150); // how opaque
    var rotation = map(lerpY, 100, 300, 0, TWO_PI); // amount of rotation

    strokeWeight(2);
    stroke(255, 255, 255, 150);
    // control color and opacity with the tracked motion location
    fill(red, 0, blue, alpha);

    // control rotation with lerpY
    push();
    rotate(rotation);

    // create the shape itself
    beginShape();
    for (var i = 0; i < nPoints; i++) {
        var t = map(i, 0, nPoints, 0, TWO_PI);
        // use parametric equations for hypotrochoid to find x and y
        x = (a - b) * cos(t) + h * cos((a - b) / b * t);
        y = (a - b) * sin(t) - h * sin((a - b) / b * t);
        // draw a point at x, y
        vertex(x, y);
    }
    endShape(CLOSE);
    pop();
}

// draws an epicycloid
function drawEpicycloid() {
    var x; // x coordinate of vertex
    var y; // y coordinate of vertex
    var a = map(lerpX, 0, 480, 20, 100); // radius of interior circle
    var b = map(lerpY, 0, 480, 5, 30); // radius of petals
    var blue = map((lerpX + lerpY) / 2, 0, 480, 0, 255); // how much blue
    var red = map(lerpY, 0, 480, 0, 255); // how much red
    var rotation = map(lerpY, 100, 300, 0, TWO_PI); // how much rotation

    // control color with the tracked motion location
    strokeWeight(10);
    stroke(red, 0, blue, 150);

    // control rotation with the tracked motion location
    push();
    rotate(rotation);

    // start drawing shape
    beginShape();
    for (var i = 0; i < nPoints; i++) {
        var t = map(i, 0, nPoints, 0, TWO_PI);
        // find coordinates using epicycloid parametric equations
        x = (a + b) * cos(t) - b * cos((a + b) / b * t);
        y = (a + b) * sin(t) - b * sin((a + b) / b * t);
        // draw a point at x, y
        vertex(x, y);
    }
    endShape();
    pop();
}

// defines sin^2 using trigonometric identities
function sinSq(x) {
    return (1 - cos(2 * x)) / 2;
}

function distSquared(x1, y1, x2, y2) {

let dx = x2 - x1;

let dy = y2 - y1;

return (dx * dx) + (dy * dy);

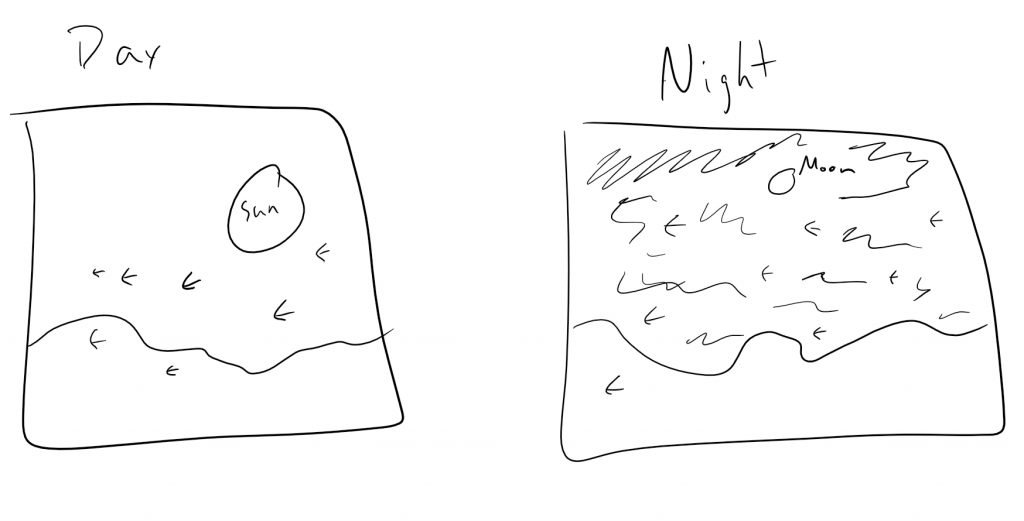

}For the Final Project, I wanted to do something that had to deal with music, as it is my primary focus at CMU, but I also wanted to try doing something computationally complex that I would certainly be challenged by. Thus, the idea to create a motion detection music player was born! I also wanted to keep it fun, so I decided to play music related to each quadrant: “Irreplaceable” – Beyonce for the left (to the left, to the left…), “Higher Love” – Whitney Houston for the top, “Low” – Flo Rida for the bottom, and “Ms. New Booty” – Bubba Sparxxx for the right (get it right, get it right get it tight). For better user interfacing, I also included a floating point that denotes where the program has tracked the average motion.
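The way the tracked point picks a song can be restated as a small pure function. This is a minimal sketch rather than the project's code: `lerpStep` and `songVolumes` are hypothetical names, the 200/320 band limits are copied from `draw()`, and the inputs are assumed to be in unmirrored camera coordinates, as `lerpX` and `lerpY` are. It uses plain JavaScript instead of p5 calls so it runs on its own.

```javascript
// Hypothetical helpers (not in the original sketch) that restate draw()'s
// song-selection logic without depending on p5.js.

// One step of the same smoothing as p5's lerp(current, target, amount):
// move a fixed fraction of the remaining distance toward the target.
function lerpStep(current, target, amount) {
    return current + (target - current) * amount;
}

// Volume (0 to 1) for each track given the smoothed motion point.
// The band limits 200 and 320 are the ones hard-coded in draw().
function songVolumes(x, y, width, height) {
    const vols = { higherLove: 0, low: 0, irreplaceable: 0, newBooty: 0 };
    if (y < 200 || y > 320) {
        // top or bottom band: crossfade Higher Love (top) against Low (bottom)
        const t = y / height;
        vols.higherLove = 1 - t;
        vols.low = t;
    } else {
        // middle band: crossfade along x; because the canvas is mirrored,
        // a small camera x appears on the user's right (Ms. New Booty)
        const t = x / width;
        vols.newBooty = 1 - t;
        vols.irreplaceable = t;
    }
    return vols;
}

// usage: the dot eases toward camera point (400, 60), near the top of the frame
let dotX = 0;
let dotY = 0;
for (let frame = 0; frame < 30; frame++) {
    dotX = lerpStep(dotX, 400, 0.1);
    dotY = lerpStep(dotY, 60, 0.1);
}
console.log(songVolumes(dotX, dotY, 480, 480));
```

Because each branch silences the other pair of songs, only one pair is ever audible at a time, which is what lets the contrast between opposite sides come through.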

The most difficult part of the process was capturing each frame and comparing it to the previous one to build a working motion detector. It was also interesting to map the visuals to the detected motion, giving each song its own visual identity.
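Comparing each frame to the previous one is a form of frame differencing: pixels whose color changed by more than a threshold count as motion, and the average of their positions becomes the tracked point. The sketch below restates that loop without p5, assuming flat RGBA arrays laid out like p5's `pixels[]` (four entries per pixel, row by row); `motionCentroid` and its `minCount` parameter are hypothetical stand-ins for the loop in `draw()` and its `count > 100` check.

```javascript
// Hypothetical, p5-free version of the motion detection loop in draw().
// prev and curr are flat RGBA arrays (4 entries per pixel, row by row),
// the same layout as p5's pixels[] array.
function motionCentroid(prev, curr, width, height, threshold, minCount) {
    const limit = threshold * threshold; // compare squared distances, no sqrt
    let sumX = 0;
    let sumY = 0;
    let count = 0;
    for (let y = 0; y < height; y++) {
        for (let x = 0; x < width; x++) {
            const i = (x + y * width) * 4;
            const dr = curr[i] - prev[i];
            const dg = curr[i + 1] - prev[i + 1];
            const db = curr[i + 2] - prev[i + 2];
            // squared Euclidean distance in RGB space (alpha is ignored)
            if (dr * dr + dg * dg + db * db > limit) {
                sumX += x;
                sumY += y;
                count++;
            }
        }
    }
    // too few changed pixels: report no motion so the caller keeps its old point
    if (count <= minCount) {
        return null;
    }
    return { x: sumX / count, y: sumY / count, count: count };
}

// usage: a 4x2 "frame" where two pixels in the top row turn white
const w = 4;
const h = 2;
const before = new Uint8ClampedArray(w * h * 4); // all black, alpha 0
const after = new Uint8ClampedArray(before); // copy of the previous frame
for (const px of [1, 3]) {
    after.set([255, 255, 255, 255], px * 4);
}
console.log(motionCentroid(before, after, w, h, 150, 0)); // { x: 2, y: 0, count: 2 }
```

Comparing squared distances against `threshold * threshold` gives the same answer as comparing plain distances against `threshold`, since both sides are non-negative, while skipping a square root for every pixel.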

To run the program, you must allow the website to access your video camera. However, I have included a demo video below for those who would rather watch it.
