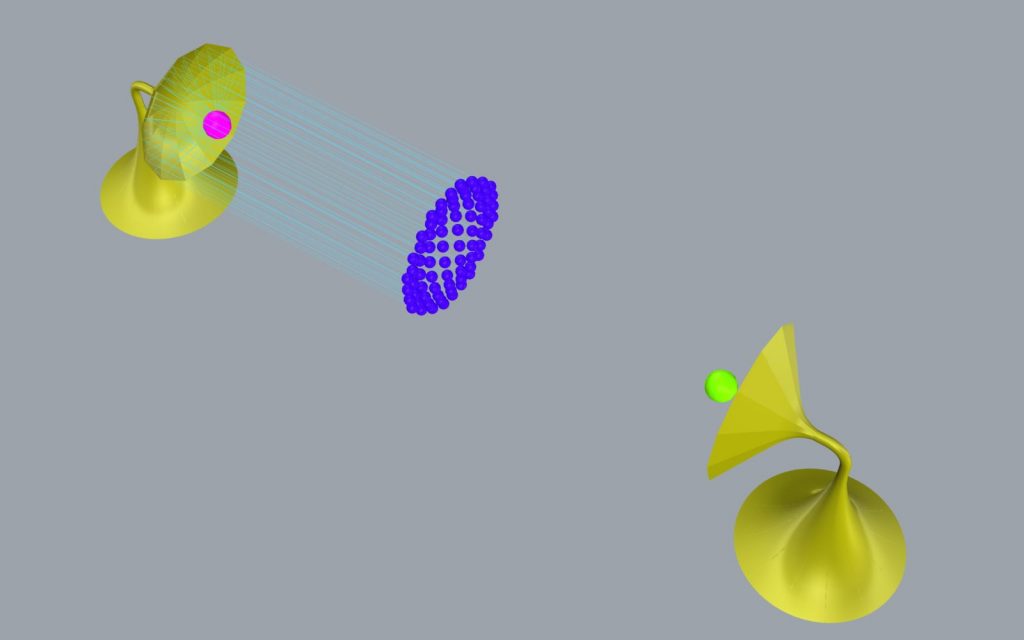

“Apparatum” is a sound installation whose sound is inspired by Bogusław Schaeffer and whose aesthetics are inspired by Oskar Hansen. The installation as a whole also draws on the heritage of the Polish Radio Experimental Studio. The project consists of analog sound generators controlled through a digitized sheet-music touch pad. I admire the speculative nature of the piece. Because it’s not commercial music that has to appeal to a wide audience, it feels much more thoughtful and edgy, and I am drawn to the process of creating it. I think the artists’ sensibilities are manifested in the visual design. The aesthetics complement the sound art without overpowering it, thanks to the minimal grayscale colors, limited use of textures, and consistent forms. The textures of the sound are also quite interesting, challenging you to think of new ways to weave sounds and tones together.
