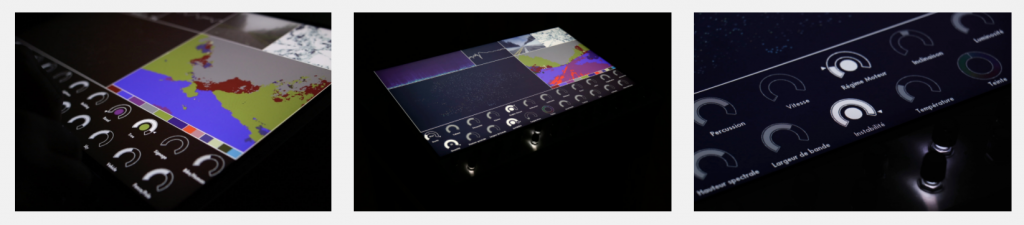

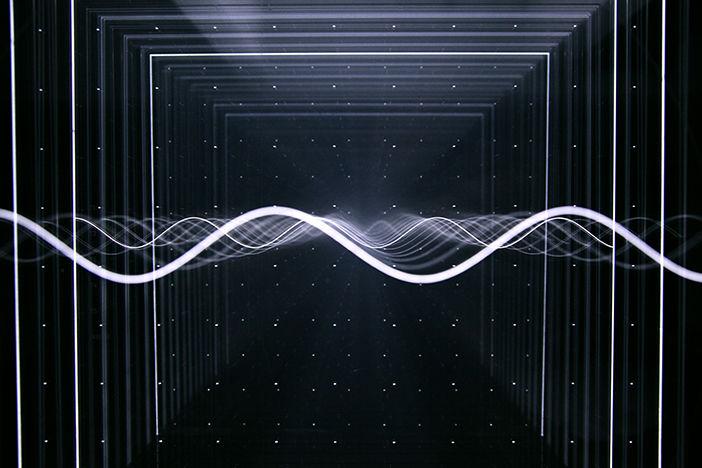

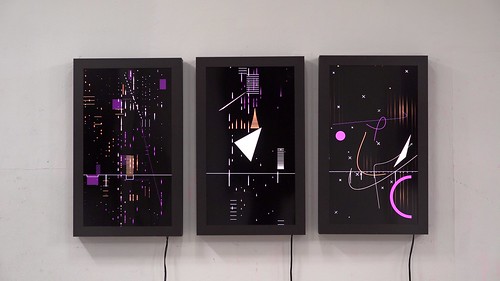

The inspirational project I found is called *Forms - Screen Ensemble*, created in 2020. I found this artwork very interesting because graphic scores generated through random probability are transformed into sound by a unique algorithm, producing the music heard in the video. What stood out to me in the project description was the idea that the audience can literally hear what they see, at the same moment they see it. Briefly, the work is spread across three screens, and each screen contributes a different layer of the sound: one covers rhythm, one texture, and one harmony. The graphics the screens display are endless and never repeat.

![[OLD SEMESTER] 15-104 • Introduction to Computing for Creative Practice](../../../../wp-content/uploads/2023/09/stop-banner.png)