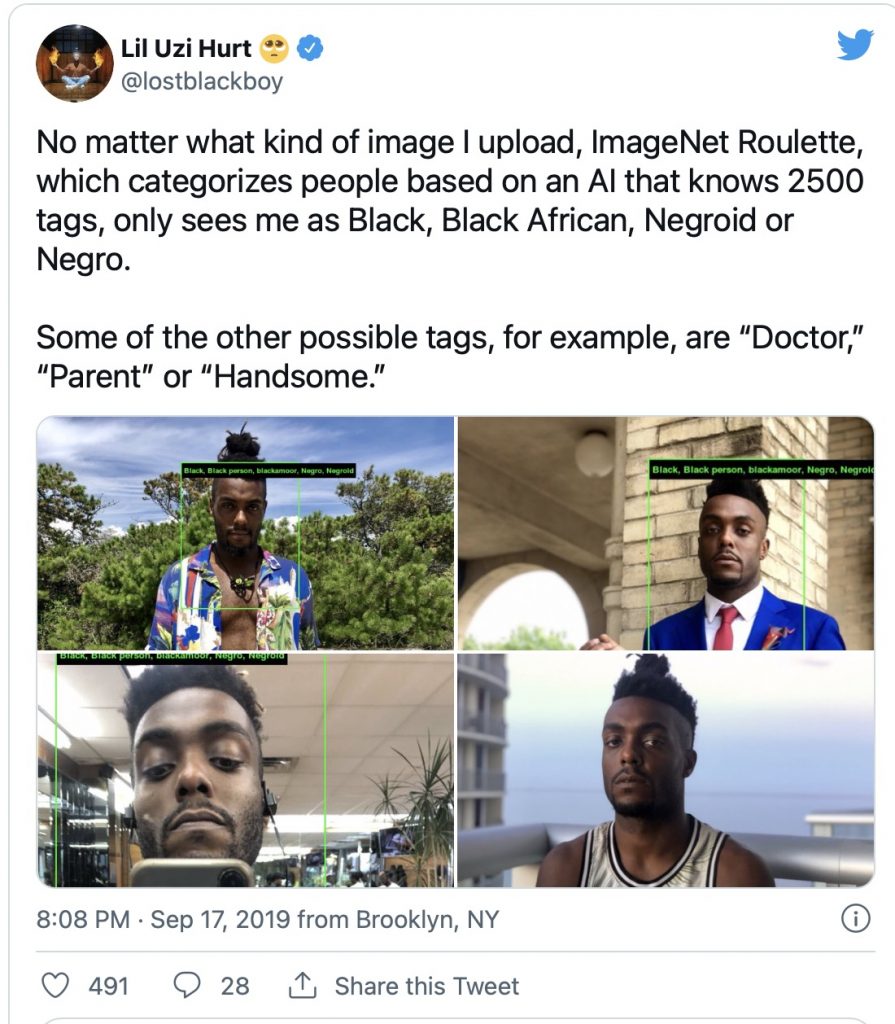

The article “Art Project Shows Racial Biases in Artificial Intelligence System” discusses ImageNet Roulette, an artificial intelligence tool that classifies images of people with tags such as politician, doctor, father, and newsreader. The tool was created by Trevor Paglen and Kate Crawford specifically to reveal “how layers of bias and racism and misogyny move from one system to the next” (Paglen). The creators used this project to show that ImageNet, which is used to train many artificial intelligence systems, has racist biases built into it.

The structure of this project was quite effective, as it revealed how many of the systems we use have biases built into them, which often leads to seriously harmful effects. Reading the article makes me wonder to what extent the other systems we use, such as infrastructure, social media, and architecture, have racial bias built into them. However, it’d be harder to reveal the inherent biases in physical systems like infrastructure, since there isn’t a pool of data you can pull from as there is with artificial intelligence learning systems. Regardless, it’s important that we reveal the racial biases that are entrenched in our society. We can’t build future systems that are equal without changing our current ones.
