Dog Filter

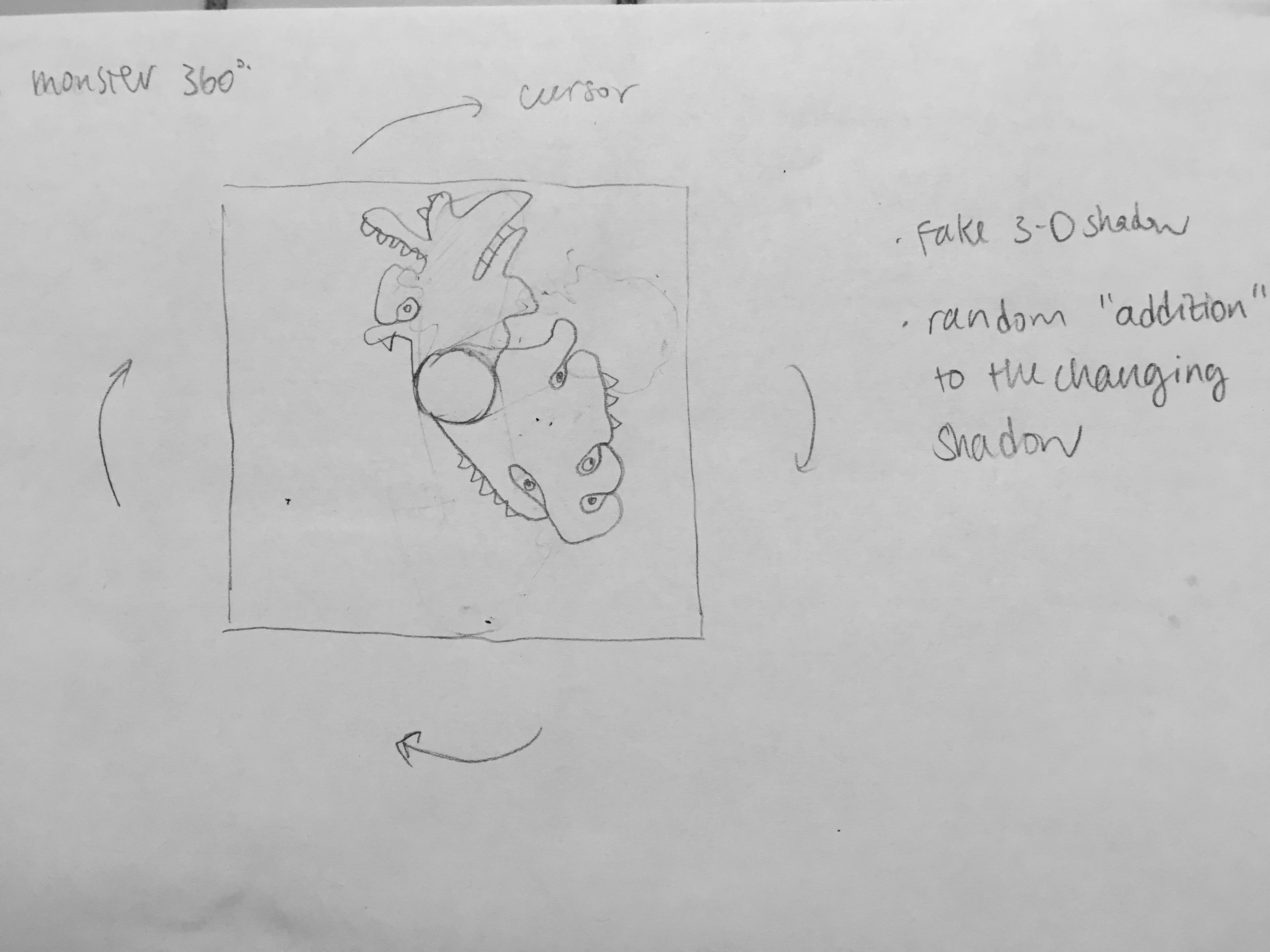

My initial proposal was to create a piece of shadow art. However, as I proceeded with the project, I realized that it wasn’t feasible, for two reasons. First, given what I am capable of, a sufficiently detailed silhouette made the image load very slowly, and making it load at an acceptable speed required too big a compromise in quality. Second, implementing different “monsters” bogged the program down even further, and I was not able to achieve what I had pictured.

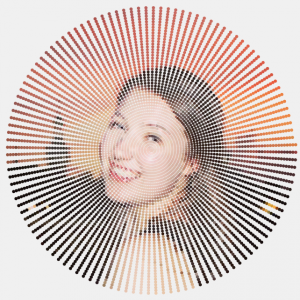

During this exploration, however, I noticed that the falling effect resembles a Snapchat filter to some extent. So I decided to recreate a primitive version of Snapchat’s signature filter, the Snapchat Dog Filter, and add some falling snow, since it’s almost Christmas.

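To play around with it, carefully place one eye on its corresponding crosshair point (where the horizontal line crosses one of the vertical lines), and the filter will come into effect! The sketch checks whether the pixel under either crosshair point is dark, so a dark pupil over either point switches it on. Have fun!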

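```javascript
//Sihan Dong
//sihand@andrew.cmu.edu
//Section B
//FINAL PROJECT: snapchat filter

var myCaptureDevice;        // webcam capture, created in setup()
var darknessThreshold = 10; // flakes fall through pixels brighter than this (0-100) and climb out of darker ones
var outlines = [];          // the falling snowflakes
```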

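```javascript
//update the falling snow: fall through bright areas, climb back out of dark ones
function outlineUpdate() {
  var theColorAtPxPy = myCaptureDevice.get(this.px, this.py);
  if (isColor(theColorAtPxPy)) {
    var theBrightnessOfPxPy = brightness(theColorAtPxPy);
    // over a bright pixel: fall 3 px this frame
    if (theBrightnessOfPxPy > darknessThreshold) {
      this.py = this.py + 3;
    }
    // over a dark pixel: climb in 3 px steps until the flake sits on a bright pixel
    while (this.py > 0 && theBrightnessOfPxPy < darknessThreshold) {
      this.py = this.py - 3;
      var col = myCaptureDevice.get(this.px, this.py);
      if (isColor(col)) {
        theBrightnessOfPxPy = brightness(col);
      }
    }
  }
}

// get(x, y) returns a single pixel as an [r, g, b, a] array
function isColor(c) {
  return Array.isArray(c);
}
```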
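
The update rule is easier to follow without a webcam. The sketch below is a hypothetical stand-alone version of `outlineUpdate`: instead of calling p5's `get()` and `brightness()`, it reads from `column`, an array of made-up brightness values (0 to 100) where `column[y]` stands for the pixel at row `y` under one flake. The name `stepFlake` and the test data are illustrative only; the rule itself (fall 3 px over a bright pixel, climb in 3 px steps while over a dark one) is the one in the code above.

```javascript
// Hypothetical stand-alone version of outlineUpdate(), with no p5 calls.
// column[y] stands in for brightness(myCaptureDevice.get(px, y)), range 0-100.
const STEP = 3; // pixels moved per frame, as in the original

function stepFlake(column, y, threshold) {
  // In this sketch, rows outside the column read as black (brightness 0).
  const brightnessAt = (row) => (row >= 0 && row < column.length ? column[row] : 0);

  let b = brightnessAt(y);
  // Over a bright pixel: fall one step. b is NOT re-read here, so the
  // loop below is skipped on this frame, just like in the original.
  if (b > threshold) {
    y += STEP;
  }
  // Over a dark pixel: climb until a bright pixel is found or the top is reached.
  while (y > 0 && b < threshold) {
    y -= STEP;
    b = brightnessAt(y);
  }
  return y;
}

// A column that is bright above row 15 and dark from row 15 down
// (for example, dark hair underneath the flake).
const column = Array.from({ length: 30 }, (_, row) => (row < 15 ? 80 : 5));
```

Starting from row 12, repeated calls alternate between 15 and 12: the flake steps into the dark region, then climbs straight back out on the next frame. Under this rule a flake hovers on the top edge of a dark shape instead of passing through it.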

```javascript
//draw the falling snow
function outlineRender() {
  var theColorAtPxPy = myCaptureDevice.get(this.px, this.py);
  if (isColor(theColorAtPxPy)) {
    var theBrightnessOfPxPy = brightness(theColorAtPxPy);
    // brighter background = bigger, thicker flake
    stroke(255);
    strokeWeight(theBrightnessOfPxPy / 20);
    //render the flake: horizontal, vertical and two diagonal strokes
    line(this.px - theBrightnessOfPxPy, this.py, this.px + theBrightnessOfPxPy, this.py);
    line(this.px, this.py + theBrightnessOfPxPy, this.px, this.py - theBrightnessOfPxPy);
    line(this.px - theBrightnessOfPxPy / 2, this.py - theBrightnessOfPxPy / 2,
         this.px + theBrightnessOfPxPy / 2, this.py + theBrightnessOfPxPy / 2);
    line(this.px + theBrightnessOfPxPy / 2, this.py - theBrightnessOfPxPy / 2,
         this.px - theBrightnessOfPxPy / 2, this.py + theBrightnessOfPxPy / 2);
  }
}
```
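
Each flake drawn by `outlineRender` is four strokes centred on the flake: a horizontal and a vertical one reaching out by the local brightness `b` on each side, and two diagonals whose ends are offset by `b / 2` on both axes, all with a stroke weight of `b / 20`. The hypothetical helper `flakeGeometry` below computes the same coordinates without drawing anything, which makes the scale easy to check: over a pixel of brightness 100 a flake is 200 px wide with a 5 px stroke, while over a near-black pixel it shrinks to almost nothing.

```javascript
// Hypothetical helper: the segments outlineRender() would draw for a flake
// at (px, py) over a pixel of brightness b (0-100). Each segment is [x1, y1, x2, y2].
function flakeGeometry(px, py, b) {
  const h = b / 2; // diagonal offset on each axis
  return {
    weight: b / 20,
    segments: [
      [px - b, py, px + b, py],         // horizontal
      [px, py + b, px, py - b],         // vertical
      [px - h, py - h, px + h, py + h], // diagonal, top-left to bottom-right
      [px + h, py - h, px - h, py + h], // diagonal, top-right to bottom-left
    ],
  };
}

// Width of a flake = length of its horizontal stroke.
function flakeWidth(b) {
  const [x1, , x2] = flakeGeometry(0, 0, b).segments[0];
  return x2 - x1;
}
```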

```javascript
//start from the top once the falling snow reaches the bottom
function outlineReset() {
  if (this.py > height - 3) {
    this.py = 0;
  }
}

//the object function that makes the falling snow
function makeOutline(inputX, inputY) {
  var outline = {
    px: inputX,
    py: inputY,
    update: outlineUpdate,
    reset: outlineReset,
    render: outlineRender
  };
  return outline;
}
```
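
`makeOutline` is a plain factory: every call builds a new object literal whose `update`, `reset` and `render` properties point at the same three shared functions, and inside those functions `this` is whichever flake the method was called on. The sketch below reproduces that pattern outside p5 with a hypothetical `makeFlake` and a `flakeReset` that mirrors `outlineReset`; the canvas height of 480 is assumed from `createCanvas(640, 480)`. Declaring the object with `var` or `const` inside the factory keeps each call's object local instead of leaking an implicit global.

```javascript
// Hypothetical mini version of makeOutline()/outlineReset(), without p5.
const CANVAS_HEIGHT = 480; // assumed, from createCanvas(640, 480)

// Shared method: `this` is the flake object it was called on.
function flakeReset() {
  if (this.py > CANVAS_HEIGHT - 3) {
    this.py = 0; // wrap back to the top edge
  }
}

// Factory: a fresh object per call, all sharing the same method function.
function makeFlake(inputX, inputY) {
  const flake = { px: inputX, py: inputY, reset: flakeReset };
  return flake;
}

const flakes = [makeFlake(16, 479), makeFlake(48, 100)];
flakes.forEach((f) => f.reset());
```

After the loop, the first flake (which was below row 477) has wrapped to the top while the second is untouched, even though both called the very same function.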

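```javascript
//draw the eye detector: the crosshairs must sit on the points sampled in draw()
function drawGrid() {
  stroke(246, 192, 94);
  strokeWeight(1);
  line(width * 4 / 9, 0, width * 4 / 9, height);  // first sample column
  line(2 * width / 3, 0, 2 * width / 3, height);  // second sample column
  line(0, 2 * height / 7, width, 2 * height / 7); // sample row
}
```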

```javascript
//draw the left ear of the filter
function drawLeft(lx, ly) {
  var n = noise(60); // constant input, so this is the same small offset (under 1 px) every frame
  fill(149, 108, 67);
  noStroke();
  triangle(lx, ly + n, lx - 20, ly - 30 + n,
           lx + 30, ly - 50 + n);
}

//draw the right ear of the filter (mirror image of the left ear)
function drawRight(rx, ry) {
  var n = noise(60);
  fill(149, 108, 67);
  noStroke();
  triangle(rx, ry + n, rx + 20, ry - 30 + n,
           rx - 30, ry - 50 + n);
}

//draw the nose of the filter
function drawMiddle(mx, my) {
  fill(117, 76, 43);
  ellipseMode(CENTER);
  ellipse(mx, my + 10, 90, 60);      // snout
  fill(152, 111, 77);
  ellipse(mx - 20, my + 10, 30, 20); // lighter patches on the snout
  ellipse(mx + 20, my + 10, 30, 20);
  fill(86, 58, 43);
  ellipse(mx, my, 30, 30);           // nose
  fill(39, 19, 12);
  ellipse(mx - 8, my + 3, 10, 10);   // nostrils
  ellipse(mx + 8, my + 3, 10, 10);
  fill(255);
  ellipse(mx + 8, my - 8, 10, 5);    // shine on the nose
}
```
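
The right ear is the left ear reflected horizontally: relative to its anchor point, `drawLeft` uses the vertex offsets (0, 0), (-20, -30) and (30, -50), and `drawRight` uses the same offsets with the x signs flipped. If you wanted to keep the two ears in sync while tweaking the shape, one option (a sketch, not how the original is written) is to store the offsets once and derive the mirrored copy; `LEFT_EAR`, `mirrorX` and `placeAt` are hypothetical names.

```javascript
// Vertex offsets of the left ear relative to its anchor, taken from drawLeft().
const LEFT_EAR = [[0, 0], [-20, -30], [30, -50]];

// Reflect a shape across the vertical axis through its anchor.
function mirrorX(offsets) {
  return offsets.map(([dx, dy]) => [-dx, dy]);
}

// Convert offsets to absolute triangle vertices around the anchor (x, y).
function placeAt(offsets, x, y) {
  return offsets.map(([dx, dy]) => [x + dx, y + dy]);
}

// Right-ear vertices for an anchor at (400, 48).
const rightEar = placeAt(mirrorX(LEFT_EAR), 400, 48);
```

In p5 the three vertices could then be passed to `triangle()` as `triangle(...rightEar.flat())`.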

```javascript
function setup() {
  createCanvas(640, 480);
  myCaptureDevice = createCapture(VIDEO);
  myCaptureDevice.size(640, 480); // attempt to size the camera.
  myCaptureDevice.hide();

  // spread nb flakes along the top edge, one in the middle of each strip,
  // so the last flake does not start at x = width (outside the image)
  var nb = 20;
  for (var i = 0; i < nb; i++) {
    var outlineFormation = makeOutline((i + 0.5) * width / nb, 0);
    outlines.push(outlineFormation);
  }
}
```
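
How the starting x positions of the `nb` flakes are spaced matters at the right edge. Counting 1 to `nb`, as in `(i + 1) * width / nb`, puts the last flake at x = `width` (640), one pixel past the last column of a 640-pixel-wide image (columns 0 to 639). Shifting by half a strip, `(i + 0.5) * width / nb`, centres one flake in each of the `nb` equal strips, so every flake starts inside the picture. The two hypothetical helpers below just list both sets of positions.

```javascript
// Hypothetical helpers listing the starting x of each of nb flakes.

// Counting 1..nb: the last flake lands exactly on x = width.
function edgeXs(nb, width) {
  return Array.from({ length: nb }, (_, i) => ((i + 1) * width) / nb);
}

// Half-strip offset: one flake centred in each of nb equal strips.
function centredXs(nb, width) {
  return Array.from({ length: nb }, (_, i) => ((i + 0.5) * width) / nb);
}
```

For 20 flakes on a 640 px canvas, the first version gives 32, 64, …, 640 and the second gives 16, 48, …, 624.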

```javascript
function draw() {
  background(220);
  myCaptureDevice.loadPixels(); //camera setup
  image(myCaptureDevice, 0, 0);
  noStroke();
  drawGrid();

  // sample the webcam at the two eye-detection points
  var colorOfEye1 = myCaptureDevice.get(width * 4 / 9, 2 * height / 7);
  var colorOfEye2 = myCaptureDevice.get(2 * width / 3, 2 * height / 7);
  var bOfEye1 = brightness(colorOfEye1);
  var bOfEye2 = brightness(colorOfEye2);

  //if either eye is detected at the crosshair (a dark pixel), start the filter
  if (bOfEye1 < 20 || bOfEye2 < 20) {
    push();
    translate(30, 50);
    drawLeft(width / 3, height / 10);
    drawRight(2 * width / 3, height / 10);
    drawMiddle(width / 2, height / 5 + 30);
    pop();

    for (var i = 0; i < outlines.length; i++) {
      var silhouette = outlines[i];
      silhouette.update();
      silhouette.reset();
      silhouette.render();
    }
  }
  // print(bOfEye1); // debugging only: logs the brightness every frame
}
```
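
The trigger in `draw()` reduces to one comparison per sample point. The sketch below restates it in plain JavaScript so it can be tried on hand-picked colours. It assumes p5's default `brightness()`, which reports HSB brightness, i.e. the largest of the R, G and B channels scaled to 0-100; `brightnessOf`, `eyeDetected` and `samplePoints` are hypothetical names. Under that assumption a threshold of 20 means every channel must be below 51 (20% of 255). The sample points are computed from the canvas size, and the crosshair has to be drawn from the same formulas, or lining an eye up with the lines will not put it on the pixel being tested.

```javascript
// Hypothetical, p5-free restatement of the eye check in draw().
// Assumption: brightness() = max(R, G, B) scaled to 0-100 (p5's default HSB brightness).
function brightnessOf([r, g, b]) {
  return (Math.max(r, g, b) / 255) * 100;
}

// The filter turns on when EITHER sample point is darker than the threshold.
function eyeDetected(color1, color2, threshold = 20) {
  return brightnessOf(color1) < threshold || brightnessOf(color2) < threshold;
}

// The two points draw() samples (and where the crosshairs should cross).
function samplePoints(width, height) {
  const y = (2 * height) / 7;
  return [
    { x: (width * 4) / 9, y },
    { x: (2 * width) / 3, y },
  ];
}
```

On the 640 × 480 canvas the points fall at roughly (284.4, 137.1) and (426.7, 137.1).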

![[OLD – FALL 2016] 15-104 • COMPUTING for CREATIVE PRACTICE](../../wp-content/uploads/2020/08/stop-banner.png)