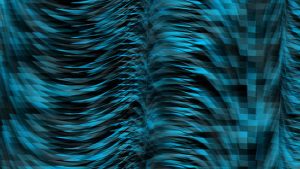

“Frequencies” (2017) is a computational digital fabrication by convivial studio.

In “Frequencies,” a triptych of 3-D, map-like reliefs was carved by CNC machines using the Perlin Noise algorithm at varying frequencies. The reliefs were meant to imitate the rocky and fluid patterns often found on relief maps. According to convivial studio’s description of the project, “The generative application [used to make ‘Frequencies’] allows an infinite number of outcomes,” and so convivial studio sought to show a range of patterns across the triptych.
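To get a feel for how noise frequency might shape a relief, here is a minimal Python sketch. It is not convivial studio’s code: the function names (`perlin`, `relief`), the eight gradient directions, the octave weighting, and every parameter value are my own assumptions for illustration. It builds a small grid of heights from layered gradient noise, where a lower `frequency` should give broad, fluid shapes and a higher one a rougher, rockier surface.

```python
import math
import random


def make_permutation(seed=0):
    """Shuffled table of 0..255, doubled so lookups never run off the end."""
    rng = random.Random(seed)
    table = list(range(256))
    rng.shuffle(table)
    return table + table


def fade(t):
    """Perlin's smoothing curve: eases interpolation near cell corners."""
    return t * t * t * (t * (t * 6 - 15) + 10)


def lerp(a, b, t):
    return a + t * (b - a)


# Eight gradient directions (an assumption; other sets work too).
GRADS = [(1, 0), (-1, 0), (0, 1), (0, -1),
         (0.7071, 0.7071), (-0.7071, 0.7071),
         (0.7071, -0.7071), (-0.7071, -0.7071)]


def perlin(x, y, perm):
    """2D gradient noise; returns 0 at integer grid points."""
    xi, yi = math.floor(x), math.floor(y)
    xf, yf = x - xi, y - yi          # position inside the grid cell
    xi &= 255
    yi &= 255

    def corner(ix, iy, dx, dy):
        # Pick a pseudo-random gradient for this corner and project onto it.
        gx, gy = GRADS[perm[perm[ix] + iy] & 7]
        return gx * dx + gy * dy

    n00 = corner(xi, yi, xf, yf)
    n10 = corner(xi + 1, yi, xf - 1, yf)
    n01 = corner(xi, yi + 1, xf, yf - 1)
    n11 = corner(xi + 1, yi + 1, xf - 1, yf - 1)
    u, v = fade(xf), fade(yf)
    return lerp(lerp(n00, n10, u), lerp(n01, n11, u), v)


def relief(width, height, frequency, octaves=4, seed=0):
    """Grid of heights made by summing noise at doubling frequencies."""
    perm = make_permutation(seed)
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            total, norm, amp, freq = 0.0, 0.0, 1.0, frequency
            for _ in range(octaves):
                total += amp * perlin(i * freq, j * freq, perm)
                norm += amp
                amp *= 0.5       # each finer layer contributes half as much
                freq *= 2.0      # and varies twice as fast
            row.append(total / norm)
        rows.append(row)
    return rows


# Hypothetical settings: one smooth panel and one rough panel.
smooth_panel = relief(16, 16, frequency=0.02, seed=1)
rough_panel = relief(16, 16, frequency=0.37, seed=1)
```

In a real pipeline, a grid like this would presumably be turned into a 3D surface and then into toolpaths for the CNC machine; that step is outside what this sketch covers.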

Here’s a video that details the creation of “Frequencies.”

I was intrigued by “Frequencies” for a number of reasons. For one, I’m in the early stages of a project about map-making (specifically with regard to redistricting), and I was curious to investigate map-making from a very different angle. In addition, I had just read about Perlin Noise in one of the required readings for our class, and while I don’t know if I fully understand it, I am intrigued by it. I also love the combination of huge machines making very delicate-seeming art. I had to look up what a CNC machine is (read about it here), and I am looking forward to going down the rabbit hole of other CNC-machined artworks.

convivial studio used openFrameworks with add-ons including ofxMtlMapping2D, ofxFlowTools, and ofxAutoReloadedShader for the generative 3D and projection software. They used ArtCAM to generate the code needed for the CNC machine. A more detailed description is available here.

As seen in the video, a projection layer is added on top of the reliefs that, according to convivial studio, “aims to challenge the perception of relief.” Personally, I found myself more interested in the reliefs themselves and the means by which they were made, but the projections do make for some striking images.

convivial studio, based in London, describes itself as working “at the intersection of art, design and technology. Merging the digital with the physical, convivial creates emotionally engaging experiences with an element of wonder.” I certainly felt wonder watching the creation of “Frequencies.” I hope you do, too!

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](../../wp-content/uploads/2020/08/stop-banner.png)