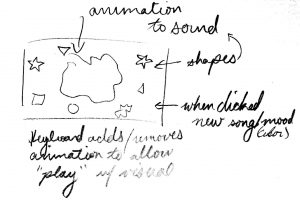

I was very inspired by the ideas behind Real Slow, a project that visualizes music, and AV Clash, a project for building audiovisual compositions out of sounds and audio-reactive animations. Throughout the course, my favorite parts were the assignments where we had to incorporate the camera and the ones where we were allowed to use music, so I decided to look for inspirations that touch on both topics. Both projects have very interesting visuals that react to sound and music.

Real Slow uses face detection so that the components of the face react to the music and its volume, which I found fascinating. This interactive project was originally inspired by the music experience for an Australian indie-electronic band, “Miami Horror”. The artist wanted to create a program to fit the mood and tone of their electro track “Real Slow”. As I researched, I found out that the first prototype was developed in openFrameworks, and the idea was then implemented on the web using two JavaScript libraries: clmtrackr for face tracking and p5.js for the sound visualization.

For AV Clash, I thought it was interesting how the objects interact with each other in response to the sounds. The project allows the creation of audiovisual compositions, consisting of combinations of sound and audio-reactive animation loops. Objects can be dragged and thrown, creating interactions (clashes) between them. In both projects, the program interacts not only with the music but also with the audience, whether through face detection or through mouse input.

Caption: Video documentation of Real Slow (2015), a face sound visualization with music, by Nithi Prasanpanich

http://prasanpanich.com/2016/01/01/real-slow/

Caption: Video representation of AV Clash (2010), a project by Video Jack that creates audiovisual compositions from combinations of sound and audio-reactive animation loops

https://www.creativeapplications.net/flash/av-clash-flash-webapp-sound/
