My final project uses the webcam to generate simple animations that follow the user's motions and gestures by tracking a red marker. For the best experience, make sure only one red object is in front of the camera at a time.

Instructions:

- grab something red (any relatively small red object would work best, or just pull up a red image on your phone)
- hold it within range of the webcam, with the red facing the camera
- wave/draw/play

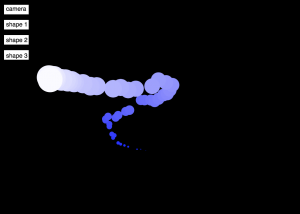

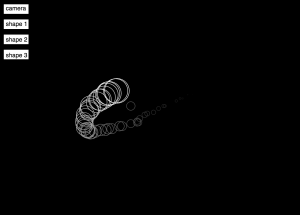

*to see the animations alone, press the camera button to turn the camera image on or off

press the shape 1, shape 2, and shape 3 buttons to switch between the different shapes

works best in a brightly lit room, so the computer can recognize the red object
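The tracking idea described above can be sketched outside of p5.js as follows. This is a minimal illustration, not the project's exact code: the helper names `colorDistance` and `findMarkerCenter` and the `samples` input format are assumptions made for the example, while the target color (255, 0, 0) and the threshold of 120 are the values used in the sketch below.

```javascript
// Hypothetical helpers for illustration; not part of the original sketch.

// Euclidean distance between two [r, g, b] colors.
function colorDistance(c, target) {
  var dr = c[0] - target[0];
  var dg = c[1] - target[1];
  var db = c[2] - target[2];
  return Math.sqrt(dr * dr + dg * dg + db * db);
}

// Given sampled pixels ({x, y, color}), return the midpoint of the bounding
// box around every sample close enough to the target color, or null if no
// sample matches.
function findMarkerCenter(samples, target, threshold) {
  var minX = Infinity, maxX = -Infinity;
  var minY = Infinity, maxY = -Infinity;
  for (var k = 0; k < samples.length; k++) {
    var s = samples[k];
    if (colorDistance(s.color, target) < threshold) {
      if (s.x < minX) { minX = s.x; }
      if (s.x > maxX) { maxX = s.x; }
      if (s.y < minY) { minY = s.y; }
      if (s.y > maxY) { maxY = s.y; }
    }
  }
  if (maxX === -Infinity) {
    return null; // nothing red enough was found
  }
  return { x: (minX + maxX) / 2, y: (minY + maxY) / 2 };
}

// Two reddish samples and one blue sample; only the reddish ones count.
var center = findMarkerCenter(
  [
    { x: 10, y: 20, color: [250, 10, 10] },
    { x: 20, y: 30, color: [200, 40, 40] },
    { x: 300, y: 300, color: [0, 0, 255] }
  ],
  [255, 0, 0],
  120
);
console.log(center); // { x: 15, y: 25 }
```

Because the center is taken from the bounding box of all matching pixels, a second red object in view would stretch the box and pull the center away from the marker, which is likely why a single red object works best.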

// Sophie Chen

// sophiec@andrew.cmu.edu

// 15-104 Final Project

// Dec 2018

// This program uses the webcam and generates a simple animation that will

// follow a red color marker. For best user experience, make sure to only have

// one red object in front of the camera at a time.

// declare variables

var myCaptureDevice;

var xarray = []; // array to store the value of x positions

var yarray = []; // array to store the value of y positions

var limitSize = 50; // length limit of x and y arrays

var cameraOn = 1; // camera switch - default on

var shapeOne = 1; // default starts with shape one

var shapeTwo = -1; // shape two starts false

var shapeThree = -1; // shape three starts false

var turtle; // define turtle graphics variable

// setup loads the webcam input and initializes turtle graphics

function setup() {

createCanvas(600, 430);

myCaptureDevice = createCapture(VIDEO);

myCaptureDevice.size(600, 430);

myCaptureDevice.hide();

turtle = makeTurtle(0, 0);

turtle.penDown();

turtle.setColor(255);

}

// returns true when c is an array, the form in which get(x, y) returns a pixel's color values

function isColor(c) {

return (c instanceof Array);

}

// this function draws the animations on top of the camera image

function draw() {

myCaptureDevice.loadPixels();

// if cameraOn is true, load camera output

// if cameraOn is false, camera output is not visible, load black background

if (cameraOn === 1){

image(myCaptureDevice, 0, 0);

} else {

background(0);

}

//call functions that draw the buttons

drawCamButton();

drawShapeButton();

drawShapeTwoButton();

drawShapeThreeButton();

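//declare variables used to calculate centerpoint of red object/marker

// note: despite the names, xMin/yMin end up holding the LARGEST x/y of the

// red pixels and xMax/yMax the SMALLEST; the midpoint used below is unaffected

var xMin = 0; // largest red x found so far (starts at the left edge)

var xMax = 600; // smallest red x found so far (starts at the right edge)

var yMin = 0; // largest red y found so far (starts at the top edge)

var yMax = 430; // smallest red y found so far (starts at the bottom edge)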

// for loop that draws the shape animations

for (var a = 0; a < xarray.length; a++){

// declare color and size variables based on forloop

var size = (50 / xarray.length) * a;

var r = map(a, 0, xarray.length, 0, 255);

var g = map(a, 0, xarray.length, 0, 255);

var b = map(a, 0, xarray.length, 0, 255);

// Shape 1: filled ellipse

if (shapeOne === 1){

shapeTwo = -1;

shapeThree = -1;

noStroke();

fill(r, g, 255);

ellipse(xarray[a], yarray[a], size, size);

}

// Shape 2: outlined ellipse

if (shapeTwo === 1) {

shapeOne = -1;

shapeThree = -1;

noFill();

stroke(r, g, b);

strokeWeight(1);

ellipse(xarray[a], yarray[a], size, size);

}

// Shape 3: turtle graphics

if (shapeThree === 1) {

shapeOne = -1;

shapeTwo = -1;

turtle.setColor(color(205, 255, 10));

turtle.goto(xarray[a], yarray[a]);

turtle.forward(25);

turtle.right(90);

}

}

// sample the color of every 5th pixel (in x and y) of the webcam output

for (var i = 0; i < width; i += 5){

for (var j = 0; j < height; j+= 5) {

var currentColor = myCaptureDevice.get(i, j);

// targetColor: color(255, 0, 0);

// calculate the difference between current color and target color

var dr = red(currentColor) - 255;

var dg = green(currentColor) - 0;

var db = blue(currentColor) - 0;

// if current color is close enough to target color (distance under 120),

// use this pixel to update the extents of the red area

if (isColor(currentColor)){

var dist = sqrt(sq(dr) + sq(dg) + sq(db));

if (dist < 120) {

// find center point of red marker

if (i > xMin){

xMin = i;

}

if (i < xMax){

xMax = i;

}

if (j > yMin){

yMin = j;

}

if (j < yMax){

yMax = j;

}

}

}

}

}

// push the newly discovered x, y into the array

xarray.push((xMin + xMax) / 2);

yarray.push((yMin + yMax) / 2);

// if the arrays are over the limit, remove the oldest point from the front

while (xarray.length > limitSize) {

xarray.shift();

yarray.shift();

}

}

// functions to trigger responses of buttons pressed

function mouseClicked(){

// if camera button is pressed, toggle on/off

if (mouseX > 10 && mouseX < 60 && mouseY > 10 && mouseY < 30){

cameraOn = -cameraOn;

}

// if shape 1 button is pressed, show shape 1, disable shape 2 & 3

if (mouseX > 10 && mouseX < 60 && mouseY > 40 && mouseY < 60){

shapeOne = 1;

shapeTwo = -1;

shapeThree = -1;

}

// if shape 2 button is pressed, show shape 2, disable shape 1 & 3

if (mouseX > 10 && mouseX < 60 && mouseY > 60 && mouseY < 90){

shapeTwo = 1;

shapeOne = -1;

shapeThree = -1;

}

// if shape 3 button is pressed, show shape 3, disable shape 1 & 2

if (mouseX > 10 && mouseX < 60 && mouseY > 90 && mouseY < 120){

shapeThree = 1;

shapeTwo = -1;

shapeOne = -1;

}

}

// camera button

function drawCamButton(){

fill(255);

stroke(0);

rect(10, 10, 50, 20);

noStroke();

fill(0);

text('camera', 15, 23);

}

// shape 1 button

function drawShapeButton(){

fill(255);

stroke(0);

rect(10, 40, 50, 20);

noStroke();

fill(0);

text('shape 1', 15, 54);

}

// shape 2 button

function drawShapeTwoButton(){

fill(255);

stroke(0);

rect(10, 70, 50, 20);

noStroke();

fill(0);

text('shape 2', 15, 85);

}

// shape 3 button

function drawShapeThreeButton(){

fill(255);

stroke(0);

rect(10, 100, 50, 20);

noStroke();

fill(0);

text('shape 3', 15, 116);

}

//////////////////////////////////////////////////////////////////////////////

function turtleLeft(d){this.angle-=d;}function turtleRight(d){this.angle+=d;}

function turtleForward(p){var rad=radians(this.angle);var newx=this.x+cos(rad)*p;

var newy=this.y+sin(rad)*p;this.goto(newx,newy);}function turtleBack(p){

this.forward(-p);}function turtlePenDown(){this.penIsDown=true;}

function turtlePenUp(){this.penIsDown = false;}function turtleGoTo(x,y){

if(this.penIsDown){stroke(this.color);strokeWeight(this.weight);

line(this.x,this.y,x,y);}this.x = x;this.y = y;}function turtleDistTo(x,y){

return sqrt(sq(this.x-x)+sq(this.y-y));}function turtleAngleTo(x,y){

var absAngle=degrees(atan2(y-this.y,x-this.x));

var angle=((absAngle-this.angle)+360)%360.0;return angle;}

function turtleTurnToward(x,y,d){var angle = this.angleTo(x,y);if(angle< 180){

this.angle+=d;}else{this.angle-=d;}}function turtleSetColor(c){this.color=c;}

function turtleSetWeight(w){this.weight=w;}function turtleFace(angle){

this.angle = angle;}function makeTurtle(tx,ty){var turtle={x:tx,y:ty,

angle:0.0,penIsDown:true,color:color(128),weight:1,left:turtleLeft,

right:turtleRight,forward:turtleForward, back:turtleBack,penDown:turtlePenDown,

penUp:turtlePenUp,goto:turtleGoTo, angleto:turtleAngleTo,

turnToward:turtleTurnToward,distanceTo:turtleDistTo, angleTo:turtleAngleTo,

setColor:turtleSetColor, setWeight:turtleSetWeight,face:turtleFace};

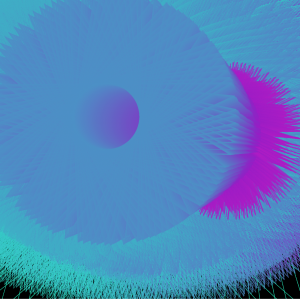

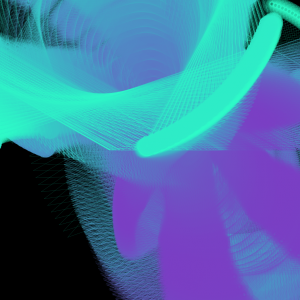

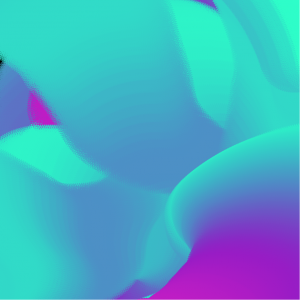

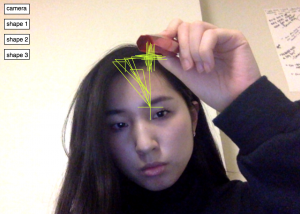

return turtle;}I really enjoyed the camera interaction aspect of the text rain assignment, which is why I wanted to work more with live cam for this project and create something where the user has even more direct control. Overall this project was a lot more challenging than I expected, but that also made it more rewarding when I finally got it to work. I’m glad I went with color recognition for the marker because it allows for precise control and is more forgiving towards the user in terms of what environment they should be in when using this program. The most time-consuming and unexpected challenge was the lagging and freezing that comes with working with so many pixels, so trying to figure out what was causing the freezing took a lot longer than changing the code to fix it. Since the animations are on the simple side, I decided to include 3 different options. Ideally the animations would’ve been more complex, that’s something I hope to keep working on in the future. Below are screenshots from me using the program to give an idea of how it would look like when it’s working.
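To give a rough sense of the pixel workload behind the lag mentioned in the reflection, the sketch below counts how many pixels a nested loop visits on a 600 x 430 frame at different step sizes. The function name `countSamples` is made up for this example, and this only illustrates one likely contributor to the slowdown (the number of pixels read per frame), not a confirmed diagnosis of what caused the freezing.

```javascript
// Hypothetical helper for illustration: counts the pixels visited by a
// nested loop that advances `step` pixels at a time in both directions.
function countSamples(width, height, step) {
  var count = 0;
  for (var i = 0; i < width; i += step) {
    for (var j = 0; j < height; j += step) {
      count++;
    }
  }
  return count;
}

var everyPixel = countSamples(600, 430, 1); // 258000 reads per frame
var everyFifth = countSamples(600, 430, 5); // 10320 reads per frame
console.log(everyPixel / everyFifth);       // 25
```

Stepping by 5 in both directions cuts the per-frame reads by a factor of 25, at the cost of possibly missing a marker smaller than the step size.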
