/* Ammar Hassonjee

ahassonj@andrew.cmu.edu

Section C

Project 09

*/

var underlyingImage;

var theColorAtLocationXY;

// Variable for adjusting the frame rate

var frames = 100;

function preload() {

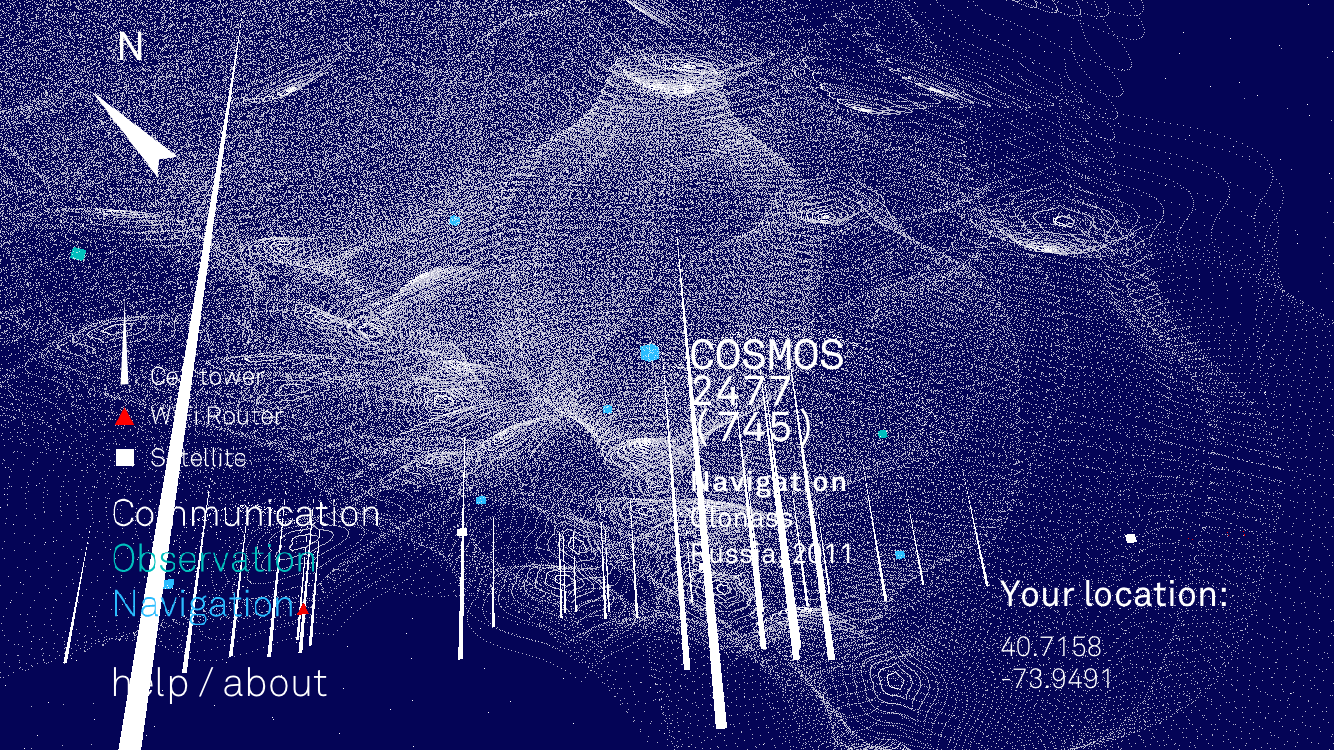

var myImageURL = "https://i.imgur.com/o4lm5zF.jpg";

underlyingImage = loadImage(myImageURL);

}

function setup() {

createCanvas(480, 480);

background(0);

underlyingImage.loadPixels();

}

function draw() {

// Changing the frame rate each time draw is called

frameRate(frames);

// Initial variables declared to return pixel from image at random location

var px = random(width);

var py = random(height);

var ix = constrain(floor(px), 0, width-1);

var iy = constrain(floor(py), 0, height-1);

// Getting the color from the specific pixel

theColorAtLocationXY = underlyingImage.get(ix, iy);

// Varying the triangle size and rotation for each frame based on

// the pixel distance from the mouse

var triangleSize = map(dist(px, py, mouseX, mouseY), 0, 480 * sqrt(2), 1, 10);

var triangleRotate = map(dist(px, py, mouseX, mouseY), 0, 480 * sqrt(2), 0, 180);

noStroke();

fill(theColorAtLocationXY);

// rotating the triangle at each pixel

push();

translate(px, py);

rotate(radians(triangleRotate));

triangle(0, 0, triangleSize * 2, 0, triangleSize, triangleSize);

pop();

}

function keyPressed() {

// halving the frame rate each time a key is pressed (clamped to the range 1 to 10)

frames = constrain(int(frames * .5), 1, 10);

}

function mousePressed() {

// increasing the frame rate each time the mouse is pressed

frames = constrain(int(frames * 2), 1, 1000);

}

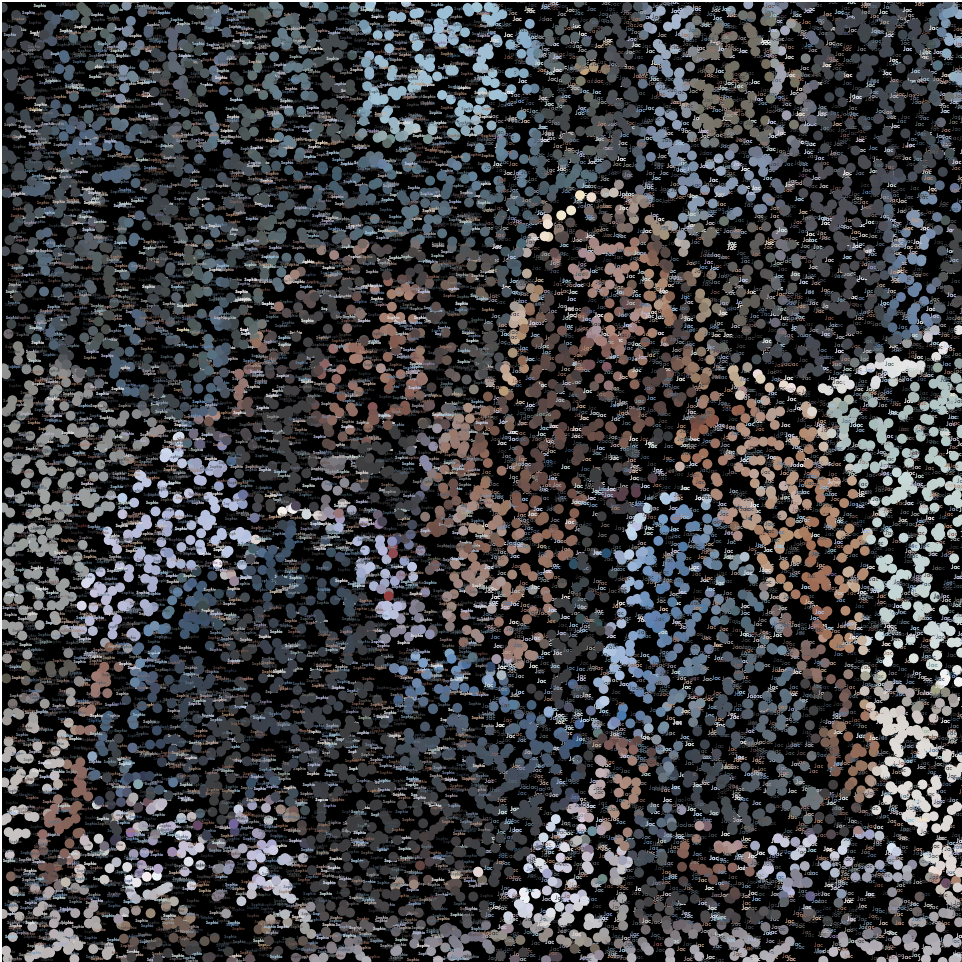

I was inspired to make an interactive self-portrait based on my favorite shape, the triangle! Each triangle's size and rotation depend on its distance from the mouse, so users can make my portrait more realistic or more distorted depending on where they keep the mouse. I think the combinations of rotation and triangle size create really interesting renditions of my selfie.
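To make the distance-to-size relationship concrete, here is a minimal sketch in plain JavaScript, outside of p5.js. The helpers `mapRange` and `triangleParams` are hypothetical names introduced only for illustration; `mapRange` is assumed to behave like an unclamped linear re-mapping, and the 480-pixel canvas size is taken from the sketch above.

```javascript
// Hypothetical helper: linear re-mapping of a value from one range to another.
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Hypothetical helper: size and rotation (in degrees) for a triangle drawn
// at (px, py), given the mouse position. Assumes a square canvas.
function triangleParams(px, py, mouseX, mouseY, canvasSize = 480) {
  // The canvas diagonal is the largest distance between two points on it.
  const maxDist = canvasSize * Math.SQRT2;
  const d = Math.hypot(px - mouseX, py - mouseY);
  return {
    size: mapRange(d, 0, maxDist, 1, 10),
    angleDegrees: mapRange(d, 0, maxDist, 0, 180),
  };
}

// A triangle under the mouse is small and unrotated; one at the opposite
// corner is roughly ten times larger and rotated by about half a turn.
const near = triangleParams(100, 100, 100, 100);
const far = triangleParams(0, 0, 480, 480);
console.log(near, far);
```

Small triangles near the mouse resolve fine detail, which is why that region of the portrait looks more realistic, while the larger, more rotated triangles farther away produce the distorted look.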
