While looking through the reflections of several other students, I found a post that Julia Nishizaki wrote for the Week 6 Looking Outwards on randomness. The series of images she included is what made me want to learn more about this project.

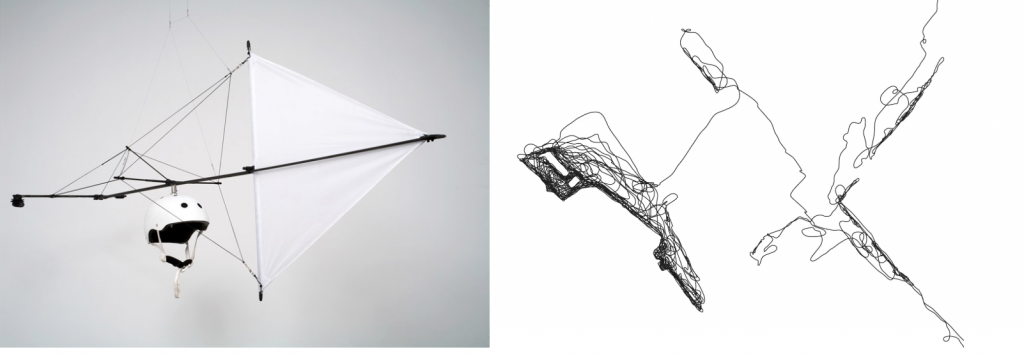

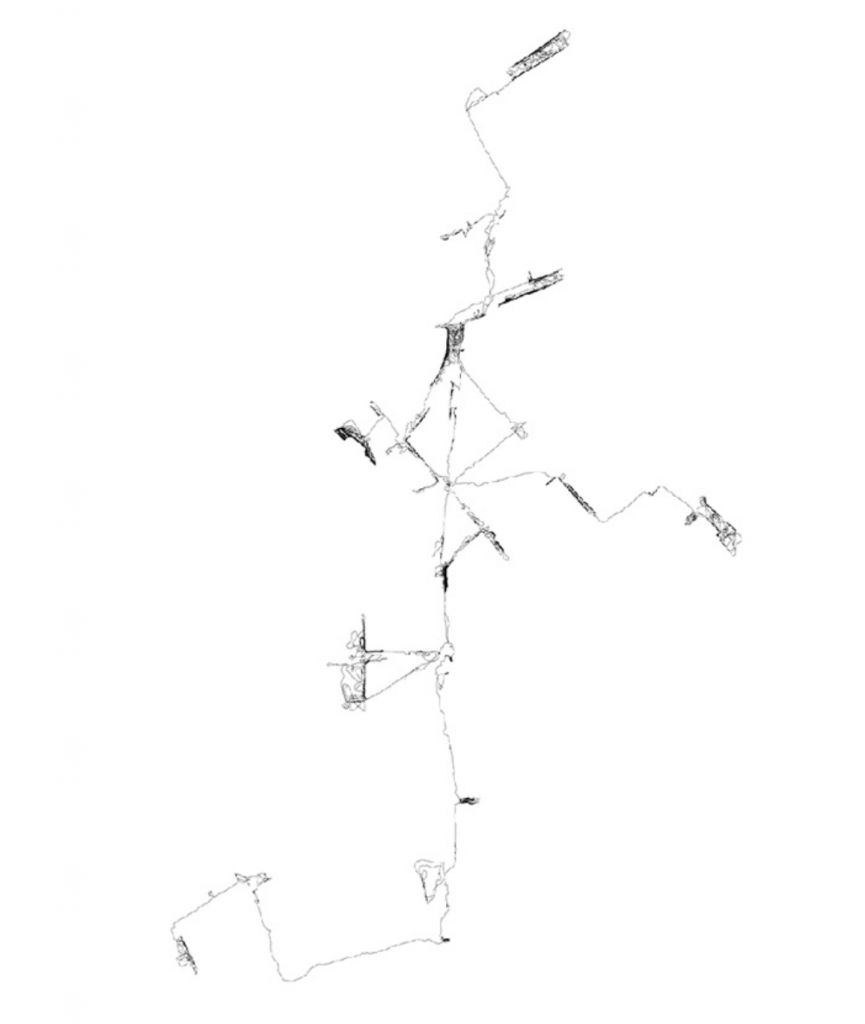

The project is called Windwalks, created by British artist Tim Knowles. Much of his work focuses on movement, creating images from the motion of forms and objects that he cannot control. This project uses the movement of the wind to create line drawings. Participants wear helmets with large arrows affixed to them. As a participant moves, the wind pushes the arrow, and the direction of the arrow determines where they move next. Knowles collects data from the helmet about the path the wearer took and uses it to create drawings.

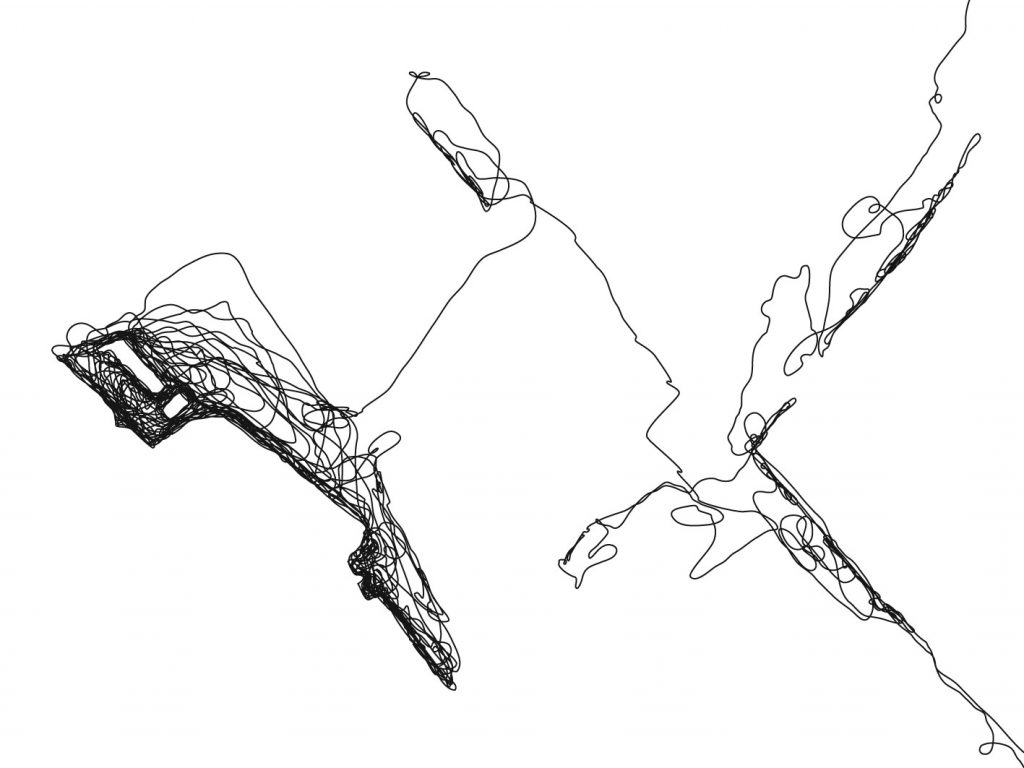

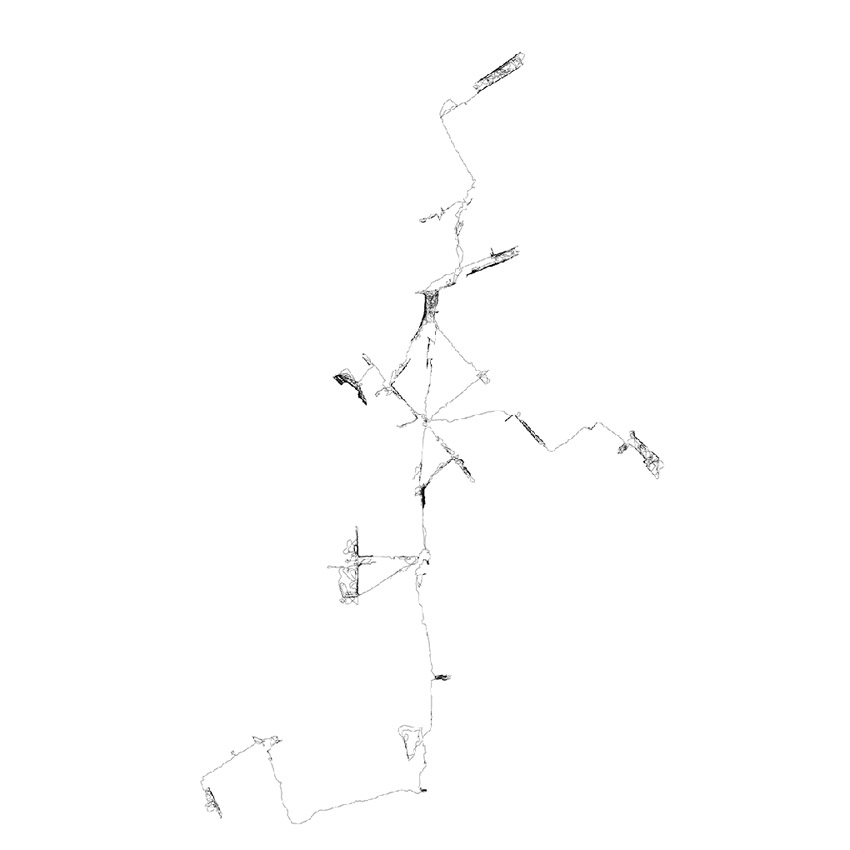

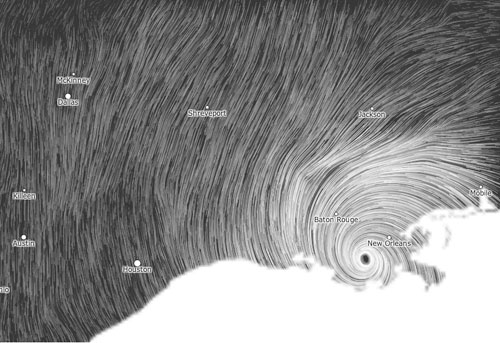

Map of Movement


I appreciate that Julia mentions that the project is not directly related to computation, but it definitely has to do with random image generation. The path that the wind creates cannot be predicted. But it is not just the wind that is random. The wind informs the random movement of the wearers, which in turn produces a randomly generated line drawing. I find the different layers of this project to be super intriguing. The other element that I really enjoy about the project is that we often don’t think about the wind’s effect on our daily movement (except when it’s super strong…then people notice). Generally, the wind doesn’t determine our pathways through an environment, so it is interesting to see it be the controlling force in this instance. I also really appreciate the line drawings. You can see the map-like qualities in them, but they are also simple line forms that draw attention.

One of Julia’s points that I found very interesting was that the line drawings suggest the environment the walks take place in. The buildings and other structures shape the movement of the wind and therefore also shape the map that is translated into the drawings.

Overall, I found this project super intriguing, and I’m glad I was able to find something really interesting by looking through someone else’s reflections. I’m really interested in learning more about this artist and his other work.


